Facebook is a failed experiment

It's time to admit the platform is unfixable

Only a few years ago, the claim that Facebook was bad for society sounded like baseless techno-panic. How times have changed.

In the Trump era, Facebook's vulnerabilities should be clear to all. Just this week, the company revealed that the platform was being misused by accounts based in Iran that were peddling propaganda. Perhaps worse still, a New York Times article suggested that Facebook helps lure people into virulent, even violent xenophobia. Couple this recent news with the obvious influence of Facebook on elections, its negative effect on mental health, and privacy concerns, and a plain truth emerges: Facebook causes as much harm as good.

Facebook is, of course, aware of this criticism. CEO Mark Zuckerberg has been on a campaign ever since the election to try to mollify his increasingly vociferous critics. The company has hired far more content moderators, has tweaked its algorithm and approach to trust and verification, and is now also in the process of assigning users a trust rating. Facebook is doing everything it can to fix itself and the perception that it's a burden on the world.

But perhaps all of that effort is in vain. Facebook's problems are endemic to the platform. Its scale and structure are themselves the source of the company's troubles. And far from being a few small changes away from improving itself, Facebook's problems may simply not have any good solutions. What if Facebook is just a mistake?

That might sound like an extreme position, but the New York Times report, in particular, was quite damning. It pointed to an academic paper that analyzed Facebook use and anti-refugee crime and found a correlation between the two. The key finding: "Wherever per-person Facebook use rose to one standard deviation above the national average, attacks on refugees increased by about 50 percent."

There has been some controversy over whether the conclusions of the paper have been overstated, that is, whether the case of anti-refugee crime in Germany can be more broadly generalized. But the fact that a relationship exists at all is enough to make us question how and why the very existence of Facebook can fan the flames of prejudice.

The reasons behind the connection are in part a function of Facebook's scale and popularity. Because so many people are on Facebook, it allows communities and groups to coalesce around ideas. In Germany, anti-refugee groups have emerged in response to rising xenophobia, and they are filled with articles, memes, and discussion amongst those who oppose allowing in refugees. Such ideas have existed for decades. But it is precisely Facebook's capacity to aggregate users and then present them with a concentrated feed on a topic that allows fringe ideas to gather both momentum and an audience.

The Times article also suggests that "superposters" play a key role in this process. That term refers to very active users who become akin to community leaders, influencing others by shifting norms so that once-distasteful ideas become accepted. And while Facebook has clamped down on explicit hate speech, the study in question suggests that won't help. It states that "experts believe that much of the link to violence doesn't come through overt hate speech, but rather through subtler and more pervasive ways that the platform distorts users' picture of reality and social norms."

So, ironically, it is precisely Facebook's capacity to build communities that allows it to become a toxic space that exerts a downward pressure on community standards.

Yet if part of that equation is Facebook's scale — i.e. how it works like a virtual town square to gather mobs around fraught topics — the other part is Facebook's very structure. Because of the way in which its algorithm was built to prioritize content that generates the most attention and likes, it induces behavior and posts that tend toward the extreme. Then, because that same algorithm prioritizes the stuff that catches the most eyeballs, it pushes that content in front of more people, creating a vicious cycle.

Up until this point, the demands made of Facebook have almost universally been ones to simply improve the service. There have been suggestions that the company should have a supreme court for content moderation, or that it should behave like a media company and exercise editorial control. There have also been calls for regulation.

But the combination of Facebook's scale and structure has resulted in a machine that cultivates our basest instincts and tendencies: our predilection to follow group norms, to seek out what confirms our pre-existing views, our rush to judge or react through instinct, and our fear of the unknown. As such, far from being an online service in need of some tweaks, or a platform that will eventually get better, perhaps it makes more sense to think of Facebook as a historical anomaly, a kind of huge experiment gone wrong.

It is not that the stated goals of Facebook are in and of themselves bad. A global community is an appealing concept. But reality has shown that Facebook is incompatible with history. Instead of fostering connection, it sows division; rather than increasing understanding, Facebook cultivates and spreads falsehoods and mistrust. And rather than endlessly trying to fix it or make it better, maybe it's time to admit that Facebook was simply a part of the digital revolution that now needs to be discarded.

Facebook needs to be recognized for what it was: a mistake.

Navneet Alang is a technology and culture writer based out of Toronto. His work has appeared in The Atlantic, New Republic, Globe and Mail, and Hazlitt.

-

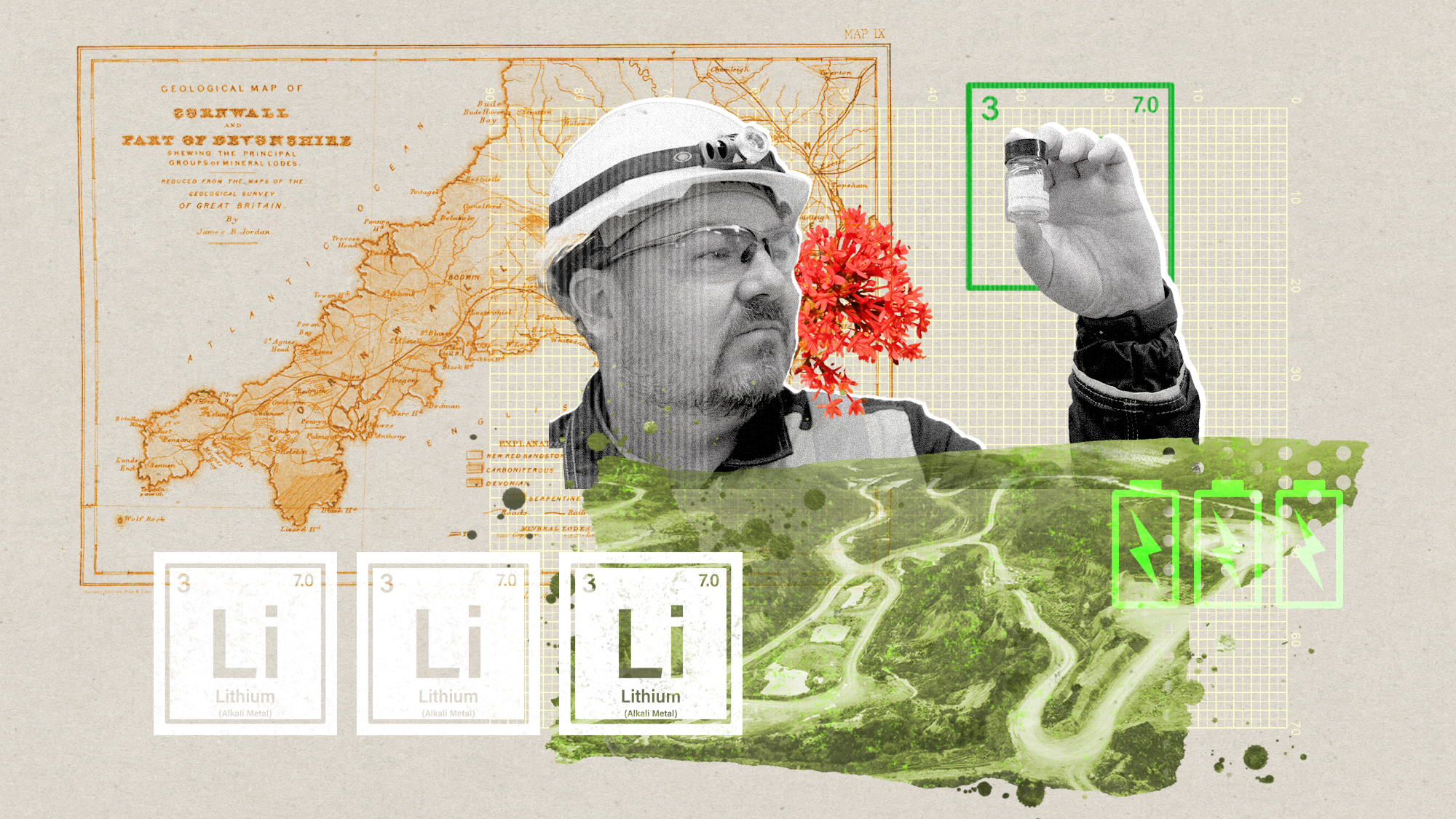

The ‘ravenous’ demand for Cornish minerals

The ‘ravenous’ demand for Cornish mineralsUnder the Radar Growing need for critical minerals to power tech has intensified ‘appetite’ for lithium, which could be a ‘huge boon’ for local economy

-

Why are election experts taking Trump’s midterm threats seriously?

Why are election experts taking Trump’s midterm threats seriously?IN THE SPOTLIGHT As the president muses about polling place deployments and a centralized electoral system aimed at one-party control, lawmakers are taking this administration at its word

-

‘Restaurateurs have become millionaires’

‘Restaurateurs have become millionaires’Instant Opinion Opinion, comment and editorials of the day