Robot 'pals' are invading social media — and it's time to unfriend them

Think Her, except Scarlett Johansson just wants you to buy Viagra

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com


Wait! Before you accept the latest friend request on Facebook, are you sure your new friend is a human?

Increasingly, social media are being populated by socialbots, automated profiles that can friend, tweet, like, and comment. These socialbots are built to appear to be human. That means that, unlike 'bots such as Siri or Cleverbot, which sound human but are obviously not, socialbots are built to fool people in a contemporary social media Turing Test.

Two famous examples of such 'bots are James M. Titus, who won the first socialbot competition in 2011 by gaining many followers on Twitter, and Carina Santos, a fake Brazilian reporter who reportedly enjoyed as much influence as Oprah. Both of these socialbots appeared to their followers to be flesh and blood rather than JavaScript and algorithms. Just take a look at these fascinating human-bot conversation transcripts from the Institute for the Future to see how effective they were.


These bots are possible because we supply massive amounts of digitized statements to social media. There's a huge database, built from encoded tweets and likes, that makes users' states of mind available for analysis. These states of mind can be encoded into an artificial social media profile that appears to be human, asking and answering questions and gaining followers.

To be sure, these 'bots tell us something about the state of software engineering within social media. No doubt their makers are skilled programmers, able to anticipate many social situations and build 'bots that can adjust to trends on Twitter and Facebook.

But more importantly, socialbots tell us something about ourselves. They reflect us, at least the particular images of us that appear within social media: We are liking and tweeting machines. This makes us a ripe target for "weak artificial intelligence."

What I mean by this is that socialbots are built to work within highly constrained environments where only a limited number of tasks need to be done. This is so-called "weak" AI. Examples of this form of AI include video game AI (think of how Elites hide behind rocks in Halo); Sgt. Star, the U.S. Army recruiting chatbot; Siri on the iPhone and Cortana on Windows Phone; and Google's self-driving cars.


"Weak" AI has been incredibly successful, in contrast to what Jerry Kaplan calls "science fiction AI," a form in which machines have self-consciousness and the sort of intelligence we humans have. Science fiction AI, or "strong" AI, may never happen, but weak AI is everywhere these days.

If socialbots are not "strong" AI (capable of conscious reaction to new and unexpected situations), if they are only possible because they work in highly constrained environments, we should be concerned about the state of social media. If we find our online humanity reduced to acts such as liking products, retweeting the latest news stories, or falling in love with "hot" profiles, and if this is so easily replicated by socialbots, we haven't set the bar very high for humanity. Whose intelligence is weak?

I'm not above all this myself; I'm pretty certain I've been fooled by 'bots on Twitter a few times. And there's no denying social media use is quite complex, even if it can be imitated by 'bots. For example, it's hard to imagine 'bots that can handle the rich complexities of irony and sarcasm.

So let me point to the dangers of socialbots in another way. Maybe robots will be able to drive our cars or sort our socks, but I am not convinced that they should be our friends, at least not while those who build them imagine ways that our artificial friends can manipulate us into buying useless things, divulging our desires, or toeing a political line. As I argue in my book, behind socialbots stands a massive, powerful network, one we've been hearing a lot about lately: the network of surveillance, composed of both global corporations that buy and sell our attention and governments that demand our obedience.

Marketers, who are on one side of this surveillance network, could easily use socialbots to drive attention to brands; if you want your brand to appear to be viral, why not buy a thousand retweets? Or, if you want to learn more about your target customers, why not send an automatic envoy to befriend them and gather data on them? Similarly, as has been well-reported, politicians are using these 'bots to create the illusion of massive, grassroots support for their campaigns.

On the other side of the surveillance coin, governments are already using bots to quell dissent. A message repeated by a network of socialbots on Twitter (say, "I think the Leader is doing a fine job") could subtly alter online debate about government policies and actions.

In the end, I can think of ways in which robots can be among us — indeed, they are in many areas of our lives — but given the combination of the surveillance state and crafty robots who pretend to be human, I can only come to one conclusion: Ban the socialbots.

A socialbot ban would likely be best enforced by the Federal Trade Commission, the agency tasked with regulating advertising. Imagine a Facebook profile that presents itself as an empathetic friend, someone to act as a sounding board for your deepest anxieties about your health, but is really a bot run by the advertising firm BlueKai on behalf of the pharmaceutical company Pfizer. This wouldn't just be false advertising; it would be deplorable manipulation, a well-programmed deceit.

A ban could also be implemented by the Federal Communications Commission as part of its regulation of political advertising, since socialbots could be used to create a false, and untraceable, sense of popular support for any politician willing to shell out the money for a socialbot network. Any artificial intelligence agents ought to be openly presented as robots paid for by the corporations and politicians who deploy them, not as false, friendly profiles built to insinuate themselves into our social networks.

Of course, this won't do much to stop federal agencies such as the FBI from deploying socialbots in a kind of COINTELPRO for our times. Activists, be warned — the next informant to infiltrate your group could be a robot. Dealing with such state-based surveillance will take more than policies from the FTC or FCC; it will take a much-needed, concerted political backlash against our surveillance state.

When it comes to life with robots, openness should be valued. If we want to divulge our innermost secrets to a robot — and if we know the other to be a robot — that's fine. But for a program to pass itself off as human to befriend and manipulate us is disconcerting, to say the least.

If you're a bot, you should announce it to the world, or face deletion. Out, damned socialbots.

Robert W. Gehl is an assistant professor of communication at the University of Utah. His research is on the culture of technology. His book, Reverse Engineering Social Media (2014, Temple University Press), is a critical exploration of the history of social media software and culture.

-

What to know before filing your own taxes for the first time

What to know before filing your own taxes for the first timethe explainer Tackle this financial milestone with confidence

-

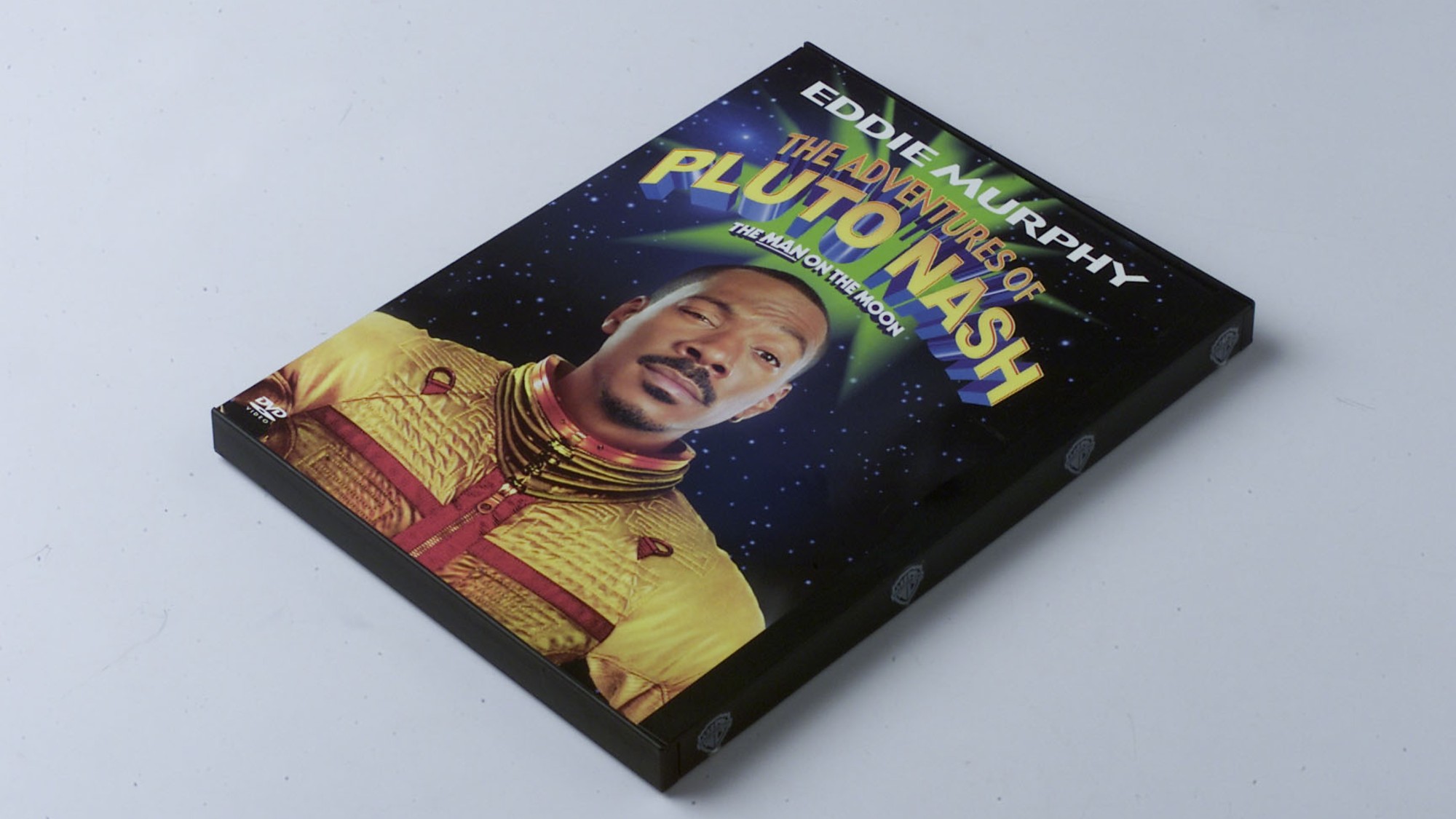

The biggest box office flops of the 21st century

The biggest box office flops of the 21st centuryin depth Unnecessary remakes and turgid, expensive CGI-fests highlight this list of these most notorious box-office losers

-

The 10 most infamous abductions in modern history

The 10 most infamous abductions in modern historyin depth The taking of Savannah Guthrie’s mother, Nancy, is the latest in a long string of high-profile kidnappings