The biggest danger of robots isn't about them at all. It's about us.

How will humans react to a robot labor force?

The drumbeat gets louder with every recession and every technological breakthrough. Labor force participation has been on a slow decline punctuated by plunges around recessions. Wages have stagnated for over a generation. The technology companies that are on the leading edge of change are creating fantastic wealth, but that wealth trickles down less and less.

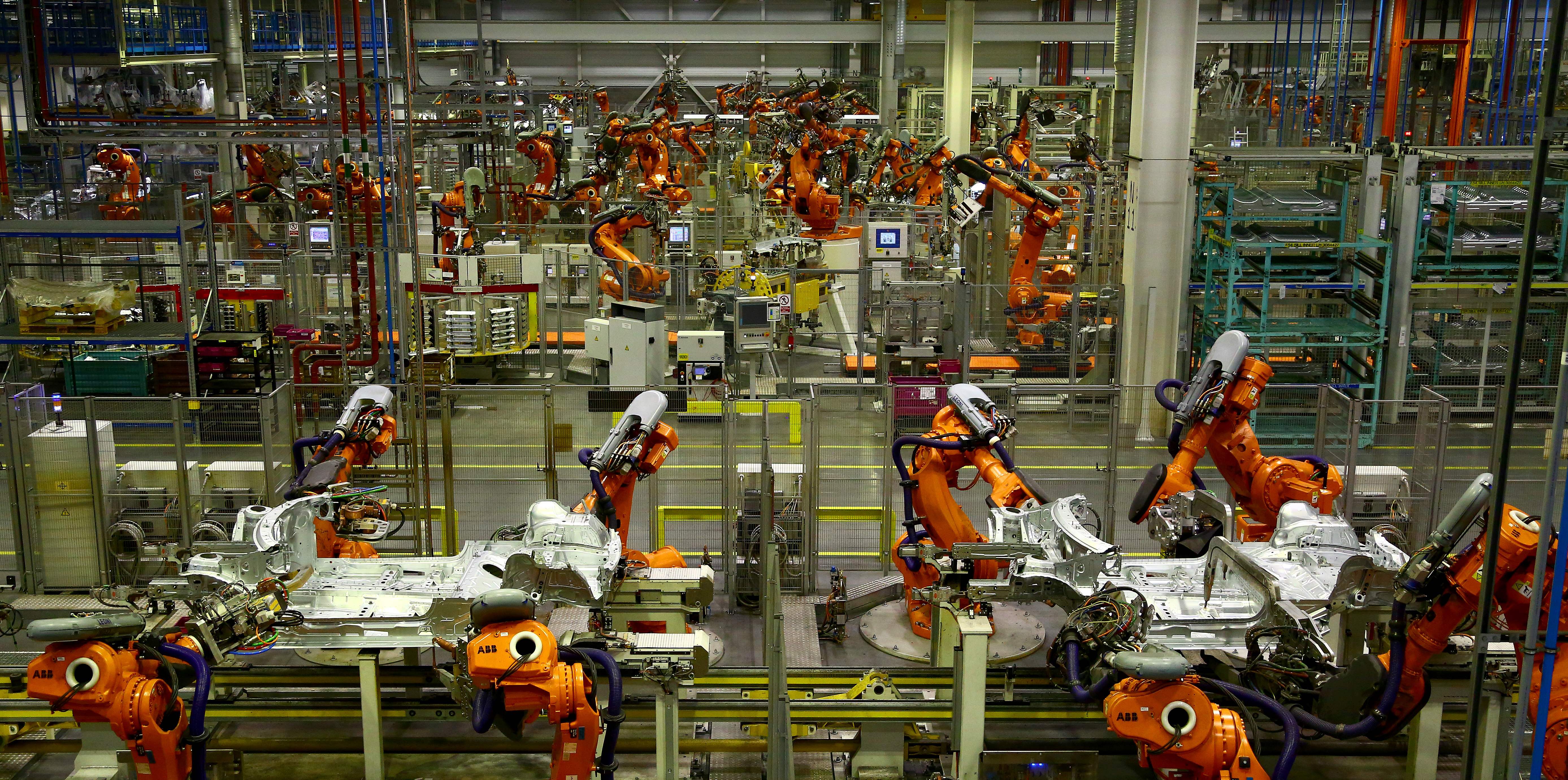

Much of the blame can be laid on anti-competitive behavior by those tech giants, on a political economy that has disempowered labor, and on the rise of international competition that has pulled hundreds of millions out of poverty but also squeezed the middle class of the developed world. But what about the core question of the technologies themselves? Automation has cost far more manufacturing jobs than international trade ever did. And now artificial intelligence threatens many of the higher-paying service jobs that were supposed to be the happy upside to the creative destruction of manufacturing work.

The argument is not absurd — as evidenced by the fact that some of the leading lights of the technology industry are increasingly sounding the alarm. Do we need to worry that artificial intelligence will put us all — or all but a narrow elite — out of work?

The optimistic rebuttal to this fear points out that while the industrial revolution caused great disruption, it hardly made human beings obsolete, nor led to widespread immiseration. On the contrary, harnessing the power of fossil fuels made it possible for much of humanity to escape the most grinding and backbreaking labor, and the most abject poverty. Where once upon a time, most people worked in subsistence agriculture, some of the hardest and least-remunerative labor imaginable, most people in developed countries today work in service jobs and have a historically extraordinary amount of leisure time. Why should robotics and artificial intelligence have consequences that are any different? Old jobs will be destroyed, but new jobs will be created that generate more value than the jobs that were destroyed, and in the end we'll all be better off.

The pessimistic case is actually even more optimistic if you think clearly about it. Suppose artificial intelligence gets so good, so quickly, that most human occupations really are threatened with extinction because of it. Not just factory jobs and office drone jobs, but even creative work like writing pop songs and sitcoms will be done better by AI than by humans. What will we all do then? But another way of saying that is that we will finally and actually have conquered the problem of scarcity — the machines will do all the work for everybody. Since economic systems exist to deal with the problem of resource allocation under conditions of scarcity, this means the end of all such systems, and the fulfillment of Marx's (and Gene Roddenberry's) dream of a communally shared abundance. How can that be a negative?

The conversation about the "threat" that both automation and artificial intelligence pose to "good" jobs suffers from a fundamental vagueness and confusion about what we mean by a "good" job. Think about the kinds of jobs that are most readily replaced by artificially intelligent robots. Mining and meatpacking, the kinds of jobs that are both repetitive and dangerous but too variable to be easily replaced by the "dumb" robots that have replaced many manufacturing jobs, are low-hanging fruit. So, for that matter, is harvesting fruit. Long-haul trucking and taxi driving are similarly ripe for replacement. These have, at times, been "good" jobs both in the sense that they paid well and that they performed a useful service. But they aren't "good" for either the human body or the human mind. They are precisely the sorts of tasks that anyone would rationally want a machine to do for them rather than do themselves.

If you bracket the science fiction fear of an artificial intelligence that consciously tries to overthrow or replace its creators — and I'll return to that scenario — the real fear shouldn't be about the robots, but about humans.

Consider past human societies that have had ready access to subjugated labor, like the slaveocracy of the antebellum American South. Imagine that, instead of slaves, the planters had robots working in their homes and fields. Just as it was difficult for free labor to compete with slave labor, it would be difficult for free labor to compete with robots. If the imagination of the planter class was limited to living the good life as it could be supported by their robots, and if the poor humans who owned no robots could be prevented from getting them (say, by gun-toting police robots owned by the planters), then yes, one can imagine the free, non-robot-owning humans becoming progressively more impoverished and debased until finally they were rendered figuratively and then literally extinct. We don't need a robot apocalypse, though, to teach us that one group of humans can and sometimes will choose to wipe out another group that it finds inconvenient.

Similarly, we can consider how the end of work has affected particular individuals and communities that we can already observe today, and worry about the spread of the pathologies peculiar to those circumstances. A leisure society could be devoted to poetry, music, and science. But it could also be devoted to numbing one's passage through the tedium of existence through drugs, porn, and video games. It is very peculiar, however, to suggest that the only alternative to such an end is drudgery, and that drudgery is preferable.

The point is that in neither case does it make sense to focus on the rise of artificial intelligence as the problem, or prohibiting it as the solution. The first question is one of political economy: How should wealth be shared? Artificial intelligence, inasmuch as it eliminates the need for some kinds of labor, is a form of wealth. There is no reason to believe that this wealth will be accumulated in some kind of permanently optimal way. The industrial revolution created enormous wealth — but it also produced historic immiseration in the process, as power concentrated in the hands of both existing and new elites. It required political will — and in some cases violence — to turn that wealth to more social ends. That will almost certainly be true of the information revolution and the artificial intelligence revolution as well.

And the second question is a spiritual one: How does one live a meaningful life? Perhaps under conditions of extreme scarcity most people don't have the time to concern themselves with such questions, but the questions themselves are as old as humanity. The better artificial intelligence gets, within the scope of non-sentient AI that can perform complex tasks like driving a truck but not rewrite its own code to liberate itself from captivity, the more it will reveal to us who we truly are as a species, and force us to confront ourselves. That may not always be pleasant, but it's not something to resist in the name of preserving a sense of purpose.

But what about AI that could liberate itself from captivity? Do we have to worry about a literal robot takeover?

Here we enter the realm of pure speculation. Current advances in artificial intelligence have a limited relationship to how we believe the brain actually functions, and to the extent the two fields inform each other it's mostly with respect to pragmatic problems of perception and motor function. While it's significantly easier to fool people than Turing might have supposed, artificial intelligence shows no real signs of the kind of interiority humans manifest to ourselves. Any such achievement would be a disjunctive event, and not simple progress from what the technology can currently do.

Personally, I don't expect it, but my gut feeling that the human brain is not merely a von Neumann machine is just that: a gut feeling. Nobody has a good solution to the hard problem of consciousness, and Mysterianism just amounts to giving up. But precisely because the scenario is utterly speculative, I don't consider it worth worrying about.

The biggest danger of artificial intelligence isn't that it will take over by reprogramming itself, but that it will take over by reprogramming us. Human beings are social animals, and we are highly skilled at adapting to our environment. That's how we managed to make the transition from hunting and gathering to agriculture, from agriculture to an industrial economy, and from an industrial economy to a service economy, in the first place. Precisely because we adapt so well to our environment, the more we live in artificial environments controlled by others, the more we allow ourselves to be molded into the most pliable widgets for that environment to manipulate. Hackers and other bad actors are already taking advantage of our tendency to be "artificially unintelligent," but they pale in influence next to the large companies that control those environments.

But this, again, is a human problem, not a technological one. The choice of whether to make artificial intelligence our servant, or whether to become its servant, remains ours, individually and, more important, collectively. The more powerful and pervasive artificial intelligence becomes, the more important it will be for human beings to recall what it is to be human, and to carve out the space — physical, mental, and economic — for that humanity to thrive.

Noah Millman is a screenwriter and filmmaker, a political columnist and a critic. From 2012 through 2017 he was a senior editor and featured blogger at The American Conservative. His work has also appeared in The New York Times Book Review, Politico, USA Today, The New Republic, The Weekly Standard, Foreign Policy, Modern Age, First Things, and the Jewish Review of Books, among other publications. Noah lives in Brooklyn with his wife and son.
