Why do all our virtual assistants sound female?

Alexa, are you a woman?

Voice assistant technology is arguably one of the coolest and most useful innovations built into many of today's devices. Amazon Echo can use Alexa to order you toothpaste, and Google Assistant can control much of your smart home, or recognize which voice belongs to which family member and customize the conversation accordingly. In other words, our digital personal assistants are getting pretty advanced.

But for all that technological progress, there's an old-fashioned flaw lingering in our digital assistants: They all sound like women.

Of course, your digital assistant won't admit to being female. Ask Siri, Google Assistant, or Cortana about their gender and they'll assure you, in one way or another, that they are genderless. Pose the gender question to Amazon's Alexa, and this is the answer you'll get: "I'm female in character." But the feminine voices with which they respond are unmistakable.

Even the names of these assistants imply femininity. Siri, Cortana, Alexa: they all have a distinctly feminine ring to them. Only Google Assistant has a somewhat androgynous name, but the neutral label does little to offset its feminine voice. Sure, in theory, the other names could be androgynous labels too. But science would put them in the "female" category. Back in 1995, researchers at the University of Pittsburgh came up with a phonetic chart outlining why some names sound more masculine or feminine than others. According to their findings, if a name ends with an "ah" sound or any other vowel, we usually consider it feminine. If you've ever taken a Romance language course that covered grammatical gender, you learned that masculine nouns typically end in "o" and feminine nouns in "a." It seems this pattern carries over into how we perceive proper names, not just nouns.

So all of this raises a question: As voice assistant technology becomes more prevalent, could there be downsides to casting our voice assistants exclusively as women?

Historically, women have outnumbered men in roles that involve serving others — jobs such as secretaries and office assistants. Because many of us have become accustomed to seeing women standing behind reception desks, carrying trays of food to hungry diners, or changing bed linens in hotel rooms, perhaps it's a subconscious bias that leads developers to give their servile creations feminine qualities.

There could also be a monetary, or simply a user-experience, motivation behind this trend. A study from Indiana University's School of Informatics and Computing found that we prefer feminine-sounding voice assistants, and that's true for both men and women. Why? The research suggests it's because female voices sound "warmer." As such, software developers may deliberately give future virtual assistants feminine voices because, to some extent, consumers prefer female-voiced gadgets to male-voiced ones.

But we also can't pretend that socially ingrained biases don't exist or that they don't influence our decisions. Whether people realize it or not, we often place women within certain helping roles. And making our tech helpers exclusively female by default reinforces this stereotype.

If all voice assistants are women, could this encourage people to order women around more, or view them as somehow less human?

The same question could be posed regarding the ethnicity of our voice assistants. While some GPS systems let users switch settings to hear voices with various vocal qualities that might indicate non-white ethnicities, this option is not available on all devices yet. People who aren't native English speakers have also pointed out that some voice assistants can't recognize their accents, an issue that is likely to become much more common as voice commands become more pervasive in our everyday technology.

We've seen throughout history the impact that language can have on one's self-identity and ability to be accepted into a society. For example, during Europe's Napoleonic Wars, the British imposed their own laws and the English language on the Dutch-descended South Africans (called Boers) who lived on South Africa's Western Cape, limiting their ability to express themselves in their native language and thereby also limiting the power they had within society.

Likewise, when English settlers expanded west across North America, they set up rules to govern local Native American populations. Many Native American families were forced to send their children to boarding schools, where the children were forbidden to speak their native languages, and were even forced to give up their names and adopt more traditionally English-sounding names.

The commonality across both of these examples (and the many, many others found throughout history) is the way in which dominant cultural groups try to suppress and remove "other" cultures from mainstream society.

Mohammad Khosravi Shakib wrote in a 2011 research paper in the Journal of Languages and Culture that language and culture are tied to one another. "Language carries culture, and culture carries, particularly through orature and literature, the entire body of values by which we perceive ourselves and our place in the world," he writes.

In essence, removing someone's ability to communicate in their own language removes part of their culture as well. More tangibly, it removes that person's ability to hold power in society. When voice assistants become the gatekeepers to your checking account, your car, and your education, it's going to matter a whole lot if voice technology only responds to perfect, "proper" English.

To build voice assistants that show no diversity, and that can't recognize certain languages, slang words, or expressions, is to risk alienating entire groups of people from the technology. And maybe that wouldn't be such a huge issue if voice-controlled technology didn't seem poised to become an integral part of U.S. society.

Going forward, developers would do well to build diversity into their technologies. If the goal is to make these technologies more human-like, they need to better reflect the human race as a whole.

Kayla Matthews is a technology journalist and writer, contributing to The Week as well as publications like VentureBeat, Motherboard, and MakeUseOf. She is also the owner and editor of the productivity and tech blog Productivity Bytes.

-

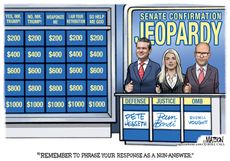

5 Senate-approved cartoons on the Trump confirmation hearings

5 Senate-approved cartoons on the Trump confirmation hearingsCartoons Artists take on non-answers, drunken rhetoric, and more

By The Week US Published

-

The best new cars for 2025

The best new cars for 2025The Week Recommends From family SUVs to luxury all-electrics these are the most hotly anticipated vehicles

By The Week UK Published

-

Jean-Marie Le Pen: rabble-rousing co-founder of the French National Front

Jean-Marie Le Pen: rabble-rousing co-founder of the French National FrontIn the Spotlight Once called the 'most hated man in France', Le Pen maintained that his ideas were simply 'ahead of their time'

By The Week UK Published