5 ways good science goes bad

It's a dangerous world out there


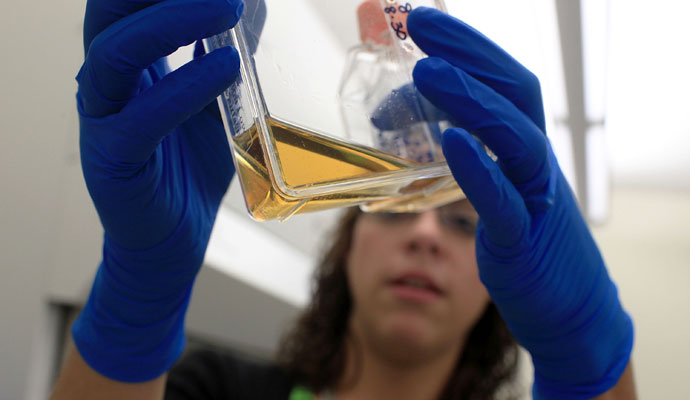

Good science is a continual process, susceptible at many points to introduced errors and outright manipulation by the misguided and the devious. It's critically important, as public faith in science continues to be tested, to take an honest look at some of the ways good science can be turned into something that misleads and erodes public trust.

1. Publication bias stunts the free flow of ideas

Publication in a top journal like Nature or The New England Journal of Medicine is the "coin of the realm" in science, says Ivan Oransky, vice president and global editorial director of MedPage Today and the founder of Embargo Watch. Unfortunately, research has shown that journals suffer from publication bias — subjectively favoring some studies over others.


"Positive publication bias" is the tendency for the leading journals to print positive studies and avoid publishing negative ones. Oransky notes that many journals make revenue from selling copies of published studies. When pharmaceutical companies use positive clinical trial results in their drug sales pitches, for example, they pay for many reprints — reducing the incentive for journals to run less lucrative stories on drug trials that didn't work out.

It helps with any journal's branding to publish ooh-ahh findings and breakthrough discoveries that result in citations in other papers, and that "impact factor" can introduce a different bias. "There's a straight line between the sexiness of a study's results and its number of citations," Oransky says. With a premium on papers with citation-worthy big outcomes, prestigious journals sometimes overlook basic but useful research papers.


The case of the antidepressant reboxetine is one example of publication bias. Ben Goldacre, a doctor and the author of The Guardian's Bad Science column, who has worked hard to blow the whistle on publication bias, discovered that seven clinical trials had been conducted on the drug. Only one, the trial with the smallest number of participants, found that reboxetine had a marked benefit vs. a placebo. That study was published and the other six were not, giving doctors a false impression of consensus.


2. Scientists commit fraud, leading to retractions

Each year, more than a million scientific papers are published in hundreds of peer-reviewed journals, and between 400 and 500 of these are later retracted. That's a very small percentage, admits Adam Marcus, the managing editor of two medical publications and co-founder, with Oransky, of the watchdog blog Retraction Watch. The big problem, Marcus says, is that two-thirds of retractions stem from researcher misconduct: fraud, fabrication, plagiarism, or other ethical failures. The general public may not hear about every retraction, but some cases are so egregious they make headlines.

Take discredited anesthesiologist Scott Reuben. In 2009, he admitted fabricating data in 21 papers that praised the benefits of pain drugs like Celebrex and Lyrica. Or Haruko Obokata, a stem-cell researcher at Japan's top institute, who had two papers published in Nature retracted this year. Obokata claimed she had found a way to generate embryonic stem cells from an adult cell through simple stress, but her peers were unable to replicate the blockbuster results. The world-record holder, according to Marcus, is Japanese anesthesiologist Yoshitaka Fujii, with a grand total of 173 retractions for various offenses.

This kind of shoddy science, when widely reported, can have a disastrous long-term impact. Disgraced British researcher Andrew Wakefield claimed, in a 1998 paper, that the measles, mumps, and rubella (MMR) vaccine could cause autism in children. Following its investigation in 2011, the British Medical Journal said Wakefield had misrepresented his 12 study subjects (some of whom did not have autism at all) and willfully faked data. But the widely cited results of this flawed study became the foundation of an anti-vaccine movement that is putting untold numbers of children at risk today.

As comfortingly low as the overall percentage of retractions may be, that number has increased tenfold since 1975. Greater scrutiny from watchdog groups and investigative journalists is bringing the growing problem into the open, in hopes of stemming the tide.

3. Disinformation spreads, impairing public understanding of science

The infamous Climategate scandal is a shining example of what can go wrong when the scientific process meets conspiracy theory. Anonymous hackers broke into servers at the University of East Anglia's Climate Research Unit in November 2009, stealing thousands of private documents and emails, just before world leaders gathered to discuss climate change solutions at a meeting in Copenhagen. Global warming skeptics said that the unvarnished conversations proved that scientists had cooked up anthropogenic climate change as a vast environmental conspiracy.


The researchers, including Michael Mann, said their emails had been taken out of context, and critics said the timing of the hack was suspicious. Multiple independent investigators combed the documents for scientific misconduct and found nothing, but the damage had been done. A Yale University survey in 2010 found that the public's belief in anthropogenic climate change and its trust in scientists had fallen significantly following the Climategate kerfuffle. Journalists and science educators are still trying to combat the incorrect impression that climate change is a hoax.

4. Scientists flock to well-funded fields, increasing risk of distorted results

It's tempting to follow the money. When the government, corporations, or foundations announce a major initiative to fund a specific type of research, there's an obvious incentive for scientists to flock to that field. Oransky says that some universities encourage their post-docs to use "keywords" like cancer or HIV in their proposals, or to spin their project descriptions to suggest a real-world outcome for the average Joe, in order to attract the attention of the big grant-givers. Industry funders, in turn, might try to control the parameters of a study once they release its funding.

A 2010 study led by Trinity College economics researcher William Butos found three major consequences when the bulk of research funding comes from a handful of major institutions: "Effects on the choices of research activity, destabilizing effects (both long-run and short-run) on physical arrangements and resources supporting science and on the employment of scientists themselves, and distorting effects on the procedures scientists use to generate and validate scientific knowledge." And there's an even greater chance of distortion when the funder exerts regulatory oversight.

The byproduct of this scenario? Fewer scientists focus on untrendy, poorly funded research, and scientists in the better-funded fields face a greater risk of distorted results. It's a lose-lose scenario, which will likely continue as long as a small number of superrich funders are pulling the majority of the strings.

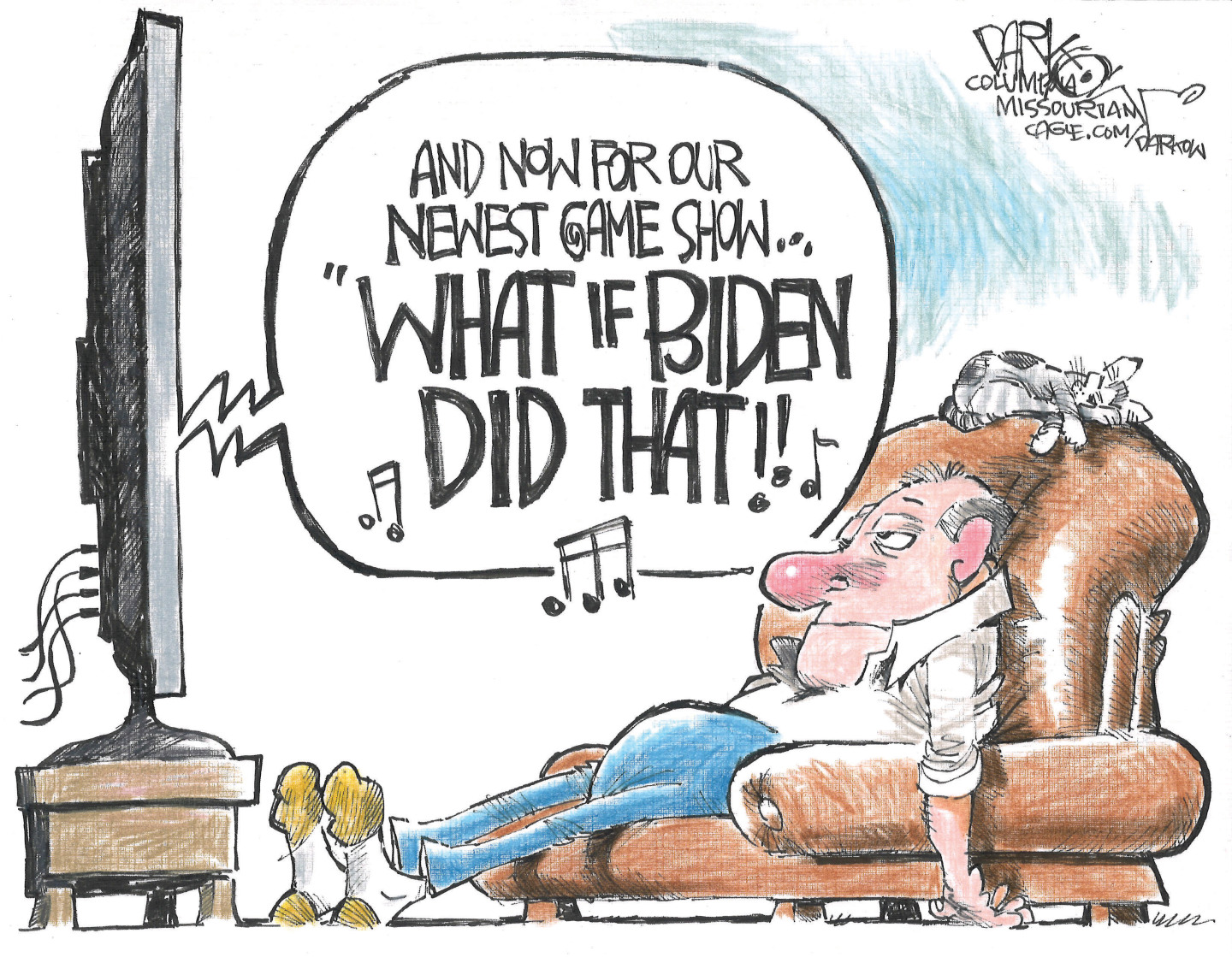

5. Science is skewed to suit political and corporate agendas

Politicians can be quick to misinterpret science to push their agenda or shape their message, but the media is no better when it comes to reporting on climate change. Cable news outlets discuss global warming with wildly varying degrees of accuracy, a Union of Concerned Scientists (UCS) survey found: Fox News is scientifically accurate only 28 percent of the time, while MSNBC gets it right 92 percent of the time.


Earlier this year, conservative lawmakers pounced on reports of a lull in the rate of Earth's surface warming (badly misworded as a "global warming pause") as proof that global warming had stopped or had never really existed at all. Researchers, however, explained that the increasing amount of heat trapped in Earth's atmosphere affects oceans much more than land, so surface temperature isn't an accurate tool for measuring all the warming that's actually occurring. That point was largely lost on the audience.

As fallible as scientists can be, censoring them from speaking creates its own kind of damaging distortion, as their biased counterparts in the political and corporate spheres are not similarly restrained. The U.S. Environmental Protection Agency has moved to prohibit its scientists and independent advisers from talking to reporters without permission, and the Canadian government has prevented its climatologists from holding press conferences or speaking to the media. In hearings before the House Committee on Science, Space and Technology of the 112th Congress, industry witnesses from oil and gas, aerospace, computer and agriculture corporations outnumbered academic and independent witnesses.
