Big Finance's hyper-focus on frequency creates a blind spot on magnitude

And what can be done to change that

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com


My main takeaway from Alan Greenspan's latest book, The Map and the Territory: Risk, Human Nature, and the Future of Forecasting, is that forecasters (and all those who rely on them) fail to realize that, over the long run, even frequently accurate predictions mean little if they don't account for the magnitude of relatively infrequent mistakes.

"In practice," Greenspan writes, "model builders (myself included) keep altering the set of chosen variables and equation specifications until we get a result that appears to replicate the historical record in an economically credible manner."

Consider an example from the financial crisis. House prices had done nothing but rise for a long time, so the models assumed they would keep rising. That assumption fit the past perfectly, but not the future. As we now know, house prices can indeed go down.

The Week


Economic models are used to make policy decisions, set interest rates, and intervene in the so-called normal functioning of the market. When the models work, they work very well. When they don't work, we're left with a massive mess.

Greenspan, for one, places too much weight on the historical accuracy of models. And his plan for the future, essentially more complicated and better models, is unlikely to prevent catastrophes like the one we saw during the Great Recession.

"September 2008 was a watershed moment for forecasters, myself included," he writes. "It has forced us to find ways to incorporate into our macro models those animal spirits that dominate finance." But this still leaves us relying on these models, which will effectively forecast the future — until they don't.

It doesn't matter if your forecast is right 99 times if on the 100th time you are not only wrong, but catastrophically wrong.


Consider an investment. Let's say a statistical model has helped your mutual fund generate 20 percent returns compounded annually for 20 years. Everything is going splendidly, and you have just one more year until retirement. In that last year, however, a historical anomaly hits, the model fails, and everything is wiped out. The model has only been wrong one year, but it cost you everything.
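The arithmetic behind this example is easy to check. The minimal Python sketch below uses the hypothetical figures above (20 percent a year for 20 years, then a total loss in the final year); the `compound` helper is illustrative, not from any library. It shows that a single year of -100 percent erases every prior gain, however long the winning streak.

```python
# Hypothetical figures from the example: 20 percent a year for 20 years,
# then one final year in which the model fails and the fund loses everything.

def compound(start: float, yearly_returns: list[float]) -> float:
    """Grow `start` through yearly returns (0.20 means +20%, -1.0 means -100%)."""
    value = start
    for r in yearly_returns:
        value *= 1 + r
    return value

good_years = [0.20] * 20
before_last_year = compound(1.0, good_years)
after_last_year = compound(1.0, good_years + [-1.0])

print(f"After 20 good years: {before_last_year:.2f}x the starting stake")  # 38.34x
print(f"After the final year: {after_last_year:.2f}x the starting stake")  # 0.00x
print(f"Years the model was right: {len(good_years)} of {len(good_years) + 1}")
```

Because returns compound multiplicatively, one factor of zero wipes out the whole product: being right in 20 of 21 years counts for nothing.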

You don't need a math degree to realize the importance of magnitude. "The frequency," writes Nassim Taleb, in Antifragile, "i.e., how often someone is right is largely irrelevant in the real world, but alas, one needs to be a practitioner, not a talker, to figure it out. On paper the frequency of being right matters, but only on paper."
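A short expected-value calculation makes the point concrete. The payoffs below are hypothetical, chosen only for illustration, and `expected_payoff` is an illustrative helper. Forecaster A is right 99 times in 100 but suffers one catastrophic miss. Forecaster B is right only half the time, but B's misses are small.

```python
# Hypothetical payoffs, chosen only to illustrate frequency versus magnitude.
# Forecaster A is right 99 times in 100, gaining 1 unit each time, but loses
# 200 units on the single miss. Forecaster B is right only half the time,
# gaining 2 units when right and losing 1 unit when wrong.

def expected_payoff(p_right: float, gain_if_right: float, loss_if_wrong: float) -> float:
    """Average payoff per forecast: weight each outcome's size by its frequency."""
    return p_right * gain_if_right - (1 - p_right) * loss_if_wrong

a = expected_payoff(p_right=0.99, gain_if_right=1.0, loss_if_wrong=200.0)
b = expected_payoff(p_right=0.50, gain_if_right=2.0, loss_if_wrong=1.0)

print(f"A, right 99% of the time: {a:+.2f} per forecast")  # -1.01
print(f"B, right 50% of the time: {b:+.2f} per forecast")  # +0.50
```

The more "accurate" forecaster loses money on average, because the size of the rare miss outweighs the frequency of the small wins.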

But why are models built on statistics and historical patterns sometimes so wrong? Because the world is always changing. Consider the ancient advice of Heraclitus:

The river where you set your foot just now is gone: those waters giving way to this, now this.

Just because something worked in the past doesn't mean it will work in the future. Even a model that replicates history perfectly predicts the future well only when the future replicates the past.

If we are to have any hope of avoiding the next crisis, or at least minimizing its magnitude, better models alone are not enough. We need a cross-disciplinary approach. Rather than looking at things only through the lens of a purely economic model, let's borrow from psychology and engineering, too.

Engineers, for instance, deal with uncertainty by incorporating a margin of safety. Perhaps 99 percent of the vehicles that drive across a bridge weigh 10 tons or less, but infrequent trucks still exceed that. If we designed bridges the way we design economic policy, every once in a while we'd drive 11-ton trucks over a bridge built to carry 10 tons. That's far too risky. Better to design the bridge to carry 20 tons.
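The bridge comparison can be sketched as a simple capacity check. This is a deliberately simplified illustration using the hypothetical figures above (typical loads of 10 tons or less, an occasional 11-ton truck). Real structural design involves far more than one multiplier, and the helper names here are illustrative only.

```python
# Hypothetical figures from the bridge example: most vehicles weigh 10 tons or
# less, but occasional trucks weigh more (here, 11 tons). Greatly simplified:
# real structural design involves much more than a single multiplier.
TYPICAL_MAX_LOAD_TONS = 10.0
RARE_HEAVY_TRUCK_TONS = 11.0

def design_capacity(typical_load: float, safety_factor: float) -> float:
    """Capacity the structure is built for: typical load scaled by a safety factor."""
    return typical_load * safety_factor

def survives(capacity: float, load: float) -> bool:
    """True if the load stays within the design capacity."""
    return load <= capacity

for factor in (1.0, 2.0):
    capacity = design_capacity(TYPICAL_MAX_LOAD_TONS, factor)
    outcome = "holds" if survives(capacity, RARE_HEAVY_TRUCK_TONS) else "fails"
    print(f"Safety factor {factor}: built for {capacity:.0f} tons, 11-ton truck {outcome}")
```

With no margin (a factor of 1.0), the bridge is sized for what usually happens and fails on the rare heavy truck. Doubling the capacity absorbs the outlier without needing to predict exactly when it will arrive.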

We must also re-examine incentives for people who work in finance. Our systems cannot be based on the premise, "Heads I win, tails you lose." Upsides must be balanced with downsides. Warren Buffett seems to be onto something when he says:

If I were running things, if a bank had to go to the government for help, the CEO and his wife would forfeit all their net worth. ... And that would apply to any CEO that had been there in the previous two years. ... I think you have to change the incentives. The incentives a few years ago were try and report higher quarterly earnings. It's nice to have carrots, but you need sticks. The idea that some guy who's worth $500 million leaves and only has $50 million left is not much of a stick as far as I'm concerned.

Buffett's idea, of course, sounds a lot like a modern manifestation of Hammurabi's Code.

Shane Parrish is a Canadian writer, blogger, and coffee lover living in Ottawa, Ontario. He is known for his blog, Farnam Street, which features writing on decision making, culture, and other subjects.

-

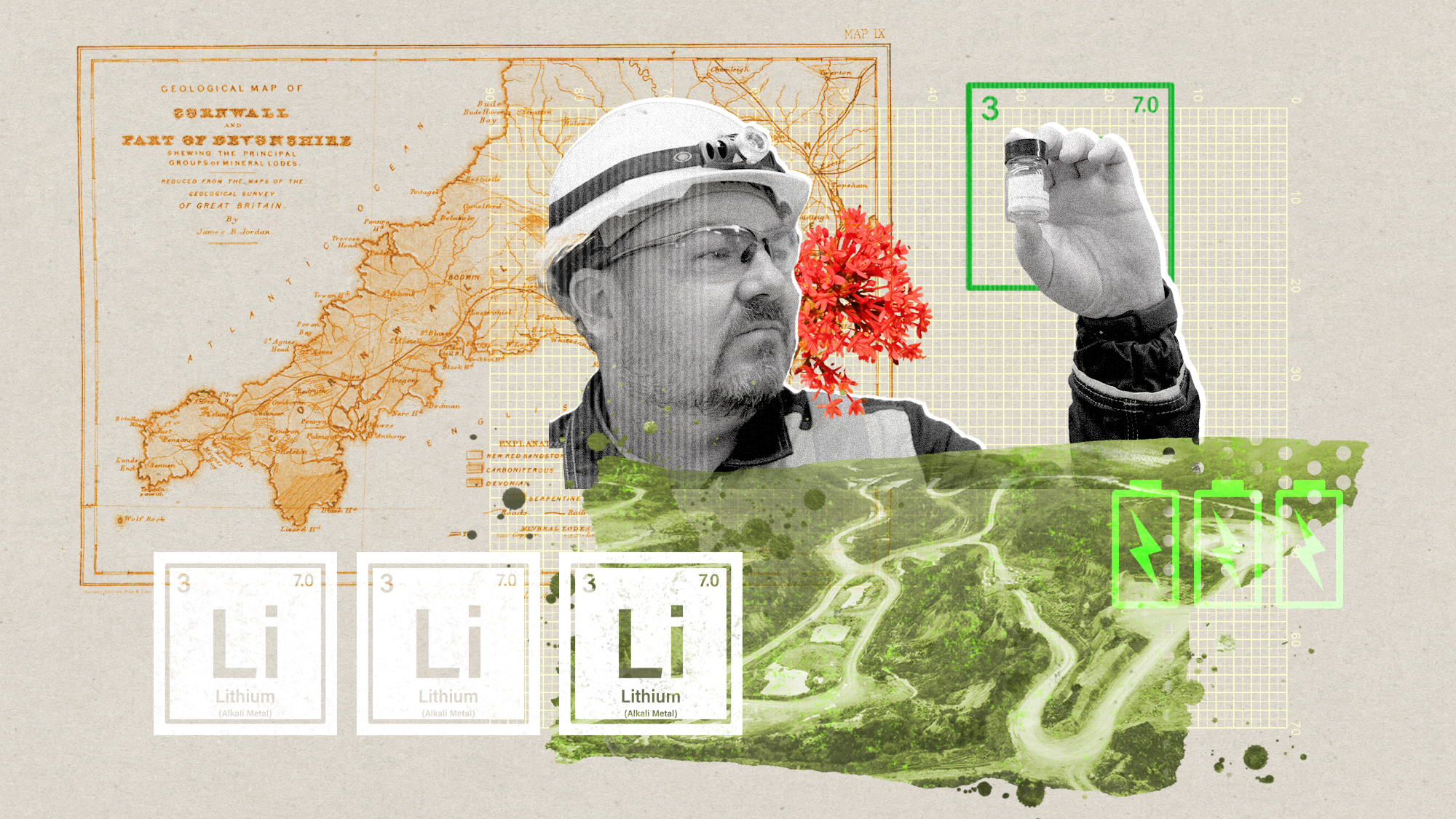

The ‘ravenous’ demand for Cornish minerals

The ‘ravenous’ demand for Cornish mineralsUnder the Radar Growing need for critical minerals to power tech has intensified ‘appetite’ for lithium, which could be a ‘huge boon’ for local economy

-

Why are election experts taking Trump’s midterm threats seriously?

Why are election experts taking Trump’s midterm threats seriously?IN THE SPOTLIGHT As the president muses about polling place deployments and a centralized electoral system aimed at one-party control, lawmakers are taking this administration at its word

-

‘Restaurateurs have become millionaires’

‘Restaurateurs have become millionaires’Instant Opinion Opinion, comment and editorials of the day