The dangers of quick thinking

Our intuition is often wrong, says Daniel Kahneman, especially when we're searching for patterns and causes

CONSIDER THIS: A study of the incidence of kidney cancer in the 3,141 counties of the United States reveals a remarkable pattern. The counties in which the incidence of kidney cancer is lowest are mostly rural, sparsely populated, and located in traditionally Republican states in the Midwest, the South, and the West. Now, what do you make of this information?

Your mind has been very active in the last few seconds, and it was mainly operating in what I call System 2 — the "slow" mode of thinking involved in effortful activities such as doing taxes, comparing two washing machines for best value, or driving in traffic. You deliberately searched memory and formulated hypotheses. Some effort was involved; your pupils dilated, and your heart rate increased measurably. But System 1 — the "fast" mode, which includes reacting to loud sounds, understanding simple sentences, and driving on empty roads — was not idle: You probably rejected the idea that Republican politics provide protection against kidney cancer. Very likely, you ended up focusing on the fact that the counties with low incidence of cancer are mostly rural. The statisticians Howard Wainer and Harris Zwerling, from whom I learned this example, commented, "It is both easy and tempting to infer that their low cancer rates are directly due to the clean living of the rural lifestyle — no air pollution, no water pollution, access to fresh food without additives." This makes perfect sense.

Now consider the counties in which the incidence of kidney cancer is highest. These ailing counties tend to be mostly rural, sparsely populated, and located in traditionally Republican states in the Midwest, the South, and the West. Wainer and Zwerling comment, "It is easy to infer that their high cancer rates might be directly due to the poverty of the rural lifestyle — no access to good medical care, a high-fat diet, and too much alcohol, too much tobacco." Something is wrong, of course. The rural lifestyle cannot explain both a very high and a very low incidence of kidney cancer.

The key factor is not that the counties were rural or predominantly Republican. It is that rural counties have small populations. And the main lesson to be learned is not about epidemiology; it is about the difficult relationship between our mind and statistics. System 1 is highly adept in one form of thinking: it automatically and effortlessly identifies causal connections between events, sometimes even when the connection is spurious. When told about the high-incidence counties, you immediately assumed that these counties are different from other counties for a reason, that there must be a cause that explains this difference. As we shall see, however, System 1 is inept when faced with "merely statistical" facts, which change the probability of outcomes but do not cause them to happen.

The predictability of randomness

A RANDOM EVENT, by definition, does not lend itself to explanation, but collections of random events do behave in a highly regular fashion. Imagine a large urn filled with marbles. Half the marbles are red, half are white. Next, imagine a very patient person (or a robot) who blindly draws four marbles from the urn, records the number of red marbles in the sample, throws the marbles back into the urn, and then does it all again, many times. If you summarize the results, you will find that the outcome "two red, two white" occurs (almost exactly) six times as often as the outcome "four red" or "four white." This relationship is a mathematical fact.
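The six-to-one ratio follows from the binomial distribution. Below is a minimal Python sketch. It assumes the urn is large enough that each of the four draws is effectively independent, with a one-half chance of red. The function name `prob_red_count` is our own.

```python
from fractions import Fraction
from math import comb


def prob_red_count(k: int, n: int = 4, p_red: Fraction = Fraction(1, 2)) -> Fraction:
    """Exact probability of k red marbles in a sample of n, assuming each draw
    is independent and red with probability p_red (binomial distribution)."""
    return comb(n, k) * p_red**k * (1 - p_red) ** (n - k)


two_two = prob_red_count(2)     # "two red, two white": 6 of the 16 equally likely orderings
four_red = prob_red_count(4)    # "four red": 1 of 16
four_white = prob_red_count(0)  # "four white": 1 of 16

print(two_two, four_red, four_white)  # 3/8 1/16 1/16
print(two_two / four_red)             # 6
```

The ratio is the same against "four white," because both extreme outcomes have the same 1/16 chance.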

A related statistical fact is relevant to the cancer example. From the same urn, two very patient marble counters take turns. Jack draws four marbles on each trial, Jill draws seven. They both record each time they observe a homogeneous sample — all white or all red. If they go on long enough, Jack will observe such extreme outcomes more often than Jill — by a factor of eight (the expected percentages are 12.5 percent and 1.56 percent). No causation, but a mathematical fact: Samples of four marbles yield extreme results more often than samples of seven marbles do.
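Jack's and Jill's percentages follow from the same assumption of independent, fifty-fifty draws. A sample is homogeneous if it is all red or all white, so its probability is (1/2)^n + (1/2)^n. Here is a sketch in Python, using a hypothetical helper `prob_homogeneous`:

```python
from fractions import Fraction


def prob_homogeneous(n: int, p_red: Fraction = Fraction(1, 2)) -> Fraction:
    """Chance that all n marbles in a sample share one color (all red or all
    white), assuming independent draws."""
    return p_red**n + (1 - p_red) ** n


jack = prob_homogeneous(4)  # 2/16 = 1/8
jill = prob_homogeneous(7)  # 2/128 = 1/64

print(f"Jack: {float(jack):.4%}")  # Jack: 12.5000%
print(f"Jill: {float(jill):.4%}")  # Jill: 1.5625%
print("ratio:", jack / jill)       # ratio: 8
```

The 1.5625 percent figure is what the text rounds to 1.56 percent.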

Now imagine the population of the United States as marbles in a giant urn. Some marbles are marked KC, for kidney cancer. You draw samples of marbles and populate each county in turn. Rural samples are smaller than other samples. Just as in the game of Jack and Jill, extreme outcomes (very high and/or very low cancer rates) are most likely to be found in sparsely populated counties. This is all there is to the story.
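A small simulation can check the county argument. This is an illustrative sketch, not Wainer and Zwerling's data. The county sizes (1,000 and 20,000 residents), the 0.5 percent underlying rate, and the function `simulate_county_rates` are all hypothetical. Every resident in every county gets the same chance of a case, so any difference between counties is pure sampling noise.

```python
import random
import statistics


def simulate_county_rates(populations, base_rate, seed=0):
    """Observed cancer rate per county when every resident, in every county,
    independently has the same probability base_rate of a case."""
    rng = random.Random(seed)
    rates = []
    for pop in populations:
        cases = sum(1 for _ in range(pop) if rng.random() < base_rate)
        rates.append(cases / pop)
    return rates


# Hypothetical setup: 100 sparsely populated counties of 1,000 residents and
# 100 populous counties of 20,000 residents, all with a 0.5% underlying rate.
SMALL, LARGE = 1_000, 20_000
populations = [SMALL] * 100 + [LARGE] * 100
rates = simulate_county_rates(populations, base_rate=0.005)

# Rank counties by observed rate and note the size of those at each extreme.
ranked = sorted(zip(rates, populations))
lowest_five = [pop for _, pop in ranked[:5]]
highest_five = [pop for _, pop in ranked[-5:]]

small_rates, large_rates = rates[:100], rates[100:]
print("sizes of the five lowest-rate counties:", lowest_five)
print("sizes of the five highest-rate counties:", highest_five)
print(f"stdev of rates, small: {statistics.stdev(small_rates):.4f}, "
      f"large: {statistics.stdev(large_rates):.4f}")
```

With these settings, both the lowest and the highest observed rates should belong to the 1,000-person counties, even though no county is riskier than any other. The exact figures depend on the random seed.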

Our predilection for causal thinking exposes us to serious mistakes in evaluating the randomness of truly random events. For an example, take the sex of six babies born in sequence at a hospital. The sequence of boys and girls is obviously random; the events are independent of each other, and the number of boys and girls who were born in the hospital in the last few hours has no effect whatsoever on the sex of the next baby. Now consider three possible sequences:

BBBGGG

GGGGGG

BGBBGB

Are the sequences equally likely? The intuitive answer, "of course not!", is false. Because the events are independent and the outcomes B and G are (approximately) equally likely, any possible sequence of six births is as likely as any other. Even now that you know this conclusion is true, it remains counterintuitive, because only the third sequence appears random. As expected, BGBBGB is judged much more likely than the other two sequences. We are pattern seekers, believers in a coherent world, in which regularities (such as a sequence of six girls) appear not by accident but as a result of mechanical causality or of someone's intention. We do not expect to see regularity produced by a random process, and when we detect what appears to be a rule, we quickly reject the idea that the process is truly random. Random processes produce many sequences that convince people that the process is not random after all.

You can see why assuming causality could have had evolutionary advantages. It is part of the general vigilance that we have inherited from ancestors. We are automatically on the lookout for the possibility that the environment has changed. Lions may appear on the plain at random times, but it would be safer to notice and respond to an apparent increase in the rate of appearance of prides of lions, even if it is actually due to the fluctuations of a random process.
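Under the text's assumptions (independent births, B and G equally likely), each specific sequence of six births has probability (1/2)^6 = 1/64. The Python sketch below treats the two outcomes as exactly equally likely, which simplifies the real birth ratio. Enumerating all 64 sequences also shows where intuition gets its foothold. Far more sequences contain a mix of boys and girls than contain six girls, but that is a statement about categories of sequences, not about any one sequence.

```python
from fractions import Fraction
from itertools import product

# Text's assumptions: births are independent, and B and G are treated here as
# exactly equally likely (the real sex ratio is only approximately 1:1).
P_EACH = Fraction(1, 2)


def sequence_probability(seq: str) -> Fraction:
    """Probability of one specific ordered sequence of births, e.g. 'BGBBGB'."""
    return P_EACH ** len(seq)


for seq in ("BBBGGG", "GGGGGG", "BGBBGB"):
    print(seq, sequence_probability(seq))  # each line ends in 1/64

# Categories differ in size even though individual sequences do not.
all_sequences = ["".join(s) for s in product("BG", repeat=6)]
three_boys = sum(1 for s in all_sequences if s.count("B") == 3)
six_girls = sum(1 for s in all_sequences if s.count("B") == 0)
print(len(all_sequences), three_boys, six_girls)  # 64 20 1
```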

The myth of the hot hand

AMOS TVERSKY AND his students Tom Gilovich and Robert Vallone once caused a stir with their study of misperceptions of randomness in basketball. The "fact" that players occasionally acquire a hot hand is generally accepted by players, coaches, and fans. The inference is irresistible: A player sinks three or four baskets in a row and you cannot help forming the causal judgment that this player is now hot, with a temporarily increased propensity to score. Players on both teams adapt to this judgment — teammates are more likely to pass to the hot scorer and the defense is more likely to double-team. Analysis of thousands of sequences of shots led to a disappointing conclusion: There is no such thing as a hot hand in professional basketball, either in shooting from the field or scoring from the foul line. Of course, some players are more accurate than others, but the sequence of successes and missed shots satisfies all tests of randomness. The hot hand is entirely in the eye of the beholders, who are consistently too quick to perceive order and causality in randomness. The hot hand is a massive and widespread cognitive illusion.
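One way to see what "satisfies all tests of randomness" means is to simulate a shooter who, by construction, has no hot hand. This is a hypothetical sketch, not the method Gilovich, Vallone, and Tversky used. The 50 percent make rate, the 100,000 shots, and the helper functions are all assumptions. Long runs of baskets still appear many times, while the make rate right after three straight makes stays close to the overall rate.

```python
import random


def simulate_shots(n_shots, hit_rate, seed=1):
    """A hypothetical shooter with no hot hand: every shot is independent
    and goes in with the same probability hit_rate."""
    rng = random.Random(seed)
    return [rng.random() < hit_rate for _ in range(n_shots)]


def count_streaks(shots, min_len):
    """Number of separate runs of at least min_len consecutive makes."""
    streaks, run = 0, 0
    for made in shots:
        run = run + 1 if made else 0
        if run == min_len:  # count each run once, at the moment it reaches min_len
            streaks += 1
    return streaks


def make_rate_after_streak(shots, k):
    """Share of shots made among those that immediately follow k straight makes."""
    following = [shots[i] for i in range(k, len(shots)) if all(shots[i - k:i])]
    return sum(following) / len(following)


shots = simulate_shots(100_000, hit_rate=0.5)
print("overall make rate:", sum(shots) / len(shots))
print("runs of 4+ straight makes:", count_streaks(shots, 4))
print("make rate after 3 straight makes:", make_rate_after_streak(shots, 3))
```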

The public reaction to this research is part of the story. The finding was picked up by the press because of its surprising conclusion, and the general response was disbelief. When the celebrated coach of the Boston Celtics, Red Auerbach, heard of Gilovich and his study, he responded, "Who is this guy? So he makes a study. I couldn't care less." The tendency to see patterns in randomness is overwhelming — certainly more impressive than a guy making a study.

The secret of successful schools

I BEGAN WITH the example of cancer incidence across the United States. The example appears in a book intended for statistics teachers, but I learned about it from an amusing article by the two statisticians I quoted earlier, Wainer and Zwerling. Their essay focused on a large investment, some $1.7 billion, which the Gates Foundation made to follow up intriguing findings on the characteristics of the most successful schools. Many researchers have sought the secret of successful education by identifying the most successful schools in the hope of discovering what distinguishes them from others. One of the conclusions of this research is that the most successful schools, on average, are small. In a survey of 1,662 schools in Pennsylvania, for instance, six of the top 50 were small, which is an overrepresentation by a factor of four. These data encouraged the Gates Foundation to make its investment in the creation of small schools, sometimes by splitting large schools into smaller units.
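The text does not say what share of the 1,662 schools counted as small, but the stated factor of four implies it. Writing s for that share:

$$
\frac{\text{share of small schools in the top 50}}{\text{share of small schools overall}}
= \frac{6/50}{s} = 4
\quad\Longrightarrow\quad
s = \frac{0.12}{4} = 0.03
$$

So roughly 3 percent of the schools surveyed were small, compared with 12 percent of the top 50. The figure is approximate, since the factor of four is itself rounded.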

This probably makes intuitive sense to you. It is easy to construct a causal story that explains how small schools are able to provide superior education and thus produce high-achieving scholars by giving them more personal attention and encouragement than they could get in larger schools.

Unfortunately, the causal analysis is pointless because the facts are wrong. If the statisticians who reported to the Gates Foundation had asked about the characteristics of the worst schools, they would have found that bad schools also tend to be smaller than average. The truth is that small schools are not better on average; they are simply more variable. If anything, say Wainer and Zwerling, large schools tend to produce better results, especially in higher grades, where a variety of curricular options is valuable.
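The same sampling effect appears with schools. In this hypothetical sketch, every student in every school draws a test score from the same distribution, with a mean of 500 and a standard deviation of 100 (numbers chosen only for illustration). Of the 1,000 simulated schools, 20 percent have 50 students and the rest have 500. Size is the only difference between schools, so the sketch isolates the variability effect and deliberately ignores any real advantage of large schools.

```python
import random
import statistics


def simulate_school_averages(sizes, seed=2):
    """Average score per school when every student in every school draws a
    score from the same hypothetical distribution (mean 500, sd 100)."""
    rng = random.Random(seed)
    return [statistics.fmean(rng.gauss(500, 100) for _ in range(n)) for n in sizes]


# Hypothetical mix: 200 small schools (50 students) and 800 large ones (500).
SMALL, LARGE = 50, 500
sizes = [SMALL] * 200 + [LARGE] * 800
averages = simulate_school_averages(sizes)

# Rank schools from best to worst average and count small schools at each end.
ranked = sorted(zip(averages, sizes), reverse=True)
small_in_top = sum(1 for _, n in ranked[:50] if n == SMALL)
small_in_bottom = sum(1 for _, n in ranked[-50:] if n == SMALL)

print(f"small schools: 20% of all, {small_in_top / 50:.0%} of the top 50, "
      f"{small_in_bottom / 50:.0%} of the bottom 50")
print("mean of small-school averages:", round(statistics.fmean(averages[:200]), 1))
print("mean of large-school averages:", round(statistics.fmean(averages[200:]), 1))
```

Small schools should be overrepresented at both the top and the bottom of the ranking, while their overall average stays about the same as that of the large schools.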

The law of small numbers (the mistaken expectation that small samples mirror their population as faithfully as large ones do) is part of a larger story about the workings of the mind: Statistics produce many observations that appear to beg for causal explanations but do not lend themselves to such explanations. Many facts of the world are due to chance, including accidents of sampling. And causal explanations of chance events are inevitably wrong.
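The regularity behind the urn, the counties, and the schools is captured by the standard formulas for sampling variability. For a sample of size n, the sample mean \bar{x} of a trait with population standard deviation σ, and the sample proportion \hat{p} of a yes/no trait (such as a cancer diagnosis) with population proportion p, have these spreads:

$$
\operatorname{SD}(\bar{x}) = \frac{\sigma}{\sqrt{n}},
\qquad
\operatorname{SD}(\hat{p}) = \sqrt{\frac{p(1-p)}{n}}
$$

Spread shrinks only with the square root of n. A county with one hundredth the population of another, and the same underlying rate, therefore has observed rates roughly ten times as spread out.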

©2011 by Daniel Kahneman. Reprinted by permission of Farrar, Straus & Giroux. All rights reserved. Excerpted from Thinking, Fast and Slow.

-

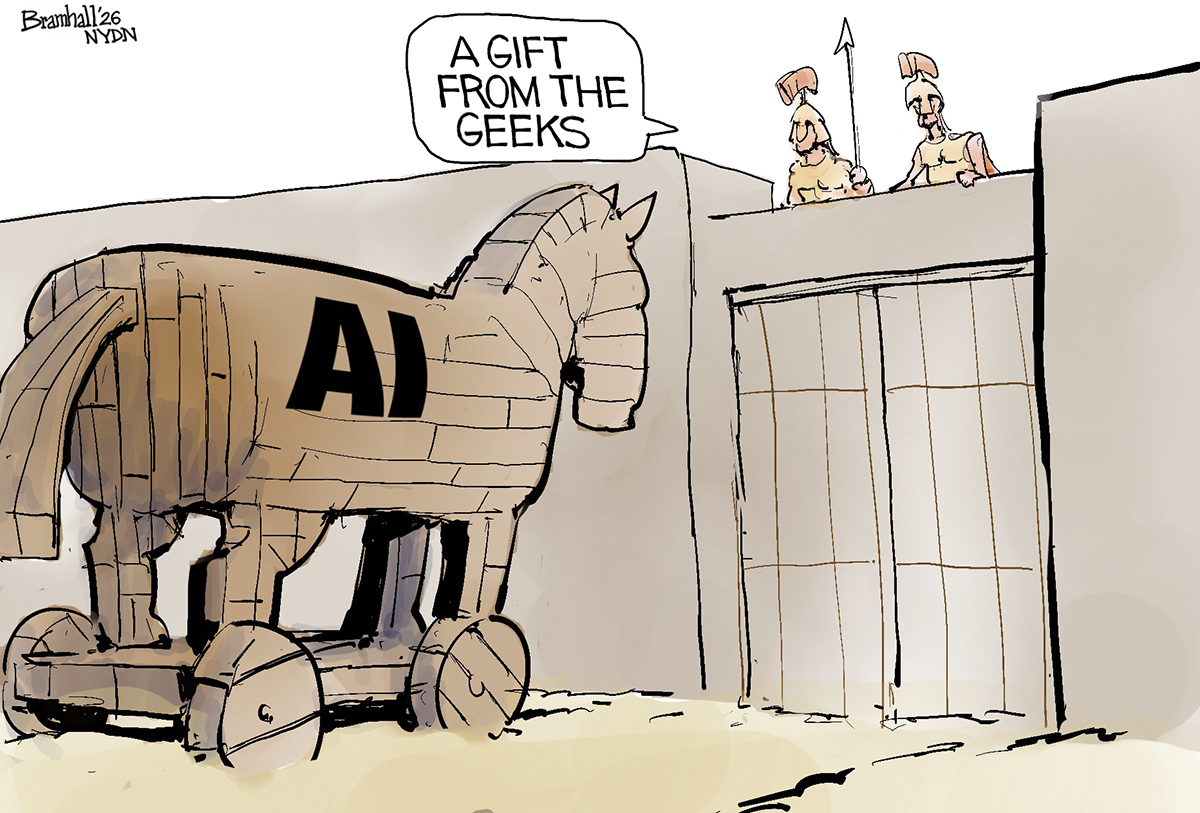

Political cartoons for February 19

Political cartoons for February 19Cartoons Thursday’s political cartoons include a suspicious package, a piece of the cake, and more

-

The Gallivant: style and charm steps from Camber Sands

The Gallivant: style and charm steps from Camber SandsThe Week Recommends Nestled behind the dunes, this luxury hotel is a great place to hunker down and get cosy

-

The President’s Cake: ‘sweet tragedy’ about a little girl on a baking mission in Iraq

The President’s Cake: ‘sweet tragedy’ about a little girl on a baking mission in IraqThe Week Recommends Charming debut from Hasan Hadi is filled with ‘vivid characters’