Is facial recognition technology safe?

Use of controversial surveillance techniques by UK police is being challenged in landmark court case

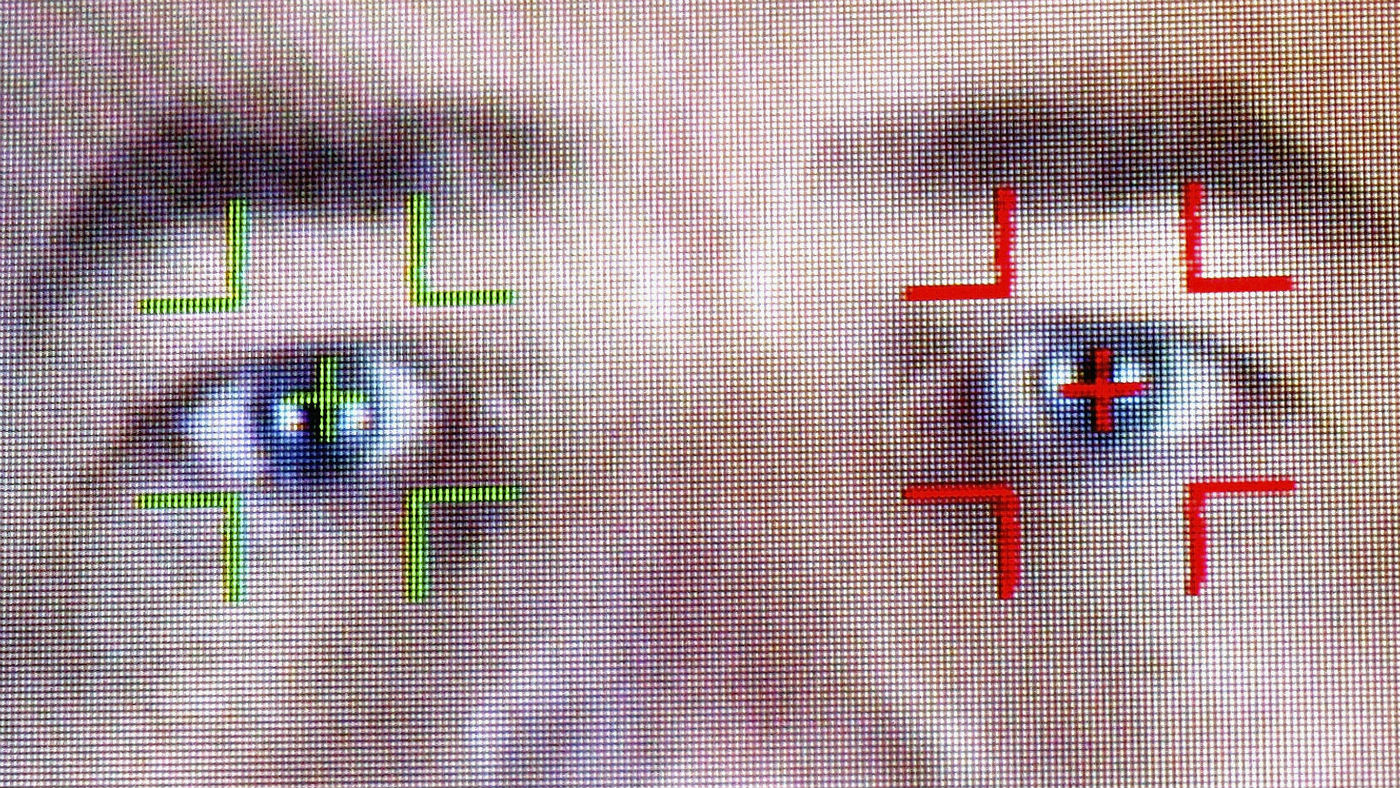

The first major legal challenge to the use of automated facial recognition (AFR) surveillance by British police begins this week.

Human rights group Liberty has launched a case against South Wales Police, the UK force that has pioneered the use of AFR, which can map faces and compare them to a database in real time.

Supporters claim facial recognition technology “will boost the safety of citizens and could help police catch criminals and potential terrorists”, reports The Daily Telegraph. But critics have labelled it “Orwellian” and say police have not been “transparent” about how they will use the data.

What's the case about?

The case has been brought by Ed Bridges, an employee at Cardiff University and former Lib Dem councillor, who claims that police scanned his face twice while he was out shopping in the Welsh capital.

Ahead of the hearing at Cardiff Administrative Court, Bridges said: “The police are supposed to protect us and make us feel safe but the technology is intimidating and intrusive.”

AFR technology “is a far more powerful policing tool than traditional CCTV - as the cameras take a biometric map, creating a numerical code of the faces of each person who passes the camera”, says the BBC.

The numerical code has similar properties to DNA or fingerprints but unlike these two markers, “there is no specific regulation governing how police use facial recognition or manage the data gathered”, the broadcaster reports.

Liberty says that even if there were regulations, the technology is too intrusive and should not be used for policing.

But Chris Phillips, former head of the National Counter Terrorism Security Office, argues: “If there are hundreds of people walking the streets who should be in prison because there are outstanding warrants for their arrest, or dangerous criminals bent on harming others in public places, the proper use of AFR has a vital policing role. The police need guidance to ensure this vital anti-crime tool is used lawfully.”

What are the problems with AFR?

Facial recognition has attracted criticism from researchers and privacy advocates across the world, amid reports that authorities have been using the technology to track millions of Muslims in northwest China, as well as fears about race- and gender-related software failings.

And as GeekWire notes, “the face is such a big part of a person’s public identity”, which raises privacy issues.

Earlier this month, San Francisco became the first US city to ban the use of the technology for law enforcement purposes, over fears about its reliability and infringements of citizens’ liberties. In particular, the city’s municipal board highlighted concerns that face ID technology can exhibit racial bias.

Commercial face recognition software has repeatedly been shown to be “less accurate” about people with darker skin, and civil rights advocates claim that face-scanning can be used in “disturbingly targeted” ways, reports Gizmodo.

A recent example involved Amazon and its new technology Rekognition. The facial recognition tool, which Amazon sells to web developers, wrongly identified 28 members of the US Congress – of whom a disproportionate number were people of colour – as police suspects from mugshots, reports Reuters.

Why do these problems occur?

The reason for the racial false positives seems to be “not that the machines are inherently set against anyone, but that the people who are, in essence, teaching the programmes to identify features aren’t providing them with a diverse sample in the first place”, says USA Today.

A recent report on the technology by experts at Washington D.C.’s Georgetown Law school warns: “It doesn’t matter how good the machine is if it is still being fed the wrong figures – the wrong answers are still likely to come out.”

Gizmodo says that Massachusetts Institute of Technology (MIT) researchers believe solving the problem will require “hard limits” on how and when face-scanning can be used, in order to protect vulnerable communities. Even then, they warn, face recognition will be “impossible without addressing racism in the criminal justice system it will inevitably be used in”.

In the UK, the Metropolitan Police is deciding whether to roll out the technology on a wider scale following a two-year trial. However, the BBC reports that “at least three chances to assess how well the systems dealt with ethnicity had been missed by police over five years”.

Will the technology improve?

Though not yet perfect, accuracy rates are as high as 99.98% thanks to machine learning and improved neural networks, according to Pam Dixon, executive director of the World Privacy Forum. “That is a stunning advancement,” she told USA Today.

And facial recognition could be just the beginning. “There’s actually a whole bunch of other things that have similar properties to facial recognition that are equally pernicious, but don’t generate the same visceral reaction,” Cornell University information scientist Solon Barocas told GeekWire.

Margaret Mitchell, a senior scientist at Google Research and Machine Intelligence, added that these could include analysing a person’s walking gait, or the cadence of a person’s speech. “Machine learning is going to discover a whole bunch of new ways,” Barocas said.
