Canada to deploy AI that can identify suicidal thoughts

Programme will scan social media pages of 160,000 people


The Canadian government is launching a prototype artificial intelligence (AI) programme this month to “research and predict” suicide risks in the country.

The Canadian government partnered with AI firm Advanced Symbolics to develop the system, which aims to identify behavioural patterns associated with suicidal thoughts by scanning a total of 160,000 social media pages, reports Gizmodo.

The AI company’s chief scientist, Kenton White, told Vice News that scanning social media platforms for information provides a more accurate sample than using online surveys, which have seen a drop in response rates in recent years.


“We take everyone from a particular region and we look for patterns in how they’re talking,” White said.

According to a contract document for the pilot programme, reported by Engadget, the AI system scans for several categories of suicidal behaviour, ranging from self-harm to suicide attempts.

The government will use the data to assess which areas of Canada “might see an increase in suicidal behaviour”, the website says.

This can then be used to “make sure more mental health resources are in the right places when needed”, the site adds.


It’s not the first time AI has been used to identify and prevent suicidal behaviour.

In October, the journal Nature Human Behaviour reported that a team of US researchers had developed an AI programme that could recognise suicidal thoughts by analysing MRI brain scans.

The system was able to identify suicidal thoughts with a reported accuracy of 91%. However, Wired criticised the study's sample of just 34 participants as too small to show how the system would perform in the "broader population".

-

How the FCC’s ‘equal time’ rule works

How the FCC’s ‘equal time’ rule worksIn the Spotlight The law is at the heart of the Colbert-CBS conflict

-

What is the endgame in the DHS shutdown?

What is the endgame in the DHS shutdown?Today’s Big Question Democrats want to rein in ICE’s immigration crackdown

-

‘Poor time management isn’t just an inconvenience’

‘Poor time management isn’t just an inconvenience’Instant Opinion Opinion, comment and editorials of the day

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

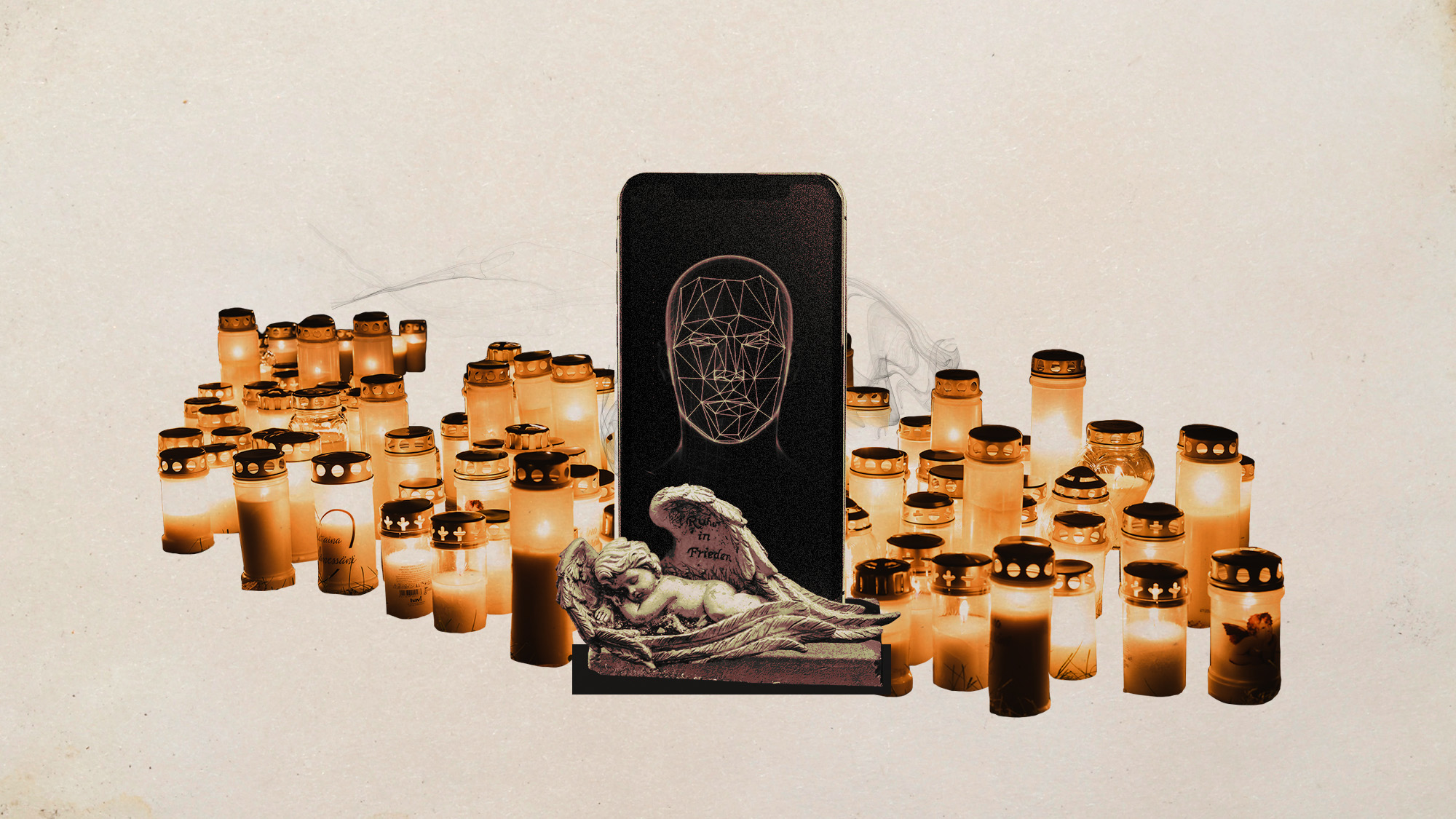

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical

-

The robot revolution

The robot revolutionFeature Advances in tech and AI are producing android machine workers. What will that mean for humans?

-

Separating the real from the fake: tips for spotting AI slop

Separating the real from the fake: tips for spotting AI slopThe Week Recommends Advanced AI may have made slop videos harder to spot, but experts say it’s still possible to detect them