AI ripe for exploitation by criminals, experts warn

Researchers call for lawmakers to help prevent hacks and attacks


Artificial intelligence (AI) could be used for nefarious purposes within as little as five years, according to a new report by experts.

The newly published report, The Malicious Use of Artificial Intelligence, written by 26 researchers from universities and tech firms, warns that easy access to "cutting-edge" AI could allow bad actors to exploit it.

The technology is still in its infancy and is largely unregulated. If laws governing AI development are not introduced soon, the researchers say, a major attack using the technology could occur as soon as 2022.


According to The Daily Telegraph, cybercriminals could use the tech to scan a target’s social media presence “before launching ‘phishing’ email attacks to steal personal data or access sensitive company information”.

Terrorists could also use AI to hack into driverless cars, the newspaper adds, or hijack “swarms of autonomous drones to launch attacks in public spaces”.

The new report calls for lawmakers to work with tech experts “to understand and prepare for the malicious use of AI”, BBC News says.

The authors are also urging firms to acknowledge that AI “is a dual-use technology” that poses both benefits and dangers to society, and to adopt practices “from disciplines with a longer history of handling dual-use risks”.


Co-author Miles Brundage, of the Future of Humanity Institute at Oxford University, insists, however, that people shouldn't abandon AI development.

“The point here is not to paint a doom-and-gloom picture, there are many defences that can be developed and there’s much for us to learn,” Brundage told The Verge.

“I don’t think it’s hopeless at all, but I do see this paper as a call to action,” he added.

-

Crisis in Cuba: a ‘golden opportunity’ for Washington?

Crisis in Cuba: a ‘golden opportunity’ for Washington?Talking Point The Trump administration is applying the pressure, and with Latin America swinging to the right, Havana is becoming more ‘politically isolated’

-

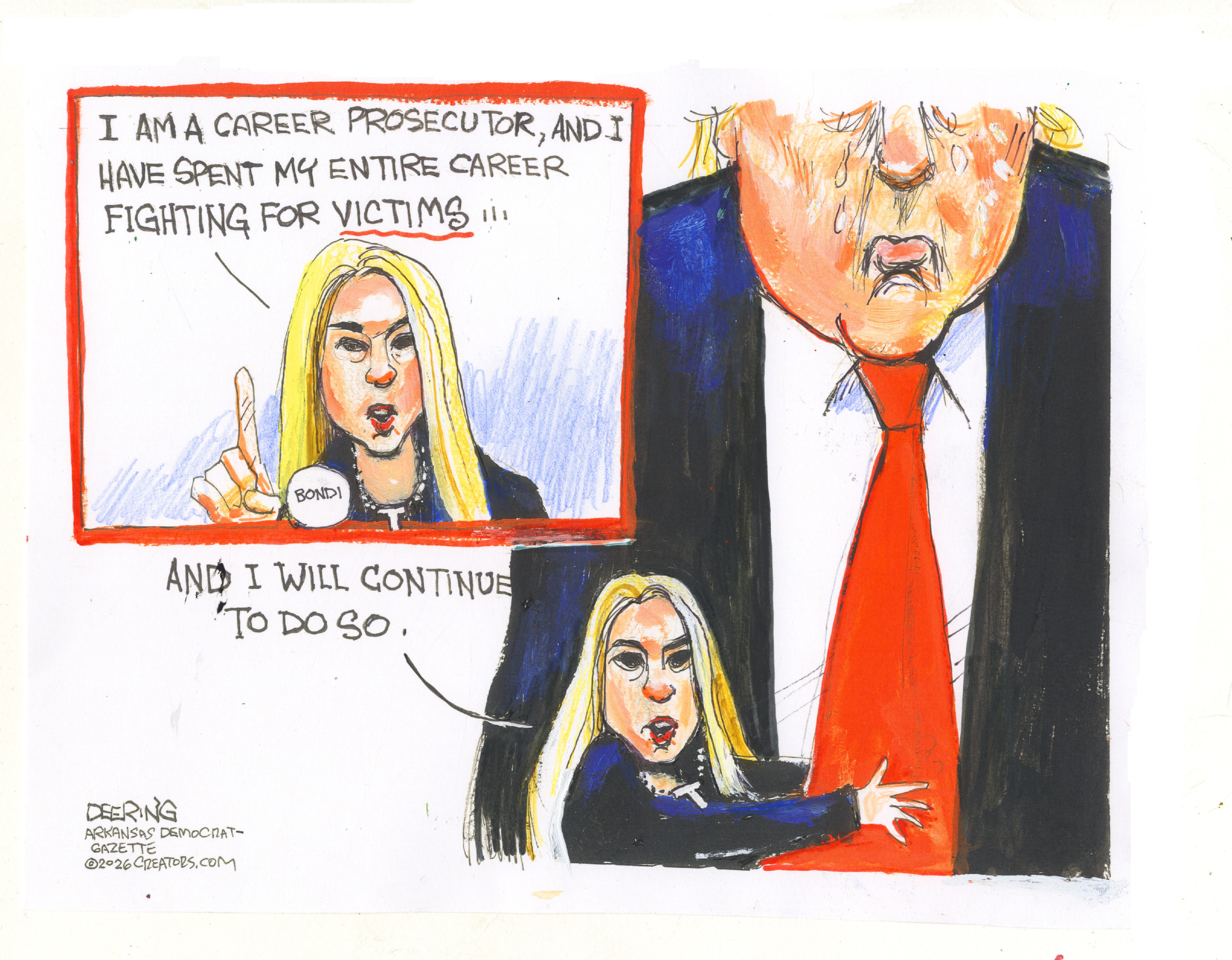

5 thoroughly redacted cartoons about Pam Bondi protecting predators

5 thoroughly redacted cartoons about Pam Bondi protecting predatorsCartoons Artists take on the real victim, types of protection, and more

-

Palestine Action and the trouble with defining terrorism

Palestine Action and the trouble with defining terrorismIn the Spotlight The issues with proscribing the group ‘became apparent as soon as the police began putting it into practice’

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

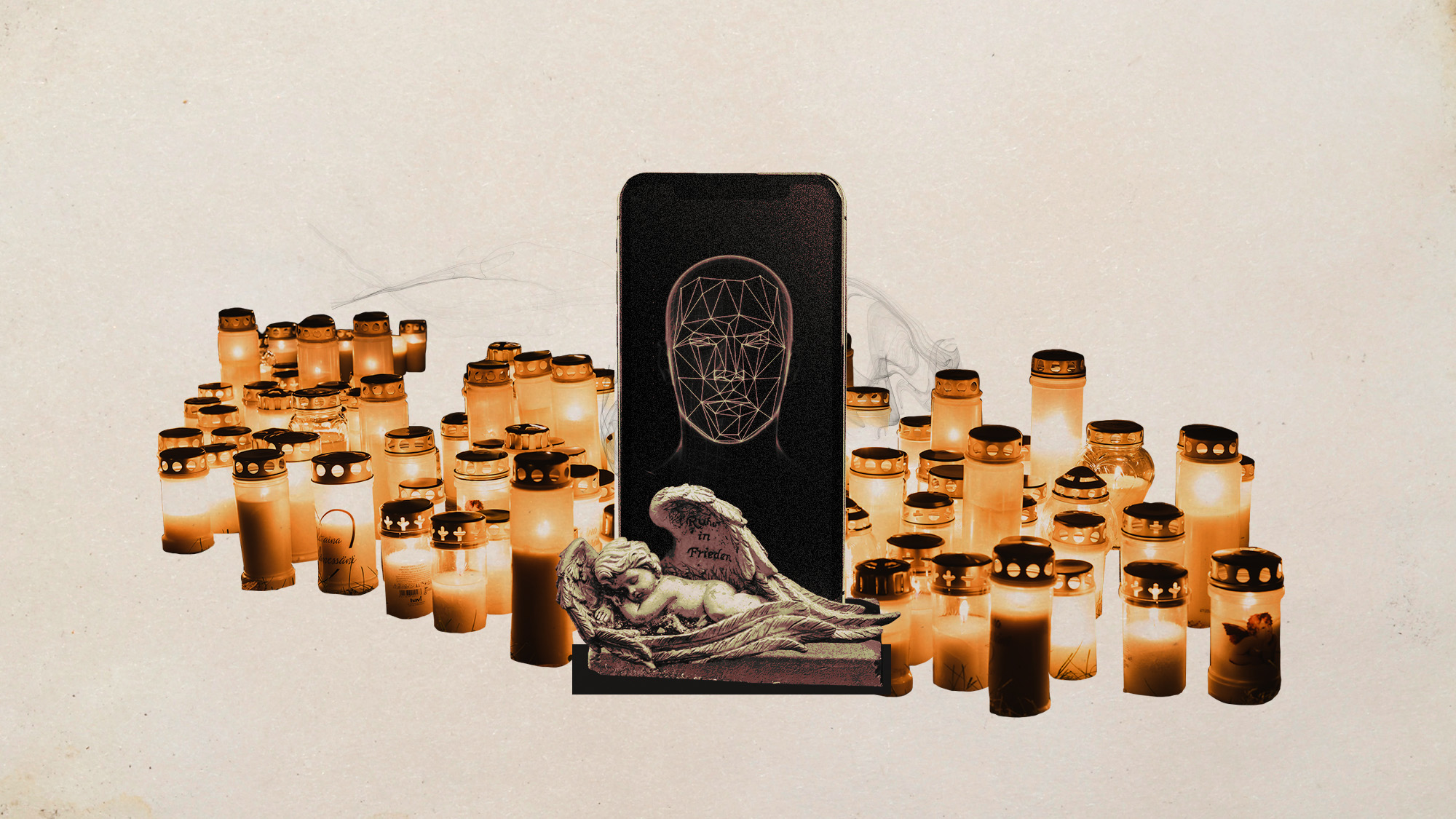

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical

-

The robot revolution

The robot revolutionFeature Advances in tech and AI are producing android machine workers. What will that mean for humans?

-

Separating the real from the fake: tips for spotting AI slop

Separating the real from the fake: tips for spotting AI slopThe Week Recommends Advanced AI may have made slop videos harder to spot, but experts say it’s still possible to detect them