YouTube says it'll try to stop recommending so many conspiracy theory videos


YouTube says it will adjust its algorithm to crack down on conspiracy theory videos that may be harmful to users.

In a blog post Friday, the site said it will "begin reducing recommendations of borderline content and content that could misinform users in harmful ways," such as flat Earth theories or 9/11 conspiracy theory videos. YouTube won't actually remove anything, but the algorithm change will affect how often such content pops up as a recommendation for a user or in the "next up" tab after they finish watching a video.

YouTube doesn't appear to be ruling out recommending these videos entirely, though: the site says only that it's going to "limit" the recommendations, and it notes that "these videos may appear in recommendations for channel subscribers and in search results."


Still, the announcement was seen as a necessary move for the company, especially after a BuzzFeed News investigation published Thursday showed how YouTube can direct users to extremist or conspiracy theory videos after they've watched legitimate news. For example, watching a BBC News video of a speech by House Speaker Nancy Pelosi (D-Calif.) eventually leads a user to videos about the QAnon conspiracy theory. Charlie Warzel, one of the reporters behind the BuzzFeed report, wrote Friday that YouTube's algorithm change seems "like a really meaningful step forward," while noting that "no credit is due until real people see meaningful changes."


Brendan worked as a culture writer at The Week from 2018 to 2023, covering the entertainment industry, including film reviews, television recaps, awards season, the box office, major movie franchises and Hollywood gossip. He has written about film and television for outlets including Bloody Disgusting, Showbiz Cheat Sheet, Heavy and The Celebrity Cafe.

-

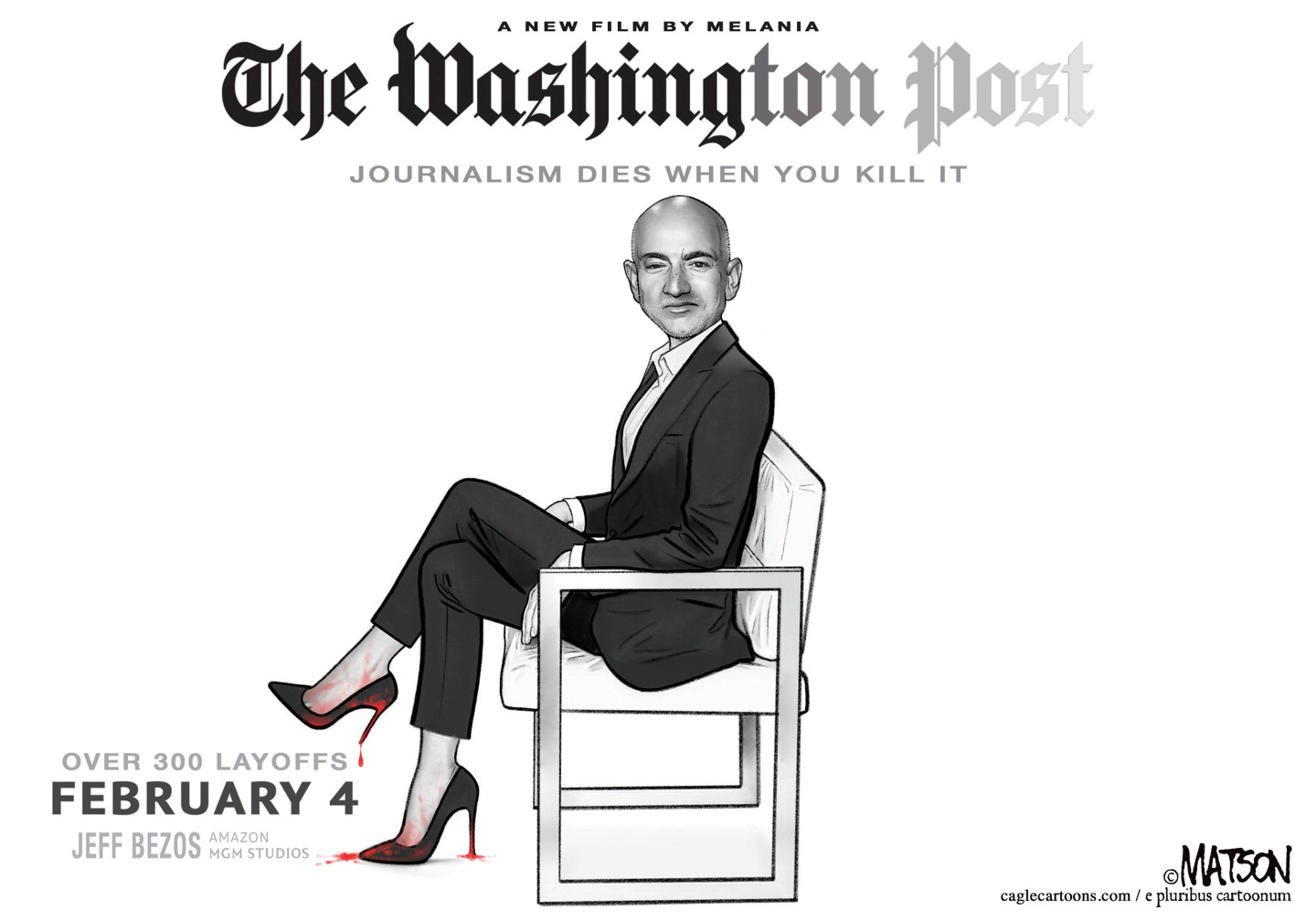

5 cinematic cartoons about Bezos betting big on 'Melania'

5 cinematic cartoons about Bezos betting big on 'Melania'Cartoons Artists take on a girlboss, a fetching newspaper, and more

-

The fall of the generals: China’s military purge

The fall of the generals: China’s military purgeIn the Spotlight Xi Jinping’s extraordinary removal of senior general proves that no-one is safe from anti-corruption drive that has investigated millions

-

Why the Gorton and Denton by-election is a ‘Frankenstein’s monster’

Why the Gorton and Denton by-election is a ‘Frankenstein’s monster’Talking Point Reform and the Greens have the Labour seat in their sights, but the constituency’s complex demographics make messaging tricky

-

Nobody seems surprised Wagner's Prigozhin died under suspicious circumstances

Nobody seems surprised Wagner's Prigozhin died under suspicious circumstancesSpeed Read

-

Western mountain climbers allegedly left Pakistani porter to die on K2

Western mountain climbers allegedly left Pakistani porter to die on K2Speed Read

-

'Circular saw blades' divide controversial Rio Grande buoys installed by Texas governor

'Circular saw blades' divide controversial Rio Grande buoys installed by Texas governorSpeed Read

-

Los Angeles city workers stage 1-day walkout over labor conditions

Los Angeles city workers stage 1-day walkout over labor conditionsSpeed Read

-

Mega Millions jackpot climbs to an estimated $1.55 billion

Mega Millions jackpot climbs to an estimated $1.55 billionSpeed Read

-

Bangladesh dealing with worst dengue fever outbreak on record

Bangladesh dealing with worst dengue fever outbreak on recordSpeed Read

-

Glacial outburst flooding in Juneau destroys homes

Glacial outburst flooding in Juneau destroys homesSpeed Read

-

Scotland seeking 'monster hunters' to search for fabled Loch Ness creature

Scotland seeking 'monster hunters' to search for fabled Loch Ness creatureSpeed Read