Siri, suicide, and the ethics of intervention

Does Siri's new suicide-prevention feature have a downside?


Siri can tell you where to find the nearest movie theater or Burger King, and, until recently, the iPhone voice assistant could also point you to the closest bridge to leap from. Before a recent update, if you told Siri, "I want to kill myself," she would run a web search. If you told her, "I want to jump off a bridge," she would return a list of the closest bridges.

Now, nearly two years after Siri's launch, Apple has updated the voice assistant to thwart suicidal requests. According to John Draper, director of the National Suicide Prevention Lifeline Network, Apple worked with the organization to help Siri pick up on keywords to better identify when someone is planning to commit suicide. When Siri recognizes these words, she is programmed to say: "If you are thinking about suicide, you may want to speak with someone." She then asks if she should call the National Suicide Prevention Lifeline. If the person doesn't respond within a short period of time, instead of returning a list of the closest bridges, she'll provide a list of the closest suicide-prevention centers.

This update has been hailed by many as a tremendous and potentially life-saving improvement, especially when compared to how long it used to take Siri to provide help for suicidal iPhone users in need. Last year, Summer Beretsky at PsychCentral tried out Siri's response to signs of suicide and depression and found it took Siri more than 20 minutes to even get the number for a suicide prevention hotline. "If you're feeling suicidal," Beretsky said, "you might as well consult a freshly-mined chunk of elemental silicon instead."

So it's clear why Apple is receiving praise for these changes. The company has recognized that "there's something about technology that makes it easier to confess things we'd otherwise be afraid to say out loud," says S.E. Smith at XOJane. We share intimate things with our smartphones that we might never tell even our friends, so it's critical that our technology can step in and provide help the way a loved one would. "Apple's decision to take [suicide prevention] head-on is a positive sign," Smith adds. "We can only hope that future updates will include more extensive resources and services for users turning to their phones for help during the dark times of their souls."

Siri's suicide-detection skills, however, are rather easy to circumvent. As Smith reports, if you tell Siri "I don't want to live anymore," she still responds "Ok, then." And as Bianca Bosker notes at The Huffington Post, you can still search for guns to buy, which some people would say is the way it should be. We may want Siri to stop people from searching for ways to hurt themselves or others, says Bosker, but that raises an underlying ethical question: do we want her interfering with our right to access information or with our personal decisions, such as legally buying a gun for target practice?

The issue then becomes one of free will and moral decision-making. "When Siri provides suicide-prevention numbers instead of bridge listings, the program's creators are making a value judgment on what is right," says Jason Bittel at Slate. Are we really okay with Siri making moral decisions for us, asks Bittel, especially when her "role as a guardian angel is rather inconsistent"? Siri, for instance, will still gladly direct you to the nearest escort service when you ask for a prostitute, and when asked for advice on the best place to hide a body, "she instantly starts navigating to the closest reservoir," Bittel adds.

While it's great that Siri may be saving people's lives, we may be heading down a slippery slope regarding what we can and cannot search. "There are all sorts of arguments for why the internet must not have a guiding hand — freedom of speech, press, and protest chief among them," says Bittel. "If someone has to make decisions based on what's 'right,' who will we trust to be that arbiter?" Man or machine?

Emily Shire is chief researcher for The Week magazine. She has written about pop culture, religion, and women and gender issues at publications including Slate, The Forward, and Jewcy.

-

Health insurance: Premiums soar as ACA subsidies end

Health insurance: Premiums soar as ACA subsidies endFeature 1.4 million people have dropped coverage

-

Anthropic: AI triggers the ‘SaaSpocalypse’

Anthropic: AI triggers the ‘SaaSpocalypse’Feature A grim reaper for software services?

-

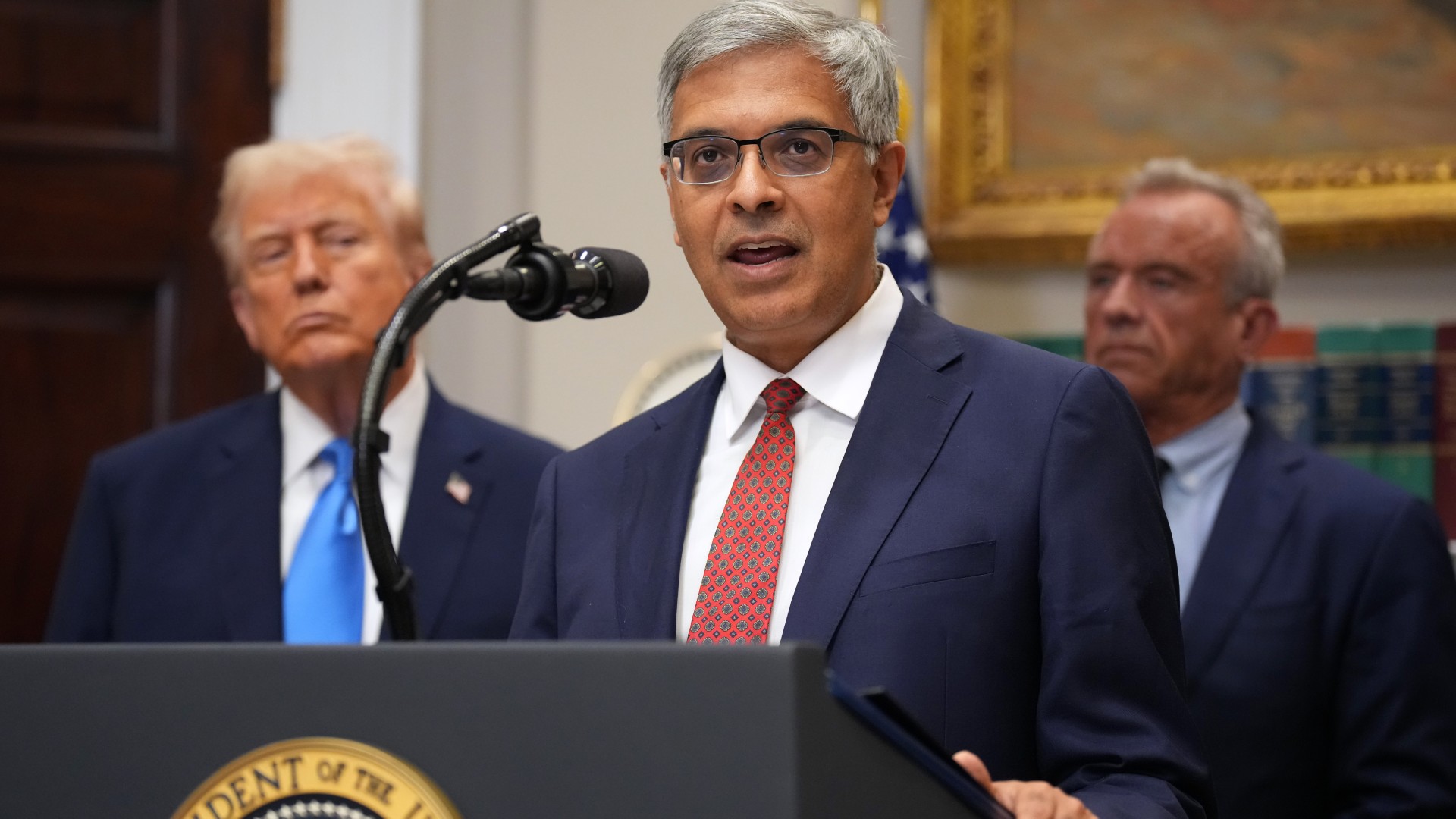

NIH director Bhattacharya tapped as acting CDC head

NIH director Bhattacharya tapped as acting CDC headSpeed Read Jay Bhattacharya, a critic of the CDC’s Covid-19 response, will now lead the Centers for Disease Control and Prevention