Why 'deepfakes' aren't the problem

Our shared sense of reality has already been fatally undermined

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com


There sits Mark Zuckerberg, staring into a camera, proclaiming that the secret to controlling the future is controlling the stolen data of billions. A shocking declaration for the head of one of the world's most important and powerful companies to make. The only problem? Realistic as the video appears, it's fake — or, as these types of videos have come to be known, a deepfake.

The clip, shared on Instagram, came from the artists Bill Posters and Daniel Howe and was produced as part of an art exhibit called Spectre that recently premiered in the U.K. It did exactly what art should do: it poked not only at Facebook's power, but also at the company's own moderation policies, which state that it will not take down footage that is fake. Recently, doctored footage of Nancy Pelosi, edited to make her seem slow and her speech slurred, was circulated on Facebook, and the company refused to take it down, claiming that such content did not violate its guidelines.

Deepfakes are held up as a terrifying example of the sinister power of technology. They are the result of sophisticated software that allows creators to make it appear that someone said something they never did. Last year, there was a clip that purported to show Barack Obama but was in fact the voice of comedian and director Jordan Peele, synced to Obama's face. At a glance, it was pretty believable. Just 18 months later, the technology has progressed at a frightening pace; now, you can simply type in what you want someone to say, and software can generate both believable video and audio of almost anyone saying almost anything.


Deepfakes are unnerving because of what they augur: a future in which it may become impossible to trust anything we see. But for all the panic, deepfakes themselves may be something of a red herring. Rather than simply being the result of a new technological problem, our current political moment is the product of how a broader set of material circumstances interacts with the new world of digital technology.

In one sense, deepfakes aren't entirely new because image manipulation isn't new. Photo editing, camera trickery, and arguably even the simple existence of things like fiction and satire have always made us skeptical of what we see. As each new thing emerges, we gain a media literacy in response and learn to look critically at what is in front of us.

But deepfakes don't just seem to threaten our relationship to the image; they also poke at something discomfiting about the idea of shared reality. Everything from political consensus to social cohesion depends on the idea that we are all roughly talking about the same thing. Deepfakes join the turn toward fake news and the mainstreaming of conspiracy theories, which make it seem there are competing worlds in play: one in which, say, climate change and vaccine science are real, and another in which they are not. What a fake but mostly convincing video of someone speaking does is cement the sense that the facts that make up our shared sense of reality have become unstable, and that instead of building a worldview out of evidence, we build it out of assumption and bias and simply seek out things that support what we already believe.

Yet, oddly, perhaps this very worrying idea is precisely why deepfakes aren't as terrifying as they initially appear, or at least why the worry they represent isn't entirely new. We have, after all, always had an ideological relationship to reality. We often think of the progression of history as an accumulation of facts, but in the case of, say, the rise of feminism or the criticisms of colonialism, the "evidence" at their base was there all along; what was needed was to reshape how we relate to the world. Ideology has always been a method of structuring reality.


It is thus tempting to say that deepfakes are just an extension of this reality and are nothing to worry about. But this isn't quite true, either. Instead, the emergence of each new technology has particular effects on our understanding of the world. The mere existence of technology to manipulate images and video is itself enough to sow doubt along ideological lines.

Consider: In the early 2010s, news broke that the now-late Toronto mayor Rob Ford had been seen on video smoking crack. Almost immediately, skepticism arose that the video might have been doctored, despite the fact that it was viewed by two reporters who had seen Ford up close hundreds of times. What mattered was not plausibility (whether some random person could have believably created a video of a big city mayor) but plausible deniability: the simple possibility that one could in theory manipulate video meant that, if you were inclined to support the populist mayor, the video must have been false.

Deepfakes are thus neither some novel terror nor insignificant. Instead, what they highlight is the role media plays in our current politics. The division between left and right isn't a disagreement about policy; it's about competing understandings of what reality actually is. And what digital technology of all kinds allows us to do is form communities around these realities in Facebook groups, on Twitter, and on Instagram, building a mediascape for ourselves composed of what we want to see and what we want to believe.

In that sense, it is the broader material effect of digital media that is the key change: not just deepfakes or Photoshop, but the breakdown of barriers to broadcasting and publishing that in turn allows "realities" to form, replete with their own communities, media, memes, and in-jokes. Deepfakes themselves may not challenge our reality, but that is because at this moment in our history, full of digital manipulation, political polarization, and a willingness to stay ensconced in one's own communities, there's not that much left to be challenged.

Navneet Alang is a technology and culture writer based out of Toronto. His work has appeared in The Atlantic, New Republic, Globe and Mail, and Hazlitt.

-

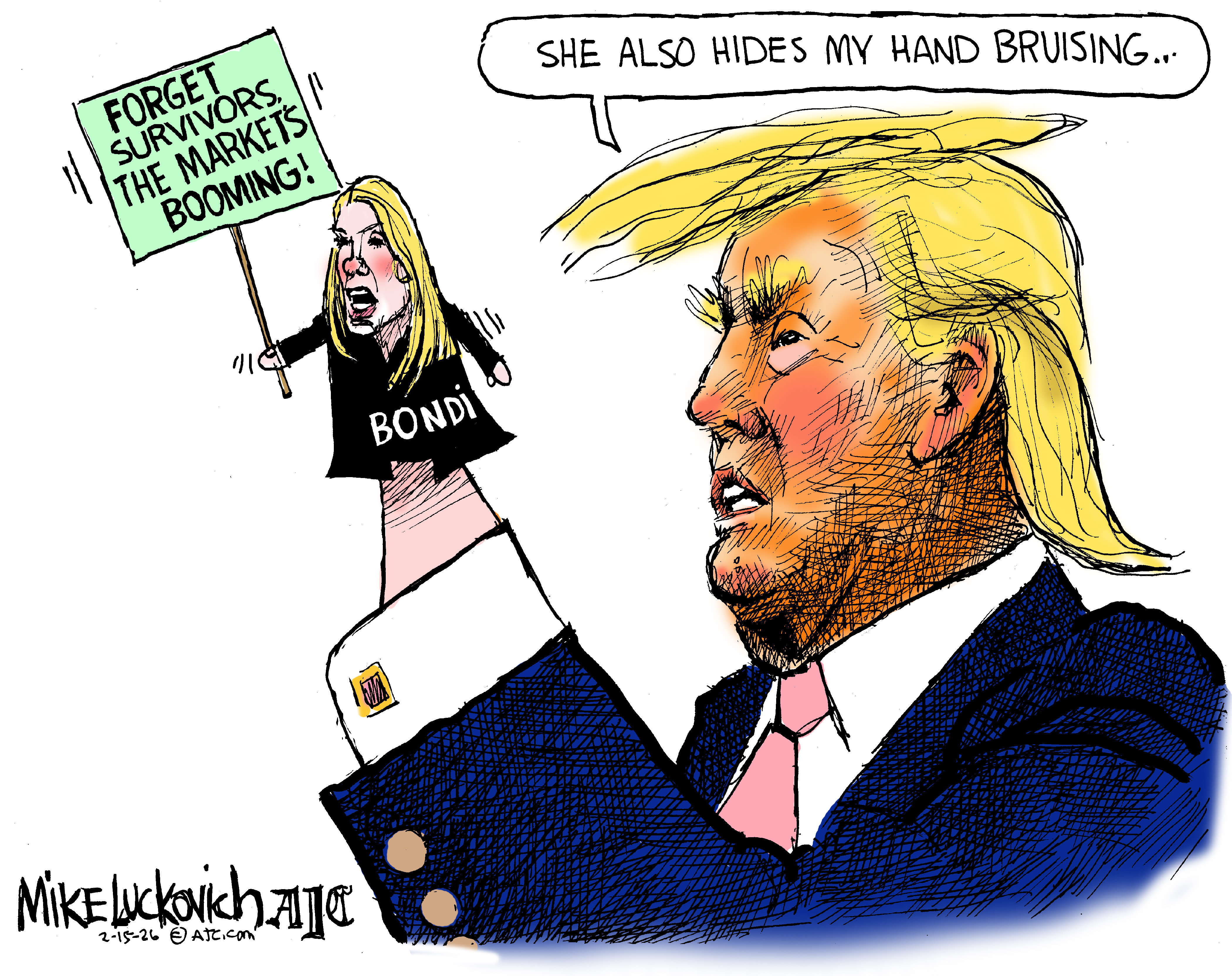

Political cartoons for February 15

Political cartoons for February 15Cartoons Sunday's political cartoons include political ventriloquism, Europe in the middle, and more

-

The broken water companies failing England and Wales

The broken water companies failing England and WalesExplainer With rising bills, deteriorating river health and a lack of investment, regulators face an uphill battle to stabilise the industry

-

A thrilling foodie city in northern Japan

A thrilling foodie city in northern JapanThe Week Recommends The food scene here is ‘unspoilt’ and ‘fun’

-

The billionaires’ wealth tax: a catastrophe for California?

The billionaires’ wealth tax: a catastrophe for California?Talking Point Peter Thiel and Larry Page preparing to change state residency

-

Bari Weiss’ ‘60 Minutes’ scandal is about more than one report

Bari Weiss’ ‘60 Minutes’ scandal is about more than one reportIN THE SPOTLIGHT By blocking an approved segment on a controversial prison holding US deportees in El Salvador, the editor-in-chief of CBS News has become the main story

-

Has Zohran Mamdani shown the Democrats how to win again?

Has Zohran Mamdani shown the Democrats how to win again?Today’s Big Question New York City mayoral election touted as victory for left-wing populists but moderate centrist wins elsewhere present more complex path for Democratic Party

-

Millions turn out for anti-Trump ‘No Kings’ rallies

Millions turn out for anti-Trump ‘No Kings’ ralliesSpeed Read An estimated 7 million people participated, 2 million more than at the first ‘No Kings’ protest in June

-

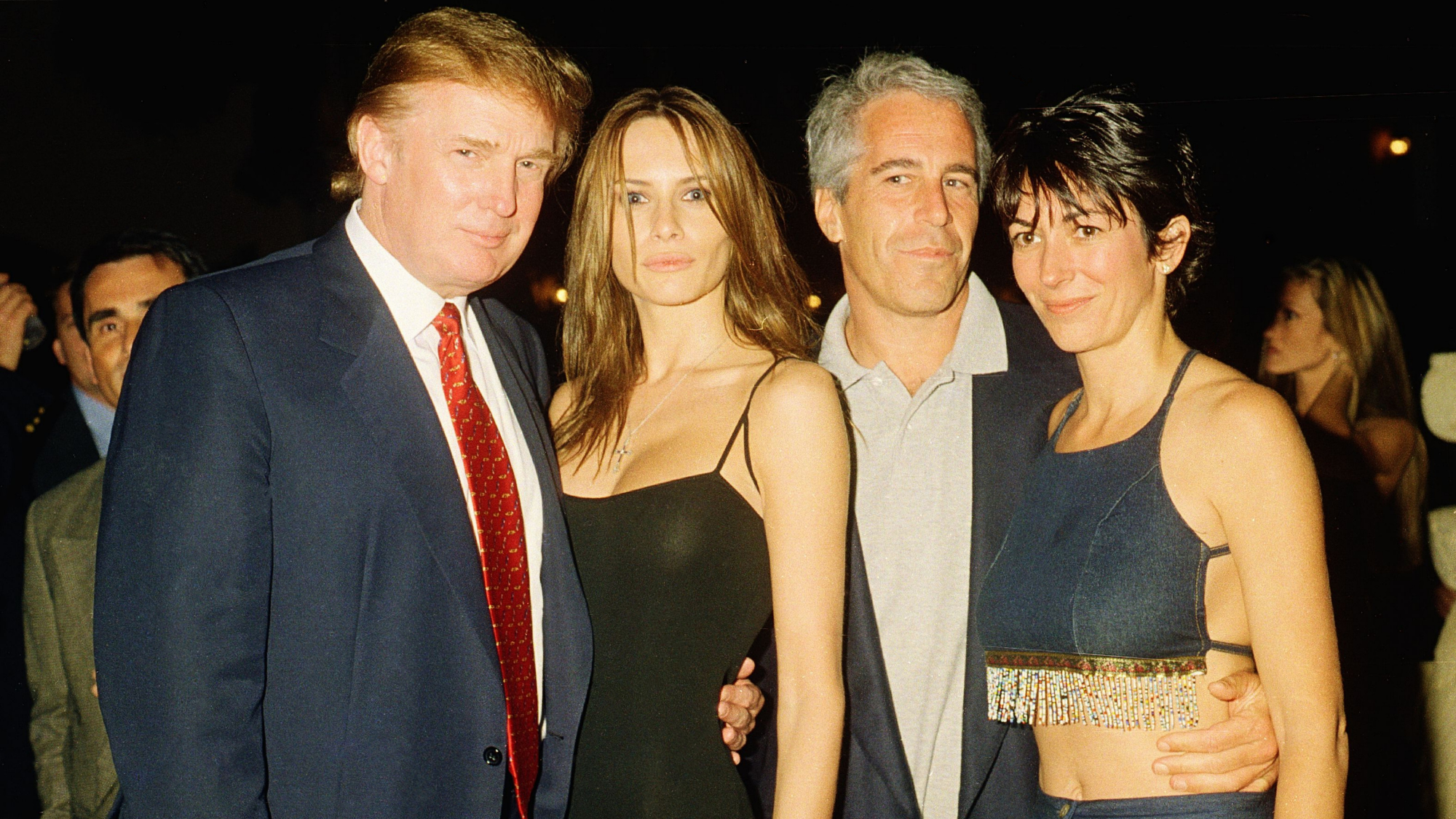

Ghislaine Maxwell: angling for a Trump pardon

Ghislaine Maxwell: angling for a Trump pardonTalking Point Convicted sex trafficker's testimony could shed new light on president's links to Jeffrey Epstein

-

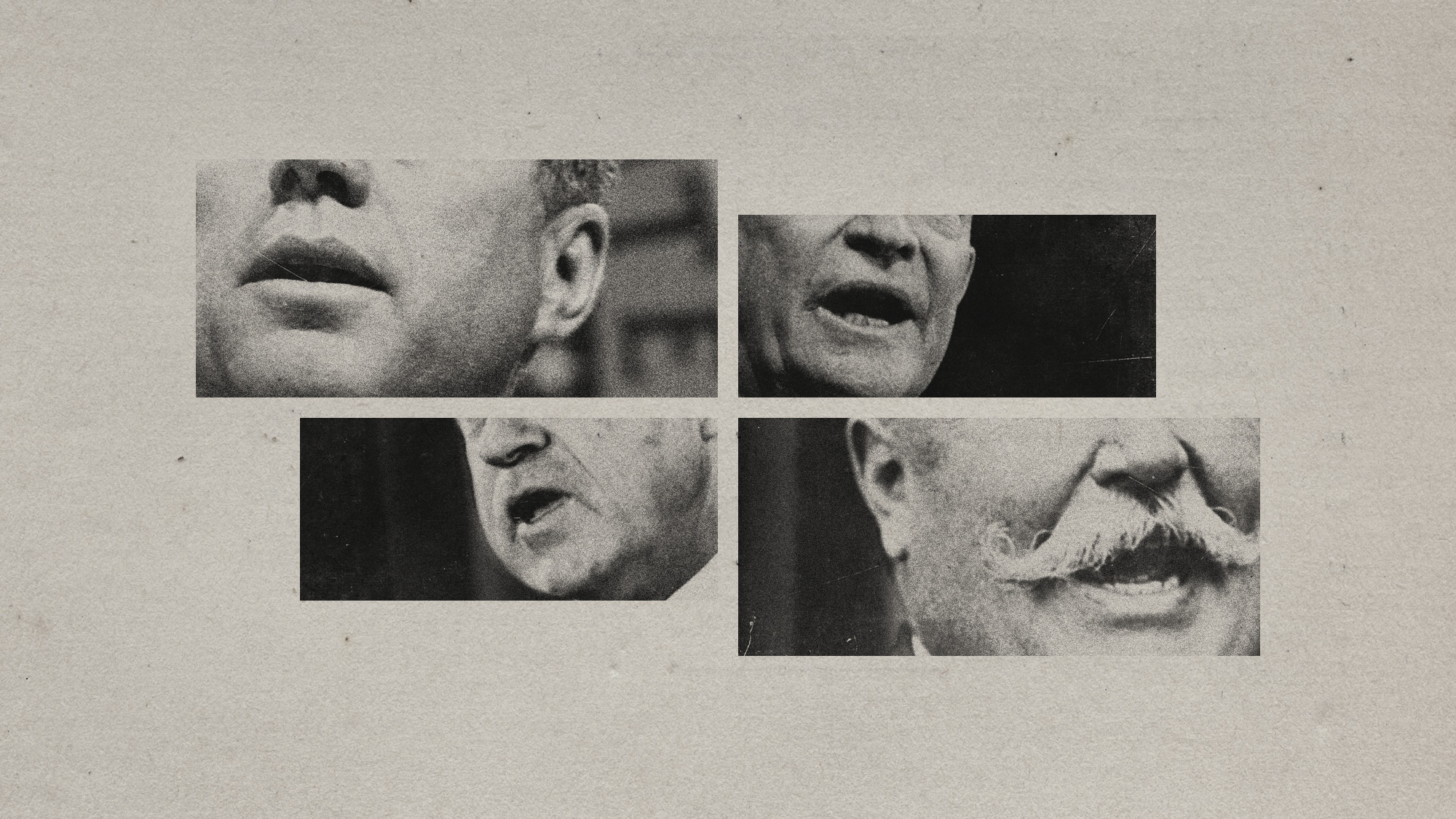

The last words and final moments of 40 presidents

The last words and final moments of 40 presidentsThe Explainer Some are eloquent quotes worthy of the holders of the highest office in the nation, and others... aren't

-

The JFK files: the truth at last?

The JFK files: the truth at last?In The Spotlight More than 64,000 previously classified documents relating the 1963 assassination of John F. Kennedy have been released by the Trump administration

-

'Seriously, not literally': how should the world take Donald Trump?

'Seriously, not literally': how should the world take Donald Trump?Today's big question White House rhetoric and reality look likely to become increasingly blurred