How to bring back the old internet

Section 230 has outlived its usefulness


People who grew up with the internet of the 1990s probably remember forums — those clunky, lo-fi spaces where people came together to argue about cars, cycling, video games, cooking, or a million other topics. They had their problems, but in retrospect the internet of those days felt like a magical land of possibility, not a place for organizing pogroms.

What killed most forums is the same thing that killed local journalism across the country, and has turned the internet into a cesspool of abuse, racism, and genocidal propaganda: corporate monopolies. A few giants, led by Facebook and Google, now command an overwhelming share of online activity. What enables the modern online hellscape is Section 230, an obscure legal provision that protects internet companies from certain legal liability, and allows them to grow as large as they have.

It's time to reconsider this liability shield.


Section 230 is part of the Communications Decency Act, and it stipulates that content-hosting companies like Facebook cannot be considered the publisher of that content, and so can't be sued if that content violates the law. As Rachel Lerman explains at the Washington Post, the intent was actually to incentivize website moderation: when one company was sued and defended itself by noting it did no moderation whatsoever, the bill drafters figured this was a bad incentive, and so carved out explicit protection so websites could feel free to moderate without opening themselves up to liability. It seemingly worked all right in the early days of the internet. "The idea was that people would use whichever sites suited them and had rules they agreed with," writes Lerman.

But as Steve Randy Waldman argues in a brilliant article, now that a few corporations have consolidated effective control over the internet, Section 230 doesn't work anything like this. Instead it provides an excuse for the big platforms to do very little moderation, which is a requirement for them to exist because they host such a gigantic firehose of content. Moreover, hate speech and political extremism are at the core of the business model of Facebook and YouTube, because inflammatory content is a very reliable way of producing "engagement" and therefore profits.

The side effects of this business model are bloody indeed. Facebook has been used to coordinate genocide and ethnic cleansing, and a study in Germany found that heavy use was associated with a marked increase in attacks on refugees. Elsewhere, the New Zealand government recently published an investigation into the Christchurch terrorist massacre, and found that the culprit was radicalized on YouTube. As Josh Marshall writes, "Facebook is [akin] to a fantastically profitable nuclear energy company whose profitability is based on dumping the waste on the side of the road and accepting frequent accidents and explosions as inherent to the enterprise."

On the other hand, when platforms do moderate, it is consistently half-hearted or worse — banning some but not all Holocaust deniers here, labeling President Trump's tweets as misleading there, but ultimately just trying to appease the loudest and best-organized interest groups as cheaply as possible. Often that means boosting conservative propaganda, no matter how flagrantly deceptive — indeed, Facebook has both tweaked its algorithm to stop punishing conservatives for publishing misinformation, and rigged it to boost right-wing posts while downplaying left-wing ones, apparently in part to appease the sensitive feelings of Ben Shapiro.


It's the worst of both worlds: the most commonly used online spaces are infested with Nazis and pandemic deniers, while massive corporations exercise dictatorial control over what people are allowed to say and see.

This wretched situation strikes at the heart of the early understanding of why the internet was good, and the moral self-justification of the big platforms. By enabling an unprecedented amount of communication — where any people on Earth who are hooked in can talk to each other instantaneously — it was thought the internet would usher in a new age of rational dialogue and human understanding. Free speech was an unqualified good, it was thought, and more speech was by definition better. This ideology allowed the platform behemoths to pose as somehow being on the side of the impoverished masses. "Zuckerberg believes peer-to-peer communications will be responsible for redistributing global power, making it possible for any individual to access and share information," reads a Wired profile of Facebook's founder back in 2016, neatly stating the precise opposite of truth.

It's easy to look back at those days and think we were fools, and undoubtedly there was some naive techno-optimism behind such sentiments. But the early internet genuinely did have many of these characteristics. I know because I was there. We just didn't realize how fragile those early communities were, what made them actually work, and how quickly they would die once capitalism got its teeth into them.

And that brings me back to the forums and blog comment sections of old. The reason these worked as well as they did was not just that people could post on them from anywhere on the globe (though that of course was a precondition). The reason was moderation. Participants quickly realized that if there weren't clear rules about what could be posted, the place would quickly be overrun by trolls or straight-up Nazis, and developed quality standards they could enforce through warnings or bans. That in turn implies a modest scale, because moderating requires work, which is expensive and even to this day impossible to automate well. Mods of course could be abusive, as any person with power can be. But they were key to the functioning of the early internet — and the tiny size of most forums meant that even the bad ones couldn't destabilize entire nations.

The major lesson of the past decade of the big platforms' cancerous growth across the globe is this: Free speech, like any public good, requires regulation. As sociologist Zeynep Tufekci argues, the mere ability to express oneself online means little for free speech today; what matters is the ability to be heard. It is "attention, not speech, that is restricted and of limited quantity that the gatekeepers can control and allocate," she writes. The big platforms have more effective control over speech than any entities in human history, and they use that power to inflame polarization, extremism, conspiracy theories, and genocidal racism, because that rakes in the most short-term profit. They need to be cut down to size, and reforming Section 230 is one way to do it. (As an aside, this is not meant as a replacement for more traditional antitrust. It would still be a good idea to force the Big Tech barons to sell off their lesser companies, like making Facebook divest itself of Instagram.)

So what do we do? I am not a legal expert, and of course I can't write out a whole new law here. But let me suggest some broad principles. First, as Waldman suggests, there should still be common carriers like internet service providers that would not be liable for the content disseminated over their infrastructure. But they would be required to identify the people or companies transmitting over their services.

Second, all companies that host online content will be considered publishers, but with some distinctions. Those that rely on automated moderation — that is, the big platforms — will be considered strictly liable for everything. However, ones that can prove they made a good-faith moderation effort involving a real person will get the benefit of the doubt, basically as they currently do. So if Facebook's crummy algorithm misses a terrorist cell organizing on its platform for months, it would be criminally liable. But if a celebrity threatens a news website with defamation for a comment posted on an article, the publisher will be able to defend itself by pointing to a hand moderation process (for example, if comments are held for review prior to being published, and actually examined).

The overall point here would be to force the big platforms to undertake the expensive moderation required to actually police their platforms, while simultaneously carving out a space for smaller spaces to operate with some 230-style protections.

Such a proposal would go well with restrictions on abusive lawsuits. As First Amendment lawyer Dan Horwitz points out, monied interests are already suppressing online speech all the time by filing, or threatening to file, lawsuits against people or companies without the means to defend themselves. Even those with money and legal insurance aren't immune — billionaire (and Facebook board member) Peter Thiel, for instance, destroyed the journalism website Gawker by filing a blizzard of lawsuits on ginned-up pretexts until one stuck and bankrupted the company. A federal "anti-SLAPP" (strategic lawsuit against public participation) law that made such behavior itself illegal and liable for damages would make the legal system more fair, and less the plaything of litigious rich jerks.

A good indicator for whether reformers are on the right track will be Facebook's reaction to any proposed changes. With the previous consensus around Section 230 crumbling, the company has expressed rhetorical support for regulatory adjustments. But what it for sure wants is weak new requirements that it could easily handle but smaller upstart companies could not — effectively entrenching its dominance even further. If Facebook is for it, it is probably bad, and vice versa.

There are sure to be many objections to this proposal. For instance, there was a slight rollback of Section 230 a couple years ago in the form of the SESTA/FOSTA Act, which removed protection for posts about sex trafficking. The idea supposedly was to protect women from exploitation, but it seems to have backfired. Online platforms like Reddit and Craigslist had provided a quasi-legitimate way for workers to conduct business and monitor potential clients, but SESTA/FOSTA effectively forced those pages to shut down whether there was evidence of trafficking or not. Just as many predicted at the time, doing so seems to have driven women back underground or onto the street, where they are more vulnerable to abuse or traffickers (and in the process created a movement of sex worker organizing).

I am definitely in favor of making sex work as safe as possible. But the problem here is not so much Section 230 as the fact that prostitution is illegal, and that police virtually always prosecute the sex workers instead of the johns. The pre-SESTA/FOSTA world was far from ideal, and there was indeed some trafficking on those sites. Sex trafficking would be much easier to deal with if prostitution were legalized and regulated, so that women would not fear going to the authorities to protect themselves.

Make no mistake, amending Section 230 in this way would be a hugely disruptive change — basically blowing up most of the global system of publishing websites as we know it. Facebook, Twitter, YouTube, and all the other big platforms would have to drastically increase the amount of moderation they are doing. The likely endgame for those platforms is that they would end up like network TV — relatively bland and boring, purged of anything seriously controversial. But they would also probably shrink drastically, because it would be more expensive to moderate heavily, and because political discussion would migrate to smaller, more curated websites with paid teams of moderators — or volunteer hobbyists, like how most blogs and forums used to work.

The early promise of the internet has not worked out. But that is because ruthless capitalist profiteers have been allowed to consolidate control over so much of online communication. The few genuinely great early websites that still survive are always regulated, like Wikipedia, which has both moderation and a quasi-constitution establishing rules for what can be published and cited. We could rediscover that early promise by forcing Facebook and its ilk to take responsibility for the civilization-wrecking nightmare they have inflicted on humanity.

Ryan Cooper is a national correspondent at TheWeek.com. His work has appeared in the Washington Monthly, The New Republic, and the Washington Post.

-

What is the endgame in the DHS shutdown?

What is the endgame in the DHS shutdown?Today’s Big Question Democrats want to rein in ICE’s immigration crackdown

-

‘Poor time management isn’t just an inconvenience’

‘Poor time management isn’t just an inconvenience’Instant Opinion Opinion, comment and editorials of the day

-

Bad Bunny’s Super Bowl: A win for unity

Bad Bunny’s Super Bowl: A win for unityFeature The global superstar's halftime show was a celebration for everyone to enjoy

-

The billionaires’ wealth tax: a catastrophe for California?

The billionaires’ wealth tax: a catastrophe for California?Talking Point Peter Thiel and Larry Page preparing to change state residency

-

Bari Weiss’ ‘60 Minutes’ scandal is about more than one report

Bari Weiss’ ‘60 Minutes’ scandal is about more than one reportIN THE SPOTLIGHT By blocking an approved segment on a controversial prison holding US deportees in El Salvador, the editor-in-chief of CBS News has become the main story

-

Has Zohran Mamdani shown the Democrats how to win again?

Has Zohran Mamdani shown the Democrats how to win again?Today’s Big Question New York City mayoral election touted as victory for left-wing populists but moderate centrist wins elsewhere present more complex path for Democratic Party

-

Millions turn out for anti-Trump ‘No Kings’ rallies

Millions turn out for anti-Trump ‘No Kings’ ralliesSpeed Read An estimated 7 million people participated, 2 million more than at the first ‘No Kings’ protest in June

-

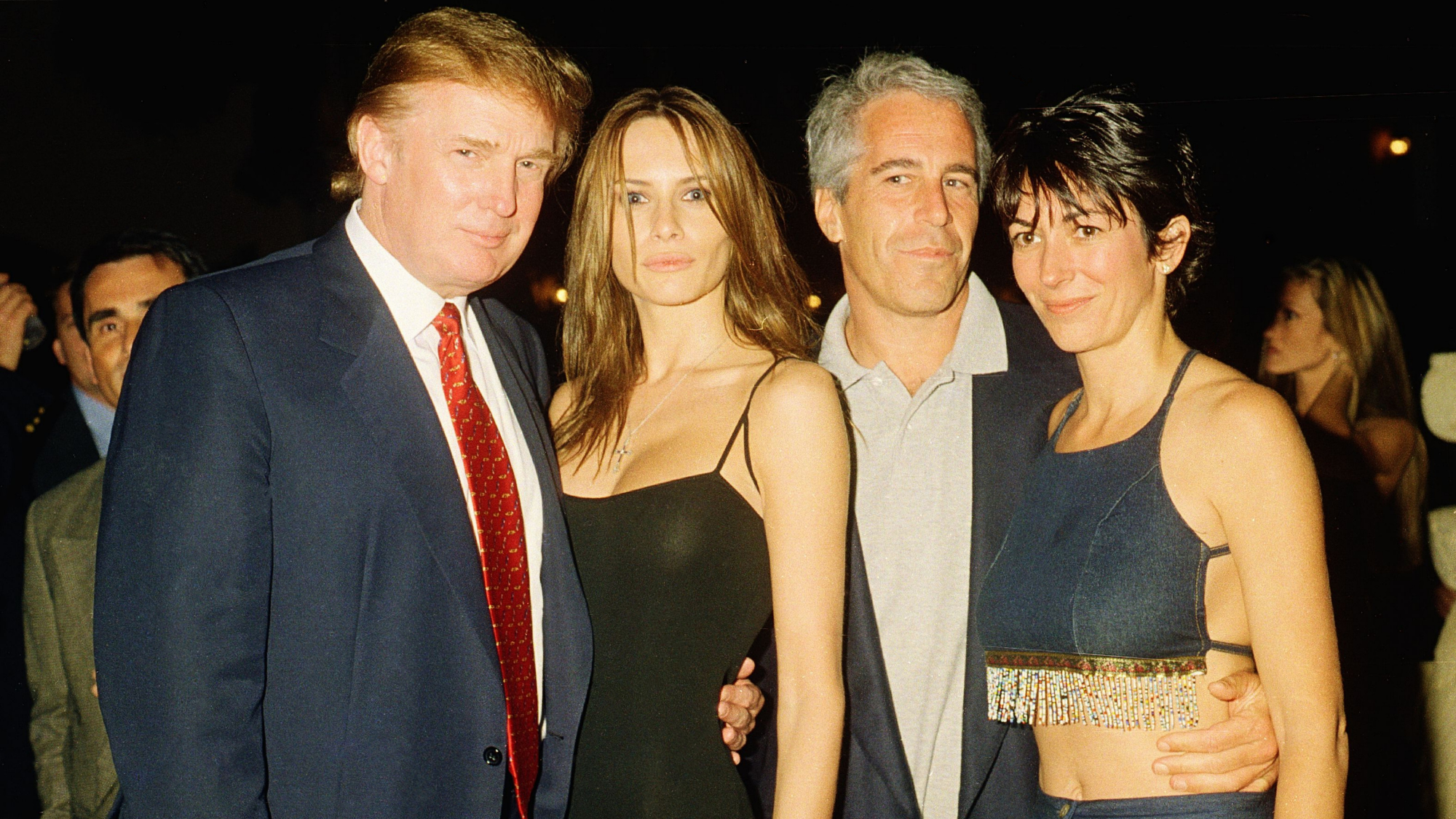

Ghislaine Maxwell: angling for a Trump pardon

Ghislaine Maxwell: angling for a Trump pardonTalking Point Convicted sex trafficker's testimony could shed new light on president's links to Jeffrey Epstein

-

The last words and final moments of 40 presidents

The last words and final moments of 40 presidentsThe Explainer Some are eloquent quotes worthy of the holders of the highest office in the nation, and others... aren't

-

The JFK files: the truth at last?

The JFK files: the truth at last?In The Spotlight More than 64,000 previously classified documents relating the 1963 assassination of John F. Kennedy have been released by the Trump administration

-

'Seriously, not literally': how should the world take Donald Trump?

'Seriously, not literally': how should the world take Donald Trump?Today's big question White House rhetoric and reality look likely to become increasingly blurred