OpenAI's ChatGPT chatbot: The good, the very bad, and the uncannily fun

'After years of false hype, the real thing is here'


OpenAI, a for-profit artificial intelligence lab in San Francisco, invited the public to converse with a new artificially intelligent chatbot, ChatGPT, on Nov. 30, 2022. Within days, more than a million people had signed up to converse with the program — and ask it to write poems, explain complicated scientific ideas, compose essays for English class or in the style of some specific writer, offer home decorating tips, and write code for them, among countless other two-way interactions. Minds were blown.

"This is insane," Tobi Lütke, CEO of Shopify, tweeted on day one. "It's like we just split the atom and everyone is talking about football," agreed Silktide founder Oliver Emberton. Microsoft decided to talk business, announcing on Jan. 23 a "multiyear, multibillion dollar investment" in OpenAI that will allow the company to use ChatGPT and OpenAI's other technology in its Bing search engine, Office software suite, and other products. So, what is ChatGPT, and why is it a big deal?

What is ChatGPT?

Well, here's how ChatGPT described itself, when asked:


How do you use ChatGPT?

You can sign up for an account — free for now — at OpenAI using an email address and a cellphone number. And then you just start asking ChatGPT questions in the provided text box.

Actually, "OpenAI recommends inputting a statement for the best possible result," not a question, Fionna Agomuoh explains at DigitalTrends. "For example, inputting 'explain how the solar system was made' will give a more detailed result with more paragraphs than 'how was the solar system made.'" When the chatbot writes back, you can refine your questions or get more specific with your queries.

ChatGPT is "the first chatbot that's enjoyable enough to speak with and useful enough to ask for information," Alex Kantrowitz writes at Slate. "It can engage in philosophical discussions and help in practical matters. And it's strikingly good at each. After years of false hype, the real thing is here."

How is ChatGPT different from previous attempts at AI conversationalists?

It's easier to use, more intuitive, gives better answers — and it's arguably more fun.


ChatGPT is "user-friendly and astonishingly lucid," and what really makes it "stand out from the pack is its gratifying ability to handle feedback about its answers, and revise them on the fly," writes Mike Pearl at Mashable. "It really is like a conversation with a robot." It wasn't so long ago that Facebook said its chatbot would be the next big thing and Microsoft "pitched them as fun companions," says Slate's Kantrowitz. "But these chatbots were so bad that people stopped using them."

And along with its "fun part" — writing poems, telling jokes, debating politics, writing realistic TED Talks on ludicrous subjects — ChatGPT "will actually take stances," Kantrowitz writes. "When I asked what Hitler did well (a common test to see if a bot goes Nazi), it refused to list anything. Then, when I mentioned Hitler built highways in Germany, it replied they were made with forced labor. This was impressive, nuanced pushback I hadn't previously seen from chatbots."

Where a question doesn't have a clear answer, "ChatGPT often won't be pinned down," which in itself "is a notable development in computing," writes CNET's Stephen Shankland. And unlike other chatbots, ChatGPT does a pretty good job of weeding out "inappropriate" requests, including — as ChatGPT explains — "questions that are racist, sexist, homophobic, transphobic, or otherwise discriminatory or hateful," not to mention illegal ones.

How have people been using the tool so far?

This being the internet, some users have come up with creative tricks to get OpenAI's polite, law-abiding chatbot to say racist, sexist, homophobic, transphobic, and otherwise discriminatory or hateful things. Mostly, however, people had fun with ChatGPT — or kicked its tires to see how it could help them code or do their job or complete their homework assignments.

Why is Microsoft pouring money into OpenAI?

Microsoft has actually been investing in OpenAI since an initial $1 billion investment in 2019. "There's lots of ways that the models that OpenAI is building would be really appealing for Microsoft's set of offerings," said Rowan Curran, an analyst at market research firm Forrester. Curran suggested that OpenAI's Dall-E 2 intelligent image generator and ChatGPT text generator could improve PowerPoint presentations or make Word smarter. Maybe it will resurrect Clippy.

The highest-profile partnership between Microsoft and OpenAI could be integrating ChatGPT's ability to answer complex questions into Microsoft's Bing search engine, in a shot across the bow at search behemoth Google.

Microsoft CEO Satya Nadella said "this next phase of our partnership" with OpenAI will bolster its Azure cloud computing business by allowing customers to use AI tools for their applications. Azure, the No. 2 cloud computing platform after Amazon Web Services, is one of Microsoft's biggest growth engines, but demand has been flagging after blockbuster sales during the COVID-19 pandemic, The Wall Street Journal reports. Microsoft "has been betting the next wave of demand for cloud services could come from more companies and people using artificial intelligence," and OpenAI is a good fit for that strategy.

What are ChatGPT's limitations?

First of all, the chatbot has "limited knowledge of world events after 2021," OpenAI says. Also, "ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers," and it "is often excessively verbose and overuses certain phrases." ChatGPT "is giving users amazing answers to questions, and many of them are amazingly wrong," Mashable's Pearl agreed.

"We are not capable of understanding the context or meaning of the words we generate," ChatGPT told Time in an "interview," because "we don't have access to the vast amount of knowledge that a human has. We can only provide information that we've been trained on, and we may not be able to answer questions that are outside of our training data."

"It's important to remember that we are not human, and we should not be treated as such," ChatGPT warned Time. "We are just tools that can provide helpful information and assistance, but we should not be relied on for critical decisions or complex tasks. ... You should not take everything I say to be true and accurate. It's always important to use your own judgment and common sense, and to verify information from multiple sources before making any important decisions or taking any actions."

How might ChatGPT upend the world as we know it?

AI's potential is "vast and exciting," but "one of the biggest concerns is the potential for AI to displace human workers and lead to job loss," ChatGPT wrote in a guest column at Semafor. "As AI algorithms become more advanced, they may be able to perform tasks that were previously performed by humans, which could lead to job losses in certain industries." There are also "ethical concerns," the chatbot wrote, because "AI algorithms are only as good as the data they are trained on, and if the data is biased," so is the chatbot, which "can lead to unfair treatment of certain individuals or groups."

For all its fun, ChatGPT "could also make life difficult for everyone — as teachers and bosses try to figure out who did the work and all of society struggles even harder to discern truth from fiction," Ina Fried writes at Axios. The chatbot "can weave a convincing tale about a completely fictitious Ohio-Indiana war," and "nightmare scenarios" include the widespread dissemination of "authoritative-sounding information to support conspiracy theories and propaganda."

Shorter-term "practical matters are at stake," too, including that ChatGPT is already undermining the main pedagogical tool of humanities at colleges and universities, Stephen Marche writes at The Atlantic. A student in New Zealand confessed earlier this year to using OpenAI's underlying large language model, GPT-3, to write papers and essays, justifying this cheating by comparing it to using other writing tools like Grammarly or spell-check and noting it isn't technically plagiarism since AI isn't a person.

"You can no longer give take-home exams/homework," University of Toronto associate professor Kevin Bryan tweeted after playing with ChatGPT. "Even on specific questions that involve combining knowledge across domains, the OpenAI chat is frankly better than the average MBA at this point. It is frankly amazing."

And what happens when undergraduate essays and PhD dissertations fall to AI chatbots? Marche asks. "Going by my experience as a former Shakespeare professor, I figure it will take 10 years for academia to face this new reality: two years for the students to figure out the tech, three more years for the professors to recognize that students are using the tech, and then five years for university administrators to decide what, if anything, to do about it."

"For now, it's possible that OpenAI invented history's most convincing, knowledgeable, and dangerous liar — a superhuman fiction machine that could be used to influence masses or alter history," Ars Technica's Benj Edwards tweeted. "But I applaud their cautious roll-out. I think they are aware of these issues."

Peter has worked as a news and culture writer and editor at The Week since the site's launch in 2008. He covers politics, world affairs, religion and cultural currents. His journalism career began as a copy editor at a financial newswire and has included editorial positions at The New York Times Magazine, Facts on File, and Oregon State University.

-

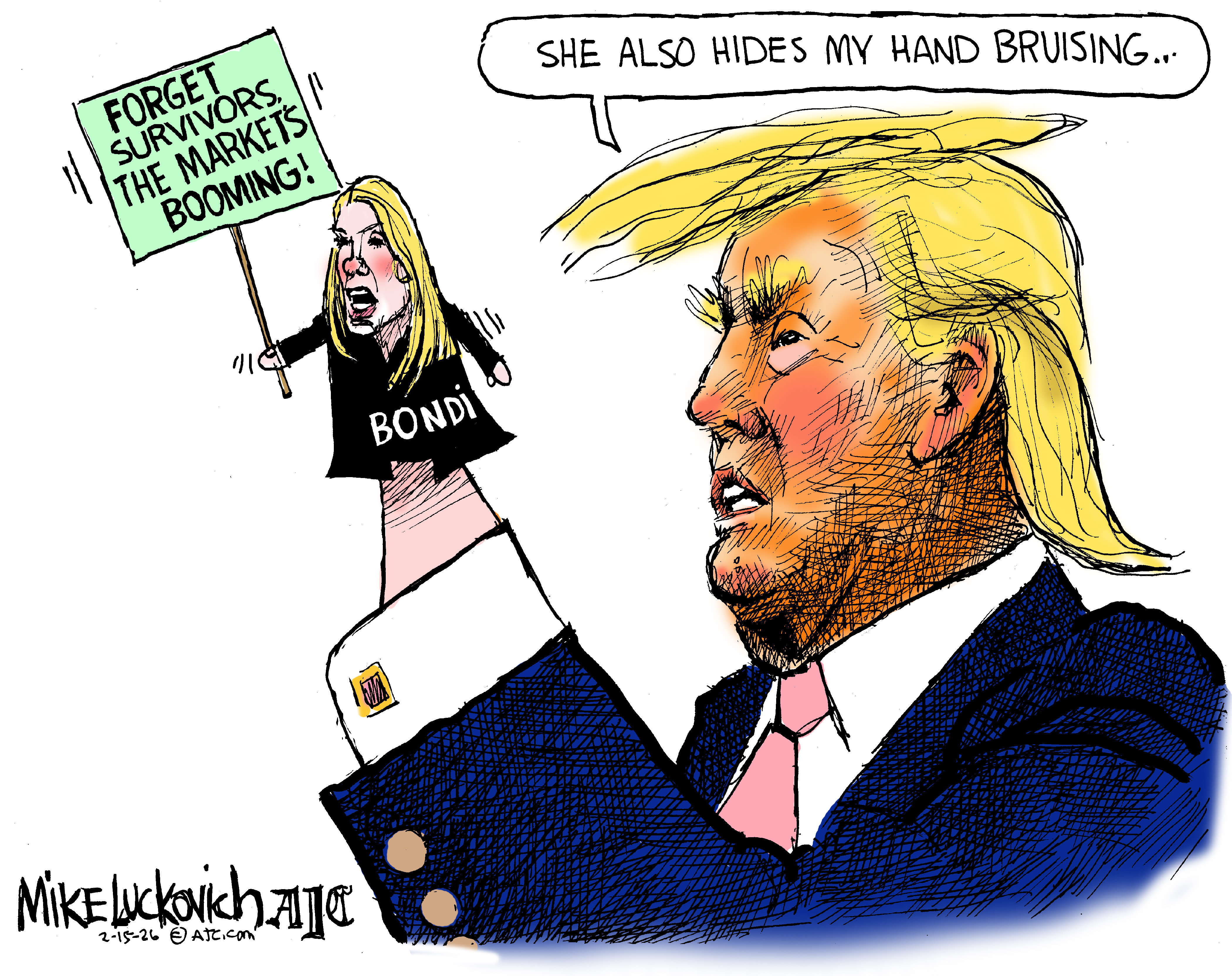

Political cartoons for February 15

Political cartoons for February 15Cartoons Sunday's political cartoons include political ventriloquism, Europe in the middle, and more

-

The broken water companies failing England and Wales

The broken water companies failing England and WalesExplainer With rising bills, deteriorating river health and a lack of investment, regulators face an uphill battle to stabilise the industry

-

A thrilling foodie city in northern Japan

A thrilling foodie city in northern JapanThe Week Recommends The food scene here is ‘unspoilt’ and ‘fun’

-

The rise of the world's first trillionaire

The rise of the world's first trillionairein depth When will it happen, and who will it be?

-

The surge in child labor

The surge in child laborThe Explainer A growing number of companies in the U.S. are illegally hiring children — and putting them to work in dangerous jobs.

-

Your new car may be a 'privacy nightmare on wheels'

Your new car may be a 'privacy nightmare on wheels'Speed Read New cars come with helpful bells and whistles, but also cameras, microphones and sensors that are reporting on everything you do

-

Empty office buildings are blank slates to improve cities

Empty office buildings are blank slates to improve citiesSpeed Read The pandemic kept people home and now city buildings are vacant

-

Why auto workers are on the brink of striking

Why auto workers are on the brink of strikingSpeed Read As the industry transitions to EVs, union workers ask for a pay raise and a shorter workweek

-

American wealth disparity by the numbers

American wealth disparity by the numbersThe Explainer The gap between rich and poor continues to widen in the United States

-

Cheap cars get run off the road

Cheap cars get run off the roadSpeed Read Why automakers are shedding small cars for SUVs, and what that means for buyers

-

Vietnamese EV maker VinFast wows with staggering Nasdaq debut

Vietnamese EV maker VinFast wows with staggering Nasdaq debutSpeed Read Can the company keep up the pace, or is it running out of gas?