Does big tech monetise adolescent pain?

Father of Molly Russell, 14, said she had been sucked into a ‘vortex’ of ever darker material

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com

“It’s the rueful half-smile that breaks my heart,” said Judith Woods in The Daily Telegraph. Ambushed by a camera-wielding parent outside their home, a younger child would have beamed or scowled.

But Molly Russell offers that “oh-go-on-then-if-you-must” expression that “every self-aware teen gives her soppy dad”. It is an “intimation of adulthood” – the willingness to make “brief accommodations” to spare other people’s feelings, and get on with the day.

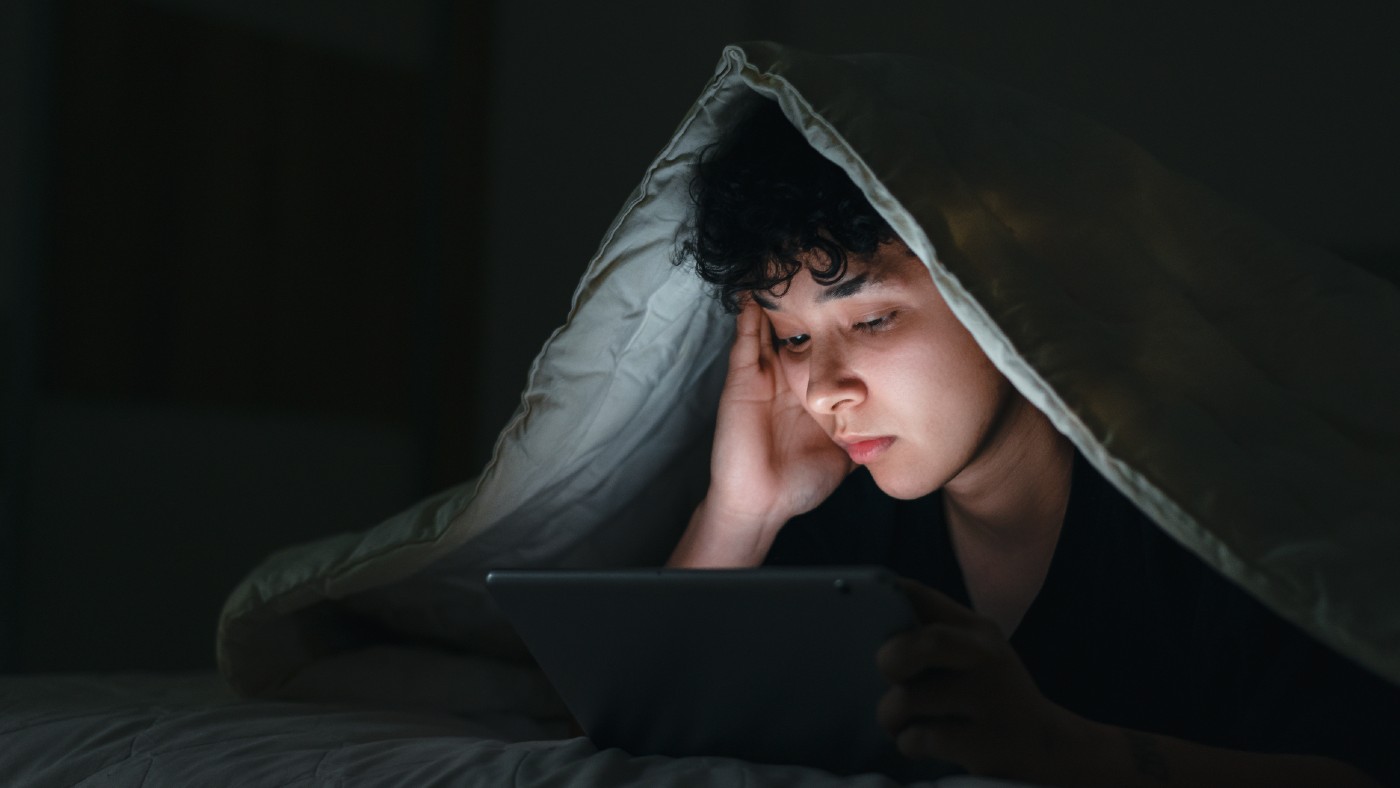

But Molly will not reach adulthood. In November 2017, the 14-year-old was found dead in her bedroom, having spent months viewing online content linked to depression, anxiety, self-harm and suicide. Some of it romanticised self-harm. Much of it was so graphic, bleak and violent that a psychiatrist told the inquest into Molly’s death that he’d been “unable to sleep well” for weeks after seeing it.

A ‘vortex’ of dark material

Not only had a child been able to access this distressing material, but many of the posts had been served to Molly, unasked, by Instagram and Pinterest’s “recommendation engines”, said John Naughton in The Observer. Thus the teenager – described by her father as a “positive” young woman, who seemed to be having only “normal teenage mood swings” – had been sucked into a “vortex” of ever darker material.

At the inquest, an executive from Meta, Instagram’s owner, denied that the 2,100 depression, self-harm or suicide-linked posts that Molly had liked or saved in the six months before her death were unsafe for children, and said that it was good for them to be able to share their feelings. But in what may be a world first, north London’s senior coroner ruled last week that Molly had died from an act of self-harm while suffering from depression – and “the negative effects of online content”.

Potential impact of Online Safety Bill

There are some who hope that the Government’s Online Safety Bill will make the internet safer for our children, said Hugo Rifkind in The Times. But this legislation keeps being delayed because trying to ban harmful content leads ministers into a “thicket of debate about free speech and censorship”. It’s complex; it’s also a red herring.

Teenagers have always sought out dark material, and found it. The difference now is the manner in which it is delivered to them. To read one sad post may be cathartic – it’s being bombarded with them that is dangerous. This is what we should tackle: not the content itself, but Big Tech’s “relentless monetising” of people’s absorption in content about self-harm, or “anything else”. “It’s a system, a design, a business model.” And the tech giants can fix it, if we make them.
