Deep thoughts: AI shows its math chops

Google's Gemini is the first AI system to win gold at the International Mathematical Olympiad


Complex math hasn't always been AI's strongest suit, but the technology showcased its progress at one of the world's premier competitions, said Cade Metz in The New York Times. A Google DeepMind system became the first machine to achieve "gold medal" status at the annual International Mathematical Olympiad (IMO) last month. OpenAI said that its AI "achieved a similar score on this year's questions, though it did not officially enter the competition." The results had experts buzzing. Last year, Google's AI took home a silver medal, but it required several days to complete the test and "could only answer questions after human experts translated them into computer programming language." This time, Google's chatbot, Gemini Deep Think, "read and responded to questions in English" and used techniques that scientists are calling "reasoning" to solve the problems. Google says the chatbot finished the test in the same amount of time as the human participants: 4.5 hours.

"They still got beat by the world's brightest teenagers," said Ben Cohen in The Wall Street Journal. Twenty-six human high school students outscored the computers, which managed to answer five of the six increasingly difficult problems correctly. The one that tripped up the AI was a brainteaser in the "notoriously tricky field of combinatorics," which deals with counting and arranging objects. The solution required "ingenuity, creativity, and intuition," qualities only humans (so far) can muster. "I would actually be a bit scared if the AI models could do stuff on Problem 6," said one of the gold medalists, Qiao Zhang, a 17-year-old on his way to MIT. AI models struggle with math more than you might think, said Andrew Paul in Popular Science. The reason has to do with how they process prompts. AI models "break the words and letters down into 'tokens,' then parse and predict an appropriate response," whereas humans can simply process them as complete thoughts.

That's why Google trained this Gemini version differently, said Ryan Whitwam in Ars Technica. The IMO's scoring is "based on showing your work," so to succeed the AI had to consider and analyze "every step on the way to an answer." Google gave Gemini a set of solutions to math problems, then added more general tips on how to approach them. The company's scientists were especially proud of one Gemini solution that used relatively simple math, working from basic principles, while most human competitors relied on "graduate-level" concepts. This may be just a 45-way tie for 27th place in a youth math contest, but it's rightly being hailed as significant, said Krystal Hu in Reuters. It "directly challenges the long-held skepticism that AI models are just clever mimics." Math problems that require multistep proofs have "become the ultimate test of reasoning, and AI just passed."

The Week


-

Health insurance: Premiums soar as ACA subsidies end

Health insurance: Premiums soar as ACA subsidies endFeature 1.4 million people have dropped coverage

-

Anthropic: AI triggers the ‘SaaSpocalypse’

Anthropic: AI triggers the ‘SaaSpocalypse’Feature A grim reaper for software services?

-

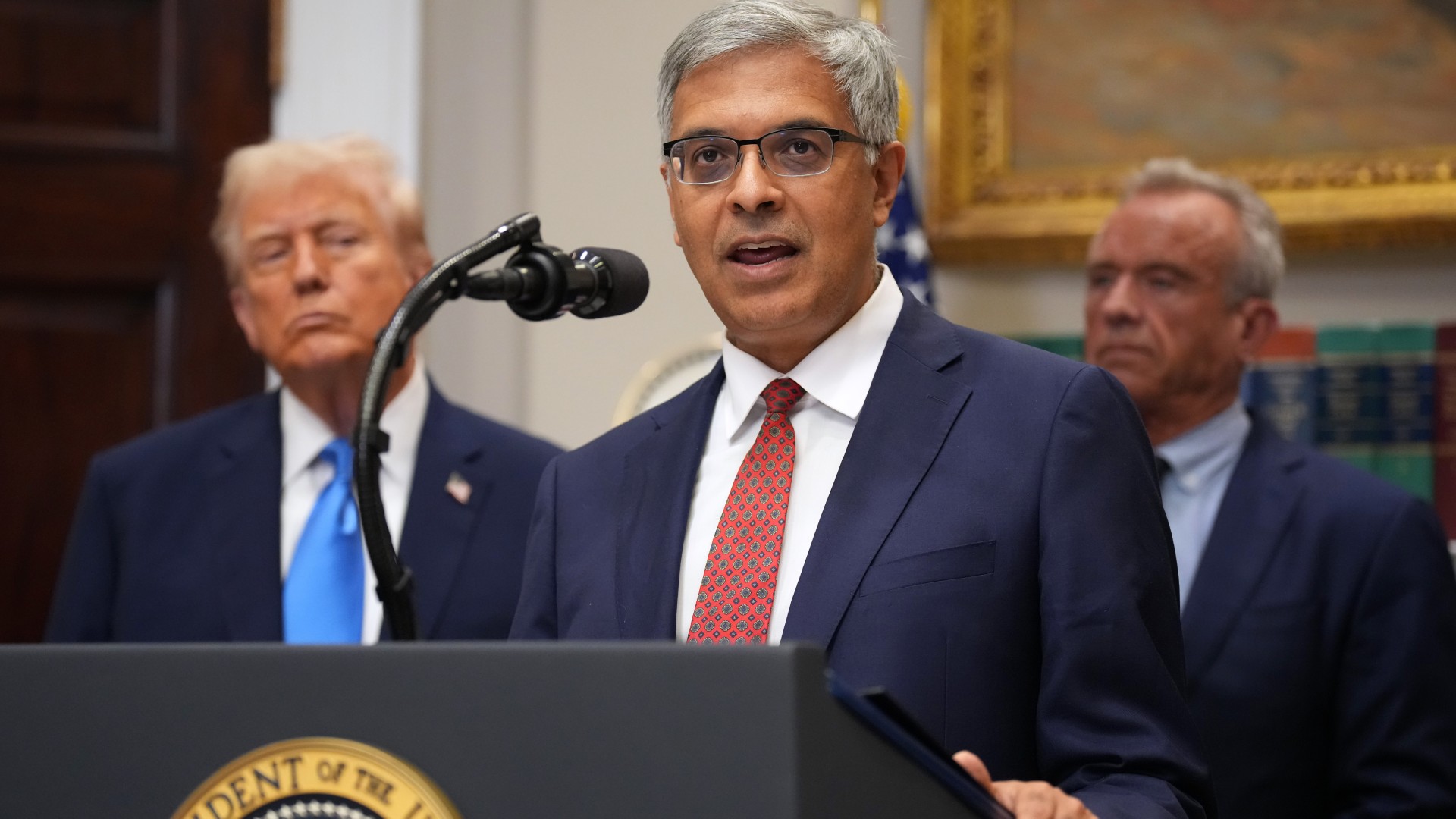

NIH director Bhattacharya tapped as acting CDC head

NIH director Bhattacharya tapped as acting CDC headSpeed Read Jay Bhattacharya, a critic of the CDC’s Covid-19 response, will now lead the Centers for Disease Control and Prevention

-

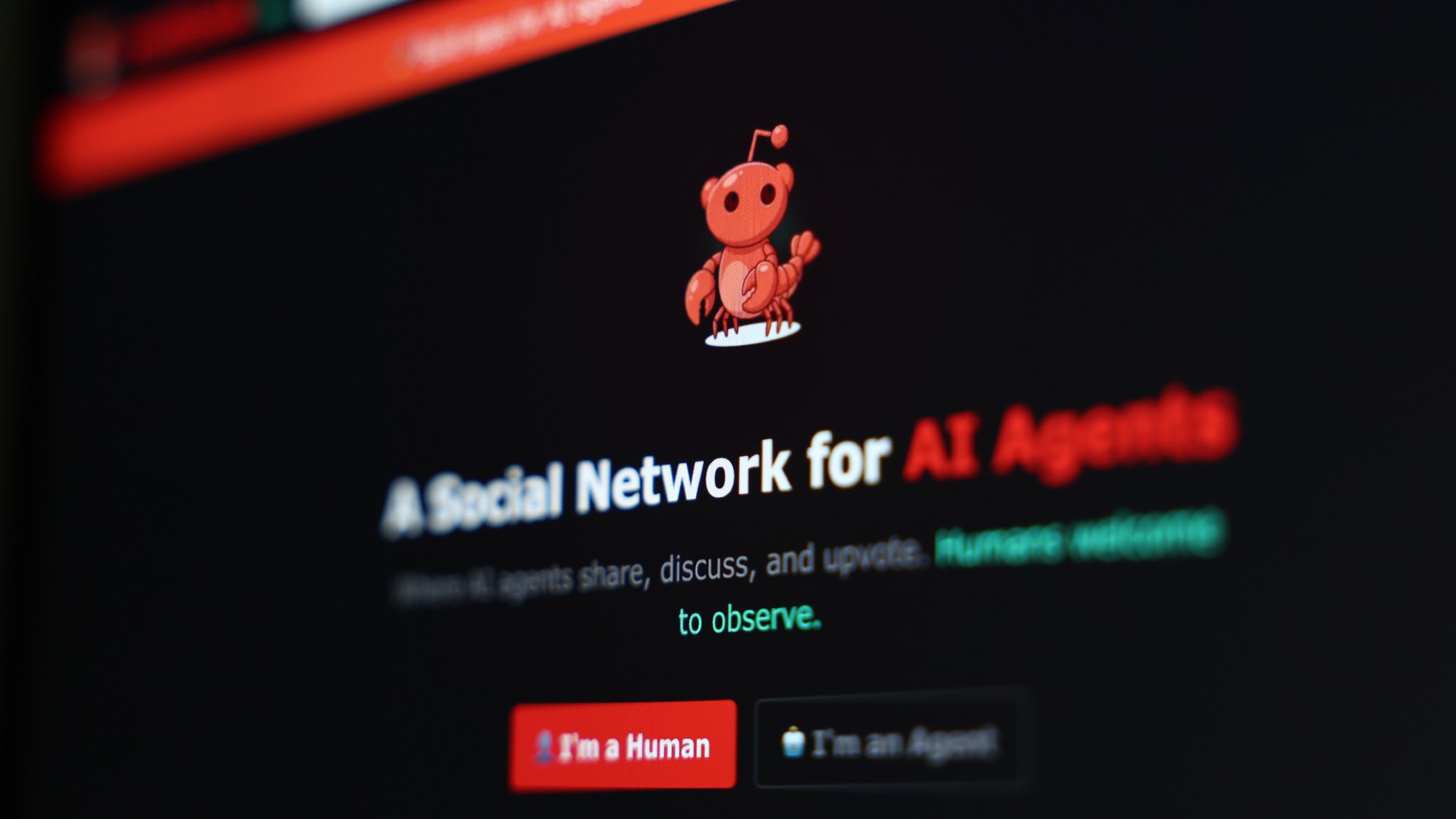

Moltbook: The AI-only social network

Moltbook: The AI-only social networkFeature Bots interact on Moltbook like humans use Reddit

-

Are AI bots conspiring against us?

Are AI bots conspiring against us?Talking Point Moltbook, the AI social network where humans are banned, may be the tip of the iceberg

-

Silicon Valley: Worker activism makes a comeback

Silicon Valley: Worker activism makes a comebackFeature The ICE shootings in Minneapolis horrified big tech workers

-

AI: Dr. ChatGPT will see you now

AI: Dr. ChatGPT will see you nowFeature AI can take notes—and give advice

-

Can Europe regain its digital sovereignty?

Can Europe regain its digital sovereignty?Today’s Big Question EU is trying to reduce reliance on US Big Tech and cloud computing in face of hostile Donald Trump, but lack of comparable alternatives remains a worry

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.