Facebook's thought police

Do you really want the social network's information cops deciding what the truth is?

The social panic and media hysteria over fake news continue unabated. And once again, Facebook's reaction is all wrong.

The left's intense focus on false news stories exploded in the wake of what seemed like an inexplicable Republican victory in the 2016 election, with Donald Trump beating Hillary Clinton despite an avalanche of bad press directed at the former, especially in the final weeks of the campaign. The GOP also unexpectedly retained control of the Senate, winning surprise victories in Wisconsin and Indiana to confound the Democratic Party's advantage in incumbent seats and normal presidential-cycle turnout models.

Attention quickly focused on a BuzzFeed story about traffic generated by stories on Facebook, and how much more popular fake news was than real news. The analysis had significant flaws, however, beginning with the fact that there was no evidence of correlation between Facebook traffic and voting behavior, let alone causation. Furthermore, the top five "real news" articles in the analysis turned out to be four opinion essays opposing Trump and the New York Post's story on Melania Trump's suggestive modeling pictures from two decades earlier.

Nonetheless, much of the left and the mainstream media stuck with the fake news narrative to explain the election outcome, and have continued to pressure Facebook to take action against it.

That obsession backfires occasionally, as The New York Times discovered last week when it attempted to demonstrate Trump's unpopularity by comparing pictures on Twitter from a visit by the NFL champion New England Patriots to a similar 2015 visit during Barack Obama's presidency. The Patriots objected to that characterization, replying on Twitter with pictures of their own demonstrating that the two events had similar turnout. Times sports editor Jason Stallman issued a retraction the next day, saying, "I'm an idiot."

Now obviously, there's a difference between fake news that maliciously intends to deceive and flawed news that well-meaning journalistic organizations just mess up — but whining about fake news makes your flawed news a bigger problem than it otherwise would be.

Nonetheless, Facebook is convinced it has a fake news problem. And it's scrambling to fix it.

Facebook founder Mark Zuckerberg once scoffed at the allegation that Facebook news feeds were a factor in the decision process for voters, accusing those pushing that theory of "a certain profound lack of empathy" with Facebook's consumers. Last week, however, Fortune reported that Zuckerberg had "come around" on the fake news crisis and announced new algorithms to challenge the credibility of items on news feeds, partnerships with fact checkers, and a user-driven process for disputing news stories. He pointed to Facebook's earlier success in reducing the amount of clickbait in news feeds (stories with sensational headlines that rarely deliver on their promise) and pledged to adapt that approach to fake news.

However, Facebook's chief operating officer rejected calls for the company to set itself up as a kind of information police force. Sheryl Sandberg reminded critics that Facebook does not publish news, but only provides a platform for its users to share it. "We definitely don't want to be the arbiter of the truth," Sandberg told the BBC. "We don't think that's appropriate for us. … Newsrooms have to do their part, media companies, classrooms, and technology companies."

Sandberg leaves out an important player in that equation: the consumer. If consumers want better news, then they need to seek it out. Consumers should not rely on a community-driven news feed for their information, but instead seek out original sources, determine which they can trust, and then verify information before sharing it.

This is not a new problem, and it didn't originate with Facebook. Before the advent of social media, email was the favored medium for fever-swamp claims and conspiracy theories. Since the advent of the internet, websites of varying degrees of sophistication have amplified the silly and the serially stupid. Before that, supermarket tabloids specialized in what's now called fake news. All of these still operate as distribution channels for fake news to this day, in the same manner as social media.

And yet, America still held elections over the last few decades with all of these sources of fake news, and did so successfully. Why? Because despite the attempts to paint the U.S. electorate as a bunch of unsophisticated hicks, most adults have no problem distinguishing fake news from the real thing. Voters have more resources than ever to help them consume news responsibly. They don't need Facebook to pre-digest their news and then spoon-feed it to them.

Facebook is a private-sector, voluntary-association community, and they can set up their system as they like. If they want to police news stories and block access to some based on their own assessment of credibility, that's their choice. It comes, however, at the expense of choice among their members, and in the most paternalistic manner conceivable.

That might have some Facebook consumers exercising choices in ways that Facebook won't like.

Edward Morrissey has been writing about politics since 2003 in his blog, Captain's Quarters, and now writes for HotAir.com. His columns have appeared in the Washington Post, the New York Post, The New York Sun, the Washington Times, and other newspapers. Morrissey has a daily Internet talk show on politics and culture at Hot Air. Since 2004, Morrissey has had a weekend talk radio show in the Minneapolis/St. Paul area and often fills in as a guest on Salem Radio Network's nationally-syndicated shows. He lives in the Twin Cities area of Minnesota with his wife, son and daughter-in-law, and his two granddaughters. Morrissey's new book, GOING RED, will be published by Crown Forum on April 5, 2016.

-

Will the mystery of MH370 be solved?

Will the mystery of MH370 be solved?Today’s Big Question New search with underwater drones could finally locate wreckage of doomed airliner

-

The biggest astronomy stories of 2025

The biggest astronomy stories of 2025In the spotlight From moons, to comets, to pop stars in orbit

-

Why are micro-resolutions more likely to stick?

Why are micro-resolutions more likely to stick?In the Spotlight These smaller, achievable goals could be the key to building lasting habits

-

Bari Weiss’ ‘60 Minutes’ scandal is about more than one report

Bari Weiss’ ‘60 Minutes’ scandal is about more than one reportIN THE SPOTLIGHT By blocking an approved segment on a controversial prison holding US deportees in El Salvador, the editor-in-chief of CBS News has become the main story

-

Has Zohran Mamdani shown the Democrats how to win again?

Has Zohran Mamdani shown the Democrats how to win again?Today’s Big Question New York City mayoral election touted as victory for left-wing populists but moderate centrist wins elsewhere present more complex path for Democratic Party

-

Millions turn out for anti-Trump ‘No Kings’ rallies

Millions turn out for anti-Trump ‘No Kings’ ralliesSpeed Read An estimated 7 million people participated, 2 million more than at the first ‘No Kings’ protest in June

-

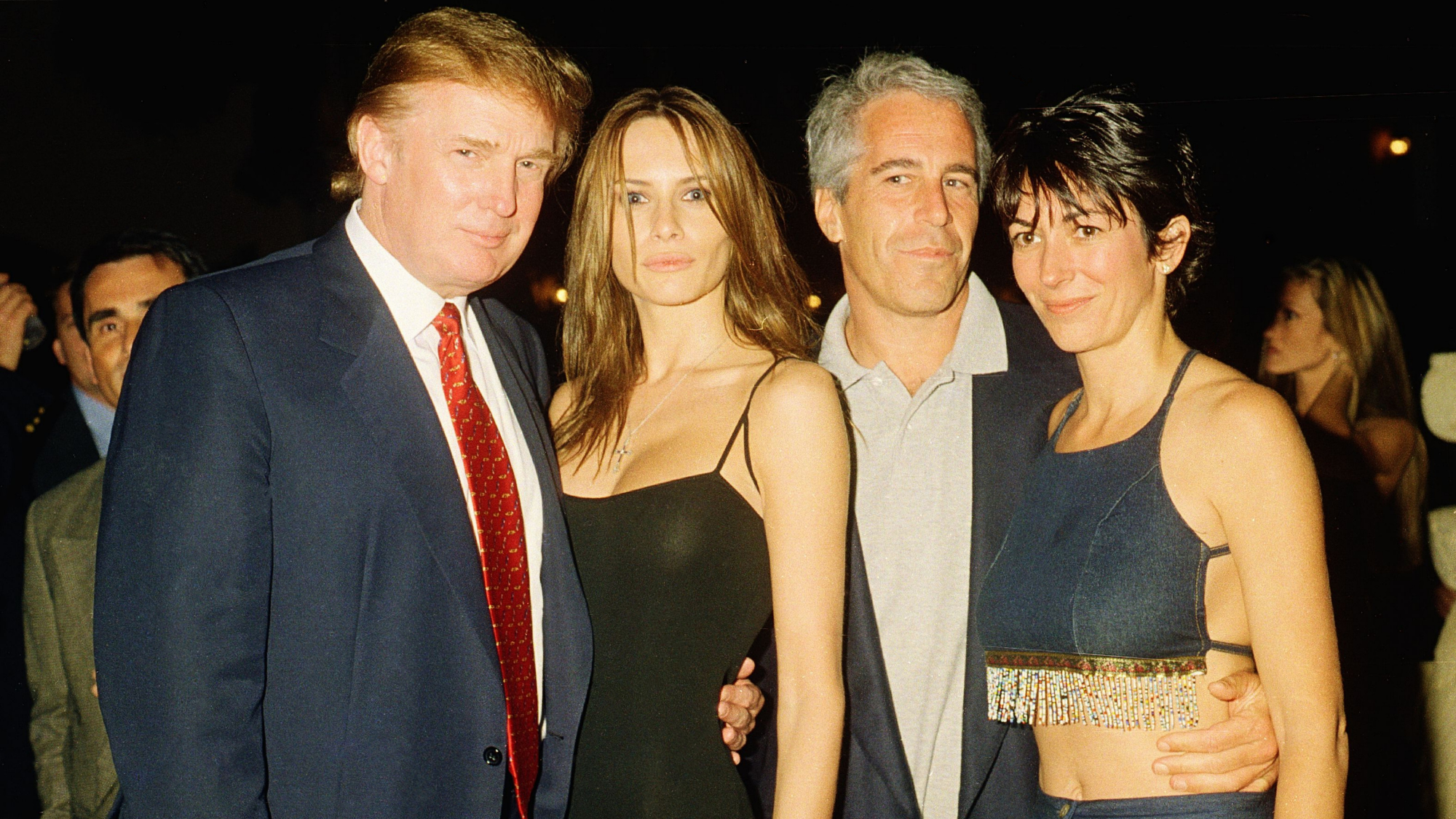

Ghislaine Maxwell: angling for a Trump pardon

Ghislaine Maxwell: angling for a Trump pardonTalking Point Convicted sex trafficker's testimony could shed new light on president's links to Jeffrey Epstein

-

The last words and final moments of 40 presidents

The last words and final moments of 40 presidentsThe Explainer Some are eloquent quotes worthy of the holders of the highest office in the nation, and others... aren't

-

The JFK files: the truth at last?

The JFK files: the truth at last?In The Spotlight More than 64,000 previously classified documents relating the 1963 assassination of John F. Kennedy have been released by the Trump administration

-

'Seriously, not literally': how should the world take Donald Trump?

'Seriously, not literally': how should the world take Donald Trump?Today's big question White House rhetoric and reality look likely to become increasingly blurred

-

Will Trump's 'madman' strategy pay off?

Will Trump's 'madman' strategy pay off?Today's Big Question Incoming US president likes to seem unpredictable but, this time round, world leaders could be wise to his playbook