Google predicts when people will die ‘with 95% accuracy’

Huge benefits for the medical profession - but will patients be willing to give up even more data to the tech giant?

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com


Google has claimed it can predict with 95% accuracy when people will die using new artificial intelligence technology.

In a paper published in npj Digital Medicine, a journal from the Nature group, the company’s Medical Brain team detailed how it is using a new type of artificial intelligence algorithm to predict the likelihood of death among patients in two separate hospitals.

For predicting patient mortality, Google’s Medical Brain was 95% accurate in the first hospital and 93% accurate in the second.


It works by analysing patients’ data, such as their age, ethnicity and gender. This information is then combined with hospital information such as prior diagnoses, current vital signs and any lab results, reports The Sun.

But according to Bloomberg, what impressed medical experts most “was Google’s ability to sift through data previously out of reach: notes buried in PDFs or scribbled on old charts. The neural net gobbled up all this unruly information then spat out predictions. And it did it far faster and more accurately than existing techniques.”

It is not the first time Google has made inroads into the medical industry. Its DeepMind subsidiary, considered by some experts to lead the way in AI research, “courted controversy” in 2016 after it emerged that it had access to 1.6 million medical records of NHS patients at three hospitals, reports The Independent.

Yet despite concerns that the search giant could be given access to even more data, the latest findings suggest Google could have a life-saving impact on its 1.17 billion users worldwide.


The Medical Brain team said: “These models outperformed traditional, clinically used predictive models in all cases. We believe that this approach can be used to create accurate and scalable predictions for a variety of clinical scenarios.”

“For medical facilities bogged down in bureaucratic red tape, Google’s software is a godsend,” says Vanity Fair. “Not only can it predict when a patient may die, but it can also estimate how long someone might stay in a hospital, or the chance they’ll be readmitted.”

But the magazine also offers a word of warning, saying that “for patients, giving a tech giant like Google access to sensitive medical information may have unintended consequences”.

-

The environmental cost of GLP-1s

The environmental cost of GLP-1sThe explainer Producing the drugs is a dirty process

-

Greenland’s capital becomes ground zero for the country’s diplomatic straits

Greenland’s capital becomes ground zero for the country’s diplomatic straitsIN THE SPOTLIGHT A flurry of new consular activity in Nuuk shows how important Greenland has become to Europeans’ anxiety about American imperialism

-

‘This is something that happens all too often’

‘This is something that happens all too often’Instant Opinion Opinion, comment and editorials of the day

-

Will AI kill the smartphone?

Will AI kill the smartphone?In The Spotlight OpenAI and Meta want to unseat the ‘Lennon and McCartney’ of the gadget era

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

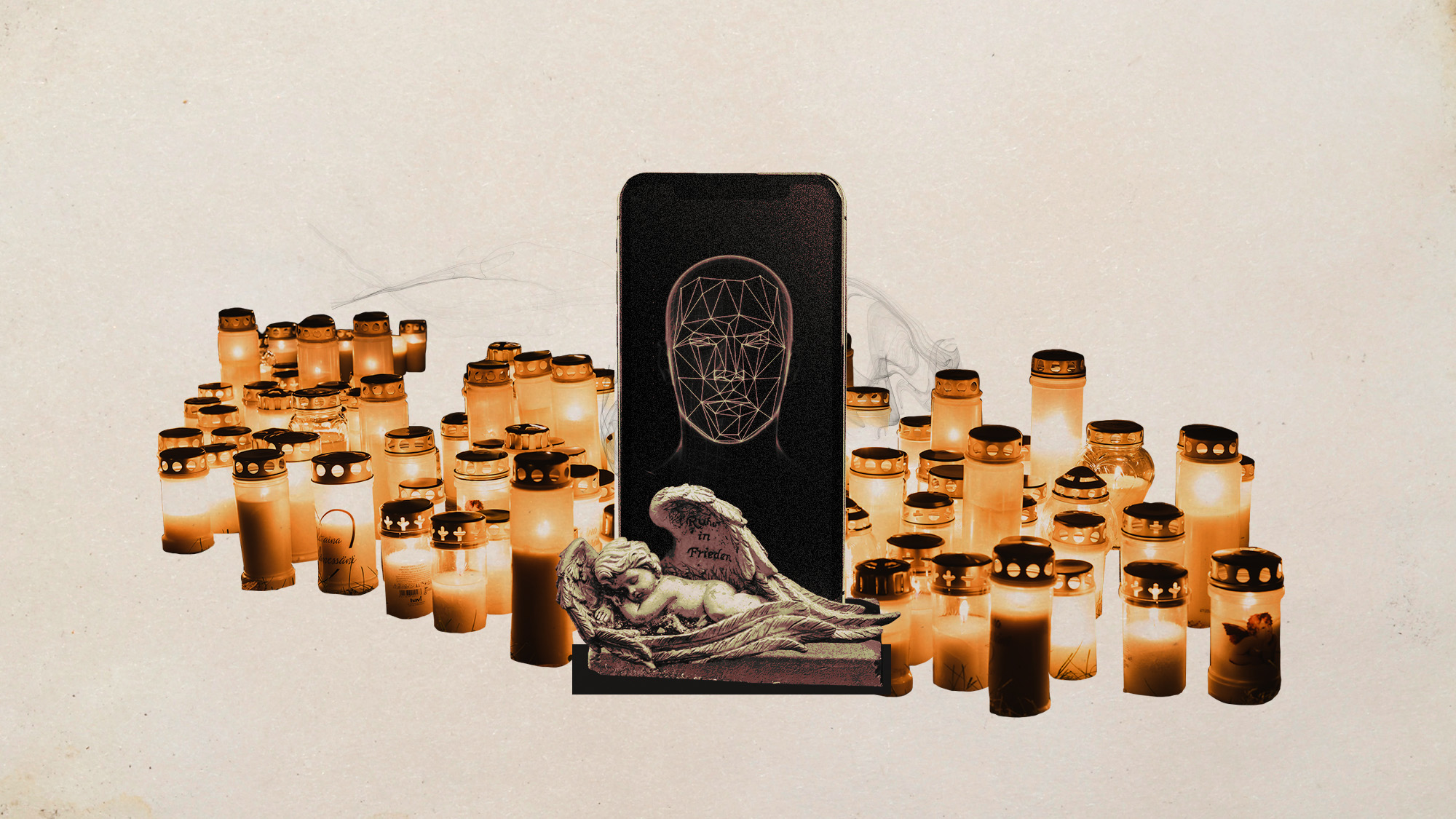

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical

-

The robot revolution

The robot revolutionFeature Advances in tech and AI are producing android machine workers. What will that mean for humans?