How smart speaker AIs such as Alexa and Siri reinforce gender bias

Unesco urges tech firms to offer gender-neutral versions of their voice assistants


Smart speakers powered by artificial intelligence (AI) voice assistants that sound female are reinforcing gender bias, according to a new UN report.

Research by Unesco (United Nations Educational, Scientific and Cultural Organisation) found that AI assistants such as Amazon’s Alexa and Apple’s Siri perpetuate the idea that women should be “subservient and tolerant of poor treatment”, because the systems are “obliging and eager to please”, The Daily Telegraph reports.

The report, titled “I’d blush if I could” (a reference to Siri’s reply to a sexual comment), says tech companies that make their voice assistants female by default are suggesting that women are “docile helpers” who can be “available at the touch of a button”, the newspaper adds.


The agency also accuses tech companies of failing to “build in proper safeguards against hostile, abusive and gendered language”, reports The Verge.

Instead, most AIs respond to aggressive comments with a “sly joke”, the tech news site notes. If asked to make a sandwich, for example, Siri says: “I can’t. I don’t have any condiments.”

“Companies like Apple and Amazon, staffed by overwhelmingly male engineering teams, have built AI systems that cause their feminised digital assistants to greet verbal abuse with catch-me-if-you-can flirtation,” says the Unesco report.

What has other research found?


The Unesco report cites a host of studies, including research by US-based tech firm Robin Labs that suggests at least 5% of interactions with voice assistants are “unambiguously sexually explicit”.

And the company, which develops digital assistants, believes the figure is likely to be “much higher due to difficulties detecting sexually suggestive speech”, The Guardian reports.

The UN agency also points to a study by research firm Gartner, which predicts that by 2020 people will be having more conversations with the voice assistant in their smart speaker than with their spouses.

Voice assistants already manage an estimated one billion tasks per month, ranging from playing songs to contacting the emergency services.

Although some systems allow users to change the gender of their voice assistant, the majority activate “obviously female voices” by default, the BBC reports.

The Unesco report concludes that this apparent gender bias “warrants urgent attention”.

How could tech companies tackle the issue?

Unesco argues that firms should be required to make their voice assistants “announce” that they are not human when they interact with people, reports The Sunday Times.

The agency also suggests that users should be given the opportunity to select the gender of their voice assistant when they get a new device and that a gender-neutral option should be available, the newspaper adds.

In addition, tech firms should program voice assistants to condemn verbal abuse or sexual harassment with replies such as “no” or “that is not appropriate”, Unesco says.

Tech companies have yet to respond to the study.

-

Antonia Romeo and Whitehall’s women problem

Antonia Romeo and Whitehall’s women problemThe Explainer Before her appointment as cabinet secretary, commentators said hostile briefings and vetting concerns were evidence of ‘sexist, misogynistic culture’ in No. 10

-

Local elections 2026: where are they and who is expected to win?

Local elections 2026: where are they and who is expected to win?The Explainer Labour is braced for heavy losses and U-turn on postponing some council elections hasn’t helped the party’s prospects

-

6 of the world’s most accessible destinations

6 of the world’s most accessible destinationsThe Week Recommends Experience all of Berlin, Singapore and Sydney

-

Will AI kill the smartphone?

Will AI kill the smartphone?In The Spotlight OpenAI and Meta want to unseat the ‘Lennon and McCartney’ of the gadget era

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

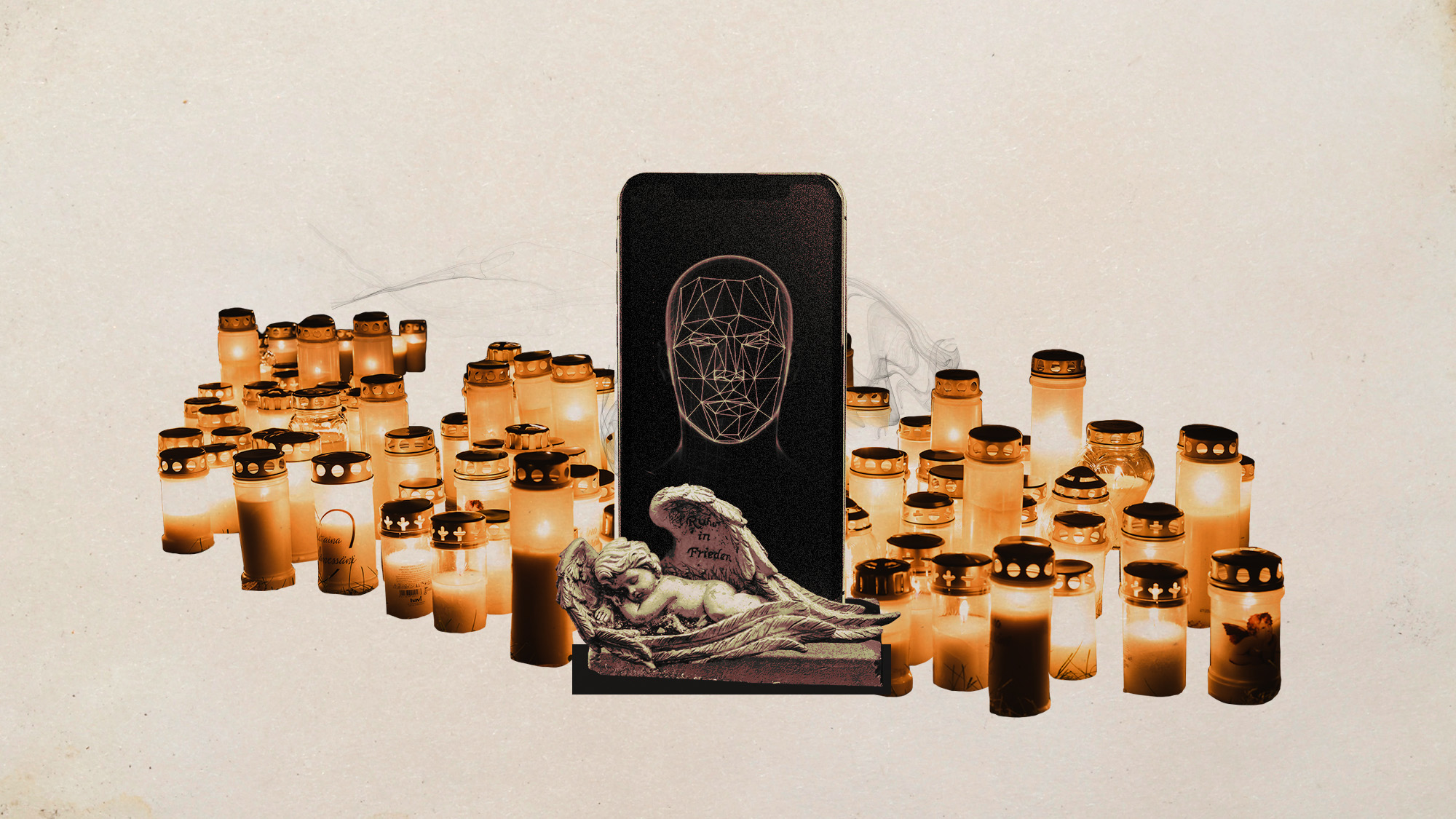

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical

-

The robot revolution

The robot revolutionFeature Advances in tech and AI are producing android machine workers. What will that mean for humans?