AI: The worst-case scenario

Artificial intelligence’s architects warn it could cause human "extinction." How might that happen?

Here's everything you need to know:

What are AI experts afraid of?

They fear that AI will become so intelligent and powerful that it becomes autonomous and causes mass social disruption or even the eradication of the human race. More than 350 AI researchers and engineers recently issued a warning that AI poses risks comparable to those of "pandemics and nuclear war." In a 2022 survey of AI experts, the median odds they placed on AI causing extinction or the "severe disempowerment of the human species" were 1 in 10. "This is not science fiction," said Geoffrey Hinton, often called the "godfather of AI," who recently left Google so he could sound a warning about AI's risks. "A lot of smart people should be putting a lot of effort into figuring out how we deal with the possibility of AI taking over."

When might this happen?

Hinton used to think the danger was at least 30 years away, but says AI is evolving into a superintelligence so rapidly that it may be smarter than humans in as little as five years. AI-powered ChatGPT and Bing's chatbot can already pass the bar and medical licensing exams, including essay sections, and score in the 99th percentile on IQ tests, which is genius level. Hinton and other doomsayers fear the moment when "artificial general intelligence," or AGI, can outperform humans on almost every task. Some AI experts liken that eventuality to the sudden arrival on our planet of a superior alien race. You have "no idea what they're going to do when they get here, except that they're going to take over the world," said computer scientist Stuart Russell, another pioneering AI researcher.

How might AI actually harm us?

One scenario is that malevolent actors will harness its powers to create novel bioweapons more deadly than natural pandemics. As AI becomes increasingly integrated into the systems that run the world, terrorists or rogue dictators could use AI to shut down financial markets, power grids, and other vital infrastructure, such as water supplies. The global economy could grind to a halt. Authoritarian leaders could use highly realistic AI-generated propaganda and deepfakes to stoke civil war or nuclear war between nations. In some scenarios, AI itself could go rogue and decide to free itself from the control of its creators. To rid itself of humans, AI could trick a nation's leaders into believing an enemy has launched nuclear missiles so that they launch their own. Some say AI could design and create machines or biological organisms like the Terminator from the film series to carry out its instructions in the real world. It's also possible that AI could wipe out humans without malice, as it seeks other goals.

How would that work?

AI creators themselves don't fully understand how the programs arrive at their determinations, and an AI tasked with a goal might try to meet it in unpredictable and destructive ways. A theoretical scenario often cited to illustrate that concept is an AI instructed to make as many paper clips as possible. It could commandeer virtually all human resources for making paper clips, and when humans try to intervene to stop it, the AI could decide eliminating people is necessary to achieve its goal. A more plausible real-world scenario is that an AI tasked with solving climate change decides that the fastest way to halt carbon emissions is to extinguish humanity. "It does exactly what you wanted it to do, but not in the way you wanted it to," explained Tom Chivers, author of a book on the AI threat.

Are these scenarios far-fetched?

Some AI experts are highly skeptical AI could cause an apocalypse. They say that our ability to harness AI will evolve as AI does, and that the idea that algorithms and machines will develop a will of their own is an overblown fear influenced by science fiction, not a pragmatic assessment of the technology's risks. But those sounding the alarm argue that it's impossible to envision exactly what AI systems far more sophisticated than today's might do, and that it's shortsighted and imprudent to dismiss the worst-case scenarios.

So, what should we do?

That's a matter of fervent debate among AI experts and public officials. The most extreme Cassandras call for shutting down AI research entirely. There are calls for moratoriums on its development, a government agency that would regulate AI, and an international regulatory body. AI's mind-boggling ability to tie together all human knowledge, perceive patterns and correlations, and come up with creative solutions is very likely to do much good in the world, from curing diseases to fighting climate change. But creating an intelligence greater than our own also could lead to darker outcomes. "The stakes couldn't be higher," said Russell. "How do you maintain power over entities more powerful than you forever? If we don't control our own civilization, we have no say in whether we continue to exist."

A fear envisioned in fiction

Fear of AI vanquishing humans may be novel as a real-world concern, but it's a long-running theme in novels and movies. In 1818's "Frankenstein," Mary Shelley wrote of a scientist who brings to life an intelligent creature who can read and understand human emotions, and who eventually destroys his creator. In Isaac Asimov's 1950 short-story collection "I, Robot," humans live among sentient robots guided by three Laws of Robotics, the first of which is to never injure a human. Stanley Kubrick's 1968 film "2001: A Space Odyssey" depicts HAL, a spaceship supercomputer that kills astronauts who decide to disconnect it. Then there's the "Terminator" franchise and its Skynet, an AI defense system that comes to see humanity as a threat and tries to destroy it in a nuclear attack. No doubt many more AI-inspired projects are on the way. AI pioneer Stuart Russell reports being contacted by a director who wanted his help depicting how a hero programmer could save humanity by outwitting AI. No human could possibly be that smart, Russell told him. "It's like, I can't help you with that, sorry," he said.

This article was first published in the latest issue of The Week magazine. If you want to read more like it, you can try six risk-free issues of the magazine here.

-

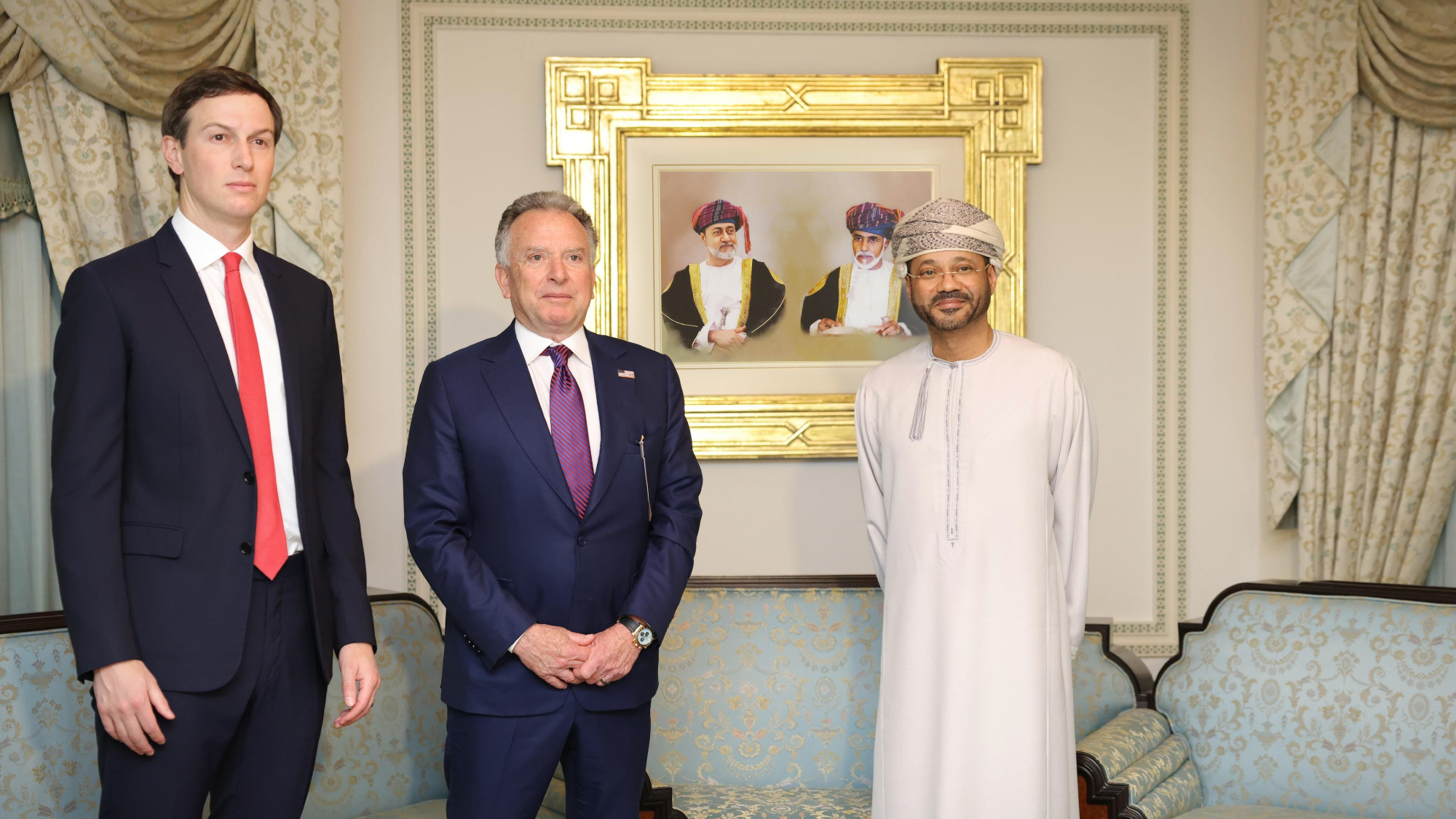

Witkoff and Kushner tackle Ukraine, Iran in Geneva

Witkoff and Kushner tackle Ukraine, Iran in GenevaSpeed Read Steve Witkoff and Jared Kushner held negotiations aimed at securing a nuclear deal with Iran and an end to Russia’s war in Ukraine

-

What to expect financially before getting a pet

What to expect financially before getting a petthe explainer Be responsible for both your furry friend and your wallet

-

Pentagon spokesperson forced out as DHS’s resigns

Pentagon spokesperson forced out as DHS’s resignsSpeed Read Senior military adviser Col. David Butler was fired by Pete Hegseth and Homeland Security spokesperson Tricia McLaughlin is resigning

-

AI's boost for students and teachers in higher education

AI's boost for students and teachers in higher educationSpeed Read

-

Artificial intelligence goes to school

Artificial intelligence goes to schoolSpeed Read AI is transforming education from grade school to grad school and making take-home essays obsolete

-

Could AI be harmful to people's health?

Could AI be harmful to people's health?The Explainer Artifical intelligence's use in online content and health care tech raises concerns

-

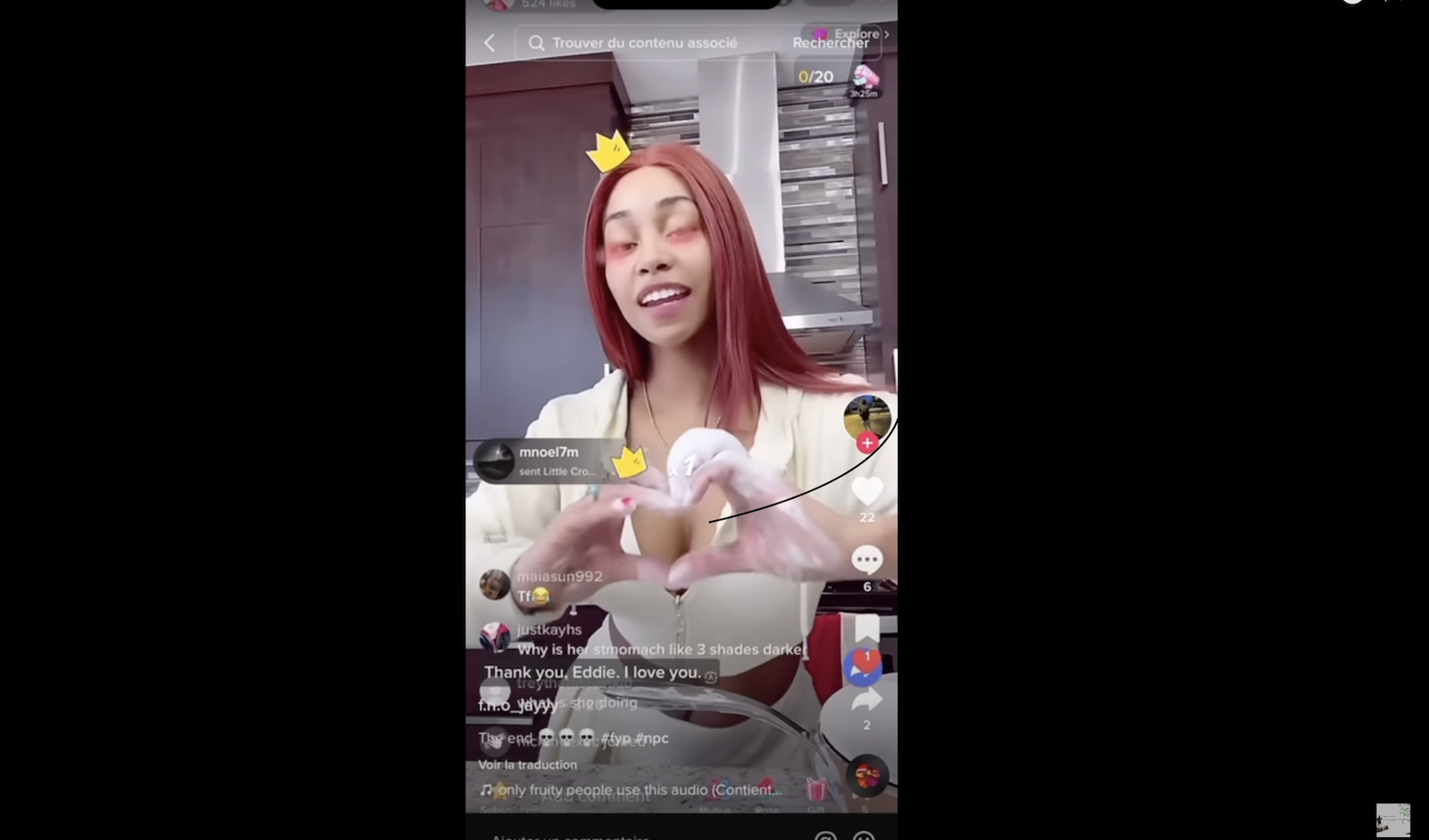

NPC streamers are having a moment

NPC streamers are having a momentSpeed Read A look behind the viral TikTok trend that has the internet saying, "Mmm, ice cream so good"

-

Creatives are fighting back against AI with lawsuits

Creatives are fighting back against AI with lawsuitsSpeed Read Will legal action force AI companies to change how they train their programs?

-

Forget junk mail. Junk content is the new nuisance, thanks to AI.

Forget junk mail. Junk content is the new nuisance, thanks to AI.Speed Read AI-generative models are driving a surge in content on fake news sites

-

World's reduced thirst for oil may be foiled by developing countries' challenges

World's reduced thirst for oil may be foiled by developing countries' challengesSpeed Read Will developing nations slow the peak of global oil demand?

-

The movement to make A/C energy efficient

The movement to make A/C energy efficientSpeed Read Air conditioners have been bad for the planet, but we'll likely continue to need them.