Durham police to use AI for custody decisions

System has an 89 per cent success rate in identifying suspects who are likely to offend

Police in Durham are preparing to use artificial intelligence (AI) to assist officers deciding whether or not to send a suspect into custody, reports the BBC.

The system, built using five years of criminal history data, categorises suspects into "low, medium or high risk of offending".

Sheena Urwin, head of criminal justice at Durham Constabulary, told the BBC: "I imagine in the next two to three months we'll probably make it a live tool to support officers' decision making".

Police trialled the Harm Assessment Risk Tool (Hart) for two years from 2013, says Alphr. During the trial, researchers found it had a 98 per cent success rate in identifying low-risk subjects and an 89 per cent rate for high-risk subjects.

Its decisions are based on factors such as "seriousness of alleged crime and previous criminal history".

Hart "leans towards a cautious outlook", says Alphr, so it is more likely to label a suspect as medium or high-risk, reducing the danger of "releasing dangerous criminals".

Such technology is becoming a vital tool in helping police in their investigations.

Last month, a man was charged with murdering his wife after investigators were able to work out her final moments using her Fitbit health tracker.

Information on how many steps the victim had walked indicated she had been active for an hour after the time her husband said she died, says The Guardian.

It also suggested she had "traveled more than 1,200ft after arriving home", adds the paper, while her husband said she was murdered by intruders immediately after arriving.

-

Touring the vineyards of southern Bolivia

Touring the vineyards of southern BoliviaThe Week Recommends Strongly reminiscent of Andalusia, these vineyards cut deep into the country’s southwest

-

American empire: a history of US imperial expansion

American empire: a history of US imperial expansionDonald Trump’s 21st century take on the Monroe Doctrine harks back to an earlier era of US interference in Latin America

-

Elon Musk’s starry mega-merger

Elon Musk’s starry mega-mergerTalking Point SpaceX founder is promising investors a rocket trip to the future – and a sprawling conglomerate to boot

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

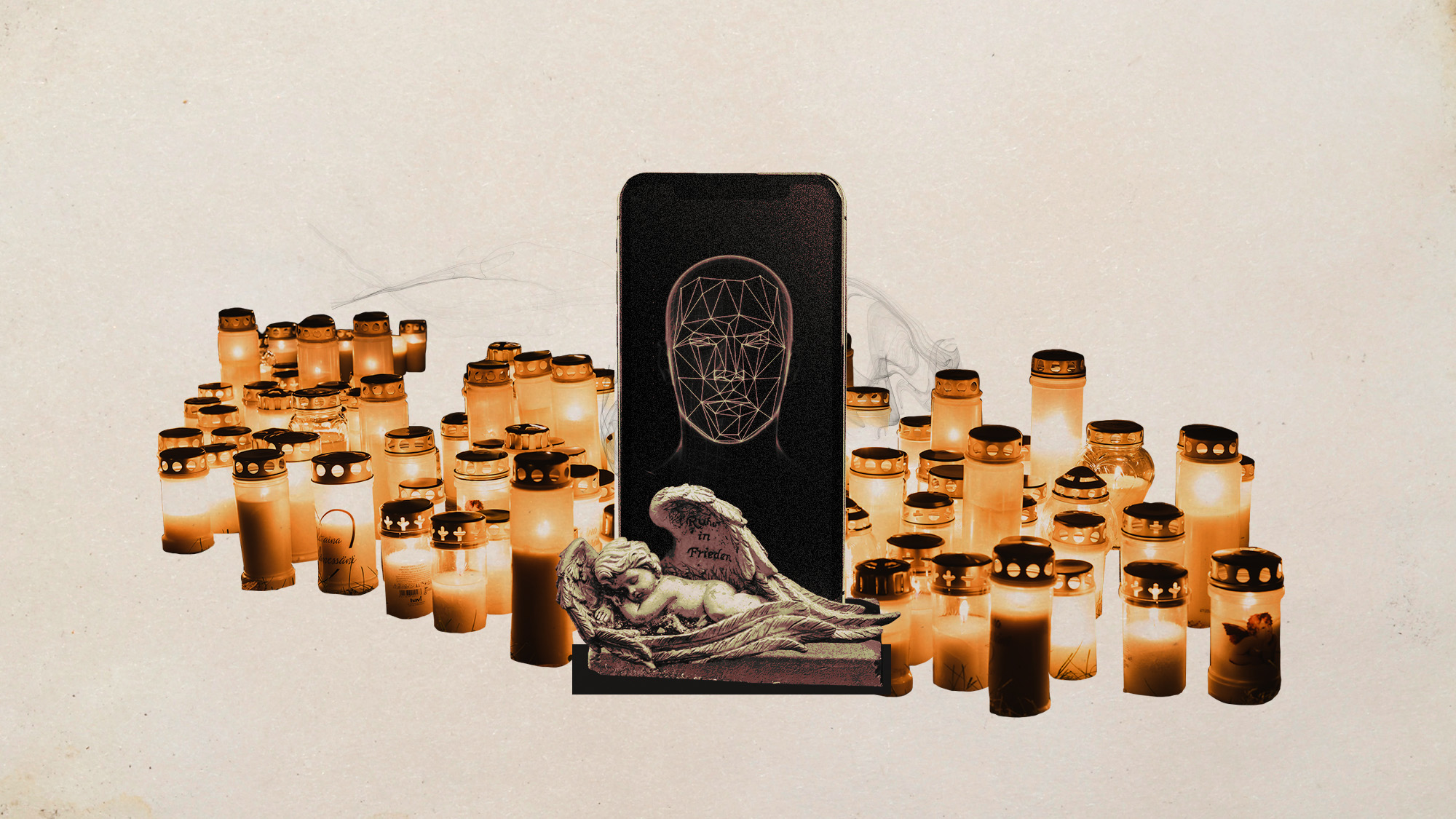

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical

-

The robot revolution

The robot revolutionFeature Advances in tech and AI are producing android machine workers. What will that mean for humans?

-

Separating the real from the fake: tips for spotting AI slop

Separating the real from the fake: tips for spotting AI slopThe Week Recommends Advanced AI may have made slop videos harder to spot, but experts say it’s still possible to detect them