Why King’s Cross facial recognition tech is proving so controversial

Use of hi-tech surveillance systems raises fears over the public’s privacy

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com

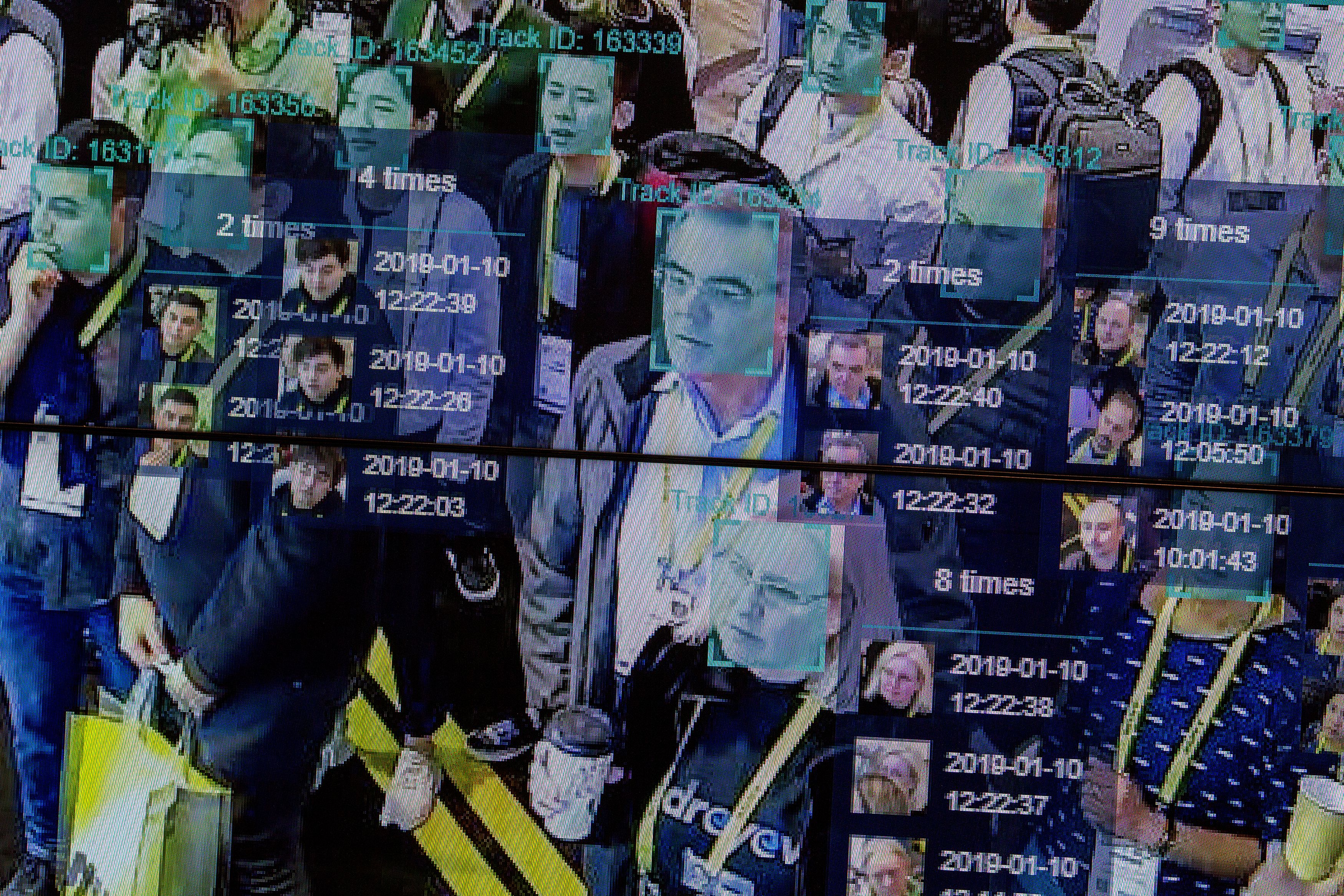

Facial-scanning technology used in the King’s Cross area of London to track “tens of thousands of people” has come under fire from privacy campaigners.

The 67-acre site, which has recently been redeveloped to include more housing and a new British headquarters for Google, features “multiple cameras” that monitor the activity of visitors, the Financial Times reports.

Argent, the site’s developer, told the BBC that the technology had been deployed “in the interest of public safety” and compared the area to other public spaces.

But privacy campaigners fear that private firms could use the technology to conduct “secret identity checks on the public”, The Daily Telegraph notes.

How does face-recognition tech work?

Simply put, face-scanning technology uses a combination of cameras and artificial intelligence (AI) to scan and register the details of a person based on their facial profile.

According to The Guardian, a computer “scans frames of video” and allocates a “vector” to each face: in effect, a set of numbers that converts a person’s facial profile into a quantifiable data format.

The data is then cross-checked with “people on a watchlist”, before being ranked and presented for a human moderator to review, the newspaper notes.
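The matching step The Guardian describes can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the code behind any King’s Cross system: it assumes face vectors have already been produced by some recognition model (not shown). It also assumes cosine similarity as the comparison measure and an arbitrary 0.8 cut-off. The names `rank_watchlist_matches` and `threshold`, and the toy vectors, are hypothetical.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two face vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_watchlist_matches(
    face_vector: List[float],
    watchlist: Dict[str, List[float]],
    threshold: float = 0.8,  # hypothetical cut-off; real systems tune this
) -> List[Tuple[str, float]]:
    """Score one detected face against every watchlist entry.

    Entries at or above `threshold` are returned best-first. The function
    does not decide identity: the ranked list is what a human reviewer
    would be shown.
    """
    scores = [
        (name, cosine_similarity(face_vector, vec))
        for name, vec in watchlist.items()
    ]
    candidates = [(name, s) for name, s in scores if s >= threshold]
    candidates.sort(key=lambda item: item[1], reverse=True)
    return candidates


if __name__ == "__main__":
    # Toy 3-number vectors; real face vectors are much longer (assumption).
    watchlist = {
        "entry_a": [0.9, 0.1, 0.0],
        "entry_b": [0.0, 1.0, 0.0],
        "entry_c": [0.7, 0.3, 0.1],
    }
    detected_face = [1.0, 0.2, 0.0]
    for name, score in rank_watchlist_matches(detected_face, watchlist):
        print(f"{name}: {score:.3f} -> send to human reviewer")
```

In this toy run, `entry_a` and `entry_c` clear the threshold and are listed for review, while `entry_b` is dropped. Where the threshold is set determines how many false matches reach the human moderator.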

In the case of King’s Cross, an Argent spokesperson said that facial recognition was one of “a number of detection and tracking methods” used across the redeveloped site, the BBC reports.

However, the spokesperson insisted that the firm has “sophisticated systems in place to protect the privacy of the general public”.

What legal hurdles do face-recognition systems bring?

Under the European Union’s General Data Protection Regulation (GDPR), which came into force in May 2018, face-scanning cameras are classified as systems that “collect information that is inherently personal”, according to The Times.

The technology is legal provided operators inform the public that such systems are in place and how their data will be processed, the newspaper adds. Information can be collected through facial-detecting systems only for a “legitimate interest”, such as security, and it cannot be passed on to third parties for marketing purposes.

Argent insists that the systems it uses in King’s Cross are for public safety and to offer the “best possible experience”, the Times reports.

But the FT reports that the developer has not confirmed how many cameras are in use in the area, nor how long the system has been in place.

A similar system is also set to be installed across a 97-acre estate in Canary Wharf, east London, though the technology will not be used to monitor pedestrians and workers continuously, sources close to the matter told the FT. Instead, face-scanning tech will be “limited to specific purposes or threats”.

How have privacy campaigners responded?

Silkie Carlo, director of the non-profit privacy group Big Brother Watch, told The Daily Telegraph that “huge areas of our capital have been sold off, privately policed, and are now being covered with Chinese-style surveillance.

“Private companies are asserting the right to monitor and secretly conduct identity checks on tens of thousands of us,” she said. “What happens with our data is anyone’s guess.”

Meanwhile, Hannah Couchman, a policy and campaigns officer at human rights group Liberty, told the Times that the tech is “more likely to misidentify people of colour and subject them to an intrusive and unjustified stop.

“There has been no transparency about how this tool is being deployed.”

The use of face-scanning cameras has also attracted the attention of the Information Commissioner’s Office (ICO), the UK’s independent data protection regulator, which reports to Parliament.

“The ICO is currently looking at the use of facial recognition technology by law enforcement in public spaces and by private sector organisations, including where they are partnering with police forces,” it said in a statement. “We’ll consider taking action where we find non-compliance with the law.”