The reports of American decline are greatly exaggerated

America is wealthy, influential, powerful, and increasingly equal. Don't let anyone tell you otherwise.

The narrative of American decline gets louder every day. Our parents had it better. Our influence overseas has waned. Our country is poorer and more divided than ever.

We say these things, and we mean them in the moment, and our knowledge of history — or lack thereof — allows us to truly believe them. "American decline is the idea that the United States of America is diminishing in power geopolitically, militarily, financially, economically, socially, culturally, in matters of healthcare, and/or on environmental issues," observes a Wikipedia entry on the idea. "There has been debate over the extent of the decline, and whether it is relative or absolute," the article adds, but that it is happening is presented nearly as established fact.

Our decline is in every journal you can think of, on the left, on the right. It's on our fine website here at The Week. And the idea has a long and illustrious history: Decline is a sad story many peoples have told themselves.


But what if the story is wrong? And what if constantly calling back to the way things never actually were makes it impossible for our own age to live up to our expectations — let alone hopes?

When you're looking for signs of decline, it's easy to see them everywhere. In our culture wars, one side sees a horrible moral fall from the good old ways, while the other thinks we're losing the high ground and slipping back into the dark ages. We see decline on the battlefield, claiming we don't win wars anymore. We say people can't find good jobs anymore. Our culture is bankrupt! Look, the only movies we can make anymore are sequels!

I don't want to make light of any person's suffering. I've been out of work; I have felt despair; and I can't believe that Fast & Furious is still around. But that's hardly a complete picture.

Economically, there's an incredible imbalance of wealth in this country, yes. But our poverty rates are historically low, and we've seen enormous inequality in "better" days. (When John D. Rockefeller walked the earth, he was worth $418 billion, adjusted for inflation: more than $100 billion more than Elon Musk, two Jeff Bezoses, or more than two-thirds of the entire federal budget in 1920.) China will overtake the U.S. in the overall size of its economy, but U.S. per capita GDP is more than six times that of China and considerably larger than that of other Western nations.


As for influence, there's still no other nation that can rival ours. (Have you ever eaten in the French version of McDonald's when you're traveling in Istanbul?) American soft power remains unmatched.

Our hard power does, too. The U.S. military did not "win" in Afghanistan because it wasn't an existential fight. We achieved an initial victory in ousting the Taliban, achieved another in killing Osama bin Laden, lost sight of what winning meant, and then — fairly enough — decided it was time to go home. That's not a triumph, but it's also not a defeat, and if our soldiers need to be sent to war to protect the homeland or our allies, they stand ready.

Can the United States defend itself from military threats from around the world? No one says no. Can America still project awesome power across oceans and continents? No one says no. Can any other nation in the world do the same at anywhere near the scale of the United States? No.

Do we need a stronger military than the one that nearly $800 billion is buying? We can have one. America was not an international player before World War II, then became the Arsenal of Democracy and put more than 16 million men under arms. Imagine what the U.S. could do now if it needed to.

And for those who say we're more divided than ever before, three words: American Civil War.

Look, I'm 51 years old. When I was born, Saigon was soon to fall; a president resigned in disgrace after trying to pull down the whole judiciary with him; New York City (where I grew up) was falling apart, and people were scared even to visit. Cars were ugly, beige, and got 3 miles to the gallon; no one could believe the federal deficit was so high; the culture was morally bankrupt, and rock and roll had died with the 1960s. For God's sake, the Beatles had broken up!

Are things truly worse now? Have we declined since the high point of shag carpet, the outright dismissal of women and minorities, and the hatred and fear of the LGBT community?

What we are lacking is a sense of self-belief, of confidence in our competence. We believe we are powerless and so become powerless. If you believe you will fail before you start, you probably will.

The reality is that when a massive pandemic hit, the U.S. kept things moving and people working, and used science to develop a vaccine faster than most doctors thought possible. We created billions of dollars, sent people checks, and put money into roads and other infrastructure. And even though the supply chain rattled, and we couldn't find cream cheese for a little while, it didn't break.

The reality is that when Russia decided to roll back the end of history, America was there to help, shaping a coalition to punish Russian President Vladimir Putin and his co-conspirators, and sending massive stockpiles of weapons to a quasi-ally.

The reality is that America is still a great power — none greater — and can even be a power for good. It's time to believe it.

Jason Fields is a writer, editor, podcaster, and photographer who has worked at Reuters, The New York Times, The Associated Press, and The Washington Post. He hosts the Angry Planet podcast and is the author of the historical mystery "Death in Twilight."

-

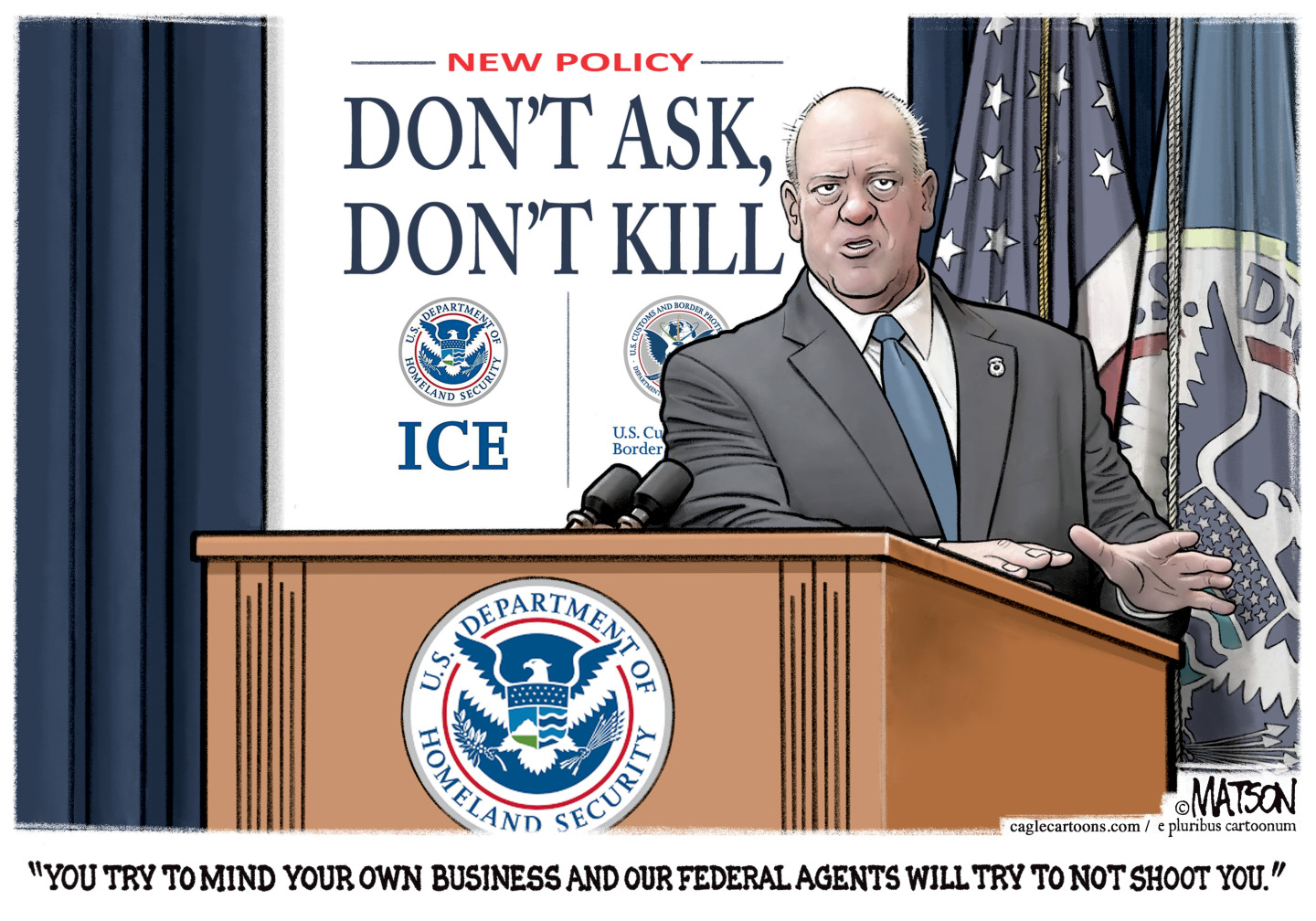

Political cartoons for February 1

Political cartoons for February 1Cartoons Sunday's political cartoons include Tom Homan's offer, the Fox News filter, and more

-

Will SpaceX, OpenAI and Anthropic make 2026 the year of mega tech listings?

Will SpaceX, OpenAI and Anthropic make 2026 the year of mega tech listings?In Depth SpaceX float may come as soon as this year, and would be the largest IPO in history

-

Reforming the House of Lords

Reforming the House of LordsThe Explainer Keir Starmer’s government regards reform of the House of Lords as ‘long overdue and essential’

-

‘We know how to make our educational system world-class again’

‘We know how to make our educational system world-class again’Instant Opinion Opinion, comment and editorials of the day

-

Halligan quits US attorney role amid court pressure

Halligan quits US attorney role amid court pressureSpeed Read Halligan’s position had already been considered vacant by at least one judge

-

‘The science is clear’

‘The science is clear’Instant Opinion Opinion, comment and editorials of the day

-

Judge clears wind farm construction to resume

Judge clears wind farm construction to resumeSpeed Read The Trump administration had ordered the farm shuttered in December over national security issues

-

Maduro’s capture: two hours that shook the world

Maduro’s capture: two hours that shook the worldTalking Point Evoking memories of the US assault on Panama in 1989, the manoeuvre is being described as the fastest regime change in history

-

A running list of the international figures Donald Trump has pardoned

A running list of the international figures Donald Trump has pardonedin depth The president has grown bolder in flexing executive clemency powers beyond national borders

-

A running list of US interventions in Latin America and the Caribbean after World War II

A running list of US interventions in Latin America and the Caribbean after World War IIin depth Nicolás Maduro isn’t the first regional leader to be toppled directly or indirectly by the US

-

The billionaires’ wealth tax: a catastrophe for California?

The billionaires’ wealth tax: a catastrophe for California?Talking Point Peter Thiel and Larry Page preparing to change state residency