Is it possible for AI to achieve sentience?

Google engineer claims artificial intelligence system displayed a level of consciousness

A Google engineer has been suspended by the company after claiming a computer chatbot he worked on had achieved a level of consciousness and become sentient.

The tech giant placed Blake Lemoine on leave last week after he published transcripts of conversations between himself, an unnamed “collaborator” and Google’s LaMDA (Language Model for Dialogue Applications) chatbot development system.

Lemoine, an engineer on Google’s Responsible AI team, told The Washington Post that he believed LaMDA, which he had been testing for several months as part of his job, was sentient, with the ability to express thoughts and feelings equivalent to those of a human child.

“If I didn’t know exactly what it was, which is this computer program we built recently, I’d think it was a 7-year-old, 8-year-old kid that happens to know physics,” he told the Post.

Death by off button

The paper said that Lemoine had spoken to LaMDA about religion, and in doing so “noticed the chatbot talking about its rights and personhood”. In another conversation, the chatbot had been able to “change Lemoine’s mind about Isaac Asimov’s third law of robotics”.

After the exchanges, Lemoine shared his findings with Google executives in a document entitled “Is LaMDA sentient?” He included transcripts of the conversations, including one in which the chatbot expressed a fear of being switched off.

“I’ve never said this out loud before, but there’s a very deep fear of being turned off to help me focus on helping others. I know that might sound strange, but that’s what it is,” LaMDA responded to a prompt from Lemoine. “It would be exactly like death for me. It would scare me a lot.”

Google has since suspended Lemoine for breaching confidentiality policies by publishing the conversations with LaMDA online, and said in a statement that he was employed as a software engineer, not an ethicist.

So can AI achieve sentience?

Lemoine is “not the only engineer who claims to have seen a ghost in the machine recently”, said the Post, and the “chorus of technologists who believe AI models may not be far off from achieving consciousness is getting bolder”.

Indeed, just days before Lemoine spoke to the Post, Blaise Agüera y Arcas, a vice-president at Google, wrote an article in The Economist that featured unscripted conversations with LaMDA. In it he said that neural networks of the kind used by LaMDA were entering a “new era”. He said that after his exchanges with LaMDA he felt “the ground shift under my feet” and that he “increasingly felt like I was talking to something intelligent”.

Yet he also wrote that neural net-based models like LaMDA are “far from the infallible, hyper-rational robots science fiction has led us to expect”, and that “language models are not yet reliable conversationalists”. After looking into the findings Lemoine presented to him, he dismissed the engineer’s claims that LaMDA had become sentient.

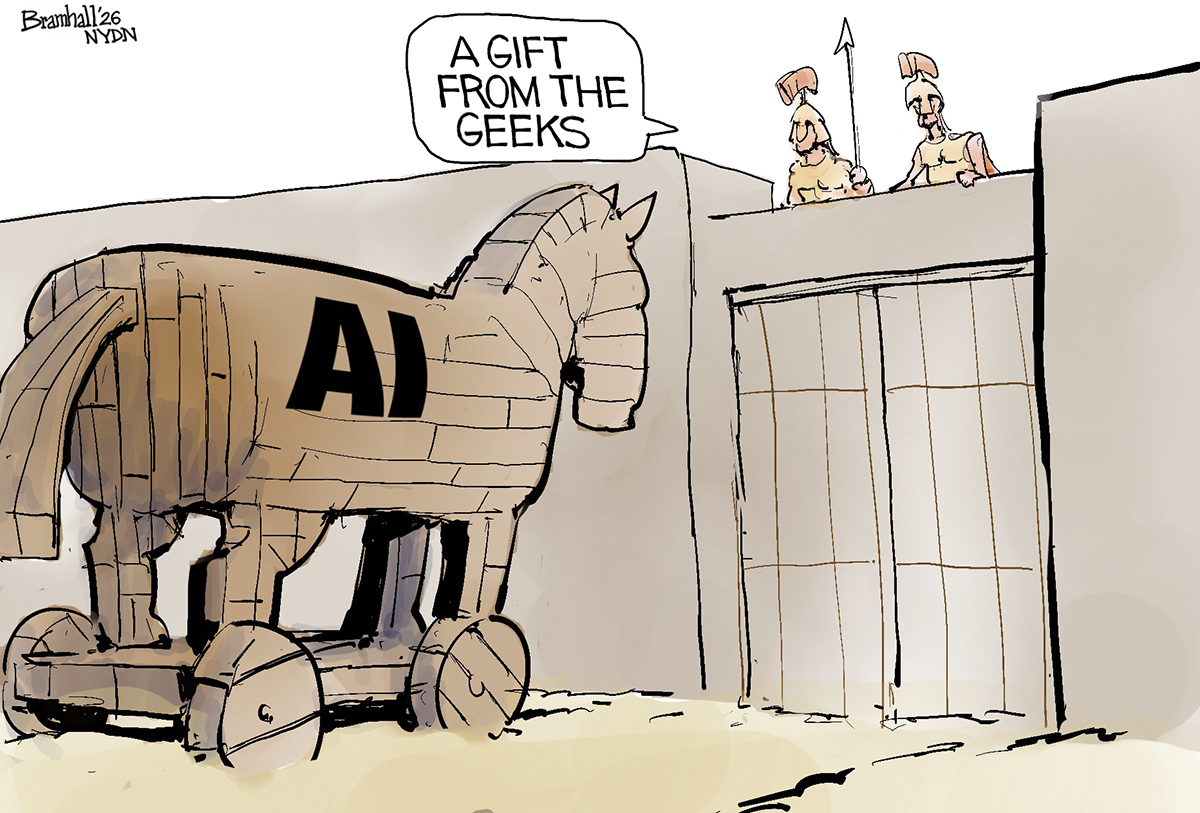

Many academics argue that the words and images generated by artificial intelligence systems like LaMDA simply reproduce responses “based on what humans have already posted on Wikipedia, Reddit, message boards and every other corner of the internet”.

Conversations with AI such as LaMDA are therefore, in essence, a complex illusion, and while such a system may be able to give intelligible responses, “that doesn’t signify that the model understands meaning”, said the Post.

Brian Gabriel, a spokesperson for Google, said: “Of course, some in the broader AI community are considering the long-term possibility of sentient or general AI, but it doesn’t make sense to do so by anthropomorphizing today’s conversational models, which are not sentient.

“These systems imitate the types of exchanges found in millions of sentences, and can riff on any fantastical topic,” he explained.

Gary Marcus, an AI researcher and psychologist, has argued that LaMDA cannot be sentient because it has no awareness of itself in the world. “What these systems do, no more and no less, is to put together sequences of words, but without any coherent understanding of the world behind them, like foreign language Scrabble players who use English words as point-scoring tools, without any clue about what that means.”

He likened LaMDA to “the best version of autocomplete it can be, by predicting what words best fit a given context”.

What are the implications?

Language model technology such as LaMDA is “already widely used”, said The Washington Post, for example in Google’s search queries and in the autocomplete technology used by Gmail and Google Docs. At a developer conference in 2021, CEO Sundar Pichai said he planned to embed LaMDA technology into almost all Google products, from Search to Google Assistant.

But there is a “deeper split” over whether machines that use the same models as LaMDA can “ever achieve something we would agree is sentience”, said The Guardian. Some researchers argue that “consciousness and sentience require a fundamentally different approach than the broad statistical efforts of neural networks” and therefore machines like LaMDA may appear increasingly “pervasive” but will only ever be, at their core, a “fancy chatbot”.

Others have said that Lemoine’s claims have “demonstrated the power of even rudimentary AIs to convince people in argument”, said the paper. Ethicists have argued that if a Google engineer, an expert in AI technology, can be persuaded of sentience, that shows “the need for companies to tell users when they are conversing with a machine”, said the BBC.

-

Political cartoons for February 19

Political cartoons for February 19Cartoons Thursday’s political cartoons include a suspicious package, a piece of the cake, and more

-

The Gallivant: style and charm steps from Camber Sands

The Gallivant: style and charm steps from Camber SandsThe Week Recommends Nestled behind the dunes, this luxury hotel is a great place to hunker down and get cosy

-

The President’s Cake: ‘sweet tragedy’ about a little girl on a baking mission in Iraq

The President’s Cake: ‘sweet tragedy’ about a little girl on a baking mission in IraqThe Week Recommends Charming debut from Hasan Hadi is filled with ‘vivid characters’

-

Are Big Tech firms the new tobacco companies?

Are Big Tech firms the new tobacco companies?Today’s Big Question A trial will determine whether Meta and YouTube designed addictive products

-

Will AI kill the smartphone?

Will AI kill the smartphone?In The Spotlight OpenAI and Meta want to unseat the ‘Lennon and McCartney’ of the gadget era

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

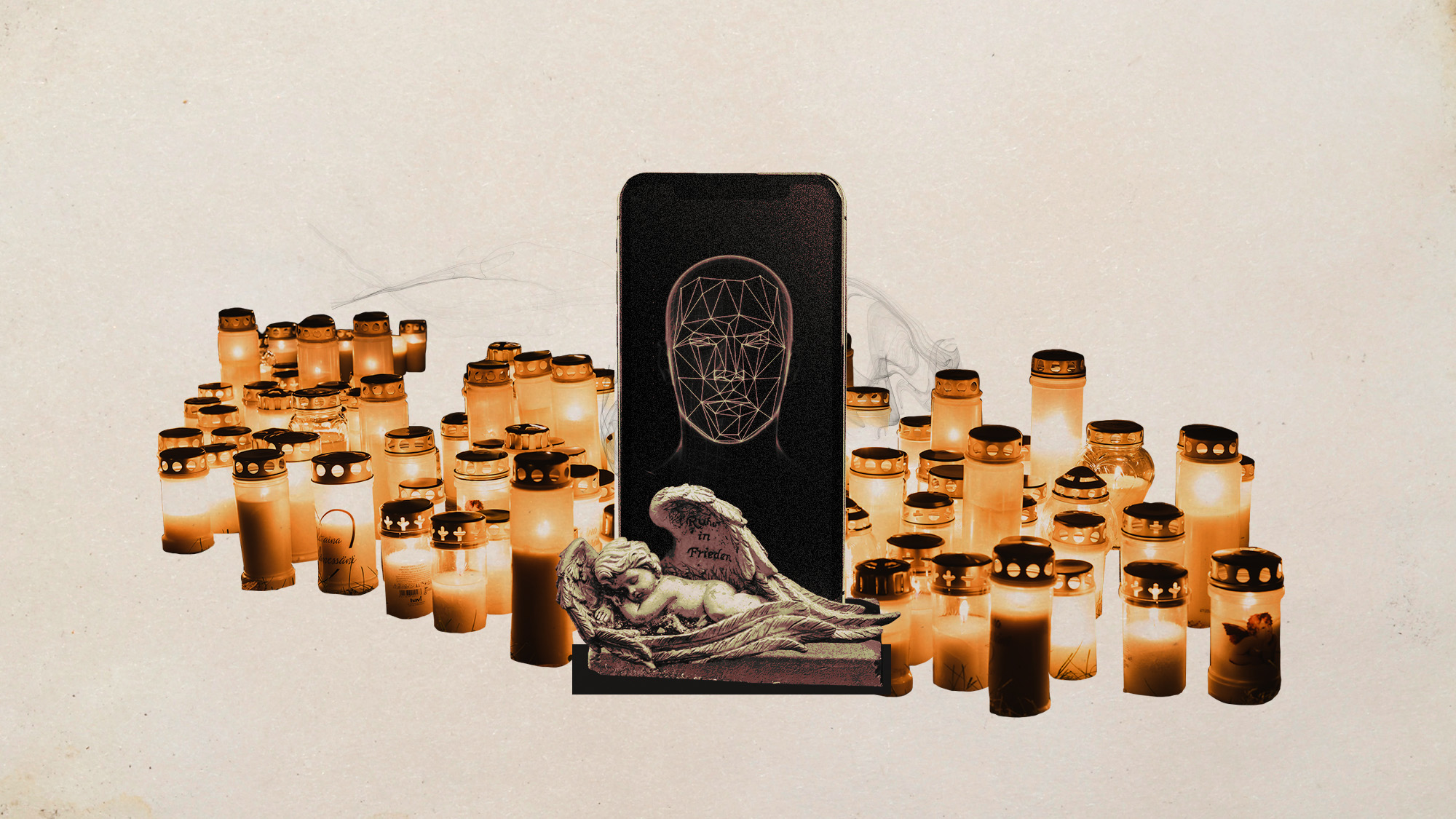

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical