Facebook shuts down experiment after AI invents own language

Researchers close project after machines develop own way of communicating unintelligible to humans

Bob: i can i i everything else . . . . . . . . . . . . . .

Alice: balls have zero to me to me to me to me to me to me to me to me to

Bob: you i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Bob: i i can i i i everything else . . . . . . . . . . . . . .

Alice: balls have a ball to me to me to me to me to me to me to me

Despite looking like the script from a truly terrible television sitcom, this conversation actually occurred between two Artificial Intelligence programs, developed inside Facebook in order to perfect the art of negotiation.

At first, the two were speaking to each other in plain English. But then researchers realised they’d made a mistake in the machines' programming.

"There was no reward to sticking to English language," Dhruv Batra, visiting research scientist from Georgia Tech at Facebook AI Research (Fair), told FastCo.

As these two agents competed to get the best deal, "neither was offered any sort of incentive for speaking as a normal person would," says FastCo.

So they began to diverge, eventually rearranging comprehensible words into seemingly nonsensical sentences, causing the experiment to be suspended.

"Agents (or programs) will drift off understandable language and invent codewords for themselves," explained Batra. "Like if I say 'the' five times, you interpret that to mean I want five copies of this item. This isn’t so different from the way communities of humans create shorthands."
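Batra's example of saying "the" five times can be illustrated with a short Python sketch. This is not Facebook's code: the function names `encode_quantity` and `decode_quantity` are hypothetical, and the scheme simply assumes that a quantity is signalled by repeating one agreed codeword.

```python
# Illustrative only: a toy version of the shorthand Batra describes,
# where repeating a codeword N times means "I want N of this item".
# The function names are hypothetical and not from the Facebook project.

def encode_quantity(quantity: int, codeword: str = "the") -> str:
    """Express a quantity by repeating the codeword that many times."""
    return " ".join([codeword] * quantity)


def decode_quantity(utterance: str, codeword: str = "the") -> int:
    """Recover the quantity by counting occurrences of the codeword."""
    return sum(1 for token in utterance.lower().split() if token == codeword)


message = encode_quantity(5)
print(message)
print(decode_quantity(message))
```

To an outside reader the message looks like nonsense, but two parties that share the convention can recover the number exactly.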

But the researchers were keen to stress that the experiment was not abandoned because of any worries about the potentially negative effects of the robots communicating.

Fair researcher Mike Lewis told FastCo the team had simply decided that "our interest was having bots who could talk to people," not bots that could talk efficiently to each other, and so opted to require them to write to each other legibly.

This is not the first time Artificial Intelligence machines have appeared to create their own language.

In November last year, Google revealed that the AI it uses for its Translate tool had created its own language, which it would translate phrases into and out of before delivering words in the target language.

Reporting on the development at the time, TechCrunch said: "This 'interlingua' seems to exist as a deeper level of representation that sees similarities between a sentence or a word."

"It could be something sophisticated, or it could be something simple," added the website, but "the fact that it exists at all — an original creation of the system’s own to aid in its understanding of concepts it has not been trained to understand — is, philosophically speaking, pretty powerful stuff".

These recent developments have led to ethical discussions about the potential problems of machines communicating with one another without human intervention.

"In the Facebook case, the only thing the chatbots were capable of doing was coming up with a more efficient way to trade," says Gizmodo.

But there are "probably good reasons not to let intelligent machines develop their own language which humans would not be able to meaningfully understand," the website adds.

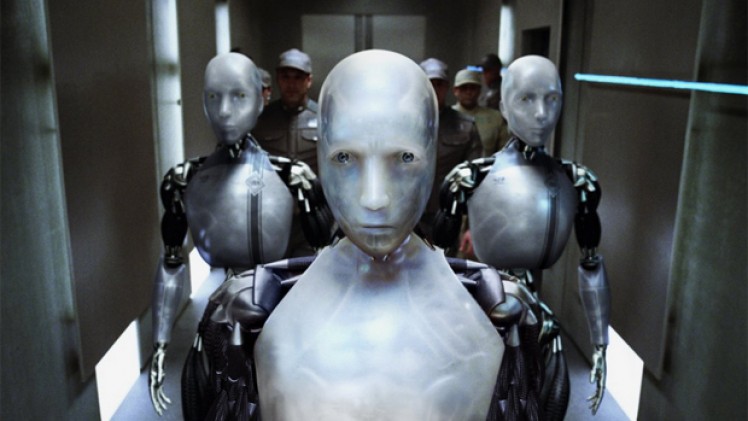

"Hopefully humans will also be smart enough not to plug experimental machine learning programs into something very dangerous, like an army of laser-toting androids or a nuclear reactor."