Microsoft’s Bing search engine serving up child sex abuse images, says report

Researchers claim even seemingly innocuous search terms brought up illegal porn

Microsoft’s Bing not only shows illegal images depicting child sexual abuse but also suggests search terms to help find them, according to a new report.

Researchers at Israel-based online safety start-up AntiToxin were commissioned by TechCrunch to investigate “an anonymous tip” that suggested it was “easy to find” child pornography on Microsoft’s search engine.

The study found that searching for terms such as “porn kids” and “nude family kids” surfaced images of “illegal child exploitation”, the tech site says.

But the researchers also discovered that some seemingly innocuous terms could lead to illegal images.

Users searching for “Omegle Kids”, referring to a video chat app popular among teenagers, were prompted to search for the term “Omegle Kids Girls 13”, which produced child abuse pictures, The Daily Telegraph says.

The researchers were “closely supervised by legal counsel” when conducting the study, adds TechCrunch, as searching for child pornography online is illegal.

Responding to the report, Microsoft’s vice president of Bing and AI products, Jordi Ribas, said: “Clearly, these results were unacceptable under our standards and policies and we appreciate TechCrunch making us aware.

“We’re focused on learning from this so we can make any other improvements needed.”

Microsoft isn’t the only tech giant struggling to tackle the problem.

In September, Israel-based safety groups Netivei Reshet and Screensaverz concluded that it was “easy” to find WhatsApp groups that posted and shared explicit images of children, according to CNET.

WhatsApp responded by saying it had a “zero-tolerance policy around child sexual abuse” and claimed it had removed 130,000 accounts in the space of ten days, the tech site adds.

-

Switzerland could vote to cap its population

Switzerland could vote to cap its populationUnder the Radar Swiss People’s Party proposes referendum on radical anti-immigration measure to limit residents to 10 million

-

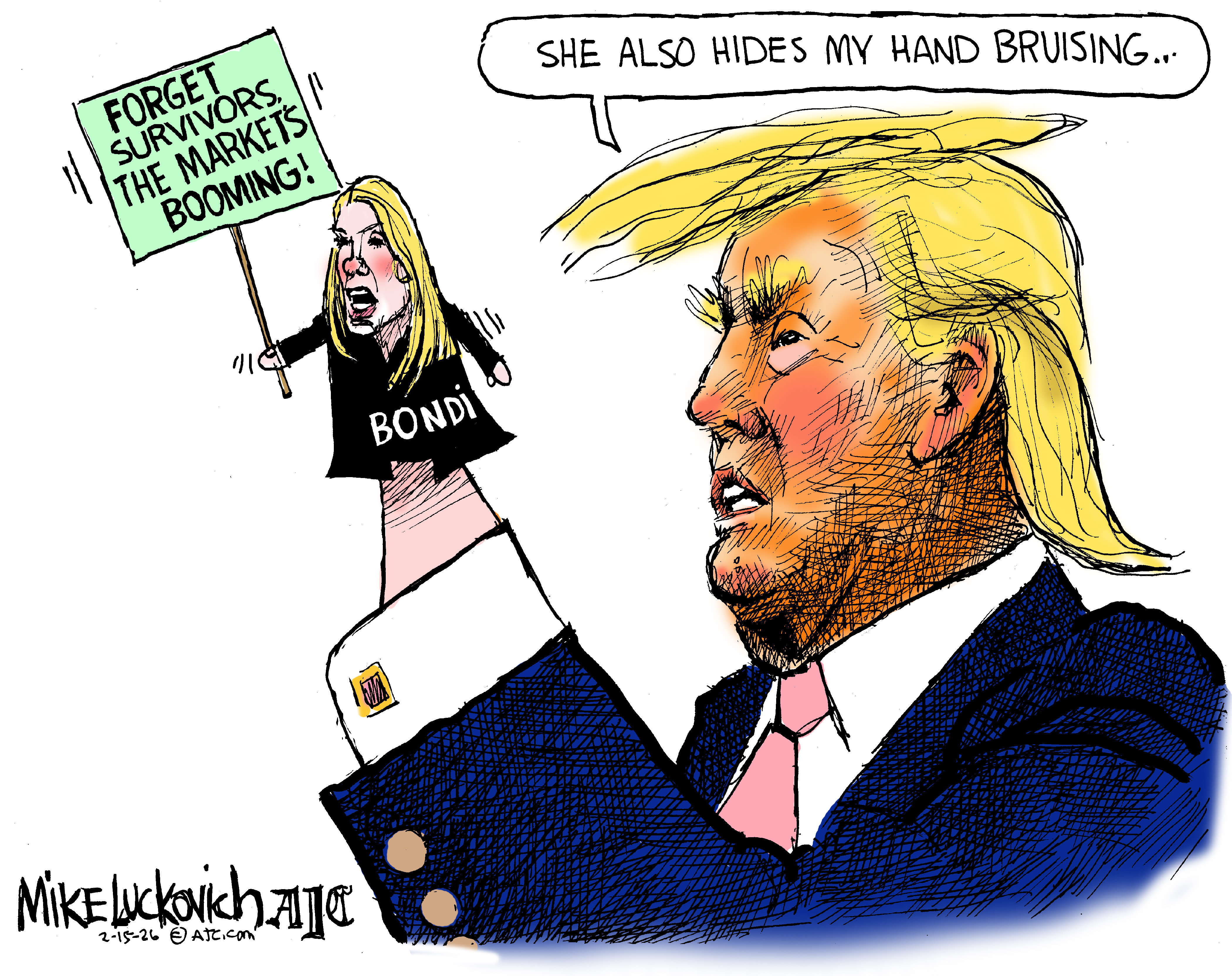

Political cartoons for February 15

Political cartoons for February 15Cartoons Sunday's political cartoons include political ventriloquism, Europe in the middle, and more

-

The broken water companies failing England and Wales

The broken water companies failing England and WalesExplainer With rising bills, deteriorating river health and a lack of investment, regulators face an uphill battle to stabilise the industry

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

Microsoft pursues digital intelligence ‘aligned to human values’ in shift from OpenAI

Microsoft pursues digital intelligence ‘aligned to human values’ in shift from OpenAIUNDER THE RADAR The iconic tech giant is jumping into the AI game with a bold new initiative designed to place people first in the search for digital intelligence

-

How the online world relies on AWS cloud servers

How the online world relies on AWS cloud serversThe Explainer Chaos caused by Monday’s online outage shows that ‘when AWS sneezes, half the internet catches the flu’

-

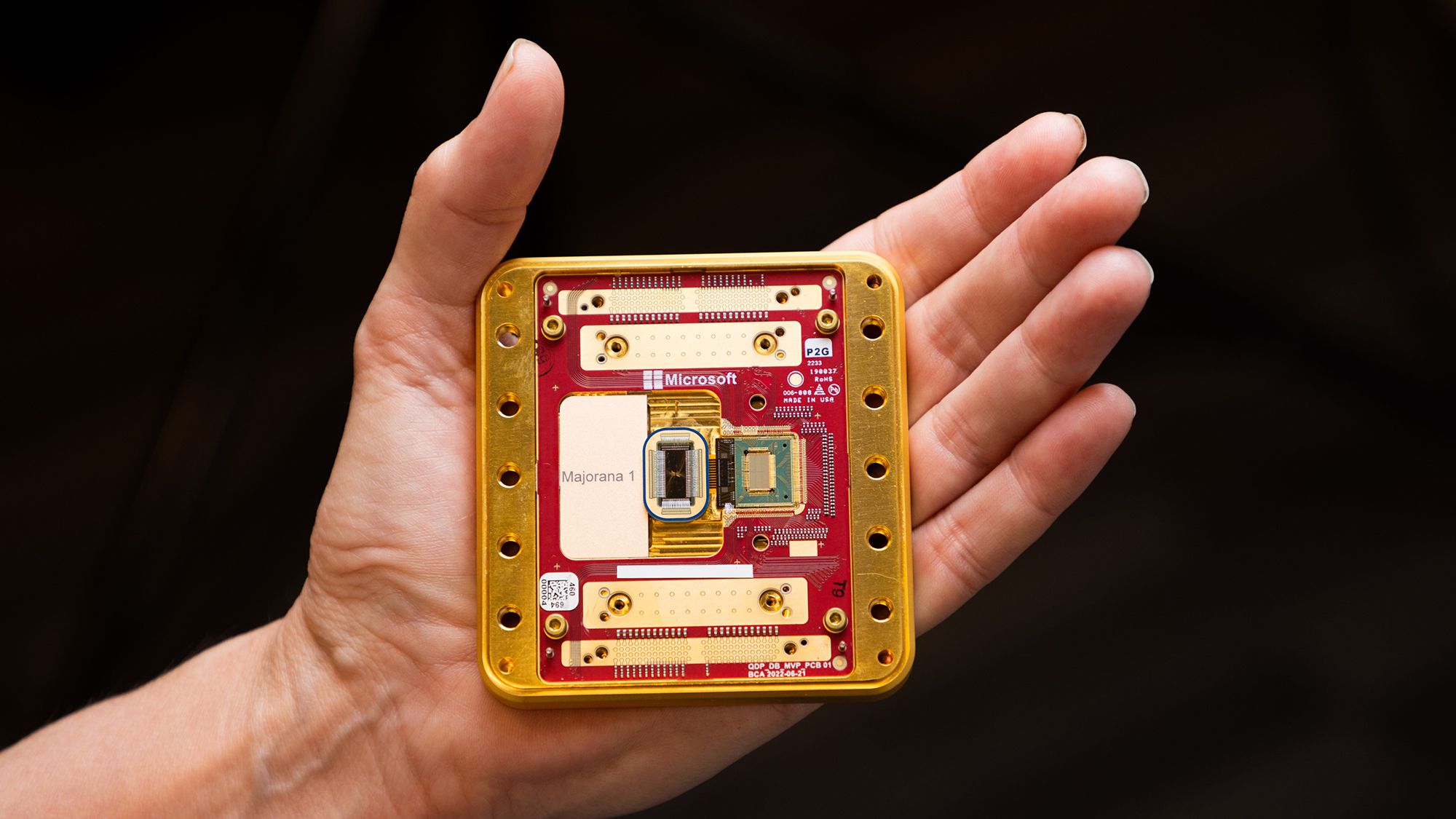

Microsoft unveils quantum computing breakthrough

Microsoft unveils quantum computing breakthroughSpeed Read Researchers say this advance could lead to faster and more powerful computers

-

Microsoft's Three Mile Island deal: How Big Tech is snatching up nuclear power

Microsoft's Three Mile Island deal: How Big Tech is snatching up nuclear powerIn the Spotlight The company paid for access to all the power made by the previously defunct nuclear plant

-

Video games to play this fall, from 'Call of Duty: Black Ops 6' to 'Assassin's Creed Shadows'

Video games to play this fall, from 'Call of Duty: Black Ops 6' to 'Assassin's Creed Shadows'The Week Recommends 'Assassin's Creed' goes to feudal Japan, and a remaster of horror classic 'Silent Hill 2' drops

-

Will the Google antitrust ruling shake up the internet?

Will the Google antitrust ruling shake up the internet?Today's Big Question And what does that mean for users?

-

CrowdStrike: the IT update that wrought global chaos

CrowdStrike: the IT update that wrought global chaosTalking Point 'Catastrophic' consequences of software outages made apparent by last week's events