How tech encoded the culture wars

Is it time for big tech to take responsibility for what is said on their platforms?

He zucked up.

Asked this week to explain why hoax site Infowars was still allowed to post to Facebook, CEO Mark Zuckerberg told Recode's Kara Swisher that the company had to give users leeway because it's difficult to impugn intent. Things took a left turn, however, when Zuckerberg used Holocaust deniers as an example, suggesting that it wasn't the platform's place to determine whether such people really intend to mislead. The blowback was fierce and immediate enough that the Facebook CEO was forced to issue a clarification only a few hours after his interview was published.

Yet, poor examples aside, this really is what the titans of tech seem to believe. And it is in some ways easy to understand. Facebook, Twitter, and other companies are simply adhering to what they see as the basic ideals of liberal democracy: fairness, equality, and free speech. As Zuckerberg stated in his clarification, "I believe that often the best way to fight offensive bad speech is with good speech."

That same commitment to liberal ideals is also precisely what has helped tech platforms become the battleground for a new sort of "culture war": a Trump-era conflict that pits progressives against those who think that concepts like racial and gender diversity represent a threat to established norms. In taking the stance that the Enlightenment values of neutrality and universalism are fit to police digital networks, tech companies are helping to encode the culture wars into their very DNA.

The most obvious problem is that the decision to leave up false, misleading, or outright incendiary posts allows the system to be gamed. As BuzzFeed's Charlie Warzel has convincingly argued, Facebook seems to misunderstand how its platform is deliberately misused by bad-faith actors who, for example, post false, inflammatory information and then remove it after the damage is already done. By dodging shifting standards for what can and cannot be taken down, those looking to spread misinformation can do considerable harm before their content is removed.

Yet there's also something deeper at work. It is no coincidence that the rise of social networks has coincided with so-called "both-sidesism," in which equal time and weight are given to opposite sides of a debate no matter how abhorrent or absurd one view might be. The most obvious example here would be President Trump's "there were very good people on both sides" comment after the white supremacist march and terrorist attack in Charlottesville. But the trend that has seen men's rights groups or racists take on the language of oppression points to the way in which a neutral or universal approach to content ends up fostering a climate in which patently awful things are talked about as if they were no different from the ordinary.

To be clear, the idea that a private company should get to determine what is true or right, especially when a company like Facebook is immensely popular, is deeply disturbing. While one might breezily claim a site like Infowars should be banned, there are countless edge cases that are less clear-cut.

But as New York's Max Read points out, relying on notions of free speech and equality, ideas key to liberal democracies, is at best hypocritical when the checks and balances of a representative government are missing from private companies like Facebook and Twitter. In that regard, social media companies are more like dictatorships. In outsourcing the infrastructure for public discourse to a handful of companies on America's West Coast, we have also given up the ability to have a say through voting, policy, or other forms of pressure because we've allowed these organizations to take on a state-like function.

So what can be done? Read suggests that Facebook produce a kind of constitution to at least make the process of content removal transparent and consistent. There is also the more aggressive option of regulation, which would necessitate new laws to deal with the specificity of digital networks. More extreme would be actually breaking up these companies in order to mitigate how their scale helps produce these negative effects; considering the current regulatory climate, this option seems the least likely.

But as is clear in a world of fake news, the potential return of neo-fascism, and an increasingly polarized, manipulated public sphere, perhaps old principles are no longer as reliable as they once were, and we instead need to find a way to insist that digital networks take responsibility for the content on their platforms.

In not doing so, Facebook, Twitter, and others are simply encoding the culture wars into their DNA, and we are all worse off for it.

Navneet Alang is a technology and culture writer based out of Toronto. His work has appeared in The Atlantic, New Republic, Globe and Mail, and Hazlitt.

-

Palantir's growing influence in the British state

Palantir's growing influence in the British stateThe Explainer Despite winning a £240m MoD contract, the tech company’s links to Peter Mandelson and the UK’s over-reliance on US tech have caused widespread concern

-

Quiz of The Week: 7 – 13 February

Quiz of The Week: 7 – 13 FebruaryQuiz Have you been paying attention to The Week’s news?

-

Nordic combined: the Winter Olympics sport that bars women

Nordic combined: the Winter Olympics sport that bars womenIn The Spotlight Female athletes excluded from participation in demanding double-discipline events at Milano-Cortina

-

The billionaires’ wealth tax: a catastrophe for California?

The billionaires’ wealth tax: a catastrophe for California?Talking Point Peter Thiel and Larry Page preparing to change state residency

-

Bari Weiss’ ‘60 Minutes’ scandal is about more than one report

Bari Weiss’ ‘60 Minutes’ scandal is about more than one reportIN THE SPOTLIGHT By blocking an approved segment on a controversial prison holding US deportees in El Salvador, the editor-in-chief of CBS News has become the main story

-

Has Zohran Mamdani shown the Democrats how to win again?

Has Zohran Mamdani shown the Democrats how to win again?Today’s Big Question New York City mayoral election touted as victory for left-wing populists but moderate centrist wins elsewhere present more complex path for Democratic Party

-

Millions turn out for anti-Trump ‘No Kings’ rallies

Millions turn out for anti-Trump ‘No Kings’ ralliesSpeed Read An estimated 7 million people participated, 2 million more than at the first ‘No Kings’ protest in June

-

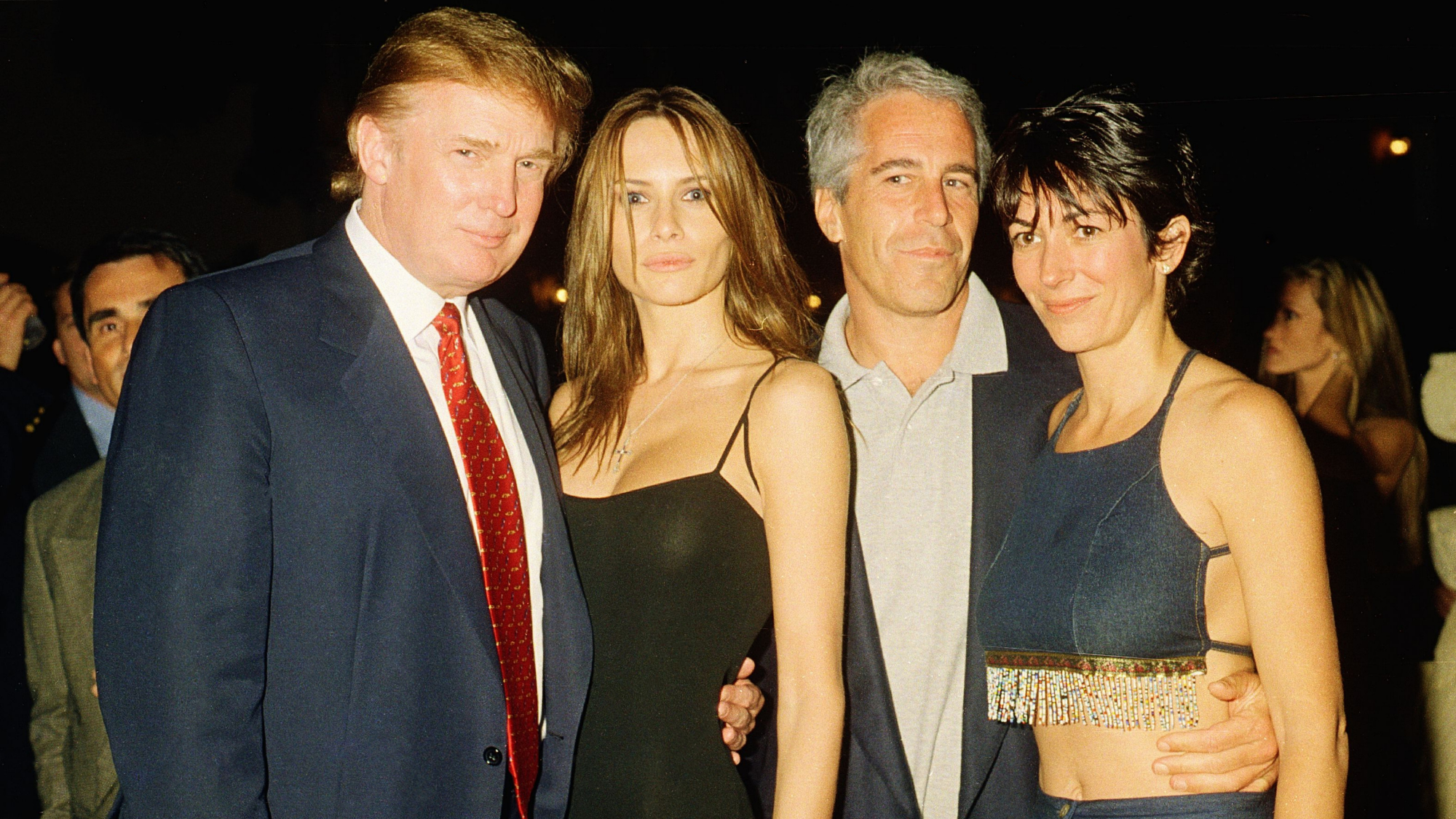

Ghislaine Maxwell: angling for a Trump pardon

Ghislaine Maxwell: angling for a Trump pardonTalking Point Convicted sex trafficker's testimony could shed new light on president's links to Jeffrey Epstein

-

The last words and final moments of 40 presidents

The last words and final moments of 40 presidentsThe Explainer Some are eloquent quotes worthy of the holders of the highest office in the nation, and others... aren't

-

The JFK files: the truth at last?

The JFK files: the truth at last?In The Spotlight More than 64,000 previously classified documents relating the 1963 assassination of John F. Kennedy have been released by the Trump administration

-

'Seriously, not literally': how should the world take Donald Trump?

'Seriously, not literally': how should the world take Donald Trump?Today's big question White House rhetoric and reality look likely to become increasingly blurred