Why Nancy Pelosi's attack on Facebook is dangerous

Beware the slippery slope of social media censorship

Democrats have rightly slammed President Trump for labeling the news media as "enemies of the people." But the nation's most powerful Democrat, House Speaker Nancy Pelosi, comes pretty close to making a similar accusation when she calls Facebook executives "willing enablers" of Russian election interference in 2016. As she told a San Francisco radio station: "We have said all along, 'Poor Facebook, they were unwittingly exploited by the Russians.' I think wittingly, because right now they are putting up something that they know is false."

Pelosi apparently sees the social media giant's unwillingness to remove a "cheapfake" altered video of her as evidence that company boss Mark Zuckerberg learned nothing from the Russia scandal. Or that he simply doesn't care about harmful content on his site as long as it engages users. Maybe both.

Pelosi's take-down demand is politically fascinating given how it publicly escalates the left's attack on Big Tech, an industry that favors Democrats. Facebook employees, for instance, have given nearly 10 times more campaign cash to Democrats than Republicans since it was founded in 2004. But the policy implications are more important and concerning. It's doubtful Pelosi really thought out the long-term implications of powerful politicians regularly pressuring social media firms to remove content that's not advocating illegal action. (Facebook said it would remove the video only if it came from a bogus account or threatened public safety.)

It's easy to imagine a certain "fake news" president demanding the removal of all manner of posts and videos. Maybe Trump will call for Saturday Night Live to remove "very unfair" sketches from its Facebook feed. This is a slippery slope coated in Teflon.

And it's not as if Facebook did nothing about the video. Far from it. The company reduced the video's appearance in news feeds and added pop-up menus telling users there is "additional reporting" on the video.

Yet Facebook could have done considerably more. That wimpy warning could have clearly stated that the video was a fake, especially given that it was evidently meant to misinform rather than just mock. And that warning could be more obvious and applied more quickly, says Alexios Mantzarlis, a fellow at TED who helped create Facebook's partnership with fact-checkers. There is obviously a lot of space between leaving a video up unmoderated and taking it down whenever some politician or activist objects.

And American policymakers should look closely at how other nations have been struggling to curb misinformation or hate speech online. In The Atlantic, historian Heidi Tworek points out how Germany's 2017 law for social media companies may have inadvertently publicized, via the Streisand Effect, the very speech it was designed to combat. Indeed, Pelosi's complaint about the video, manipulated to make her seem drunk, undoubtedly makes it a must-see, despite Facebook's mild warning. Tworek also notes that many nations are passing fuzzy laws to combat misinformation that seem likely to give governments greater power to suppress speech they don't like.

So many unintended consequences. Of course, that's pretty much the rule with any regulatory attempt. But it seems especially true when it comes to the internet and the dynamic, fast-evolving technology sector, where trade-offs, such as the tension between uncomfortable speech and freedom of expression, are ignored or simply overlooked. Europe's big privacy law, the General Data Protection Regulation, just marked its one-year anniversary. And along with a host of new privacy rights, Europeans have also received a great lesson in how public policy works. More personal control of data? Sure. But there also appears to be less investment in tech startups and a greater ad-market share for Google and Facebook. None of that was the law's intention, yet it's all still happening.

Surely it isn't Pelosi's intention to limit or chill free speech on the internet or enable government suppression of the rights of individuals and businesses. But she might have made it a bit more likely just the same.

James Pethokoukis is the DeWitt Wallace Fellow at the American Enterprise Institute where he runs the AEIdeas blog. He has also written for The New York Times, National Review, Commentary, The Weekly Standard, and other places.

-

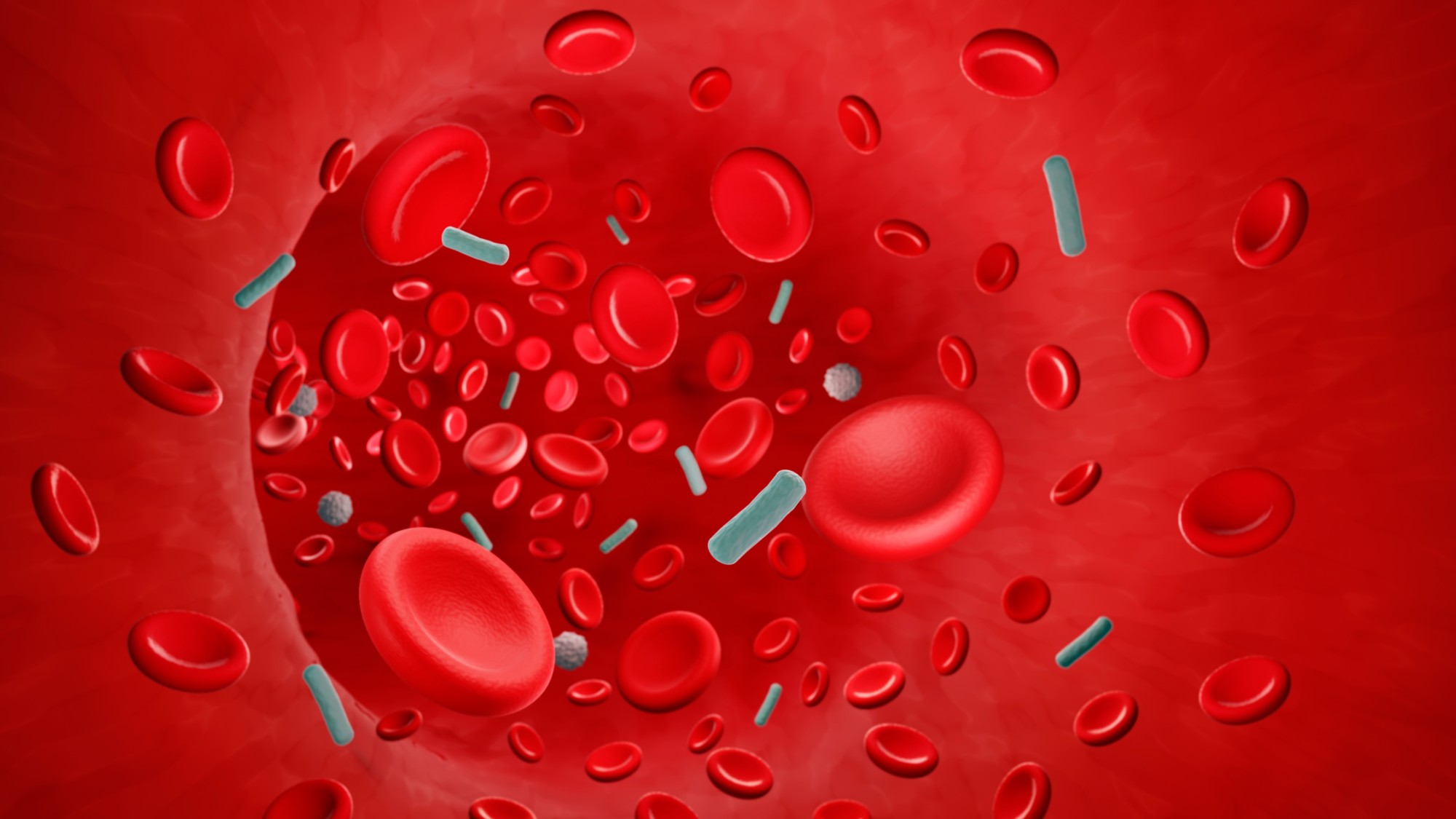

Sepsis ‘breakthrough’: the world’s first targeted treatment?

Sepsis ‘breakthrough’: the world’s first targeted treatment?The Explainer New drug could reverse effects of sepsis, rather than trying to treat infection with antibiotics

-

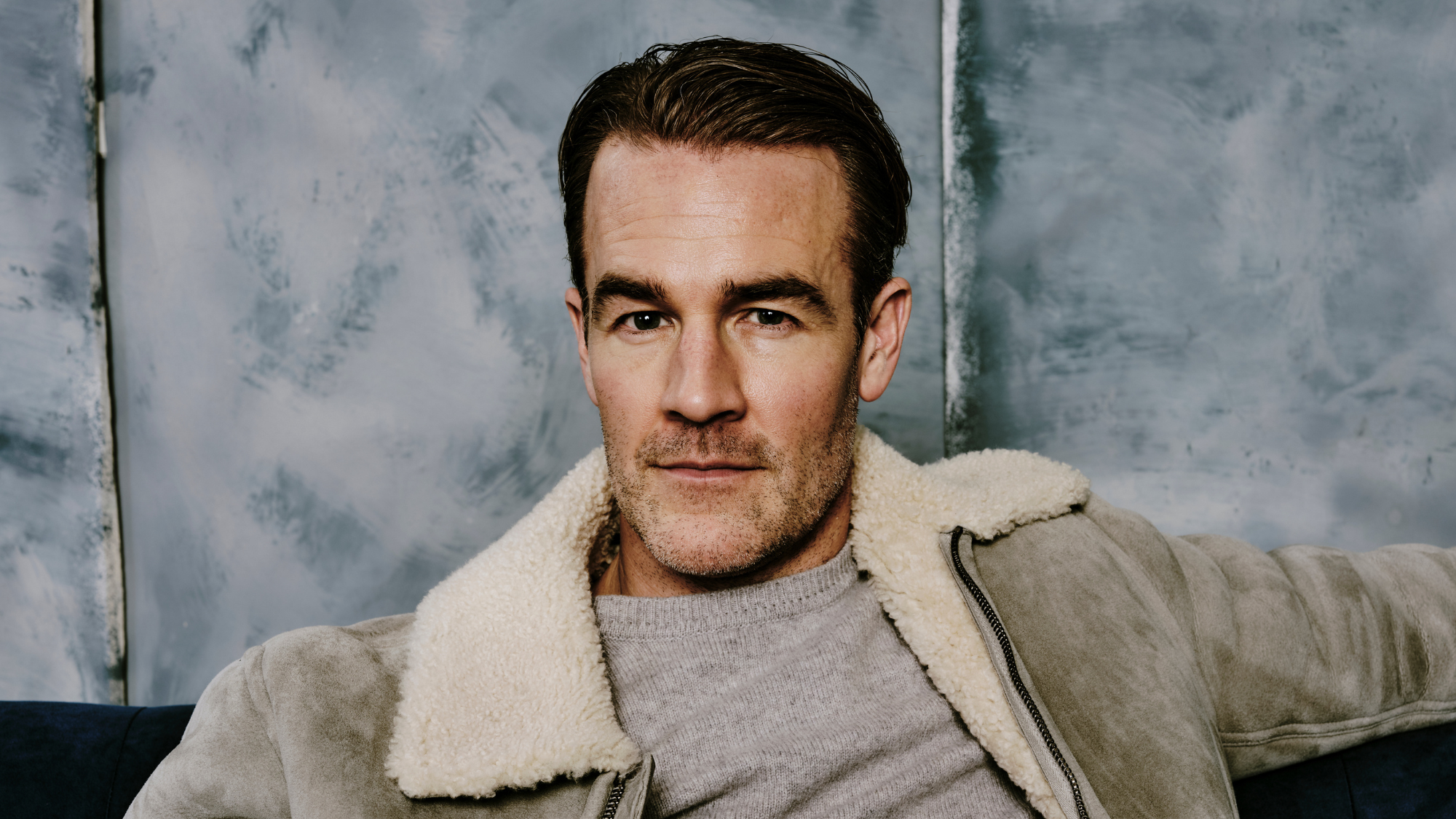

James Van Der Beek obituary: fresh-faced Dawson’s Creek star

James Van Der Beek obituary: fresh-faced Dawson’s Creek starIn The Spotlight Van Der Beek fronted one of the most successful teen dramas of the 90s – but his Dawson fame proved a double-edged sword

-

Is Andrew’s arrest the end for the monarchy?

Is Andrew’s arrest the end for the monarchy?Today's Big Question The King has distanced the Royal Family from his disgraced brother but a ‘fit of revolutionary disgust’ could still wipe them out

-

The billionaires’ wealth tax: a catastrophe for California?

The billionaires’ wealth tax: a catastrophe for California?Talking Point Peter Thiel and Larry Page preparing to change state residency

-

Bari Weiss’ ‘60 Minutes’ scandal is about more than one report

Bari Weiss’ ‘60 Minutes’ scandal is about more than one reportIN THE SPOTLIGHT By blocking an approved segment on a controversial prison holding US deportees in El Salvador, the editor-in-chief of CBS News has become the main story

-

Has Zohran Mamdani shown the Democrats how to win again?

Has Zohran Mamdani shown the Democrats how to win again?Today’s Big Question New York City mayoral election touted as victory for left-wing populists but moderate centrist wins elsewhere present more complex path for Democratic Party

-

Millions turn out for anti-Trump ‘No Kings’ rallies

Millions turn out for anti-Trump ‘No Kings’ ralliesSpeed Read An estimated 7 million people participated, 2 million more than at the first ‘No Kings’ protest in June

-

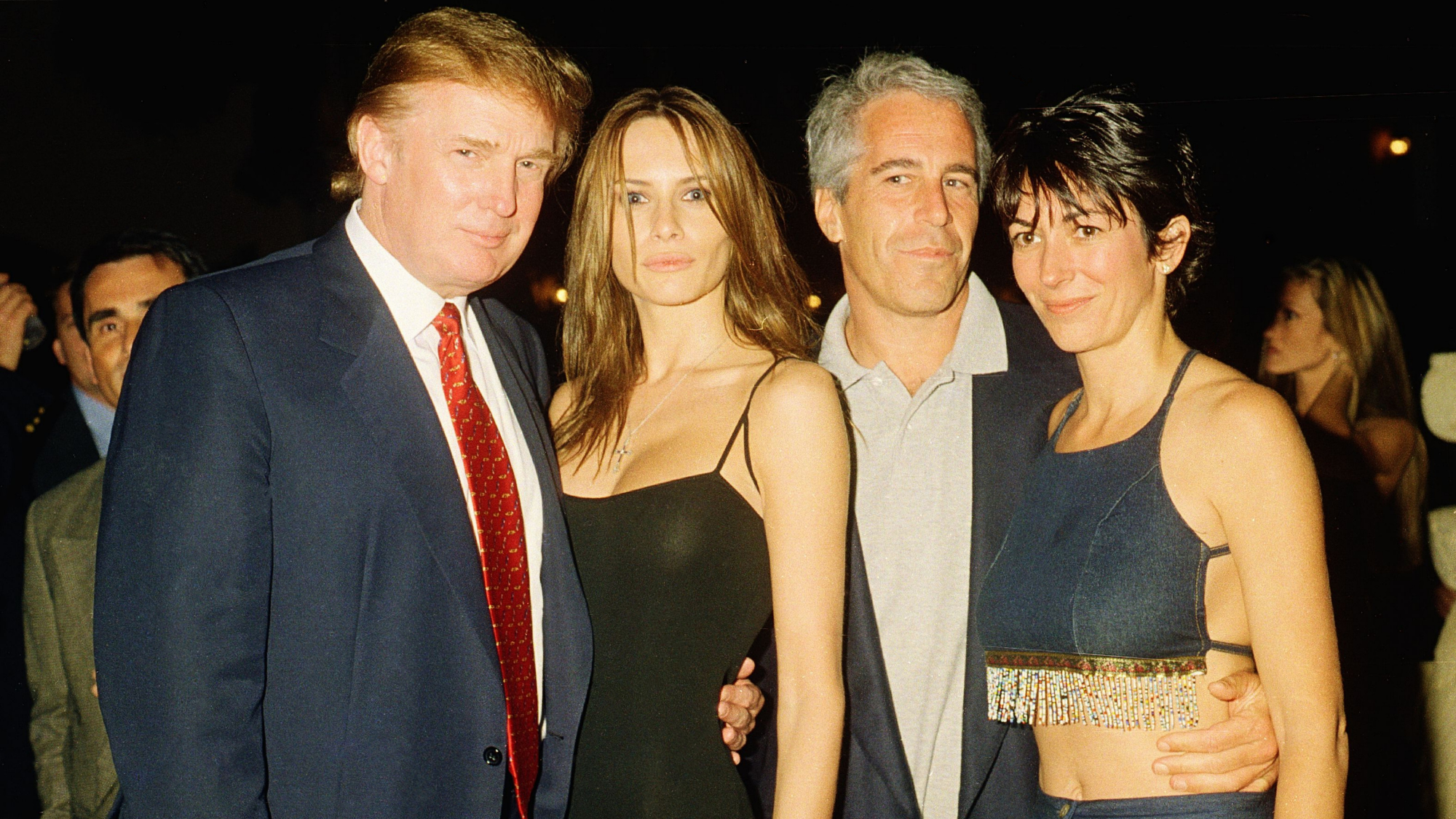

Ghislaine Maxwell: angling for a Trump pardon

Ghislaine Maxwell: angling for a Trump pardonTalking Point Convicted sex trafficker's testimony could shed new light on president's links to Jeffrey Epstein

-

The last words and final moments of 40 presidents

The last words and final moments of 40 presidentsThe Explainer Some are eloquent quotes worthy of the holders of the highest office in the nation, and others... aren't

-

The JFK files: the truth at last?

The JFK files: the truth at last?In The Spotlight More than 64,000 previously classified documents relating the 1963 assassination of John F. Kennedy have been released by the Trump administration

-

'Seriously, not literally': how should the world take Donald Trump?

'Seriously, not literally': how should the world take Donald Trump?Today's big question White House rhetoric and reality look likely to become increasingly blurred