Call for regulation to stop AI ‘eliminating the whole human race’

Professor said artificial intelligence could become as dangerous as nuclear weapons

Experts have called for global regulation to prevent out-of-control artificial intelligence systems that could end up “eliminating the whole human race”.

Researchers from Oxford University told MPs on the science and technology committee that just as humans wiped out the dodo, AI machines could eventually pose an “existential threat” to humanity.

The committee “heard how advanced AI could take control of its own programming”, said The Telegraph.

“With superhuman AI there is a particular risk that is of a different sort of class, which is, well, it could kill everyone,” said doctoral student Michael Cohen. If it is smarter than humans “across every domain” it could “presumably avoid sending any red flags while we still could pull the plug”.

Michael Osborne, professor of machine learning at Oxford, said that “the bleak scenario is realistic”. This is because, he explained, “we’re in a massive AI arms race… with the US versus China and among tech firms there seems to be this willingness to throw safety and caution out the window and race as fast as possible to the most advanced AI”.

There are “some reasons for hope in that we have been pretty good at regulating the use of nuclear weapons”, he said, adding that “AI is as comparable a danger as nuclear weapons”.

He hoped that countries across the globe would recognise the “existential threat” from advanced AI and agree treaties that would prevent the development of dangerous systems.

“Similar concerns appear to be shared by many scientists who work with AI,” said The Times, pointing to a survey in September by a team at New York University. It found that more than a third of 327 scientists who work with artificial intelligence agreed it is “plausible” that decisions made by AI “could cause a catastrophe this century that is at least as bad as an all-out nuclear war”.

As the Daily Mail put it: “The doomsday predictions have worrying parallels to the plot of science fiction blockbuster The Matrix, in which humanity is beholden to intelligent machines.”

All in all, though, said Time magazine when the New York University research came out, “the fact that ‘only’ 36% of those surveyed see a catastrophic risk as possible could be considered encouraging, since the remaining 64% don’t think the same way”.

Chas Newkey-Burden has been part of The Week Digital team for more than a decade and a journalist for 25 years, starting out on the irreverent football weekly 90 Minutes, before moving to lifestyle magazines Loaded and Attitude. He was a columnist for The Big Issue and landed a world exclusive with David Beckham that became the weekly magazine’s bestselling issue. He now writes regularly for The Guardian, The Telegraph, The Independent, Metro, FourFourTwo and the i news site. He is also the author of a number of non-fiction books.

-

James Van Der Beek obituary: fresh-faced Dawson’s Creek star

James Van Der Beek obituary: fresh-faced Dawson’s Creek starIn The Spotlight Van Der Beek fronted one of the most successful teen dramas of the 90s – but his Dawson fame proved a double-edged sword

-

Is Andrew’s arrest the end for the monarchy?

Is Andrew’s arrest the end for the monarchy?Today's Big Question The King has distanced the Royal Family from his disgraced brother but a ‘fit of revolutionary disgust’ could still wipe them out

-

Quiz of The Week: 14 – 20 February

Quiz of The Week: 14 – 20 FebruaryQuiz Have you been paying attention to The Week’s news?

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

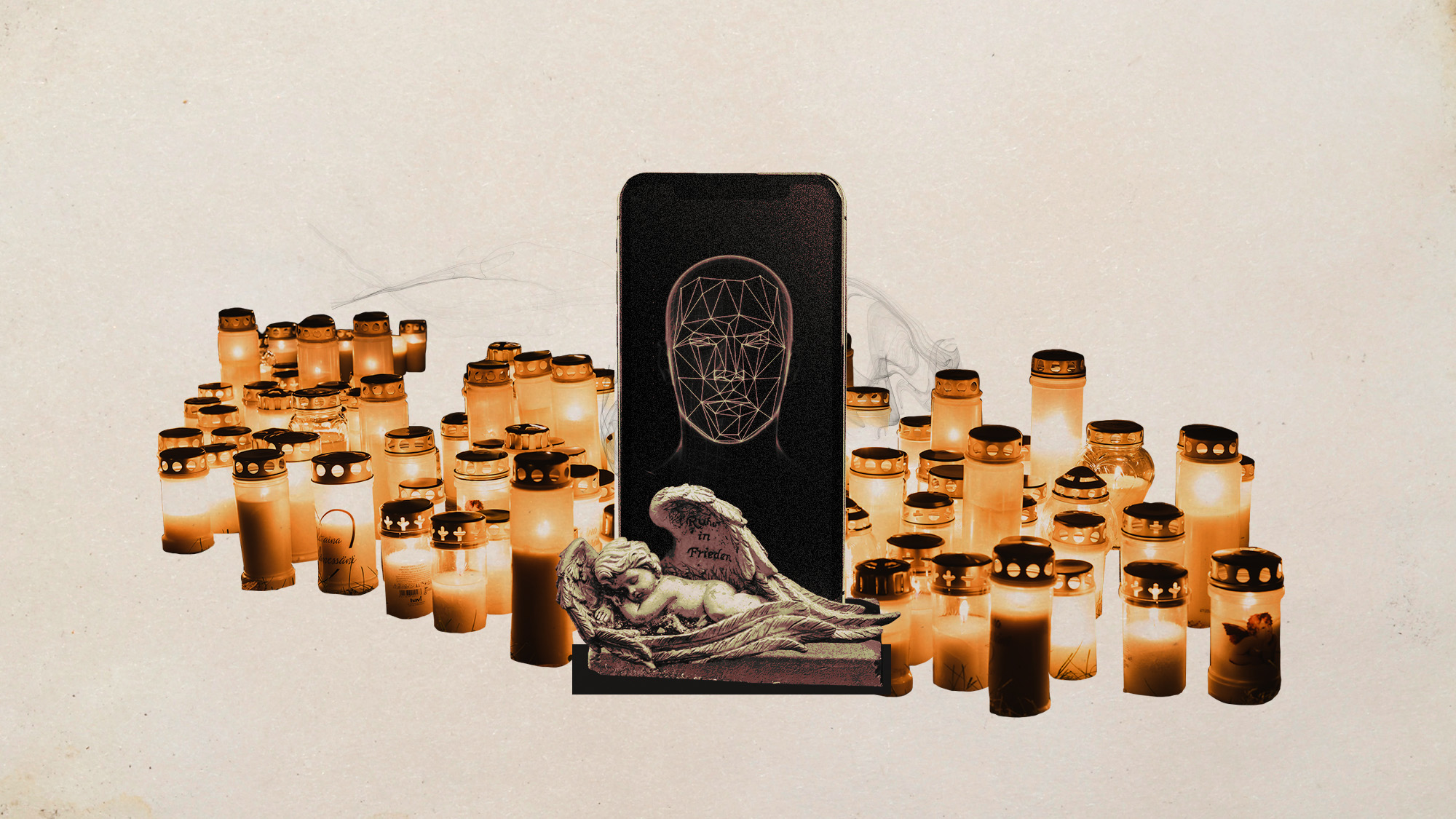

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical

-

The robot revolution

The robot revolutionFeature Advances in tech and AI are producing android machine workers. What will that mean for humans?

-

Separating the real from the fake: tips for spotting AI slop

Separating the real from the fake: tips for spotting AI slopThe Week Recommends Advanced AI may have made slop videos harder to spot, but experts say it’s still possible to detect them