Fake AI job seekers are flooding US companies

It's getting harder for hiring managers to screen out bogus AI-generated applicants

The introduction of generative artificial intelligence has complicated job seeking and hiring, causing confusion as the line between human beings and AI blurs. In the hands of bad actors, generative AI poses an emerging security threat to companies, which now face a flood of fake job seekers.

Fake job applicants 'ramped up massively'

Companies have long had to defend themselves from hackers "hoping to exploit vulnerabilities in their software, employees or vendors," but now "another threat has emerged," said CNBC. Employers are being inundated by applicants "who aren't who they say they are," who are "wielding AI tools to fabricate photo IDs, generate employment histories and provide answers during interviews." The spike in fake AI-generated applicants means that by 2028, 1 in 4 job candidates globally will be bogus, according to research and advisory firm Gartner.

Gen AI has "blurred the line between what it is to be human and what it means to be machine," said Vijay Balasubramaniyan, the CEO and co-founder of voice authentication startup Pindrop, to CNBC. As a result, "individuals are using these fake identities and fake faces and fake voices to secure employment," sometimes going so far as "doing a face swap with another individual who shows up for the job." Hiring a fake job seeker can put a company at risk of malware-driven ransom attacks and the theft of trade secrets or funds.

Industry experts said that cybersecurity and cryptocurrency firms have recently seen a surge in fake job seekers. Since these companies often hire for remote roles, they are particularly alluring targets for bad actors. News of the issue surfaced a year ago, but the number of fraudulent job candidates has "ramped up massively" this year, said Ben Sesser, the CEO of BrightHire, to CNBC. Humans are "generally the weak link in cybersecurity," and since hiring is an "inherently human process," it has become a "weak point that folks are trying to expose."

The fake applicants phenomenon "isn't limited to cybersecurity jobs," said Inc. Last year, the Justice Department alleged that "over 300 U.S. companies had accidentally hired impostors to work remote IT-related jobs." The employees were actually tied to North Korea, sending millions in wages home, which the DOJ alleged "would be used to help fund the authoritarian nation's weapons program."

Hiring managers in the dark

The fake employee industry has expanded to include criminal groups in Russia, China, Malaysia and South Korea, said Roger Grimes, a computer security consultant, to CNBC. Sometimes the impostors "do the role poorly," and other times "they perform it so well that I've actually had a few people tell me they were sorry they had to let them go."

Despite the DOJ case and a few other publicized incidents, hiring managers at most companies are generally unaware of the risks of fake job candidates, according to BrightHire's Sesser. Their responsibilities include talent strategy, but "being on the front lines of security has historically not been one of them," he said. "Folks think they're not experiencing it," but it is more likely that they are "just not realizing that it's going on."

Dawid Moczadlo, cofounder of cybersecurity startup Vidoc Security Lab, recently posted a video on LinkedIn of an interview with a deepfake AI job candidate, "which serves as a master class in potential red flags," Fortune said. The audio and video of the Zoom call didn't quite sync up, and the video quality also seemed off. When the person was moving and speaking, there was "different shading on his skin," and it "looked very glitchy, very strange," Moczadlo said to Fortune. "Before this happened, we just gave people the benefit of the doubt, that maybe their camera is broken," he said. But after the incident, "if they don't have their real camera on, we will just completely stop" the interview.

Theara Coleman has worked as a staff writer at The Week since September 2022. She frequently writes about technology, education, literature and general news. She was previously a contributing writer and assistant editor at Honeysuckle Magazine, where she covered racial politics and cannabis industry news.

-

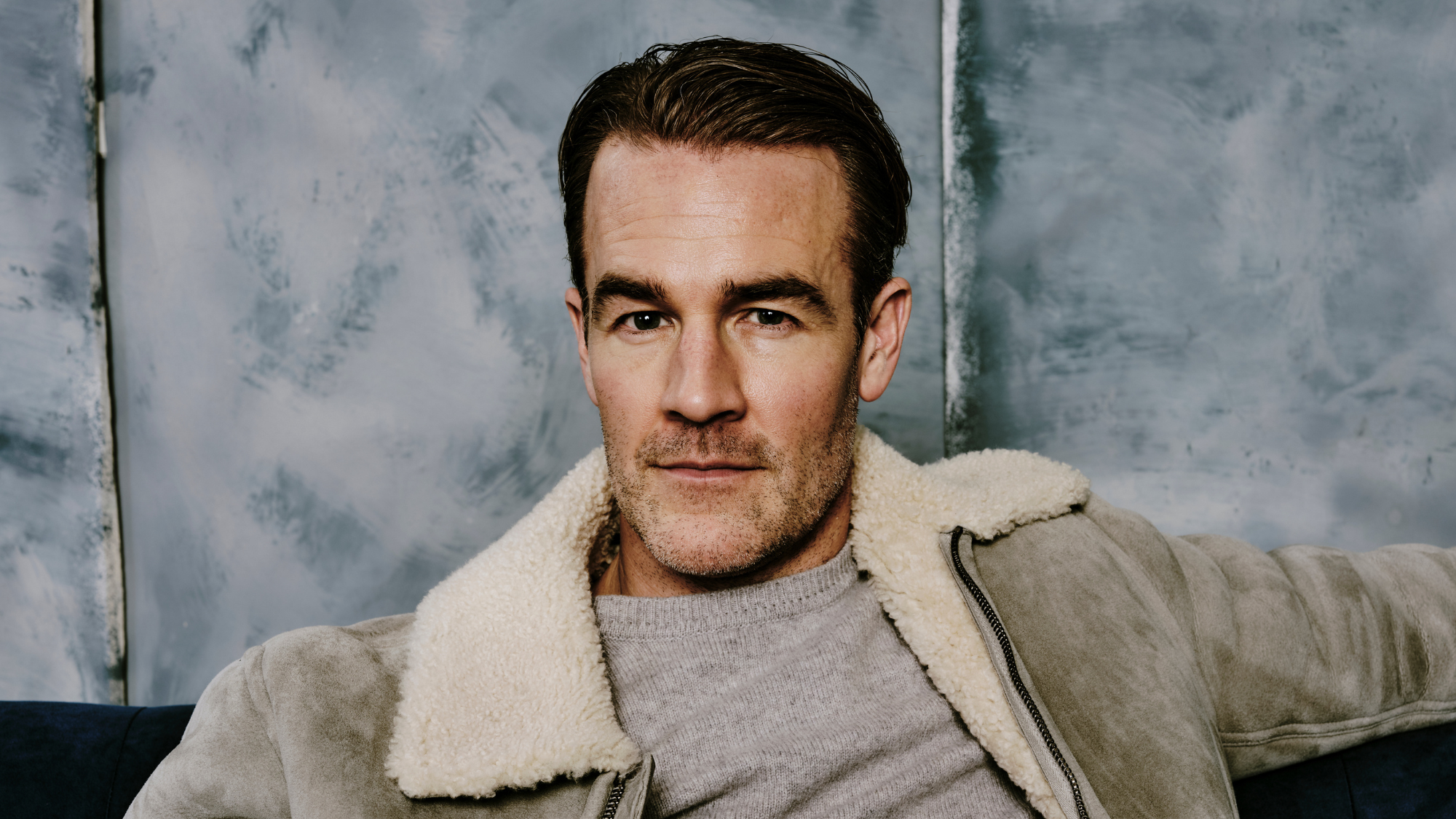

James Van Der Beek obituary: fresh-faced Dawson’s Creek star

James Van Der Beek obituary: fresh-faced Dawson’s Creek starIn The Spotlight Van Der Beek fronted one of the most successful teen dramas of the 90s – but his Dawson fame proved a double-edged sword

-

Is Andrew’s arrest the end for the monarchy?

Is Andrew’s arrest the end for the monarchy?Today's Big Question The King has distanced the Royal Family from his disgraced brother but a ‘fit of revolutionary disgust’ could still wipe them out

-

Quiz of The Week: 14 – 20 February

Quiz of The Week: 14 – 20 FebruaryQuiz Have you been paying attention to The Week’s news?

-

Elon Musk’s pivot from Mars to the moon

Elon Musk’s pivot from Mars to the moonIn the Spotlight SpaceX shifts focus with IPO approaching

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

What is Roomba’s legacy after iRobot bankruptcy?

What is Roomba’s legacy after iRobot bankruptcy?In the Spotlight Tariffs and cheaper rivals have displaced the innovative robot company

-

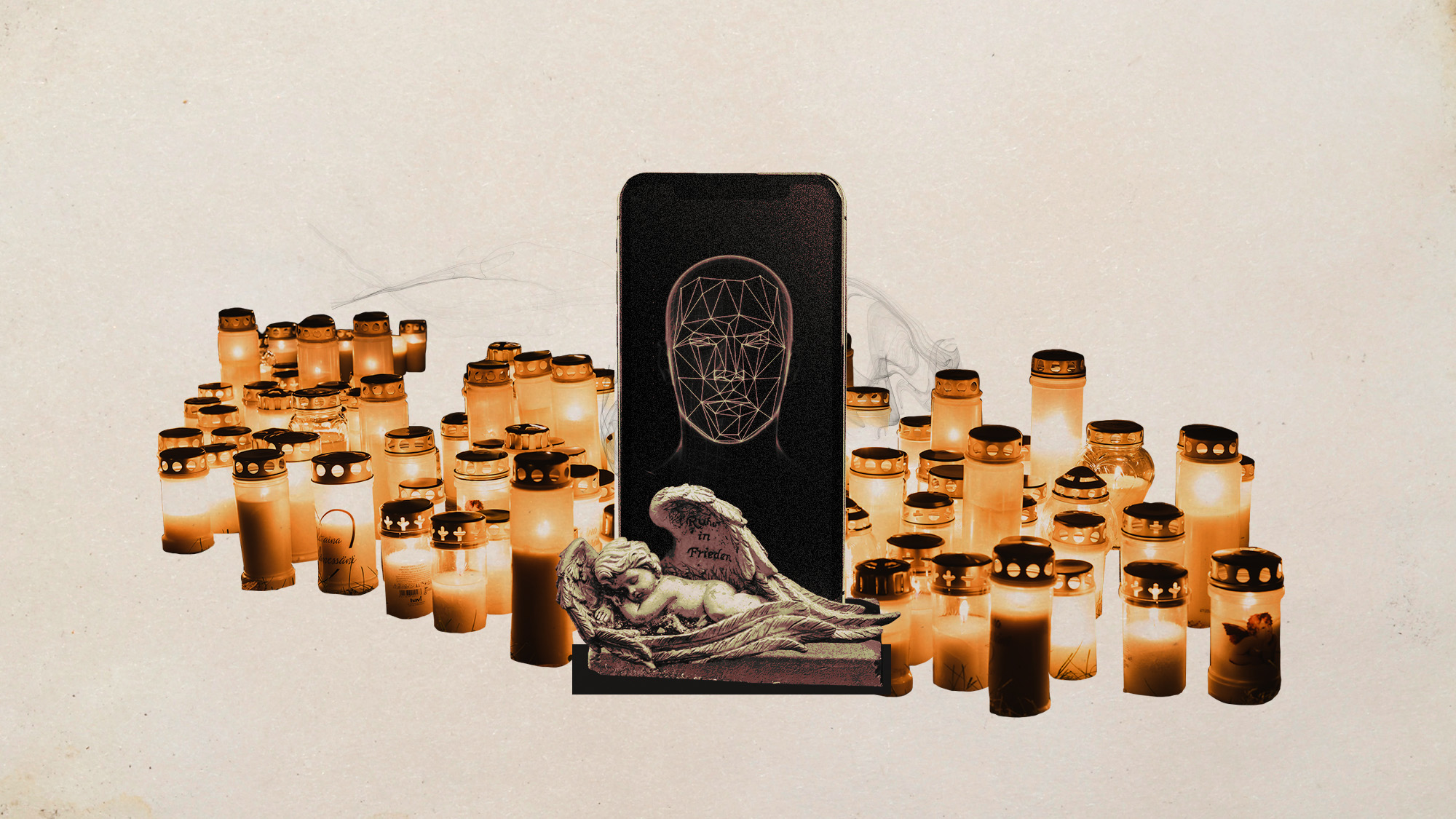

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical