How AI is used in UK train stations

Image recognition software that can track passenger emotions pits privacy concerns against efficiency and safety improvements

Thousands of passengers passing through some of the UK's busiest train stations have been scanned with AI cameras that can predict their age, gender and even their emotions.

Newly released documents from Network Rail, obtained by civil liberties group Big Brother Watch, reveal how people travelling through London Euston, London Waterloo, Manchester Piccadilly, Leeds and other smaller stations have had their faces scanned by Amazon AI software over the past two years.

AI on UK public transport

Object recognition is a type of machine learning that can identify items in video feeds. In the case of Network Rail, AI surveillance technology was used to analyse CCTV footage to alert staff to safety incidents, reduce fare dodging and make stations more efficient.

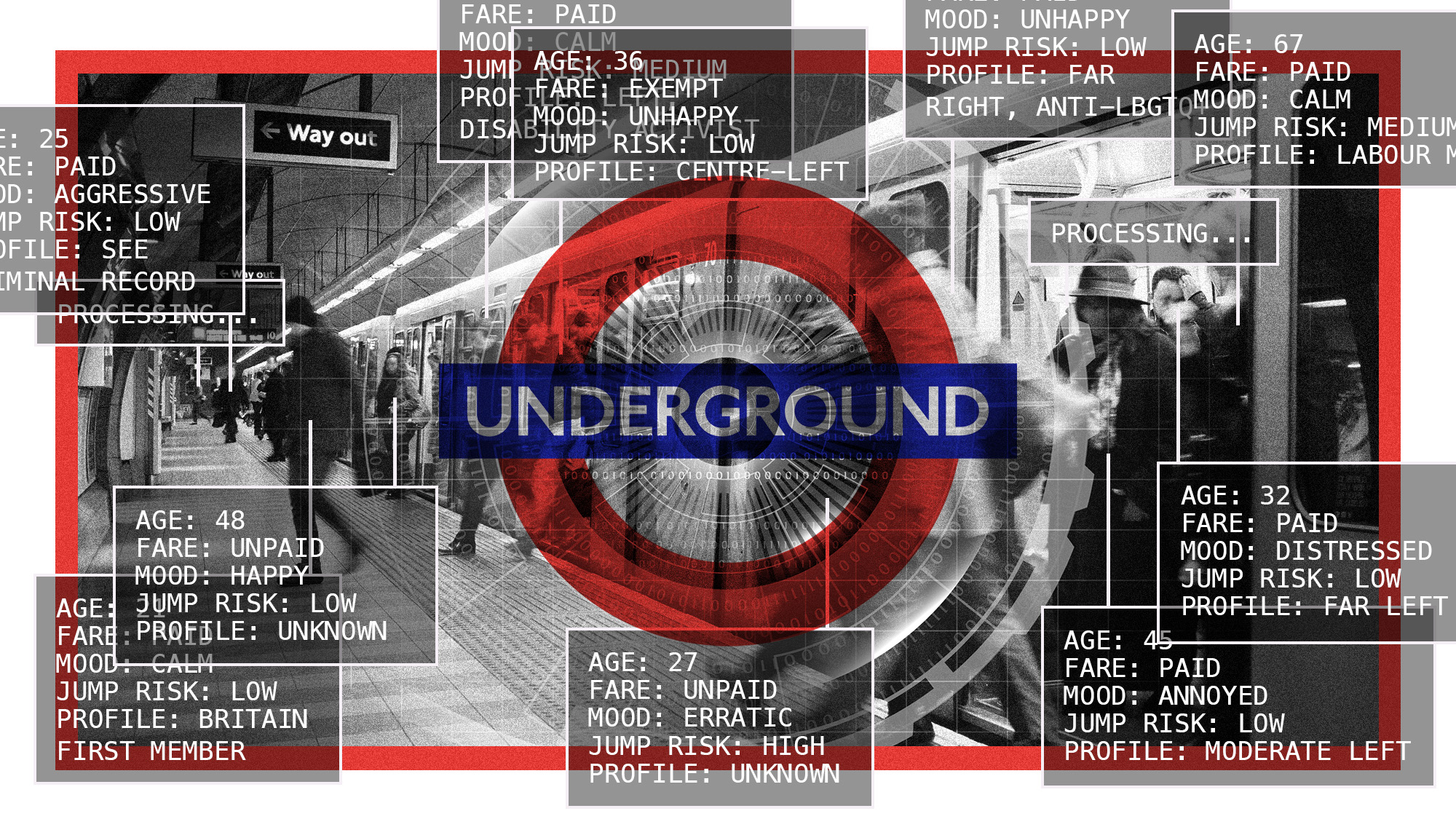

Transport for London (TfL) has also trialled cameras enabled with AI and the results are "startling", said James O'Malley in The House magazine, "revealing the potential, both good and bad".

According to Freedom of Information (FoI) requests, a two-week trial in 2019 explored whether a special AI camera pointed at ticket barriers could monitor whether more people were queuing to enter or to exit the station and automatically switch the direction of the barriers. The result was an increase in passenger throughput of as much as 30% and a reduction in queue times of up to 90%.

An even more ambitious recent trial at Willesden Green in northwest London used AI software tapped into the CCTV feed to transform it into a "smart station" capable of spotting up to 77 different "use cases". These ranged from discarded newspapers to people standing too close to the platform edge, and were used to help reduce fare evasion and alert staff to aggressive behaviour.

Dangers of 'emotional AI'

One of the most eye-catching sections of the Network Rail documents described how Amazon's Rekognition system, which allows face and object analysis, was deployed to "predict travellers' age, gender, and potential emotions – with the suggestion that the data could be used in advertising systems in the future", said Wired.

The documents said that the AI's capability to classify facial expressions such as happy or angry could be used to perceive customer satisfaction, and that "this data could be utilised to maximum advertising and retail revenue".

AI researchers have "frequently warned that using the technology to detect emotions is unreliable", said Wired.

"Because of the subjective nature of emotions, emotional AI is especially prone to bias," said Harvard Business Review. AI is often also "not sophisticated enough to understand cultural differences in expressing and reading emotions, making it harder to draw accurate conclusions".

The use of AI to read emotions is a "slippery slope", Carissa Véliz, an associate professor of philosophy at the Institute for Ethics in AI, University of Oxford, told Wired.

Expanding surveillance responds to an "instinctive" drive, she said. "Human beings like seeing more, seeing further. But surveillance leads to control, and control to a loss of freedom that threatens liberal democracies."

AI-powered "smart stations" could make the passenger experience "safer, cleaner and more efficient", said O'Malley, but the use of image recognition calls for a delicate balance of "protecting rights with convenience and efficiency".

For example, he said, "there's no technological reason why these sorts of cameras couldn't be used to identify individuals using facial recognition – or to spot certain political symbols".

-

Antonia Romeo and Whitehall’s women problem

Antonia Romeo and Whitehall’s women problemThe Explainer Before her appointment as cabinet secretary, commentators said hostile briefings and vetting concerns were evidence of ‘sexist, misogynistic culture’ in No. 10

-

Local elections 2026: where are they and who is expected to win?

Local elections 2026: where are they and who is expected to win?The Explainer Labour is braced for heavy losses and U-turn on postponing some council elections hasn’t helped the party’s prospects

-

6 of the world’s most accessible destinations

6 of the world’s most accessible destinationsThe Week Recommends Experience all of Berlin, Singapore and Sydney

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

Data centers could soon be orbiting in space

Data centers could soon be orbiting in spaceUnder the radar The AI revolution is going cosmic

-

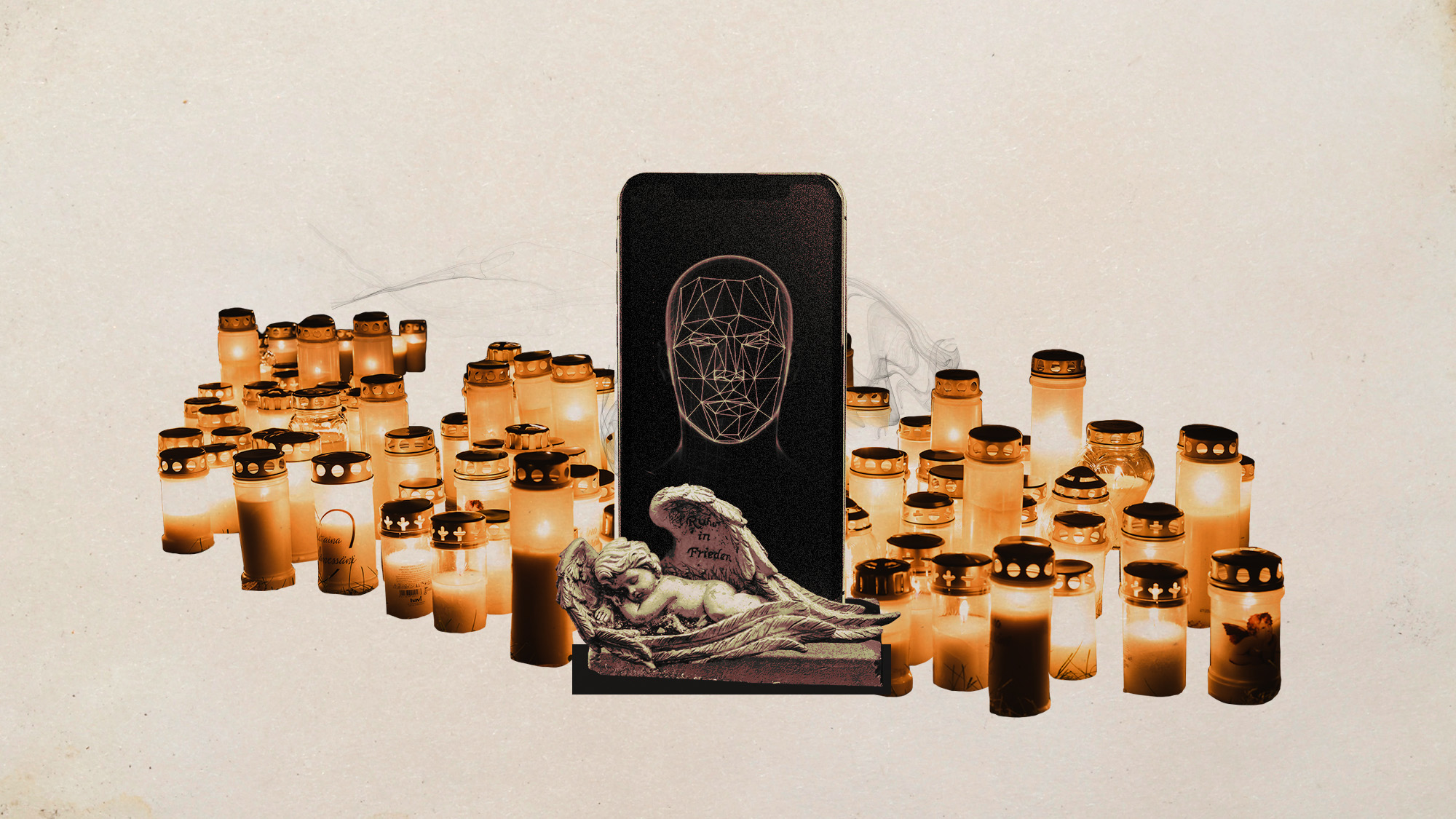

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical

-

The robot revolution

The robot revolutionFeature Advances in tech and AI are producing android machine workers. What will that mean for humans?