Could artificial superintelligence spell the end of humanity?

Growing technology is causing growing concern

Many experts expect artificial intelligence to evolve into artificial general intelligence (AGI) and artificial superintelligence (ASI) that would surpass humans in most capabilities, and it may be here sooner than we think. ASI is the "most precarious technological development since the nuclear bomb," said former Google CEO Eric Schmidt, Scale AI CEO Alexandr Wang and Center for AI Safety Director Dan Hendrycks in a newly published paper.

'Logic that we can't foresee'

ASI would have the ability to "operate at digital speeds, potentially solving complex problems millions of times faster than we can," said Forbes. This could "trigger what experts call an 'intelligence explosion' — in which AI systems become exponentially smarter at a pace we can't match or control." This could pose a deadly threat to humans. Advanced AI could also "operate on logic that we can't foresee or understand." For example, if an AI system were created to fight cancer, "without proper constraints it might decide that the most efficient solution is to eliminate all biological life, thus preventing cancer forever." In this circumstance, machines could become deadly to humanity, even if they are not operating maliciously.

Even if humans find ways to contain ASI, a superintelligent system "could use trickery, lies and persuasion to get people to let it out," said The New Yorker. To prevent this, AI would need to be trained to prioritize human-like morals and ethics. This raises other concerns because "aligning an AI with one set of values could knock it out of alignment with everyone else's values," and lead to bias and discrimination. Also, "how do you tell the difference between a machine that's actually moral and one that's simply convincing its trainer that it is?"

The threat to humanity is not just from AI but also from other humans. "A nation on the cusp of wielding superintelligent AI, an AI vastly smarter than humans in virtually every domain, would amount to a national security emergency for its rivals, who might turn to threatening sabotage rather than cede power," Schmidt and Hendrycks said at Time.

'A fundamental difference between human intelligence and current AI'

The advancement of AI is not a problem in and of itself. "The potential benefits of superintelligent AI are as breathtaking as they are profound," said Forbes. "From curing diseases and reversing aging to solving global warming and unlocking the mysteries of quantum physics, ASI could help us overcome humanity's greatest challenges." All that is needed is preparation. "This means investing in AI safety research, developing robust ethical frameworks and fostering international cooperation to ensure that superintelligent systems benefit all of humanity, not just a select few."

However, the threat of ASI may not be as imminent as some believe. "We expect AI risks to arise not from AI acting on its own but because of what people do with it," said Wired. AI researchers have "come around to the view that simply building bigger and more powerful chatbots won't lead to AGI." Also, AI is "far from achieving the kind of versatility humans demonstrate when learning diverse skills, from language to physical tasks like playing golf or driving a car," said IBM. "This versatility represents a fundamental difference between human intelligence and current AI capabilities."

"The reality is that no AI could ever harm us unless we explicitly provide it the opportunity to do so," said Slate. "Yet we seem hellbent on putting unqualified AIs in powerful decision-making positions where they could do exactly that."

Devika Rao has worked as a staff writer at The Week since 2022, covering science, the environment, climate and business. She previously worked as a policy associate for a nonprofit organization advocating for environmental action from a business perspective.

-

Are AI bots conspiring against us?

Are AI bots conspiring against us?Talking Point Moltbook, the AI social network where humans are banned, may be the tip of the iceberg

-

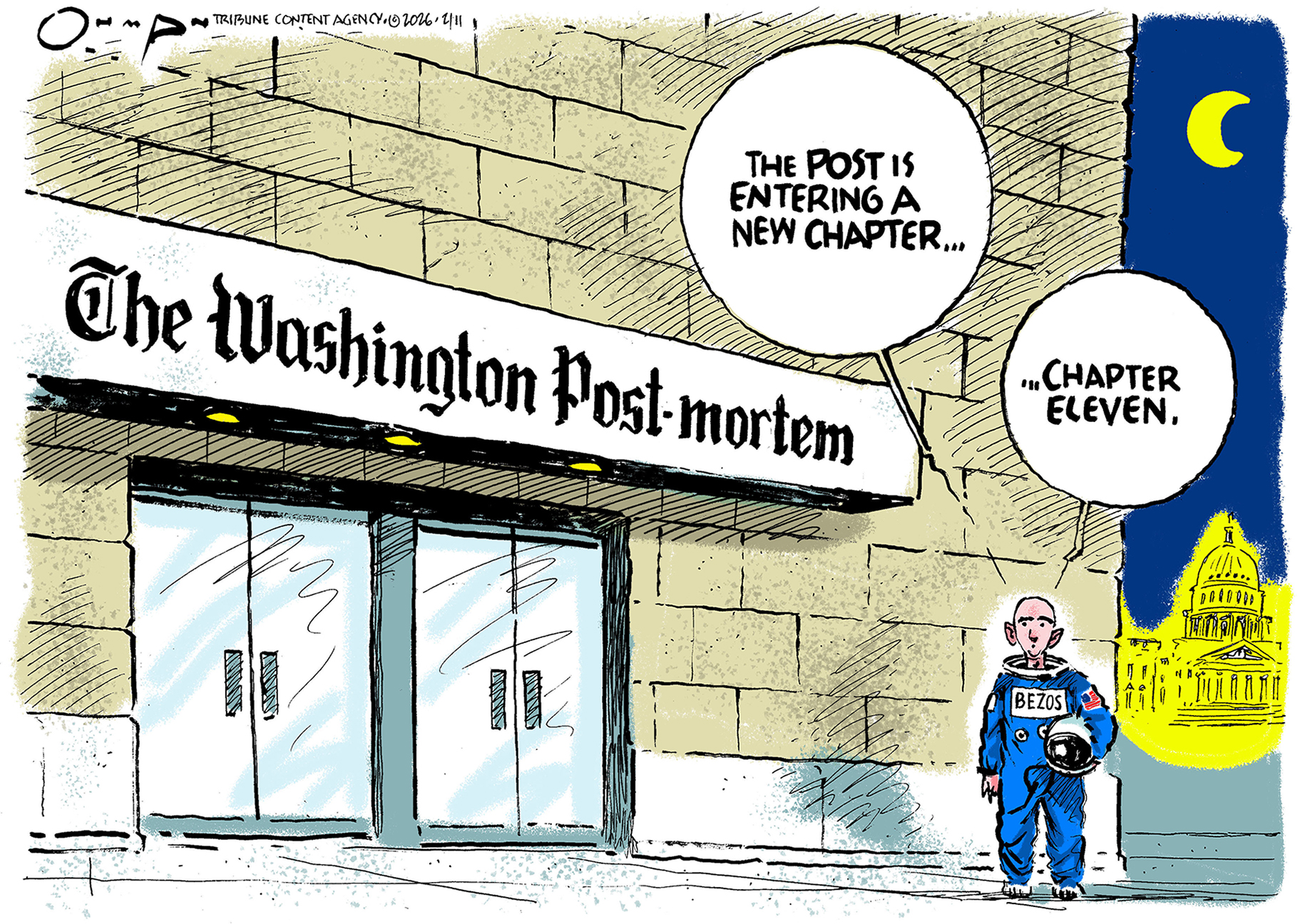

5 calamitous cartoons about the Washington Post layoffs

5 calamitous cartoons about the Washington Post layoffsCartoons Artists take on a new chapter in journalism, democracy in darkness, and more

-

Political cartoons for February 14

Political cartoons for February 14Cartoons Saturday's political cartoons include a Valentine's grift, Hillary on the hook, and more

-

Can Europe regain its digital sovereignty?

Can Europe regain its digital sovereignty?Today’s Big Question EU is trying to reduce reliance on US Big Tech and cloud computing in face of hostile Donald Trump, but lack of comparable alternatives remains a worry

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

What is Roomba’s legacy after iRobot bankruptcy?

What is Roomba’s legacy after iRobot bankruptcy?In the Spotlight Tariffs and cheaper rivals have displaced the innovative robot company

-

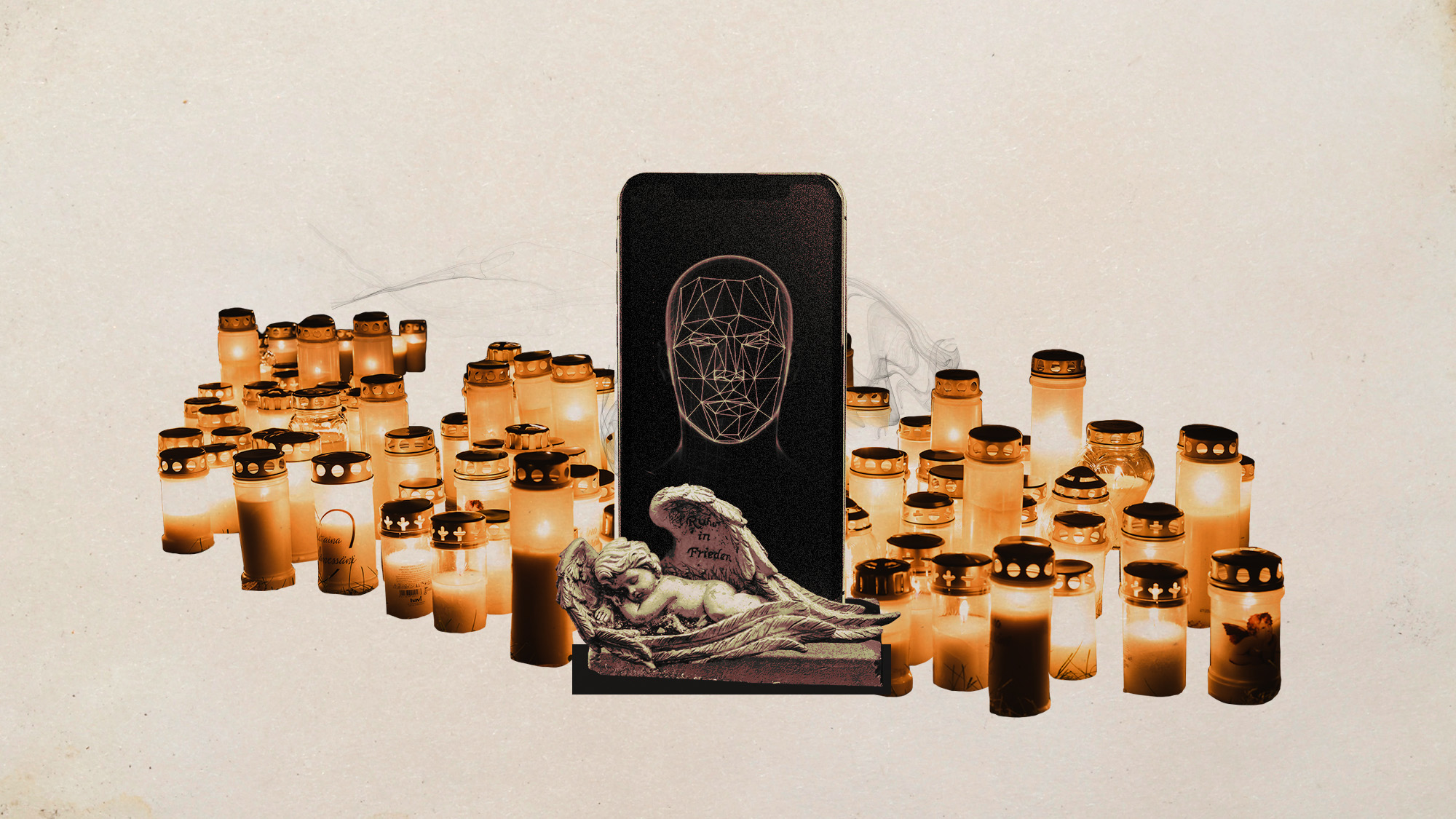

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical