The new civil rights frontier: artificial intelligence

Experts worry that AI could deepen inequality and discrimination


Artificial intelligence continues to spread across many industries. According to the World Economic Forum's Future of Jobs Report 2020, it is expected to replace 85 million jobs globally by 2025 while potentially creating 97 million new roles. However, the growth of artificial intelligence is shedding light on another problem: a lack of diversity in the data used to build it. AI is trained on existing data, but much of that data excludes women and people of color, raising questions about whether the technology can be applied properly across the board.

How can AI be biased?

AI builds up its knowledge base through machine learning, which essentially means training the technology by feeding it data. The problem is that much of our existing data excludes vast numbers of people, namely women and minorities. A striking example is health data, where "80% or more of clinical trials have historically relied on the western population when it comes to patient recruitment," Harsha Rajasimha, founder and executive chairman of the Indo-US Organization for Rare Diseases, a nonprofit that studies rare diseases, told MedTech Intelligence.

Many worry that these biases will become ingrained in AI systems. "If you mess this up, you can really, really harm people by entrenching systemic racism further into the health system," Mark Sendak, a lead data scientist at the Duke Institute for Health Innovation, told NPR. The problem may already be surfacing: there have been instances where facial recognition software was unable to identify Black faces. "The impact on minority communities — especially the Black community — is not considered until something goes wrong," California Rep. Barbara Lee (D) said during a panel at the annual Congressional Black Caucus legislative conference. Hiring is another risk: AI technology trained on past hiring data could inadvertently discriminate between white and Black job applicants.


How can it be fixed?

The biases within artificial intelligence often reflect the biases of humanity as a whole. "Our propensity to think fast and fill in the blanks of information by generalizing and jumping to conclusions explains the ubiquity of biases in any area of social life," wrote Fast Company. That propensity is reflected in much of the data we have, and therefore in what gets imprinted onto AI. "AI can only be unbiased if it learns from unbiased data, which is notoriously hard to come by," Fast Company added. And designing an AI algorithm to be unbiased is no guarantee, since "it doesn’t mean that the AI won’t find other ways to introduce biases into its decision-making process," Vox wrote.

The good news is that experts believe the problem can be solved. "Even though the early systems before people figured out these techniques certainly reinforced bias, I think we can now explain that we want a model to be unbiased, and it’s pretty good at that," Sam Altman, the co-founder and CEO of OpenAI, told Rest of World. "I’m optimistic that we will get to a world where these models can be a force to reduce bias in society, not reinforce it."

Some programs are trying to get ahead of the curve. A new AI model called Latimer "deeply incorporates cultural and historical perspectives of Black and Brown communities," Forbes reported. "We are establishing the building blocks of what the future of AI needs to include, and in doing so, we are working to create an equitable and necessary layer of technology that can be utilized by all demographics," Latimer Founder and CEO John Pasmore told Forbes. Most experts agree that AI has a lot of potential to do good in a number of industries as long as steps are taken to address its pitfalls. "AI is not bad for diversity — if diversity is part of the design itself," Fast Company concluded.


Devika Rao has worked as a staff writer at The Week since 2022, covering science, the environment, climate and business. She previously worked as a policy associate for a nonprofit organization advocating for environmental action from a business perspective.

-

Local elections 2026: where are they and who is expected to win?

Local elections 2026: where are they and who is expected to win?The Explainer Labour is braced for heavy losses and U-turn on postponing some council elections hasn’t helped the party’s prospects

-

6 of the world’s most accessible destinations

6 of the world’s most accessible destinationsThe Week Recommends Experience all of Berlin, Singapore and Sydney

-

How the FCC’s ‘equal time’ rule works

How the FCC’s ‘equal time’ rule worksIn the Spotlight The law is at the heart of the Colbert-CBS conflict

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

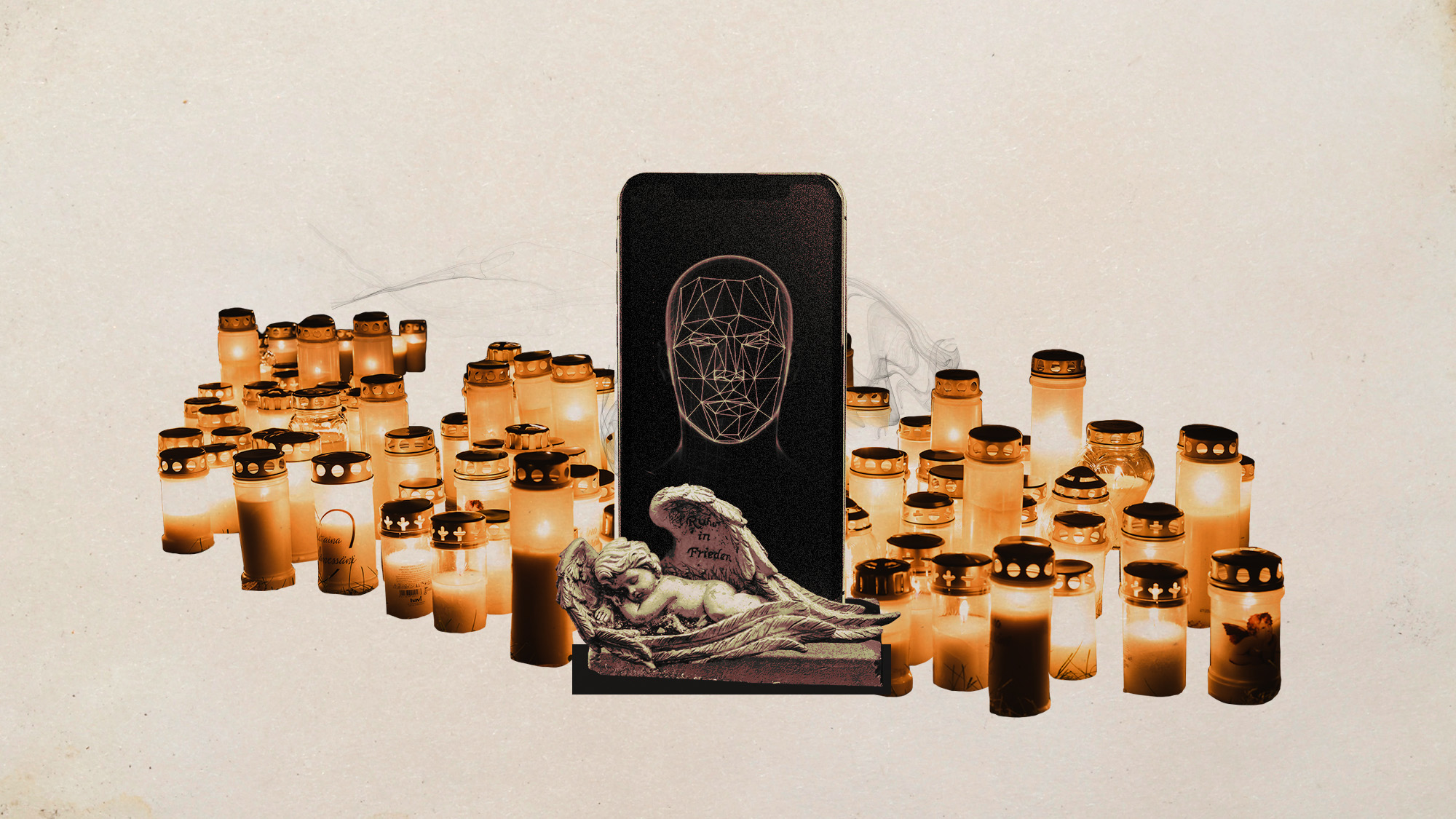

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical

-

The robot revolution

The robot revolutionFeature Advances in tech and AI are producing android machine workers. What will that mean for humans?

-

Separating the real from the fake: tips for spotting AI slop

Separating the real from the fake: tips for spotting AI slopThe Week Recommends Advanced AI may have made slop videos harder to spot, but experts say it’s still possible to detect them