Inside Facebook's global constitutional convention

Can an 'oversight board' solve the company's content problems?

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com

Everybody gets criticized — especially in the social media era. Faced with that flood of negativity, it can be hard to figure out what to listen to, and most of us default to listening to friends or people who know what they're talking about.

But if friendly, informed criticism is the best sort, then it must have been profoundly uncomfortable in the Facebook offices recently. In a New York Times op-ed this week, Facebook co-founder Chris Hughes called for the breakup of the company he helped start, claiming that the company has simply become too big and too powerful and too slow to react to the numerous issues of privacy, misinformation, and extremism on the platform.

Facebook wasn't happy about it (it argued that breaking up a successful company is the wrong approach), but it also knows it has a lot of work to do. Late last year, Mark Zuckerberg announced that he intended to create a Facebook Oversight Board, a quasi-independent board of non-Facebook employees whose job would be to help with content moderation decisions. Facebook is also seeking feedback on the board, partly through roundtables with policy experts held around the world, one of which I attended this week in Ottawa, Canada. Though the session took place before Hughes' op-ed was published, it was clear that Facebook is looking to preempt the kind of aggressive government intervention he proposes.

What was also clear during the four-hour session, however, was just how complicated the issue of moderating what people can post on Facebook is. It also seems that the company is serious about the effort. Yet despite what appears to be a sincere desire on the part of the company to make itself better, more accountable, and more transparent, it was difficult not to wonder whether Hughes and others are in fact correct: that even if and when Facebook gets better at being responsible, it is still simply too big.

The roundtable was attended by Peter Stern, Manager of the Policy Group, and Kevin Chan, Head of Public Policy at Facebook Canada. It took place under the Chatham House Rule, which allows participants to report what was said but forbids attributing any remark to a particular speaker. Around 25 policy experts attended from a variety of fields, from media literacy and academia to anti-hate advocacy groups and government.

The oversight board is ostensibly aimed at adjudicating content removal decisions, that is, when and why a piece of content gets removed, whether for hate speech, nudity, credible threats of violence, and so on. It is in essence a bit like a supreme court for Facebook: a final step for significant or controversial decisions that extend beyond or challenge normal policy. The board will be composed of 40 people from diverse backgrounds in both identity and area of expertise, and its decisions will be binding and are meant to make Facebook more accountable.

In that sense, the proposed board is a step forward. Facebook's Peter Stern made it clear that the company was all in on the idea, and that the desire for the board also comes from a recognition that Facebook should not be making decisions alone. With a footprint of over 2 billion users, the platform accounts for an enormous percentage of speech online, and thus some sort of accountability that extends beyond, say, responsibility to shareholders seems a welcome change.

The scope of Facebook's purview is itself mind-boggling, with billions of pieces of content going up each day, and that scale is part of why policing content is so complicated. But it's not just the sheer scale of things. With a global footprint, Facebook walks a fine line. On the one hand, it helps promote certain near-universal ideals like free speech, a boon in places under authoritarian rule. On the other, it also needs to respect the massive variety of legal and social differences across the globe. How, for example, can you come up with a rule for nudity when what is acceptable differs so much depending on where you are?

Its desire, then, to seek input from both experts and the public around the world seems well-intentioned, and it was clear that Facebook is grappling not just with the obvious questions (what the rules should be, how to apply them, and so on) but also with whether historically disadvantaged groups deserve special protection, and with the sticky problem of how to separate satire and irony from hate speech.

All that said, serious concerns remain. For example, how might a 40-person board adequately represent the concerns of thousands of groups across the globe? How might a set of “universal” rules address the significant disparities in belief and practice among the billions of people who use, or will soon use, the platform? How might a company firmly committed to American ideals avoid exporting those ideals, or enforcing such social values over and against smaller states? It's a dizzying miasma of problems for which solutions are not merely difficult to find but perhaps impossible.

That difficulty seems inherent to what Facebook is actually doing: in essence, writing a constitution for the global moderation of speech. That it is doing so at all is a recognition of what is at stake on its platform. Yet, sitting and watching Facebook dutifully receive and genuinely consider feedback, I found the absurdity of the situation hard to ignore. Here was a private company with historically unprecedented reach trying its best to do the right thing, where the “right thing” meant finding the right way to govern speech on the world's largest democratic platform. It is a sign that the company is a kind of supra-state unto itself, significantly more powerful than most countries across the globe, and with enormous influence.

Faced with the utter strangeness of that fact, it is hard not to wonder whether Chris Hughes and others are onto something: not merely that Facebook needs to be broken up, but that its sheer size is itself the root of many problems. We are caught in a complex binary in which, on the one hand, the Web and companies like Facebook play an important role in democratic and liberal processes around the world, and on the other, such companies appear to have far too much power and too little accountability.

Over the course of the roundtable, there was reason for both hope and despair. But one thing was clear: the proposed oversight board would be funded by Facebook and would work to serve the company's own stated values, whether those are fairness and transparency or, perhaps less generously, a desire to stave off punitive regulation. This is the bind we find ourselves in when private companies become core parts of public life: they have their interests, and we have ours, and in far too many ways those are simply not the same thing. Facebook appears to be trying its best to respond sincerely to criticism, and alas, that simply may not be enough.

Navneet Alang is a technology and culture writer based out of Toronto. His work has appeared in The Atlantic, New Republic, Globe and Mail, and Hazlitt.

-

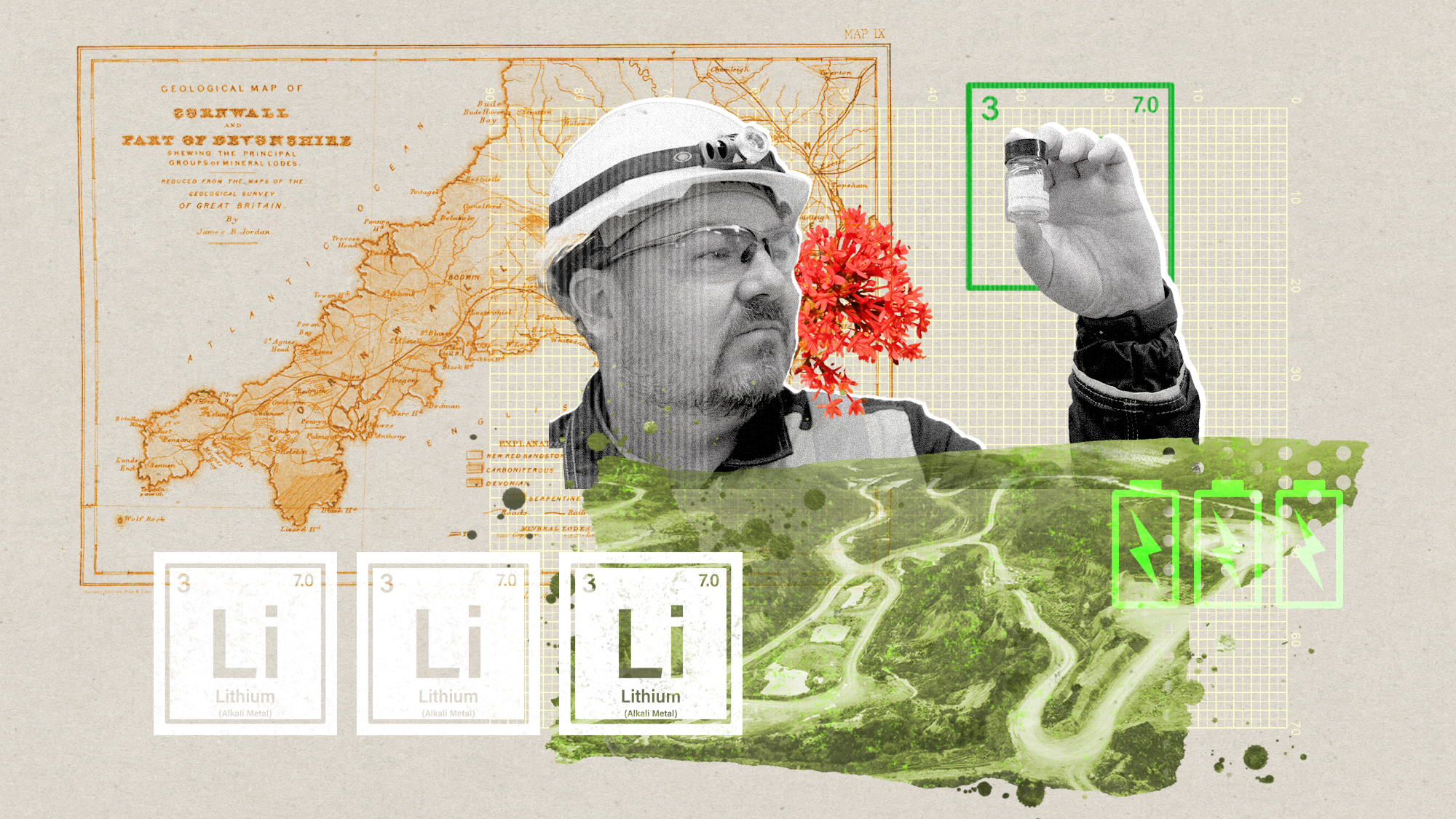

The ‘ravenous’ demand for Cornish minerals

The ‘ravenous’ demand for Cornish mineralsUnder the Radar Growing need for critical minerals to power tech has intensified ‘appetite’ for lithium, which could be a ‘huge boon’ for local economy

-

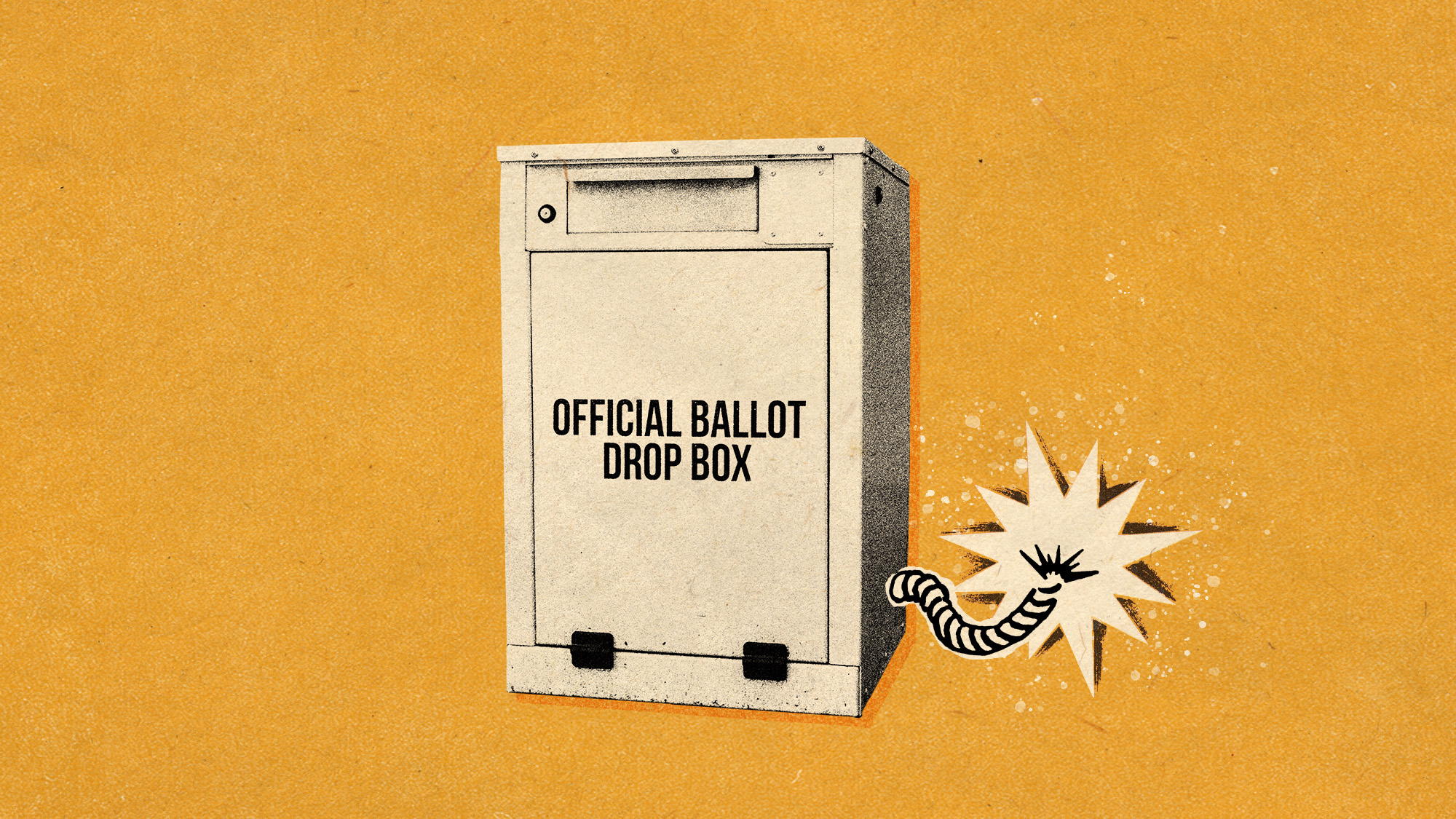

Why are election experts taking Trump’s midterm threats seriously?

Why are election experts taking Trump’s midterm threats seriously?IN THE SPOTLIGHT As the president muses about polling place deployments and a centralized electoral system aimed at one-party control, lawmakers are taking this administration at its word

-

‘Restaurateurs have become millionaires’

‘Restaurateurs have become millionaires’Instant Opinion Opinion, comment and editorials of the day

-

The billionaires’ wealth tax: a catastrophe for California?

The billionaires’ wealth tax: a catastrophe for California?Talking Point Peter Thiel and Larry Page preparing to change state residency

-

Bari Weiss’ ‘60 Minutes’ scandal is about more than one report

Bari Weiss’ ‘60 Minutes’ scandal is about more than one reportIN THE SPOTLIGHT By blocking an approved segment on a controversial prison holding US deportees in El Salvador, the editor-in-chief of CBS News has become the main story

-

Has Zohran Mamdani shown the Democrats how to win again?

Has Zohran Mamdani shown the Democrats how to win again?Today’s Big Question New York City mayoral election touted as victory for left-wing populists but moderate centrist wins elsewhere present more complex path for Democratic Party

-

Millions turn out for anti-Trump ‘No Kings’ rallies

Millions turn out for anti-Trump ‘No Kings’ ralliesSpeed Read An estimated 7 million people participated, 2 million more than at the first ‘No Kings’ protest in June

-

Ghislaine Maxwell: angling for a Trump pardon

Ghislaine Maxwell: angling for a Trump pardonTalking Point Convicted sex trafficker's testimony could shed new light on president's links to Jeffrey Epstein

-

The last words and final moments of 40 presidents

The last words and final moments of 40 presidentsThe Explainer Some are eloquent quotes worthy of the holders of the highest office in the nation, and others... aren't

-

The JFK files: the truth at last?

The JFK files: the truth at last?In The Spotlight More than 64,000 previously classified documents relating the 1963 assassination of John F. Kennedy have been released by the Trump administration

-

'Seriously, not literally': how should the world take Donald Trump?

'Seriously, not literally': how should the world take Donald Trump?Today's big question White House rhetoric and reality look likely to become increasingly blurred