Jan. 6 was fueled by misinformation. More content moderation won't fix it.

The chaos at the Capitol showed the real-world consequences of propaganda

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com


A year ago today, a rogue group of protesters launched a violent assault on the United States Capitol, stoked by misinformation and the lie of a stolen election told by those entrusted to lead us. That underlying problem of confusion and deception was already recognized when the riot happened, as the controversial congressional Jan. 6 committee has clearly illustrated, but recognition alone didn't prevent the attack.

And in the year since, misinformation has only become more mainstream. It is an increasingly dangerous threat to our common public square. Truth is being redefined to fit partisan politics, and many struggle to know if what they read online is trustworthy. We need a solution to misinformation — but what?

Democrats argue social media companies must moderate or suppress more content online to protect people from misinformation and disinformation, while Republicans tend to say content moderation is ideologically fraught, an affront to free speech, and a contributor to much of the cultural polarization of the day.

Social media companies also joined in these debates, making substantial shifts since Jan. 6 in content moderation and in their processes for banning or punishing users who repeatedly spread false information, incite violence, or violate often vague hate speech policies. From larger moderation teams with more resources to more community watchdogs, many in Big Tech seem to grasp just how formative an influence social media has become on our national psyche, even as they continue to fuel its growth and implement inconsistent and ill-conceived moderation policies.

But are these governmental and corporate measures really the only solutions to our problem of misinformation?

Jan. 6 represents far more than what took place on that brisk afternoon in Washington, D.C., and should point us to a more holistic approach that acknowledges our own responsibility in this age of misinformation. This could be a watershed moment for our nation, one at which no one in good faith could deny that the corruption of truth has real-world consequences for the common good. If our democracy has any hope of moving forward with any sort of unity, we must recognize that last year's assault on truth isn't all that new. For nearly two decades, we've shirked personal responsibility for the increasingly toxic landscape of social media.

We do that because it's convenient, and it satisfies the human proclivity to overlook our own faults. We can blame the breakdown of truth on those people or scapegoat a particular voting bloc. And though our vocabulary here ("fake news," "misinformation," or discussion around the Capitol riot itself) is new, what used to be called "propaganda" is not.

As the late French sociologist and Protestant theologian Jacques Ellul argues in his influential work, The Technological Society, one of the great strengths of propaganda is that it can "become as natural as air or food" in a society where "the individual is able to declare in all honesty that no such thing as propaganda exists." This becomes possible only when humanity has become "so absorbed by [technology] that he is literally no longer able to see the truth." What has changed since Ellul penned those words in 1954 is that anyone with a smartphone and some rhetorical savvy can create and distribute misinformation to the masses in a matter of moments. We can all be propagandists if we're not careful.

Rushing toward ill-conceived government or corporate measures or to simply blame others for the rise of misinformation and fake news in our society ignores that truth. It ignores how we each bear moral responsibility for our actions, including the things we share online and the ways that we choose to treat our neighbors. This is true even if we disagree with them on the most fundamental moral aspects of public life.

The United States is a country that has long stood for free speech, but this freedom was never intended to be untethered from its corresponding reality of being morally upright people who bear an immense responsibility to our neighbors. Upending our basic freedoms of expression, as many have proposed in the last year, would do little to address the digital problems before us, because such measures tend to deepen divides and polarize the public square rather than help restore the intricate balance of freedom and moral responsibility. And maintaining that balance is especially important now, when the very nature of truth is increasingly seen as a partisan football to be altered, manipulated, or lobbed at our political opponents for personal and social gain.

Because social media makes propaganda easier than ever before, we must take a hard look in the mirror to see how each of us is routinely tempted to succumb to our worst proclivities and share information online that whets our appetites or validates lies we want to be true.

Every one of us has an obligation to stand up for truth in all areas of life and refuse to tolerate the spreading of misinformation, lies, and the prognostications of those seeking to retain power, position, or influence. But this problem isn't just about them; this is the world that we all inhabit, whether we like it or not.

If Jan. 6 has taught us anything, it is that the things we do and say online have real-world consequences. We must all bear that responsibility in this age of misinformation.

Jason Thacker is chair of research in technology ethics and director of the research institute at The Ethics and Religious Liberty Commission. He teaches ethics and philosophy at Boyce College in Louisville, KY. His work has been featured at Christianity Today, The Gospel Coalition, Slate, and Politico. He is the author or editor of numerous works, including The Age of AI: Artificial Intelligence and the Future of Humanity, Following Jesus in a Digital Age, and The Digital Public Square: Christian Ethics in a Technological Society.

-

Trump touts pledges at 1st Board of Peace meeting

Trump touts pledges at 1st Board of Peace meetingSpeed Read At the inaugural meeting, the president announced nine countries have agreed to pledge a combined $7 billion for a Gaza relief package

-

Britain’s ex-Prince Andrew arrested over Epstein ties

Britain’s ex-Prince Andrew arrested over Epstein tiesSpeed Read The younger brother of King Charles III has not yet been charged

-

Political cartoons for February 20

Political cartoons for February 20Cartoons Friday’s political cartoons include just the ice, winter games, and more

-

The billionaires’ wealth tax: a catastrophe for California?

The billionaires’ wealth tax: a catastrophe for California?Talking Point Peter Thiel and Larry Page preparing to change state residency

-

Bari Weiss’ ‘60 Minutes’ scandal is about more than one report

Bari Weiss’ ‘60 Minutes’ scandal is about more than one reportIN THE SPOTLIGHT By blocking an approved segment on a controversial prison holding US deportees in El Salvador, the editor-in-chief of CBS News has become the main story

-

Has Zohran Mamdani shown the Democrats how to win again?

Has Zohran Mamdani shown the Democrats how to win again?Today’s Big Question New York City mayoral election touted as victory for left-wing populists but moderate centrist wins elsewhere present more complex path for Democratic Party

-

Millions turn out for anti-Trump ‘No Kings’ rallies

Millions turn out for anti-Trump ‘No Kings’ ralliesSpeed Read An estimated 7 million people participated, 2 million more than at the first ‘No Kings’ protest in June

-

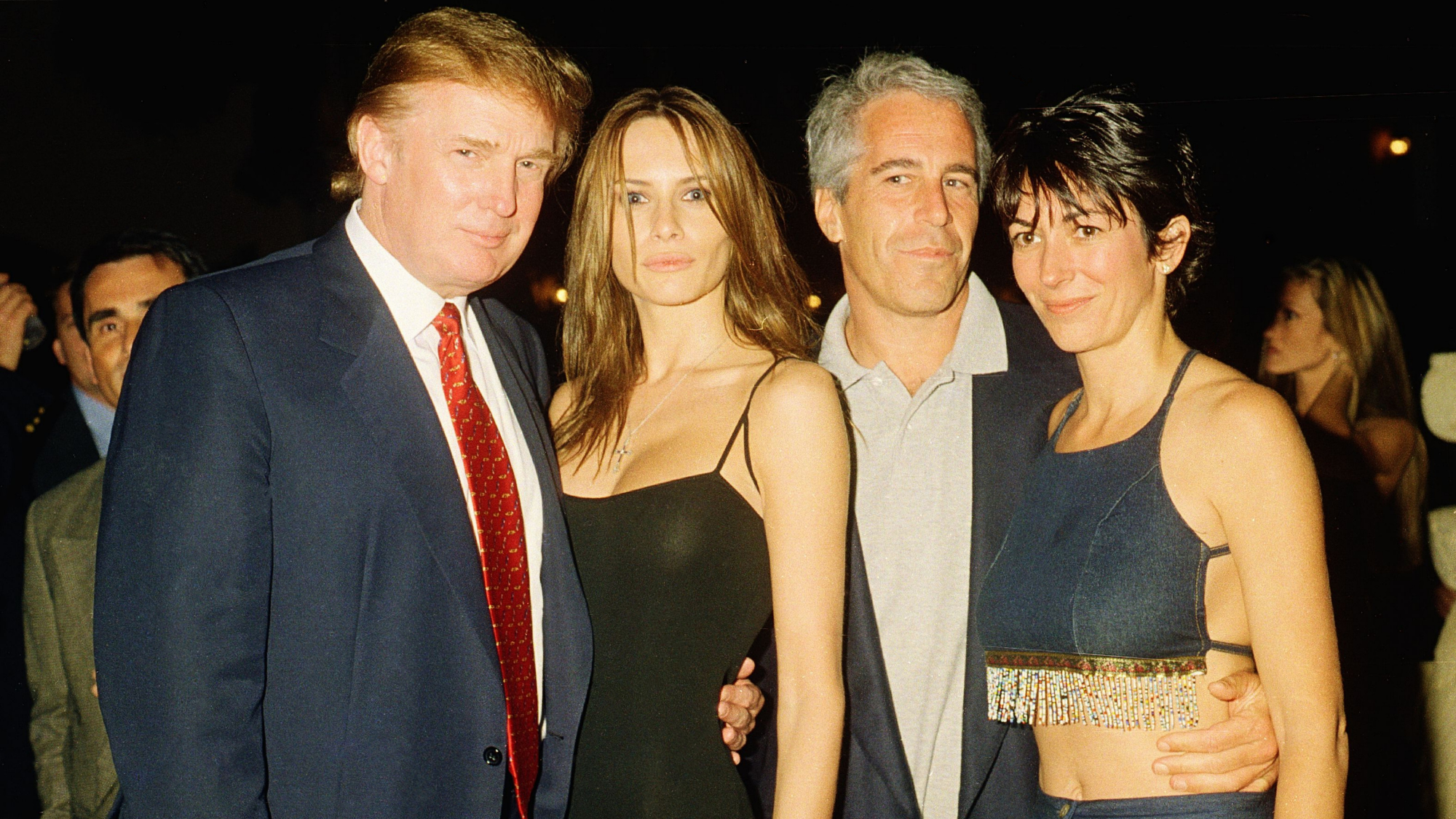

Ghislaine Maxwell: angling for a Trump pardon

Ghislaine Maxwell: angling for a Trump pardonTalking Point Convicted sex trafficker's testimony could shed new light on president's links to Jeffrey Epstein

-

The last words and final moments of 40 presidents

The last words and final moments of 40 presidentsThe Explainer Some are eloquent quotes worthy of the holders of the highest office in the nation, and others... aren't

-

The JFK files: the truth at last?

The JFK files: the truth at last?In The Spotlight More than 64,000 previously classified documents relating the 1963 assassination of John F. Kennedy have been released by the Trump administration

-

'Seriously, not literally': how should the world take Donald Trump?

'Seriously, not literally': how should the world take Donald Trump?Today's big question White House rhetoric and reality look likely to become increasingly blurred