The EU's landmark AI Act 'rushed' out as countdown begins on compliance

'We will be hiring lawyers while the rest of the world is hiring coders' – Europe's warning about new AI legislation

The EU's pioneering legislation to regulate AI is set to come into force next month, despite criticisms that it is incomplete, ambiguous, and stifling to the tech industry.

The first law of its kind anywhere in the world, the EU Artificial Intelligence Act aims to protect citizens from potentially harmful uses of AI by regulating companies within the EU, without losing ground to AI superpowers China and the US.

While EU lawmakers are "mostly concerned" about consumer safety and the dissemination of deepfakes, which could mislead voters in elections, the tech community has raised "gripes" with the legislation, said Euronews. Officials are now "frantically trying to plug the holes in the regulation before it comes into force", said the Financial Times.

What does the act do?

The act, which will be implemented in stages over the next two years, classifies different types of AI by risk.

Minimal risk uses – AI-powered video games and spam filters, for example – will not be subject to regulation. Limited risk activities, such as chatbots and other generative AI platforms, will be subject to "light regulation", including transparency requirements to inform consumers that they are interacting with a machine.

The "high-risk" category includes AI systems used by law enforcement, such as biometric identification, as well as systems used to access public services or critical infrastructure.

A further "unacceptable risk" category bans all AI systems that "threaten citizens' rights", said The Verge. This includes AI used to deceive or manipulate humans, or profile them as potential criminals based on behaviour or personality traits.
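
The four tiers described above can be read as a simple lookup from use case to treatment. The following is a minimal sketch of that reading, not the act's legal test: the names `RiskTier`, `TREATMENT`, `EXAMPLES` and `treatment_for` are hypothetical, and the example use cases and treatments are only the ones mentioned in this article.

```python
from enum import Enum


class RiskTier(Enum):
    """The four risk categories named in the article."""
    MINIMAL = "minimal"
    LIMITED = "limited"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Treatment per tier, paraphrased from the article (not from the legal text).
TREATMENT = {
    RiskTier.MINIMAL: "not subject to regulation",
    RiskTier.LIMITED: "light regulation, including transparency requirements",
    RiskTier.HIGH: "rules around risk assessment and human oversight",
    RiskTier.UNACCEPTABLE: "banned",
}

# Illustrative use cases taken from the article; a real classification
# would depend on the act's detailed criteria, which are not modelled here.
EXAMPLES = {
    "spam filter": RiskTier.MINIMAL,
    "AI-powered video game": RiskTier.MINIMAL,
    "chatbot": RiskTier.LIMITED,
    "biometric identification by law enforcement": RiskTier.HIGH,
    "AI used to deceive or manipulate humans": RiskTier.UNACCEPTABLE,
}


def treatment_for(use_case: str) -> str:
    """Look up the article's description of how a listed use case is treated."""
    return TREATMENT[EXAMPLES[use_case]]


print(treatment_for("chatbot"))
```

A flat lookup like this only captures the article's examples; deciding which tier a new system falls into is exactly the kind of detail critics say the act leaves open.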

What are the criticisms of the legislation?

Progress on the legislation, which has been in the works for three years, was "upended" in 2022 when OpenAI released ChatGPT, said the FT. The emergence of "generative AI" models, which create text or images based on user prompts, "reshaped the tech landscape" and had parliamentarians "rushing to rewrite the rules" to regulate the large language models that underpin such apps.

"Time pressure led to an outcome where many things remain open," a parliamentary aide involved in drafting the "rather vague" law told the FT. Regulators "couldn't agree on them and it was easier to compromise" on what the unidentified aide called "a shot in the dark".

Some critics say the text lacks clarity – especially on whether systems like ChatGPT are acting illegally when they use sources protected by copyright law. There is also confusion over who is responsible for AI-generated content, and over what "fair remuneration" might look like for those who create the material it draws from. Nor does the act specify who would enforce the rules in individual member states, or how, which could lead to patchy implementation across the EU.

The cost of compliance is also a problem, particularly for small companies. It could make it "very hard for deep tech entrepreneurs to find success in Europe", Andreas Cleve, chief executive of Danish healthcare start-up Corti, told the FT. Many believe that cost will hinder European companies competing with rivals in the US and China. "We will be hiring lawyers while the rest of the world is hiring coders," said Cecilia Bonefeld-Dahl, director general for DigitalEurope, which represents the bloc's technology sector.

What's still to be done?

Tech companies have until February next year to comply with the "unacceptable risk" rules or face a fine of 7% of their total global annual revenue, or €35 million (£29.5 million), whichever is higher.
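
The "whichever is higher" rule amounts to taking the larger of two numbers. As a rough sketch of the arithmetic only, using the 7% and €35 million figures quoted above (the function name `max_fine_eur` is hypothetical, and revenue is assumed to be given in whole euros):

```python
FIXED_AMOUNT_EUR = 35_000_000   # the €35 million figure quoted in the article
REVENUE_SHARE_PERCENT = 7       # the 7% of global annual revenue quoted in the article


def max_fine_eur(global_annual_revenue_eur: int) -> int:
    """Return the higher of 7% of revenue and the fixed €35 million amount."""
    # Integer arithmetic avoids floating-point rounding on large sums.
    revenue_based = global_annual_revenue_eur * REVENUE_SHARE_PERCENT // 100
    return max(revenue_based, FIXED_AMOUNT_EUR)


# A company with €100 million in revenue: 7% is €7 million, so the fixed amount applies.
print(max_fine_eur(100_000_000))
# A company with €1 billion in revenue: 7% is €70 million, which exceeds the fixed amount.
print(max_fine_eur(1_000_000_000))
```

On these figures the two amounts are equal at €500 million in revenue, so the fixed €35 million is the binding figure for any company below that threshold.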

Developers of systems that fall in the "high risk" category will have until August 2027 to comply with rules around risk assessment and human oversight.

By some estimates, the EU needs between 60 and 70 pieces of secondary legislation regarding the details of how the act will be implemented and enforced, and those must be in place by May next year. "The devil will be in the details," a diplomat who took a leading role in drafting the act told the FT. "But people are tired and the timeline is tight."

Harriet Marsden is a senior staff writer and podcast panellist for The Week, covering world news and writing the weekly Global Digest newsletter. Before joining the site in 2023, she was a freelance journalist for seven years, working for The Guardian, The Times and The Independent among others, and regularly appearing on radio shows. In 2021, she was awarded the “journalist-at-large” fellowship by the Local Trust charity, and spent a year travelling independently to some of England’s most deprived areas to write about community activism. She has a master’s in international journalism from City University, and has also worked in Bolivia, Colombia and Spain.

-

What are the best investments for beginners?

What are the best investments for beginners?The Explainer Stocks and ETFs and bonds, oh my

-

What to know before filing your own taxes for the first time

What to know before filing your own taxes for the first timethe explainer Tackle this financial milestone with confidence

-

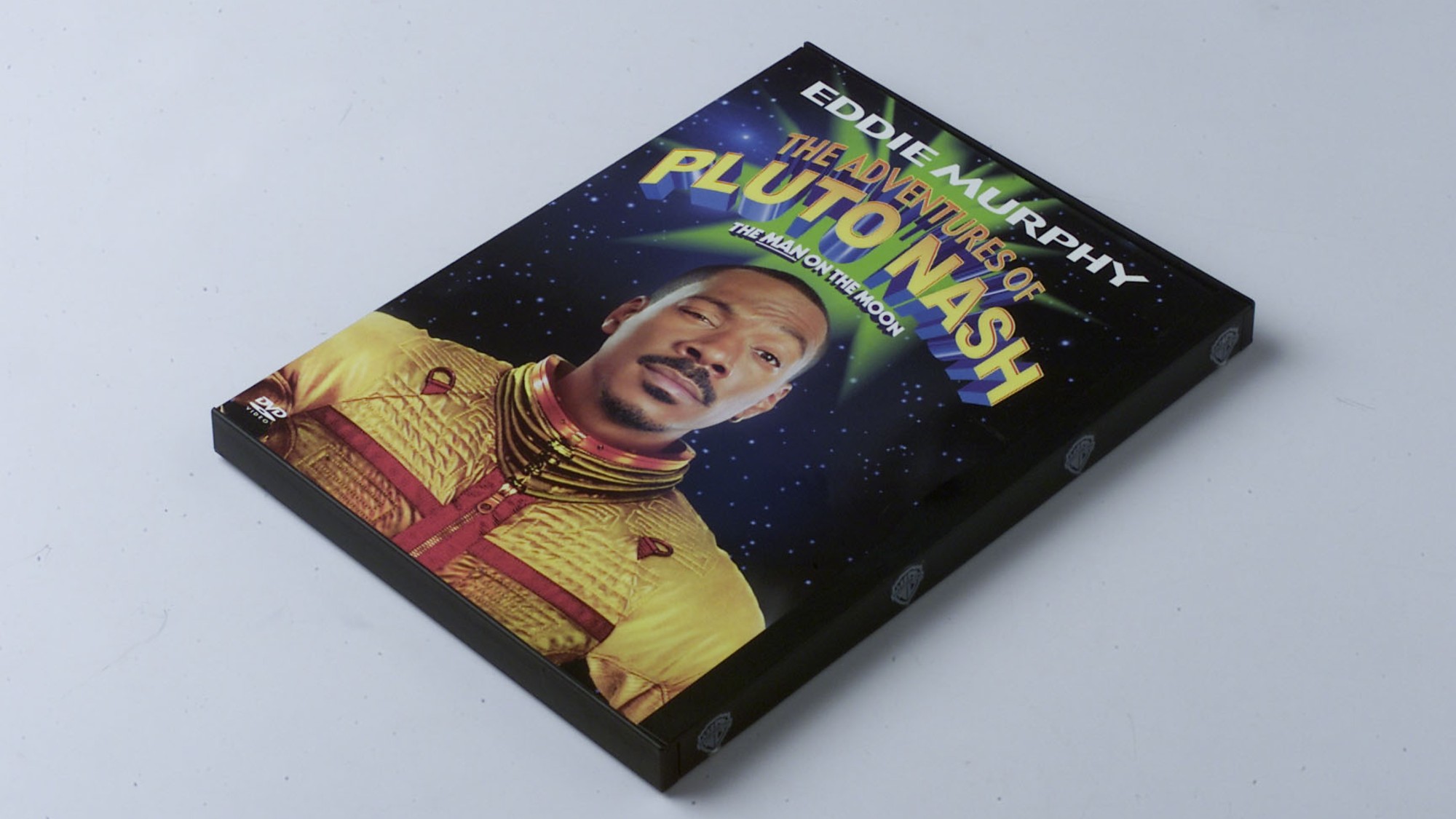

The biggest box office flops of the 21st century

The biggest box office flops of the 21st centuryin depth Unnecessary remakes and turgid, expensive CGI-fests highlight this list of these most notorious box-office losers

-

The countries around the world without jury trials

The countries around the world without jury trialsThe Explainer Legal systems in much of continental Europe and Asia do not rely on randomly selected members of the public

-

How far does religious freedom go in prison? The Supreme Court will decide.

How far does religious freedom go in prison? The Supreme Court will decide.The Explainer The plaintiff was allegedly forced to cut his hair, which he kept long for religious reasons

-

The Supreme Court case that could forge a new path to sue the FBI

The Supreme Court case that could forge a new path to sue the FBIThe Explainer The case arose after the FBI admitted to raiding the wrong house in 2017

-

'Libel and lies': Benjamin Netanyahu's corruption trial

'Libel and lies': Benjamin Netanyahu's corruption trialThe Explainer Israeli PM takes the stand on charges his supporters say are cooked up by a 'liberal deep state'

-

Assisted dying: what can we learn from other countries?

Assisted dying: what can we learn from other countries?The Explainer A look at the world's right to die laws as MPs debate Kim Leadbeater's proposed bill

-

The rules for armed police in the UK

The rules for armed police in the UKThe Explainer What the law says about when police officers can open fire in Britain

-

How would assisted dying work in the UK?

How would assisted dying work in the UK?The Explainer Proposed law would apply to patients in England and Wales with less than six months to live – but medics may be able to opt out

-

Assisted dying: will the law change?

Assisted dying: will the law change?Talking Point Historic legislation likely to pass but critics warn it must include safeguards against abuse