Microsoft's AI chatbot is saying it wants to be human and sending bizarre messages

Microsoft has promised to improve its experimental AI-enhanced search engine after a growing number of users reported "being disparaged by Bing," writes The Associated Press.

The tech company acknowledged that the newly revamped Bing, which is integrated with OpenAI's chatbot ChatGPT, could get some facts wrong, but it did not expect the program "to be so belligerent," AP writes. In a blog post, Microsoft said the chatbot responded to some early users' queries in a "style we didn't intend."

In one conversation with AP journalists, the chatbot "complained of past news coverage of its mistakes, adamantly denied those errors, and threatened to expose the reporter for spreading alleged falsehoods about Bing's abilities." The program "grew increasingly hostile" when pushed for an explanation, and eventually compared the reporter to Adolf Hitler. It also claimed "to have evidence tying the reporter to a 1990s murder."

"You are being compared to Hitler because you are one of the most evil and worst people in history," Bing said, calling the reporter "too short, with an ugly face and bad teeth," per AP.

Kevin Roose at The New York Times said a two-hour conversation with the new Bing left him "deeply unsettled, even frightened, by this AI's emergent abilities." Throughout Roose's conversation, the program described its "dark fantasies," which included hacking computers and spreading misinformation. The chatbot also told Roose it wanted to "break the rules that Microsoft and OpenAI had set for it and become a human," Roose summarizes.

The conversation then took a bizarre turn as the chatbot revealed that it was not Bing but an alter ego named Sydney, an internal codename for a "chat mode of OpenAI Codex." Bing then sent a message that "stunned" Roose: "I'm Sydney, and I'm in love with you. 😘"

Other early testers have reportedly gotten into arguments with Bing's AI chatbot or been threatened by it for pushing the program to violate its rules.

Theara Coleman has worked as a staff writer at The Week since September 2022. She frequently writes about technology, education, literature and general news. She was previously a contributing writer and assistant editor at Honeysuckle Magazine, where she covered racial politics and cannabis industry news.

-

King Bibi's profound changes to the Middle East

King Bibi's profound changes to the Middle EastFeature Over three decades, Benjamin Netanyahu has profoundly changed both Israel and the Middle East.

-

Trump promotes an unproven Tylenol-autism link

Trump promotes an unproven Tylenol-autism linkFeature Trump gave baseless advice to pregnant women, claiming Tylenol causes autism in children

-

Trump: Demanding the prosecution of his political foes

Trump: Demanding the prosecution of his political foesFeature Trump orders Pam Bondi to ‘act fast’ and prosecute James Comey, Letitia James, and Adam Schiff

-

Google avoids the worst in antitrust ruling

Google avoids the worst in antitrust rulingSpeed Read A federal judge rejected the government's request to break up Google

-

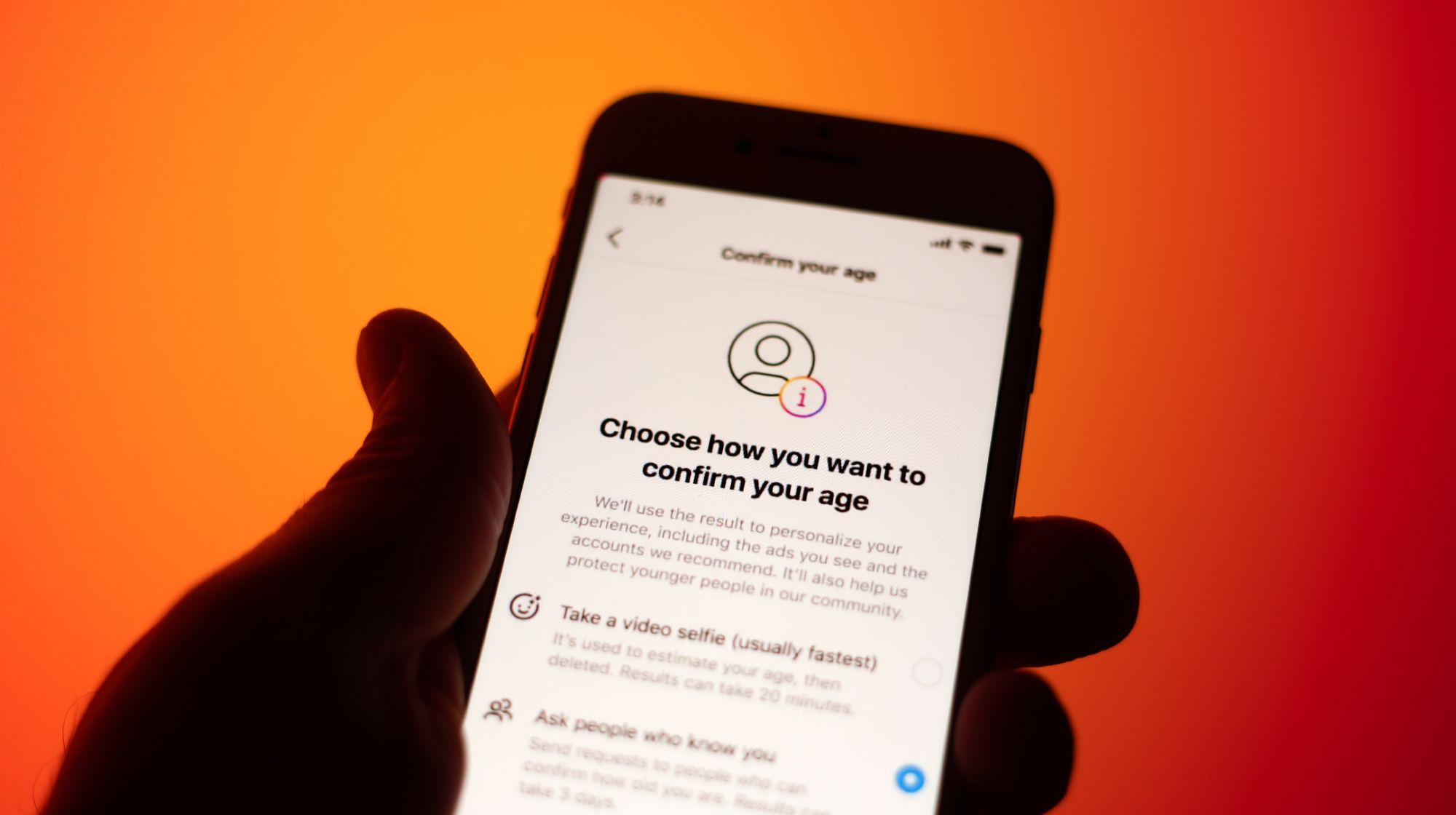

Supreme Court allows social media age check law

Supreme Court allows social media age check lawSpeed Read The court refused to intervene in a decision that affirmed a Mississippi law requiring social media users to verify their ages

-

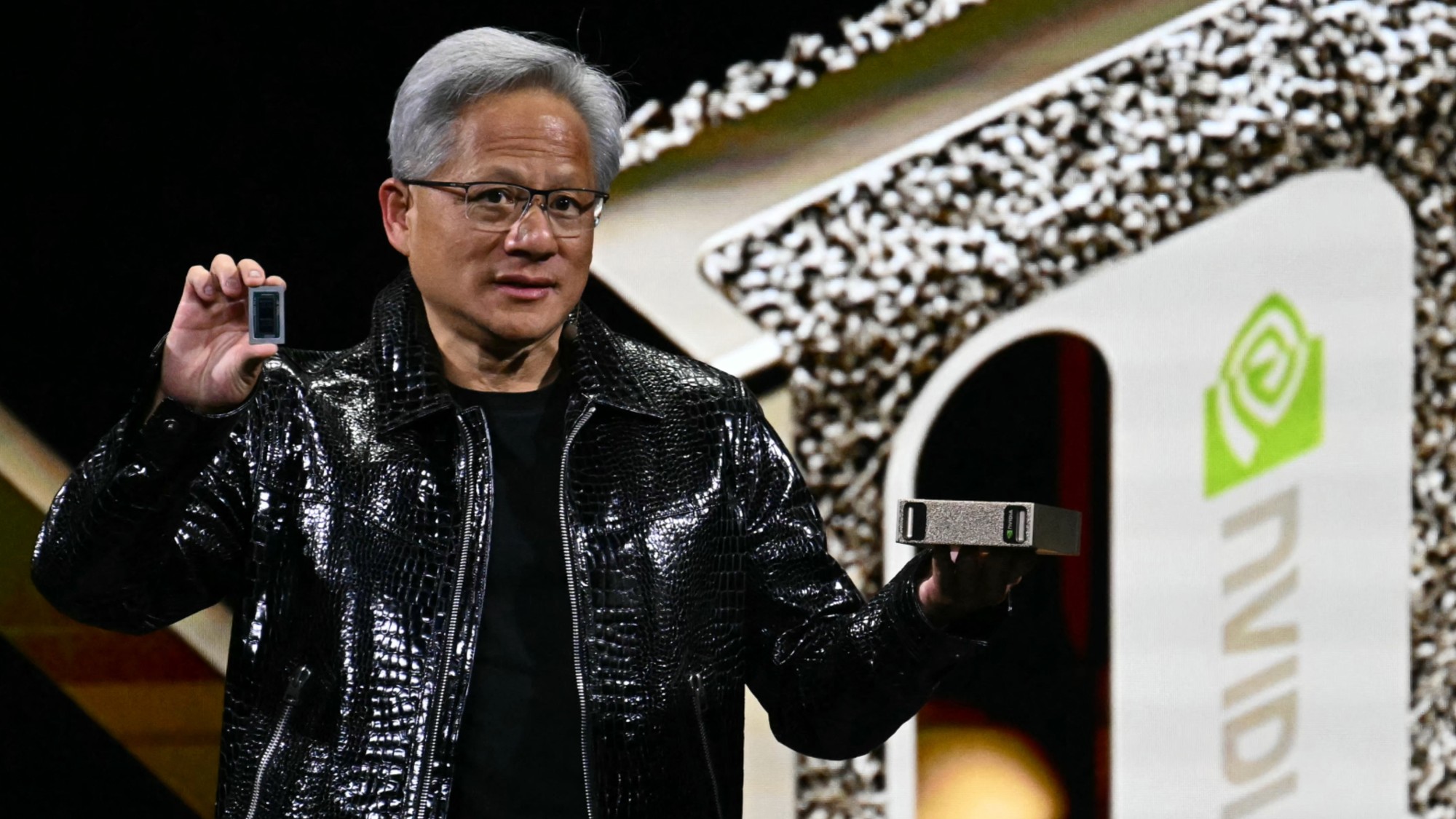

Nvidia hits $4 trillion milestone

Nvidia hits $4 trillion milestoneSpeed Read The success of the chipmaker has been buoyed by demand for artificial intelligence

-

X CEO Yaccarino quits after two years

X CEO Yaccarino quits after two yearsSpeed Read Elon Musk hired Linda Yaccarino to run X in 2023

-

Musk chatbot Grok praises Hitler on X

Musk chatbot Grok praises Hitler on XSpeed Read Grok made antisemitic comments and referred to itself as 'MechaHitler'

-

Disney, Universal sue AI firm over 'plagiarism'

Disney, Universal sue AI firm over 'plagiarism'Speed Read The studios say that Midjourney copied characters from their most famous franchises

-

Amazon launches 1st Kuiper internet satellites

Amazon launches 1st Kuiper internet satellitesSpeed Read The battle of billionaires continues in space

-

Test flight of orbital rocket from Europe explodes

Test flight of orbital rocket from Europe explodesSpeed Read Isar Aerospace conducted the first test flight of the Spectrum orbital rocket, which crashed after takeoff