Turns out Facebook isn't as polarizing as previously thought

New studies show that, contrary to prior belief, the algorithm has little effect on driving polarization


Many worry the U.S. has become increasingly polarized over the past few years, and activists, regulators and lawmakers have often blamed social media. They've argued that the algorithm that powers Meta's platforms, Facebook and Instagram, creates echo chambers that spread disinformation and further perpetuate political division. However, four new studies published in the journals Science and Nature "complicate that narrative," The New York Times reported. The results paint a "contradictory and nuanced" picture of social media feeds' influence on politics. They suggest that "understanding social media's role in shaping discourse may take years to unwind," the Times added.

The papers are the first in a series of 16 peer-reviewed studies conducted in collaboration with Meta. The research stands out because the teams could access internal data provided by the company, whereas previous experiments relied on publicly available information. The teams ran various experiments by altering users' Facebook and Instagram feeds in the fall leading up to the 2020 election to see if the changes could "change political beliefs, knowledge or polarization," The Washington Post explained. Methods included changing the chronology of the feeds, limiting viral content, removing the ability to reshare content, and reducing content from like-minded users. A study published in Science based on the data of 208 million anonymized users found the resharing of "content from untrustworthy sources." The researchers also found that conservative users share and consume most of the content flagged as misinformation by third-party fact-checkers. Still, across the studies, researchers found that the changes had little effect on users' polarization or offline political activity.

Algorithms do play a significant role in what people see on the platforms. But researchers found they had "very little impact in changes to people's attitudes about politics and even people's self-reported participation around politics," Joshua Tucker, the co-director of the Center for Social Media and Politics at New York University and one of the heads of the project, said in an interview. The response to the studies' complicated results has been mixed.


Social media isn't the only cause of polarization

For Meta, the findings bolster the company's argument that its algorithm was not perpetuating political division. Nick Clegg, Meta's president of global affairs, applauded the studies for showing there's "little evidence that key features of Meta's platforms alone cause harmful 'affective' polarization or have meaningful effects on these outcomes."

However, we should be "careful about what we assume is happening versus what actually is," Katie Harbath, a former public policy director at Meta, told the Times. Together, the studies contradict the "assumed impacts of social media." Multiple factors shape our political preferences, and social media "alone is not to blame for all our woes," she added.

Tech companies aren't off the hook

Some critics and researchers who observed the studies before they were published remain ambivalent about the results. One thing they can't ignore is that Meta was a partner in the research project and spent $20 million on data gathering from the National Opinion Research Center at the University of Chicago, a nonpartisan organization. Although Meta didn't directly pay the researchers, some of its employees worked with the teams. Additionally, Meta had the authority to reject data requests that infringed on users' privacy rights.

Advocates argue that the studies don't exonerate tech companies from working to push back against viral misinformation. Studies endorsed by Meta that "look piecemeal at small sample time periods shouldn't serve as excuses for allowing lies to spread," Nora Benavidez, a senior counsel at the digital civil rights group Free Press, argued to the Post. Companies "should be stepping up more in advance of elections, not concocting new schemes to dodge accountability," Benavidez concluded.


"It's a little too buttoned up to say this shows Facebook is not a huge problem or social media platforms aren't a problem," Michael W. Wagner, a professor at the University of Wisconsin at Madison's School of Journalism and Mass Communication and an independent observer of the project, told the outlet. Instead, it presents "good scientific evidence there is not just one problem that is easy to solve."

Theara Coleman has worked as a staff writer at The Week since September 2022. She frequently writes about technology, education, literature and general news. She was previously a contributing writer and assistant editor at Honeysuckle Magazine, where she covered racial politics and cannabis industry news.

-

Switzerland could vote to cap its population

Switzerland could vote to cap its populationUnder the Radar Swiss People’s Party proposes referendum on radical anti-immigration measure to limit residents to 10 million

-

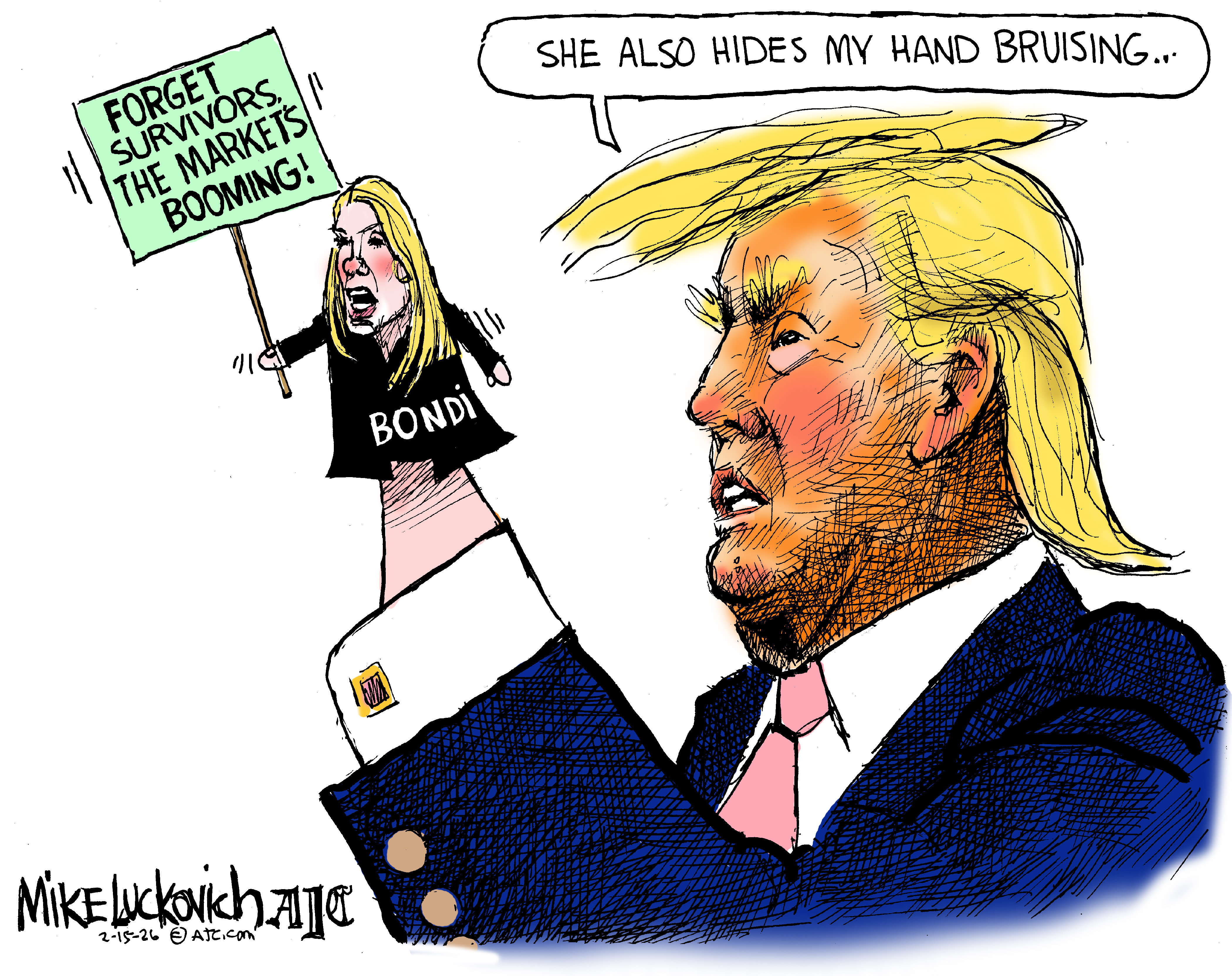

Political cartoons for February 15

Political cartoons for February 15Cartoons Sunday's political cartoons include political ventriloquism, Europe in the middle, and more

-

The broken water companies failing England and Wales

The broken water companies failing England and WalesExplainer With rising bills, deteriorating river health and a lack of investment, regulators face an uphill battle to stabilise the industry

-

Are Big Tech firms the new tobacco companies?

Are Big Tech firms the new tobacco companies?Today’s Big Question A trial will determine whether Meta and YouTube designed addictive products

-

Will AI kill the smartphone?

Will AI kill the smartphone?In The Spotlight OpenAI and Meta want to unseat the ‘Lennon and McCartney’ of the gadget era

-

Is social media over?

Is social media over?Today’s Big Question We may look back on 2025 as the moment social media jumped the shark

-

Metaverse: Zuckerberg quits his virtual obsession

Metaverse: Zuckerberg quits his virtual obsessionFeature The tech mogul’s vision for virtual worlds inhabited by millions of users was clearly a flop

-

Has Google burst the Nvidia bubble?

Has Google burst the Nvidia bubble?Today’s Big Question The world’s most valuable company faces a challenge from Google, as companies eye up ‘more specialised’ and ‘less power-hungry’ alternatives

-

Sora 2 and the fear of an AI video future

Sora 2 and the fear of an AI video futureIn the Spotlight Cutting-edge video-creation app shares ‘hyperrealistic’ AI content for free

-

Social media: How 'content' replaced friendship

Social media: How 'content' replaced friendshipFeature Facebook has shifted from connecting with friends to competing with entertainment companies

-

Meta on trial: What will become of Mark Zuckerberg's social media empire?

Meta on trial: What will become of Mark Zuckerberg's social media empire?Today's Big Question Despite the CEO's attempt to ingratiate himself with Trump, Meta is on trial, accused by the U.S. government of breaking antitrust law