How might AI chatbots replace mental health therapists?

Clients form 'strong relationships' with tech

There is a striking shortage of mental health care providers in the United States. New research suggests that AI chatbots can fill in the gaps — and be remarkably effective while doing so.

Artificial intelligence can deliver mental health therapy "with as much efficacy as — or more than — human clinicians," said NPR. New research published in the New England Journal of Medicine looked at the results delivered by a bot designed at Dartmouth College.

What did the commentators say?

There was initially a lot of "trial and error" in training AI to work with humans suffering from depression and anxiety, said Nick Jacobson, one of the researchers, but the bot ultimately delivered outcomes similar to the "best evidence-based trials of psychotherapy." Patients developed a "strong relationship with an ability to trust" the digital therapist, he said.

Other experts see "reliance on bot-based therapy as a poor substitute for the real thing," said Axios. Therapy is about "forming a relationship with another human being who understands the complexity of life," said sociologist Sherry Turkle. But another expert, Skidmore College's Lucas LaFreniere, said it depends on whether patients are willing to suspend their disbelief. "If the client is perceiving empathy," he said, "they benefit from the empathy."

AI therapists could "further isolate vulnerable patients instead of easing suffering," Nigel Mulligan, a lecturer in psychotherapy at Dublin City University, said at The Conversation. It is easy to understand why people would turn to a "convenient and cost-effective resource" for mental health services, but while bots can be "beneficial for some," they are generally not an "effective substitute" for a human therapist. Humans can offer "emotional nuance, intuition and a personal connection." AI, though, cannot duplicate that nuance, making it "unsuitable for those with severe mental health issues."

The technology can make mental health services "more accessible, more personalized, and more efficient," Dr. Jacques Ambrose said at NewYork-Presbyterian's blog. Large language models have the ability to "analyze the vast amount of patient data in psychiatry" and come up with tailored treatments specific to a client. But there are concerns about privacy and the "human-to-human connection" that makes therapy effective. The best approach is one that creates a "partnership between the clinician and the technology."

What next?

In February, the American Psychological Association made a presentation to the Federal Trade Commission warning against chatbots "masquerading" as therapists that "could drive vulnerable people to harm themselves or others," said The New York Times. "People are going to be misled, and will misunderstand what good psychological care is," said Arthur C. Evans Jr., the Association's chief executive.

There are some efforts to limit the reach of AI therapy. In California, a bill has been introduced that would ban tech companies from deploying an AI program that "pretends to be a human certified as a health provider," said Vox. Chatbots are "not licensed health professionals," said state Assembly Member Mia Bonta, "and they shouldn't be allowed to present themselves as such."

Joel Mathis is a writer with 30 years of newspaper and online journalism experience. His work also regularly appears in National Geographic and The Kansas City Star. His awards include best online commentary at the Online News Association and (twice) at the City and Regional Magazine Association.

-

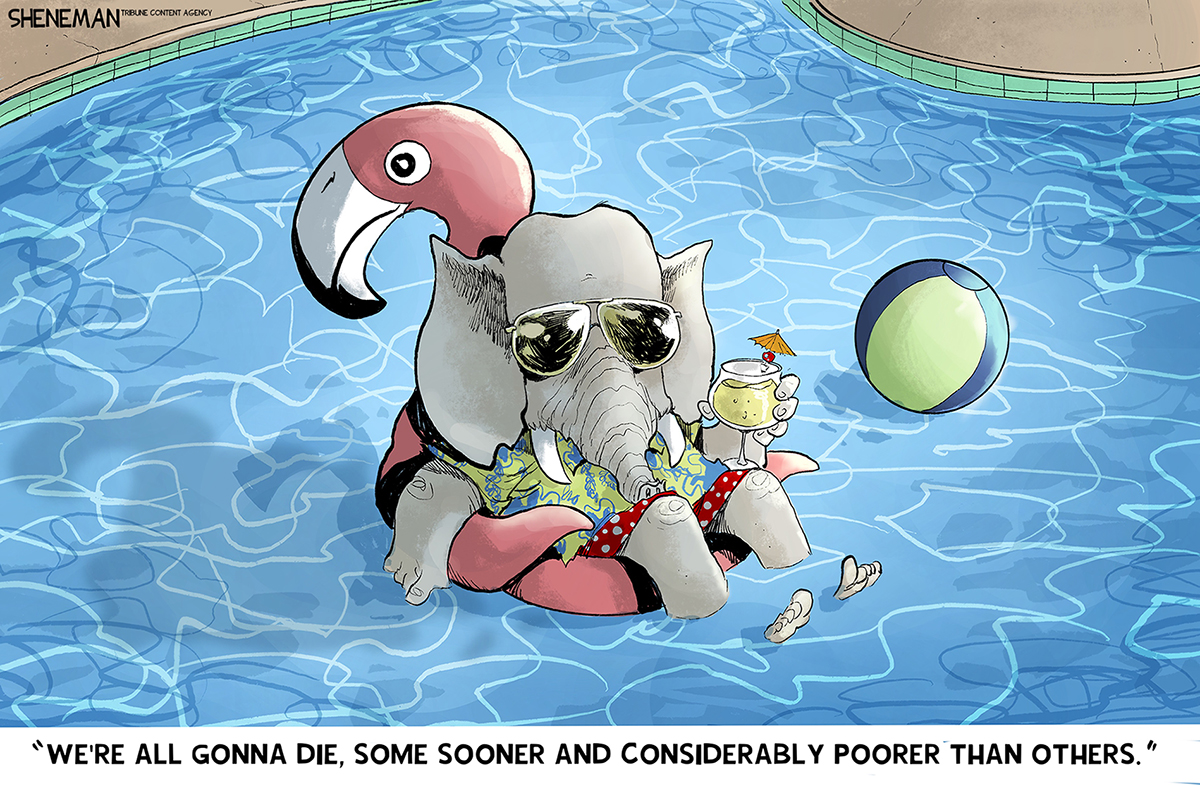

June 7 editorial cartoons

June 7 editorial cartoonsCartoons Saturday's political cartoons include reminders that we are all going to die, and Elon Musk taking a chainsaw to the 'Big, Beautiful, Bill'

-

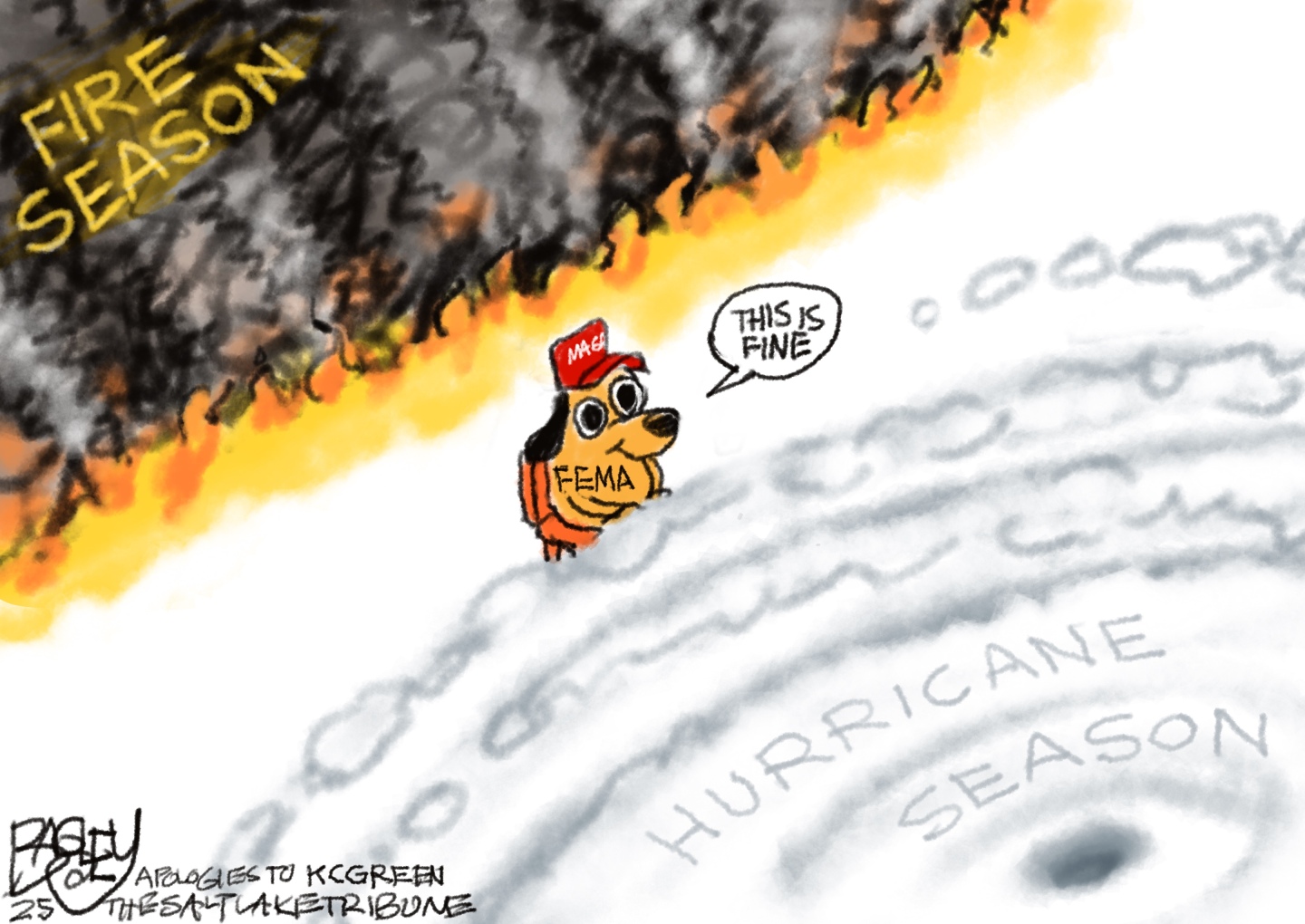

5 naturally disastrous editorial cartoons about FEMA

5 naturally disastrous editorial cartoons about FEMACartoons Political cartoonists take on FEMA, the hurricane season, and the This is Fine meme

-

Amanda Feilding: the serious legacy of the 'Crackpot Countess'

Amanda Feilding: the serious legacy of the 'Crackpot Countess'In the Spotlight Nicknamed 'Lady Mindbender', eccentric aristocrat was a pioneer in the field of psychedelic research

-

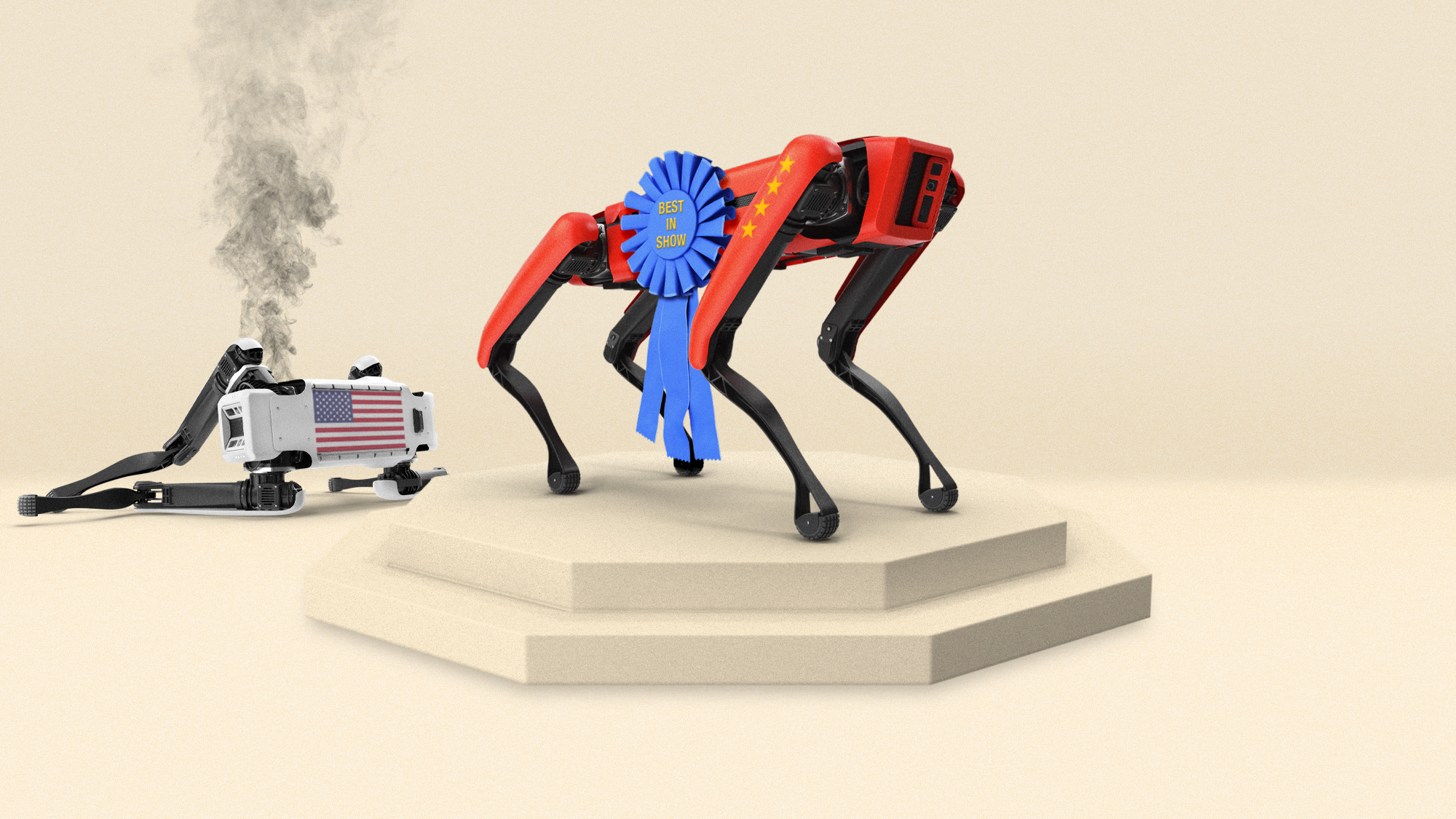

Is China winning the AI race?

Is China winning the AI race?Today's Big Question Or is it playing a different game than the US?

-

Google's new AI Mode feature hints at the next era of search

Google's new AI Mode feature hints at the next era of searchIn the Spotlight The search giant is going all in on AI, much to the chagrin of the rest of the web

-

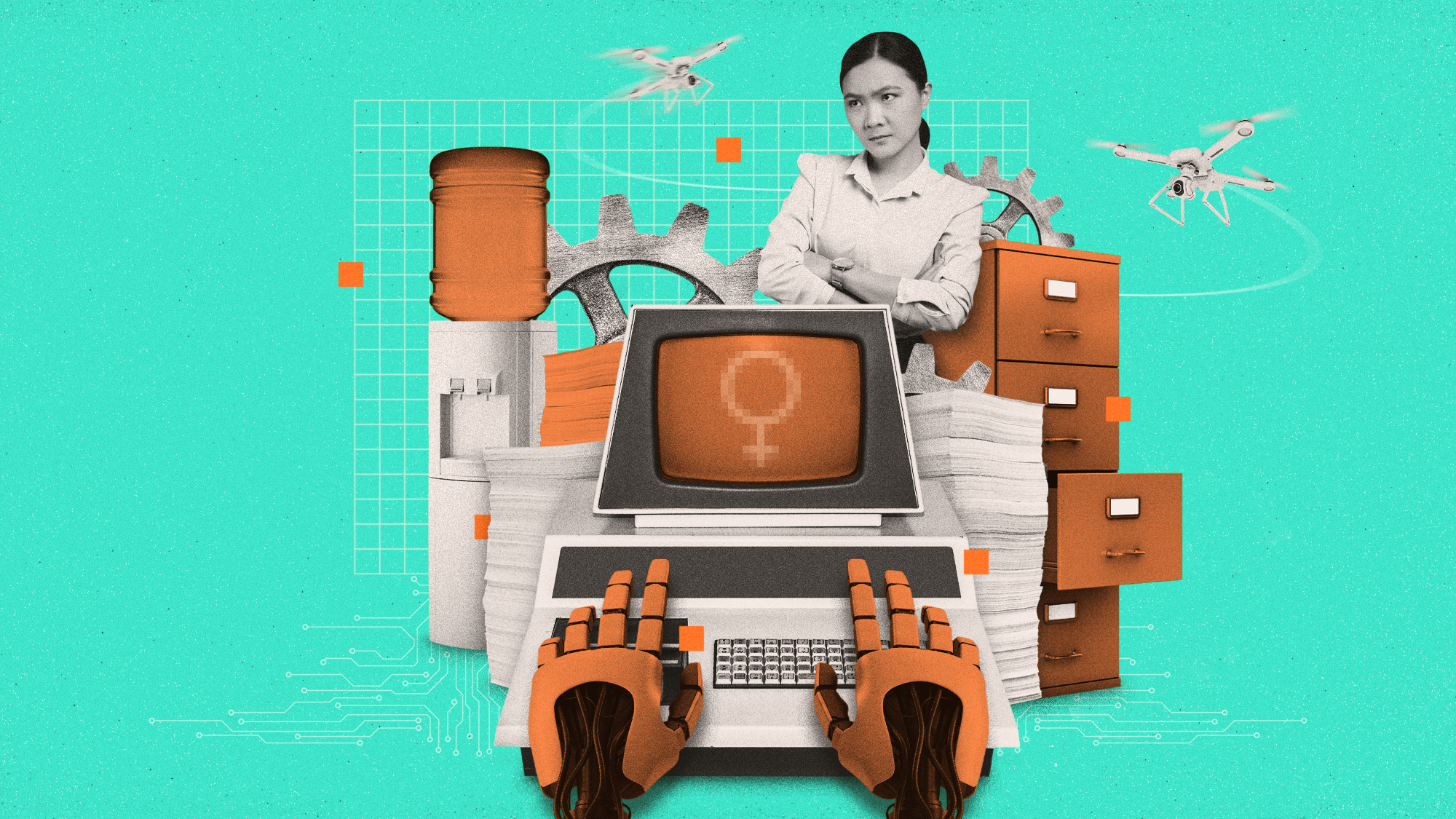

How the AI takeover might affect women more than men

How the AI takeover might affect women more than menThe Explainer The tech boom is a blow to gender equality

-

Did you get a call from a government official? It might be an AI scam.

Did you get a call from a government official? It might be an AI scam.The Explainer Hackers may be using AI to impersonate senior government officers, said the FBI

-

What Elon Musk's Grok AI controversy reveals about chatbots

What Elon Musk's Grok AI controversy reveals about chatbotsIn the Spotlight The spread of misinformation is a reminder of how imperfect chatbots really are

-

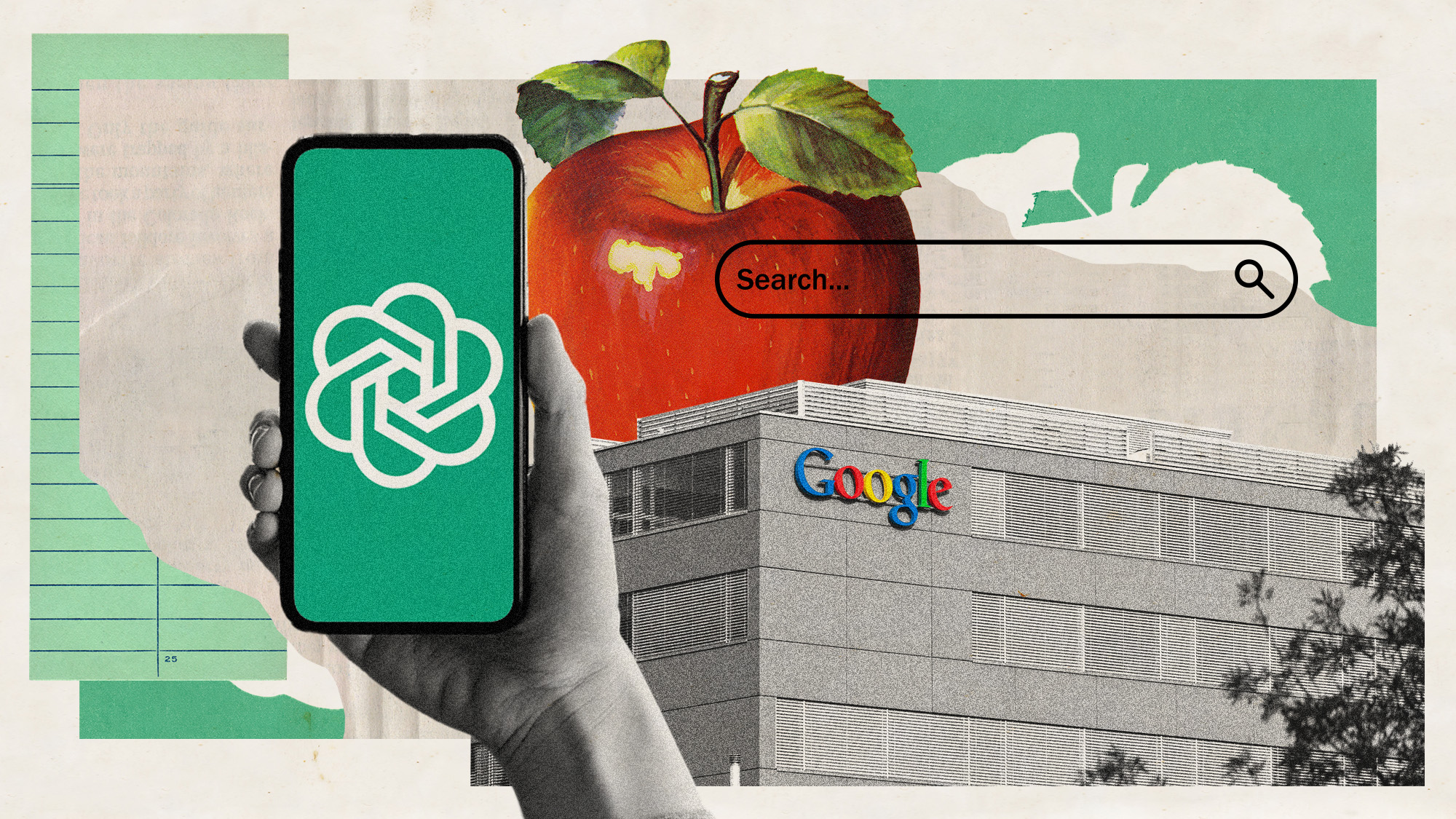

Is Apple breaking up with Google?

Is Apple breaking up with Google?Today's Big Question Google is the default search engine in the Safari browser. The emergence of artificial intelligence could change that.

-

Inside the FDA's plans to embrace AI agencywide

Inside the FDA's plans to embrace AI agencywideIn the Spotlight Rumors are swirling about a bespoke AI chatbot being developed for the FDA by OpenAI

-

Digital consent: Law targets deepfake and revenge porn

Digital consent: Law targets deepfake and revenge pornFeature The Senate has passed a new bill that will make it a crime to share explicit AI-generated images of minors and adults without consent