What are AI hallucinations?

ChatGPT and the like have been known to make things up – and that can cause real damage

If American sci-fi novelist Philip K. Dick were alive today, he might have given his most famous work the title: "Do AIs Hallucinate Electric Sheep?"

Generative AI systems such as ChatGPT and DALL-E have gained a reputation for producing output that appears plausible but is in fact false, a phenomenon researchers call an AI hallucination.

This is "both a strength and a weakness", said Nature. While it fuels the systems' "celebrated" inventiveness, it also leads them to "sometimes blur truth and fiction", for example by adding something incorrect to an otherwise factual article. All the while, they are "totally confident" about what they have produced, said theoretical computer scientist Santosh Vempala. "They sound like politicians."

What happens when AI is wrong?

The type of hallucination an AI generates depends on the system. Large language models (LLMs) like ChatGPT are "sophisticated pattern predictors", said TechRadar, generating text by predicting which word is statistically most likely to follow the ones before it.
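To make the idea of a "pattern predictor" concrete, here is a minimal sketch in Python. It is a toy stand-in, not how ChatGPT works internally: real LLMs use large neural networks over word fragments, whereas this example simply counts which word follows which in a tiny invented corpus. The function names and the corpus are assumptions made for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows, start, length=5):
    """Extend `start` one word at a time, always taking the likeliest next word."""
    out = [start]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


# Invented training text (an assumption for this sketch).
corpus = (
    "the court fined the lawyer . "
    "the court cited the case . "
    "the court fined the company ."
)
model = train_bigrams(corpus)

print(predict_next(model, "court"))  # "fined" follows "court" twice, "cited" once
print(generate(model, "the", 3))
```

The predictor has no notion of whether a sentence is true. It only knows which words tend to appear together, so it can string together fluent text that never appeared in its training data.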

Hallucinations occur when the system isn't sure about a question or answer and "fills in gaps" based on similar examples it has been given. This leads to information that is "incorrect, made up or irrelevant", said researchers Anna Choi and Katelyn Xiaoying Mei on The Conversation.

This can have serious consequences. In 2023, American lawyer Steven Schwartz used ChatGPT to help him write a legal brief to submit in court. But instead of finding legal precedents that would help his argument, the AI made up some cases and misidentified others. Schwartz was later fined after the opposing lawyers pointed out the inaccuracies.

ChatGPT's hallucinations may also spell trouble for its maker, OpenAI. This month, Norwegian Arve Hjalmar Holmen filed a complaint against the company after the chatbot falsely claimed he had killed two of his children.

Holmen, who has never been charged with or convicted of any crime, had asked ChatGPT: "Who is Arve Hjalmar Holmen?" It answered that he was a "Norwegian individual" who had "gained attention" when his sons were "tragically found dead in a pond near their home in Trondheim, Norway, in December 2020". It added that he had received a 21-year prison sentence for their murder.

Digital rights group Noyb, acting on Holmen's behalf, said OpenAI had violated data accuracy rules by "knowingly allowing ChatGPT to produce defamatory results".

Can you stop AI hallucinations?

There may be no easy fix for AI's flights of fancy. Hallucinations are "fundamental" to how LLMs work, said Nature, which could make it impossible to eliminate them completely. In addition, said Choi and Mei on The Conversation, "novel" responses are "expected and desired" when a system is asked to be creative, such as when writing a story or generating an image.

However, that does not mean companies cannot reduce the number of hallucinations a system has, or their effect, said TechTarget. Solutions could involve going back to the original material fed into the system to check for inaccuracies or using retrieval-augmented generation, allowing LLMs to access external, up-to-date information to improve accuracy.
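As a rough illustration of retrieval-augmented generation, the sketch below picks the stored document that shares the most words with a question and places it in the prompt, so that a model would answer from supplied text rather than from memory alone. Everything here is an assumption made for the example: the helper names, the word-overlap scoring (production systems typically use more sophisticated search) and the two sample documents. No real model is called.

```python
import re


def tokenize(text):
    """Lower-case the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, documents, top_k=1):
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(question, documents):
    """Build a prompt that tells the model to rely only on retrieved sources."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you do not know.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical knowledge base (sample sentences written for this sketch).
documents = [
    "Steven Schwartz was fined in 2023 over a brief that cited invented cases.",
    "Retrieval-augmented generation lets a model consult external documents.",
]

question = "Who was fined in 2023?"
prompt = build_prompt(question, documents)
print(prompt)
```

The prompt would then be sent to a language model. Grounding the answer in retrieved text can reduce hallucinations, but it does not guarantee the model will stick to the sources.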

Another possibility is automated reasoning to fact-check answers straight away, a system Amazon introduced to its generative AI offerings last December. Rather than "guessing or predicting" an answer, automated reasoning uses logic and problem-solving techniques to check its validity.

Until a solution is found, hallucinations will remain an "inherent challenge" for LLMs, said TechRadar. The answer? "Fact-check everything."

Elizabeth Carr-Ellis is a freelance journalist and was previously the UK website's Production Editor. She has also held senior roles at The Scotsman, Sunday Herald and Hello!. As well as her writing, she is the creator and co-founder of the Pausitivity #KnowYourMenopause campaign and has appeared on national and international media discussing women's healthcare.

-

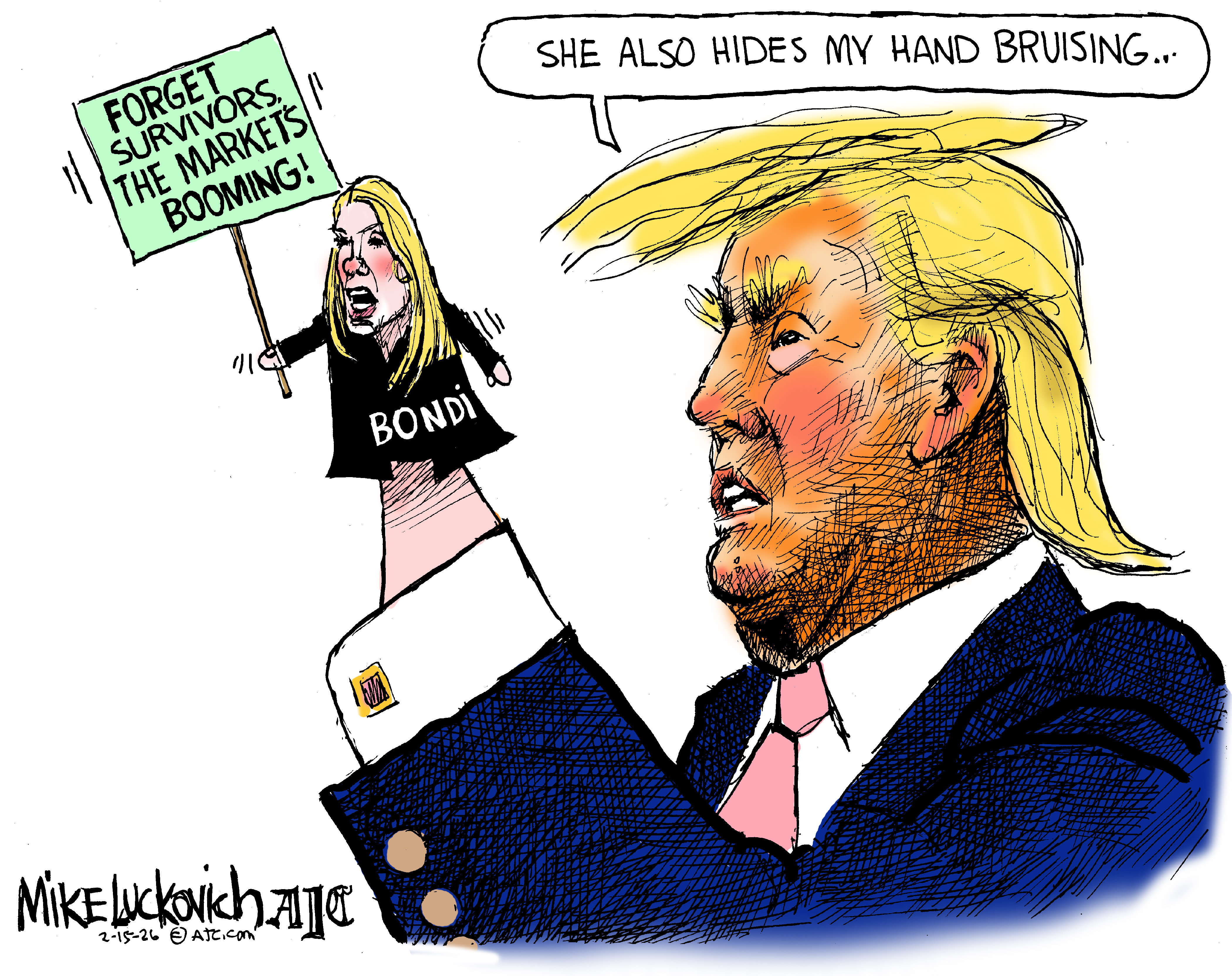

Political cartoons for February 15

Political cartoons for February 15Cartoons Sunday's political cartoons include political ventriloquism, Europe in the middle, and more

-

The broken water companies failing England and Wales

The broken water companies failing England and WalesExplainer With rising bills, deteriorating river health and a lack of investment, regulators face an uphill battle to stabilise the industry

-

A thrilling foodie city in northern Japan

A thrilling foodie city in northern JapanThe Week Recommends The food scene here is ‘unspoilt’ and ‘fun’

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

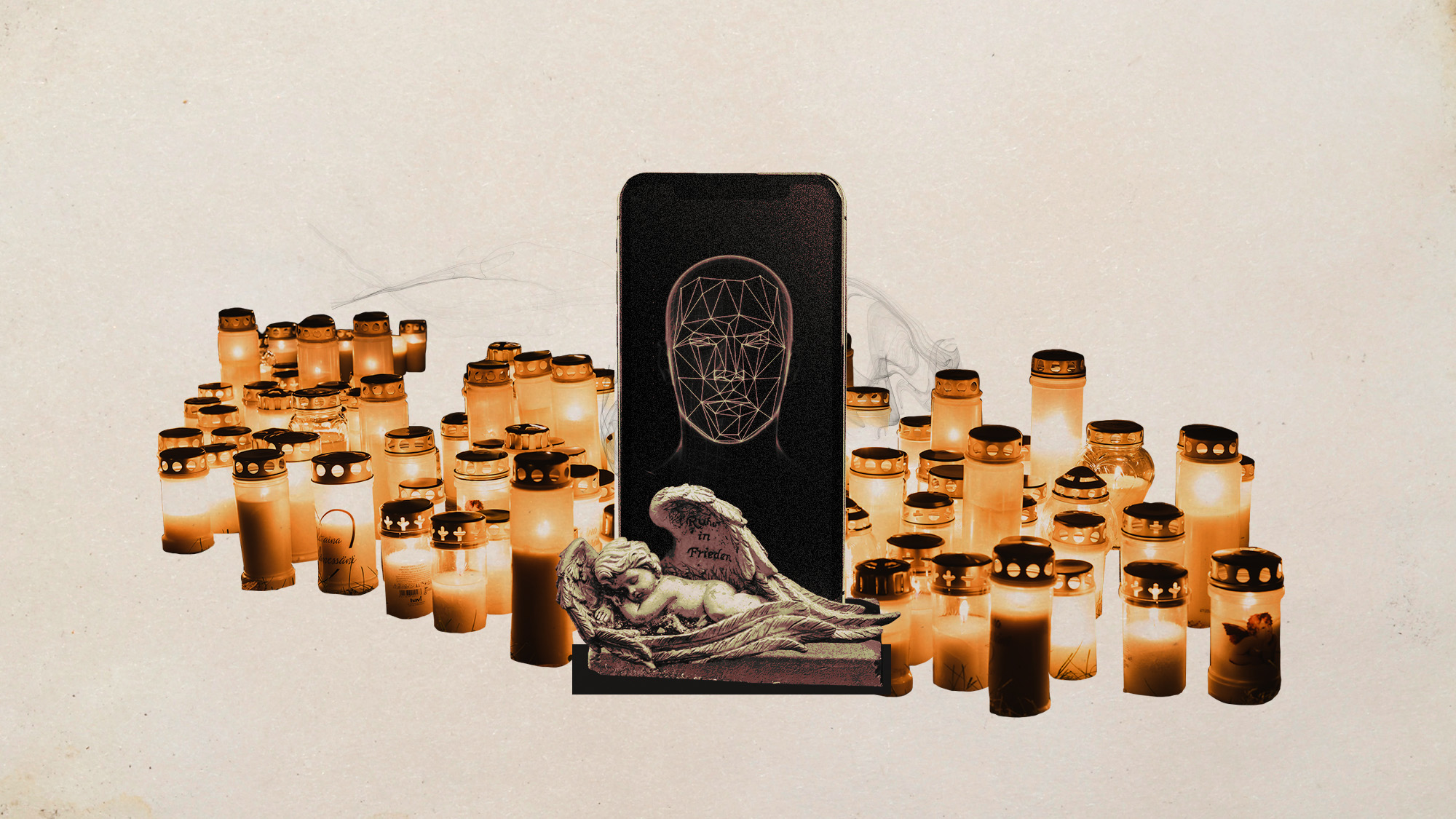

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical

-

The robot revolution

The robot revolutionFeature Advances in tech and AI are producing android machine workers. What will that mean for humans?

-

Separating the real from the fake: tips for spotting AI slop

Separating the real from the fake: tips for spotting AI slopThe Week Recommends Advanced AI may have made slop videos harder to spot, but experts say it’s still possible to detect them