OpenAI: the ChatGPT start-up now worth billions

The company co-founded by Elon Musk has secured investment from Microsoft as its artificial intelligence chatbot takes the world by storm

ChatGPT was heralded as the “world’s first truly useful chatbot” after launching in November last year.

Amid “breathless predictions” about the potential impact of the artificial intelligence bot, said The Times, social media was flooded with examples of ChatGPT’s capabilities, including coding, essay-writing and generating pop lyrics in the style of Shakespeare. The system’s creators, OpenAI, claimed it had attracted more than a million regular users within little more than a week of being released.

And now Microsoft is getting in on the action, by pumping $10bn into OpenAI. The investment in the San Francisco-based start-up is Microsoft’s “biggest bet yet that artificial intelligence systems have the power to transform the tech giant’s business model and products”, said the Financial Times.

What is OpenAI?

OpenAI was founded in 2015 by investor, programmer and blogger Sam Altman and other high-profile tech entrepreneurs including Tesla boss Elon Musk and PayPal co-founder Peter Thiel.

Altman, who remains CEO of OpenAI, was previously president of Y Combinator (YC), a tech start-up accelerator that has backed major companies ranging from Airbnb and Dropbox to Reddit and Twitch. He also co-founded the location-sharing app Loopt in 2005.

The OpenAI bosses’ stated aim is “to ensure that artificial general intelligence (AGI) – by which we mean highly autonomous systems that outperform humans at most economically valuable work – benefits all of humanity”. OpenAI’s “primary fiduciary duty is to humanity”, they emphasise in the company charter.

This charter is “so sacred that employees’ pay is tied to how well they adhere to it”, said MIT Technology Review’s AI editor Karen Hao. Although “the purpose is not world domination”, she wrote, “AGI could be catastrophic without the careful guidance of a benevolent shepherd”.

OpenAI promotes itself as this shepherd and says the company was created as a non-profit in order to “build value for everyone rather than shareholders”. In a statement announcing the launch back in 2015, OpenAI also vowed to “freely collaborate with others across many institutions” and to “work with companies to research and deploy new technologies”.

Does OpenAI live up to its claims?

An investigation by MIT Technology Review uncovered “a misalignment between what the company publicly espouses and how it operates behind closed doors”, according to Hao. Former and current employees – many of whom reportedly “insisted on anonymity because they were not authorised to speak or feared retaliation” – were said to have portrayed a company “obsessed with maintaining secrecy, protecting its image, and retaining the loyalty of its employees”.

Even Musk has criticised OpenAI after quitting the board of directors in 2018, a decision that the company said was made to “eliminate potential future conflict” with the AI goals of Tesla.

In 2020, Musk tweeted that his confidence in OpenAI was “not high” when it came to safety. “OpenAI should be more open imo,” he wrote in response to MIT Technology Review’s investigation.

In a Twitter post shortly after the launch of ChatGPT, he wrote: “Need to understand more about governance structure & revenue plans going forward. OpenAI was started as open-source & non-profit. Neither are still true.”

Are there any other issues with ChatGPT?

Plenty, according to Gizmodo. The technology threatens to “kill the college essay and lead to other academic dysfunction”, “make human writers obsolete”, “generate factually inaccurate news articles (already happened)” and “cause a disinformation typhoon”. Concerns have also been raised that the easily accessible AI system could “democratise cybercrime” and help to “fuel easy malware creation”, said the site, as well as “get loads of people fired”.

OpenAI has faced further criticism after Time magazine reported that the company “used outsourced Kenyan labourers earning less than $2 per hour to make the chatbot less toxic”. Workers allegedly said they were “mentally scarred” after sifting through graphic images and disturbing text from the dark web to help build a tool that tags problematic content.

After the contractor cancelled the deal early, OpenAI insisted that “we take the mental health of our employees and those of our contractors very seriously”.

But the Partnership on AI, a coalition of AI organisations to which OpenAI belongs, told Time that “despite the foundational role played by these data enrichment professionals, a growing body of research reveals the precarious working conditions these workers face”.

“This may be the result of efforts to hide AI’s dependence on this large labour force when celebrating the efficiency gains of technology,” the coalition said. “Out of sight is also out of mind.”

-

How the FCC’s ‘equal time’ rule works

How the FCC’s ‘equal time’ rule worksIn the Spotlight The law is at the heart of the Colbert-CBS conflict

-

What is the endgame in the DHS shutdown?

What is the endgame in the DHS shutdown?Today’s Big Question Democrats want to rein in ICE’s immigration crackdown

-

‘Poor time management isn’t just an inconvenience’

‘Poor time management isn’t just an inconvenience’Instant Opinion Opinion, comment and editorials of the day

-

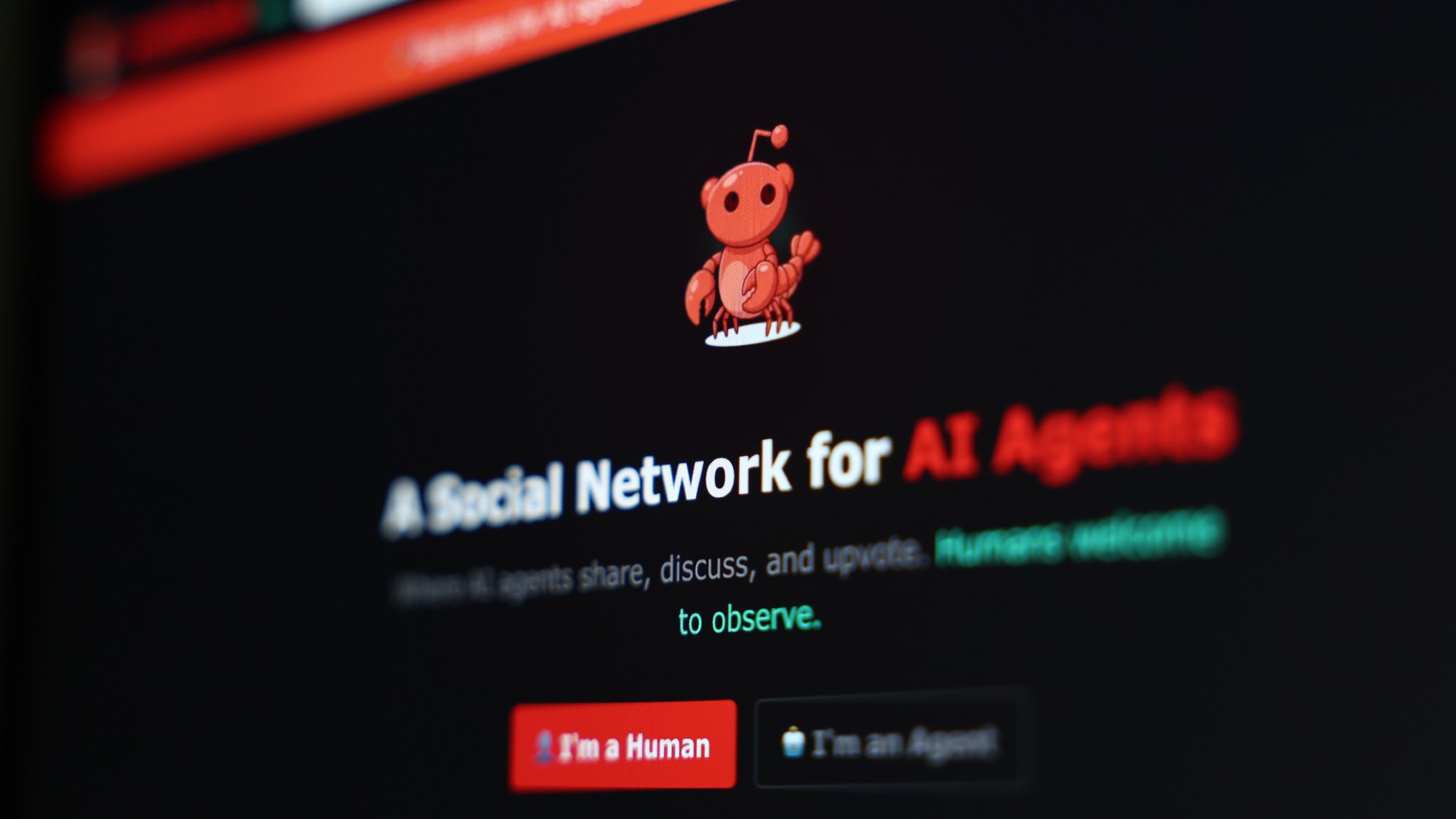

Are AI bots conspiring against us?

Are AI bots conspiring against us?Talking Point Moltbook, the AI social network where humans are banned, may be the tip of the iceberg

-

Elon Musk’s pivot from Mars to the moon

Elon Musk’s pivot from Mars to the moonIn the Spotlight SpaceX shifts focus with IPO approaching

-

Moltbook: the AI social media platform with no humans allowed

Moltbook: the AI social media platform with no humans allowedThe Explainer From ‘gripes’ about human programmers to creating new religions, the new AI-only network could bring us closer to the point of ‘singularity’

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’