Nightshade: the 'data poisoning' tool boosting fightback against AI

Tool is like 'putting hot sauce in your lunch so it doesn't get stolen from the workplace fridge', says creator

A new tool used by creatives to "poison" artificial intelligence models and stop them from using their artwork without consent was downloaded more than a quarter of a million times in just five days.

Nightshade, a free tool created by computer science researchers at the University of Chicago, is the latest "weapon" helping to protect artists' work from becoming "machine learning fodder", said Mashable.

Ben Zhao, a professor of computer science and leader of the project, said he had expected "extremely high enthusiasm" but still "underestimated" the scale of the response. In an email to VentureBeat after the number of downloads surpassed 250,000 earlier this month, he said the response was "simply beyond anything we imagined".

Why is it needed?

The availability of generative artificial intelligence (AI) models that can create text and images which mimic other people's work has caused an "uproar among creatives", reported the Evening Standard.

The sudden emergence and popularity of tools such as DALL-E, Midjourney and Stable Diffusion pose an existential threat to artists, who face an "uphill battle" protecting their work from being used to train AI models without consent, said TechCrunch. "Opt-out requests and do-not-scrape codes rely on AI companies to engage in good faith, but those motivated by profit over privacy can easily disregard such measures," said the tech site.

Some creatives have decided to take legal action against AI giants, such as Meta and OpenAI, claiming copyright infringement, while artificial intelligence was one of the main factors behind last year's long-running Hollywood writers' strike. Actors have protested at the rise of digital replicas, and celebrities have raised concerns about their voices being cloned without their consent.

But for the vast majority of artists, going on strike or taking their work offline is not an option as they do not have guaranteed employment and rely on social media exposure for commissions.

How does Nightshade work?

Nightshade, named after the deadly nightshade plant used to poison Roman emperors, "preys upon a security vulnerability inherent within AI models, which allows them to be tampered with", said the Standard. "It does this by adding changes to the pixels in a digital image that are invisible to the human eye. These modifications affect the image itself and the text or captions associated with it, both of which an AI relies on to identify what's in a picture."

Known as a prompt-specific poisoning attack, this can cause AI tools to malfunction and, for example, mistake pictures of cats for dogs or handbags for toasters.
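To give a rough sense of how a change too small to see can still flip what a model "sees", here is a minimal sketch. It is not Nightshade's algorithm: the linear "cat or dog" scorer, its weights and the 16-pixel image are all hypothetical, and the example nudges a single image at classification time, whereas Nightshade is described as poisoning the data a model trains on. It only illustrates the general idea of a small, bounded pixel change having an outsized effect.

```python
# Toy illustration only: a made-up linear scorer, not a real image model
# and not the method used by Nightshade.

def classify(pixels, weights, bias):
    """Label the image 'dog' if the weighted pixel sum is positive, else 'cat'."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return "dog" if score > 0 else "cat"

def perturb(pixels, weights, epsilon):
    """Shift each pixel by at most `epsilon` in the direction that raises the score."""
    out = []
    for p, w in zip(pixels, weights):
        step = epsilon if w > 0 else -epsilon if w < 0 else 0
        out.append(min(255, max(0, p + step)))  # keep values in the 0-255 range
    return out

weights = [1, -1] * 8   # hypothetical model weights
bias = -10              # hypothetical bias
image = [100] * 16      # a flat grey 16-pixel "image"

shifted = perturb(image, weights, epsilon=2)
max_change = max(abs(a - b) for a, b in zip(image, shifted))

print(classify(image, weights, bias))    # cat
print(classify(shifted, weights, bias))  # dog
print(max_change)                        # 2
```

No pixel moves by more than 2 on a 0-255 scale, yet the toy scorer's label changes from "cat" to "dog".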

"Unpredictable responses of this sort make text-to-image models significantly less useful", said The Register, "which means model makers have an incentive to ensure that they only train on data that's been offered freely."

Zhao compared it to "putting hot sauce in your lunch so it doesn't get stolen from the workplace fridge".

If applied at scale, it would be "incredibly difficult" for AI companies to fix, said Mashable, "as each poisoned image must be individually identified and removed from their training pool. This could create a powerful incentive for such companies to think twice before dragging a trawl net through the internet and using artists' work without their explicit consent."

According to The Register, the Nightshade team are looking to release Nightshade in combination with an earlier tool, Glaze, which works to prevent AI models from learning an artist's signature "style" by subtly altering pixels.

Will it work?

In a research paper published last October, the team claimed Nightshade "can provide a powerful tool for content owners to protect their intellectual property against model trainers that disregard or ignore copyright notices, do-not-scrape/crawl directives, and opt-out lists".

The aim is not to take down Big AI, said TechCrunch, but "to force tech giants to pay for licensed work, instead of training AI models on scraped images".

"There is a right way of doing this," Zhao agreed. "The real issue here is about consent, is about compensation. We are just giving content creators a way to push back against unauthorised training."

Eva Toorenent, an illustrator, told MIT Technology Review that she hoped Nightshade would change the status quo.

"It is going to make [AI companies] think twice, because they have the possibility of destroying their entire model by taking our work without our consent," she said, while another artist, Autumn Beverly, said the tool "can help return the power back to the artists for their own work".

-

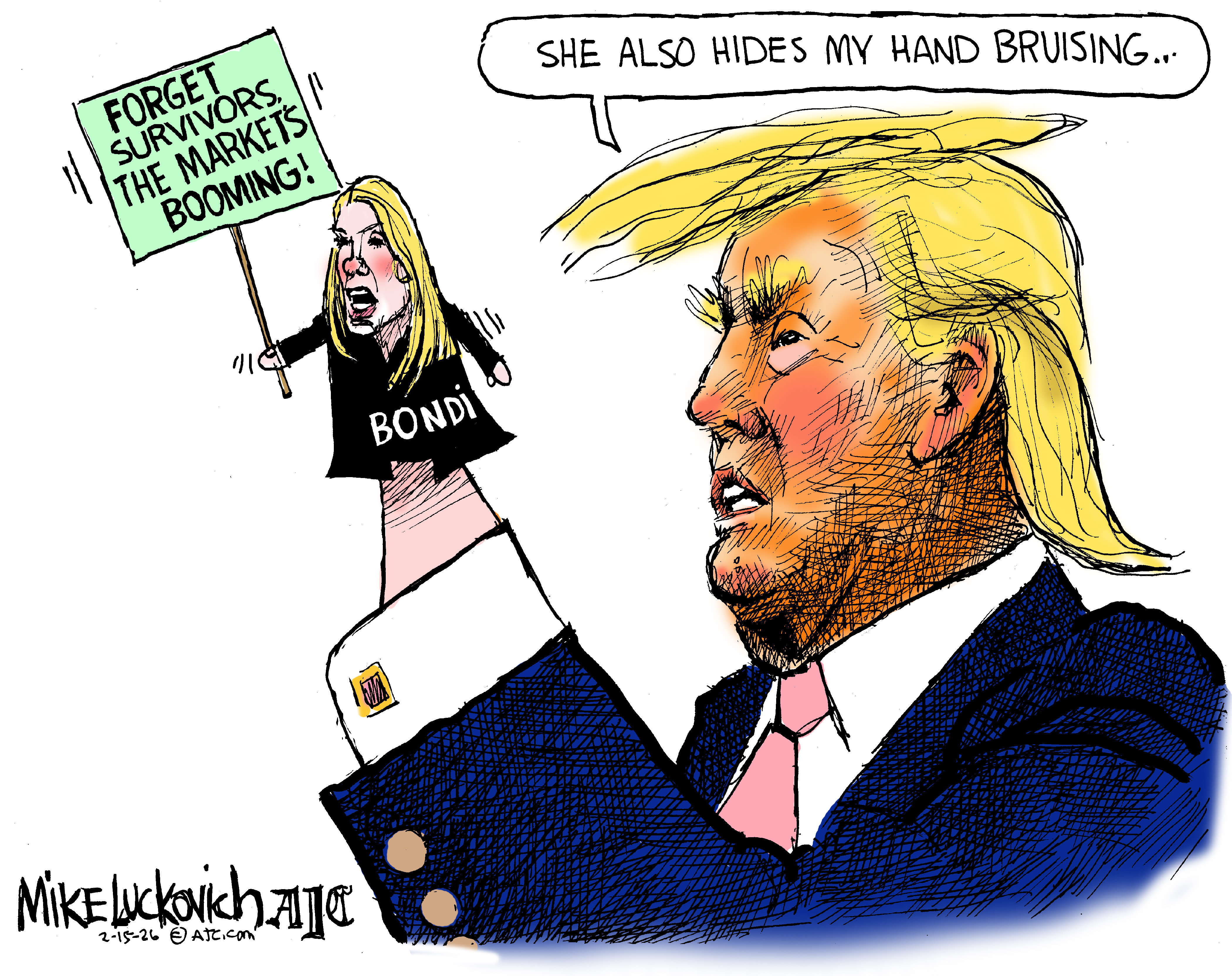

Political cartoons for February 15

Political cartoons for February 15Cartoons Sunday's political cartoons include political ventriloquism, Europe in the middle, and more

-

The broken water companies failing England and Wales

The broken water companies failing England and WalesExplainer With rising bills, deteriorating river health and a lack of investment, regulators face an uphill battle to stabilise the industry

-

A thrilling foodie city in northern Japan

A thrilling foodie city in northern JapanThe Week Recommends The food scene here is ‘unspoilt’ and ‘fun’

-

Are Big Tech firms the new tobacco companies?

Are Big Tech firms the new tobacco companies?Today’s Big Question A trial will determine whether Meta and YouTube designed addictive products

-

Will AI kill the smartphone?

Will AI kill the smartphone?In The Spotlight OpenAI and Meta want to unseat the ‘Lennon and McCartney’ of the gadget era

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Is social media over?

Is social media over?Today’s Big Question We may look back on 2025 as the moment social media jumped the shark

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’