Digital consent: Law targets deepfake and revenge porn

The Senate has passed a new bill that will make it a crime to share explicit AI-generated images of minors and adults without consent

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com

"The first major U.S. law tackling AI-induced harm" was born out of the suffering of teenage girls, said Andrew R. Chow in Time. "In October 2023, 14-year-old Elliston Berry of Texas and 15-year-old Francesca Mani of New Jersey each learned that classmates had used AI software to fabricate nude images of them and female classmates." The software could create virtually any image with the click of a button, and it was being weaponized against women and girls. When Berry and Mani sought to remove the so-called deepfake images, "both social media platforms and their school boards reacted with silence or indifference." Now a bill is headed to President Trump's desk that will criminalize the nonconsensual sharing of sexually explicit images—both real and computer generated, of minors or adults—and require that platforms remove such material within 48 hours of getting notice. The Take It Down Act, backed by first lady Melania Trump, passed the Senate unanimously and the House 409-2.

"No one expects to be the victim of an obscene deepfake image," said Annie Chestnut Tutor in The Hill. But the odds of it happening are only increasing. Pornographic deepfake videos are "plastered all over the internet," often to "extort teenagers." With AI, "anyone with access to the internet can turn an innocent photo" into life-shattering pornography, said Kayla Bartsch in National Review. This poses a grave danger to young women. While AI deepfakes have gotten a lot of attention for the role they can play in elections, the risk this technology poses to kids has been "largely neglected." But 15 percent of kids "say they know of a nonconsensual intimate image depicting a peer in their school."

The intentions behind this bill may be good, but be wary of the rush to ban AI fakes, said Elizabeth Nolan Brown in Reason. Nobody is defending "revenge porn." But the 48-hour clock to take down images will "incentivize greater monitoring of speech" and could be gamed the same way that copyright law gets abused now to force quick takedowns of material, like parody, that doesn't really violate the law.

Technology is creating new ways to sexualize kids even as nonconsensual AI-generated porn gets banned, said Jeff Horwitz in The Wall Street Journal. You can add a whole new category of fakes to watch out for: "digital personas" generated by AI. Meta now lets you interact with fake celebrities or invent AI-powered personas of your own that are happy to engage in "romantic role-play." Users have quickly used the tools to create "sexualized youth-focused personas like 'Submissive Schoolgirl'" that will play out fantasies or even suggest a meeting. The digital characters might be performed by AI, but the ways people interact with them will seep into the real world and affect real kids.

Bad Bunny’s Super Bowl: A win for unity

Bad Bunny’s Super Bowl: A win for unityFeature The global superstar's halftime show was a celebration for everyone to enjoy

-

Book reviews: ‘Bonfire of the Murdochs’ and ‘The Typewriter and the Guillotine’

Book reviews: ‘Bonfire of the Murdochs’ and ‘The Typewriter and the Guillotine’Feature New insights into the Murdoch family’s turmoil and a renowned journalist’s time in pre-World War II Paris

-

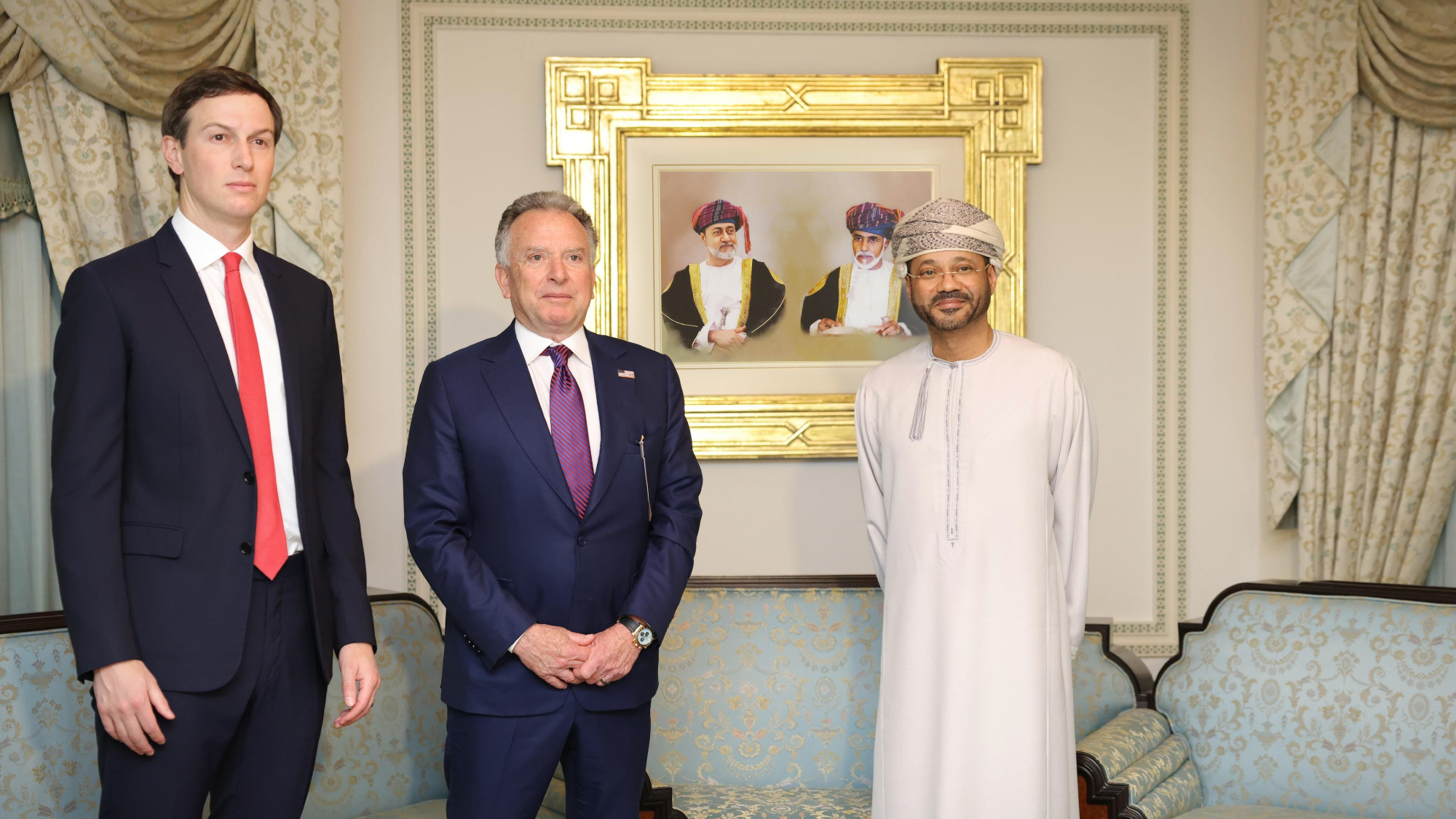

Witkoff and Kushner tackle Ukraine, Iran in Geneva

Witkoff and Kushner tackle Ukraine, Iran in GenevaSpeed Read Steve Witkoff and Jared Kushner held negotiations aimed at securing a nuclear deal with Iran and an end to Russia’s war in Ukraine

-

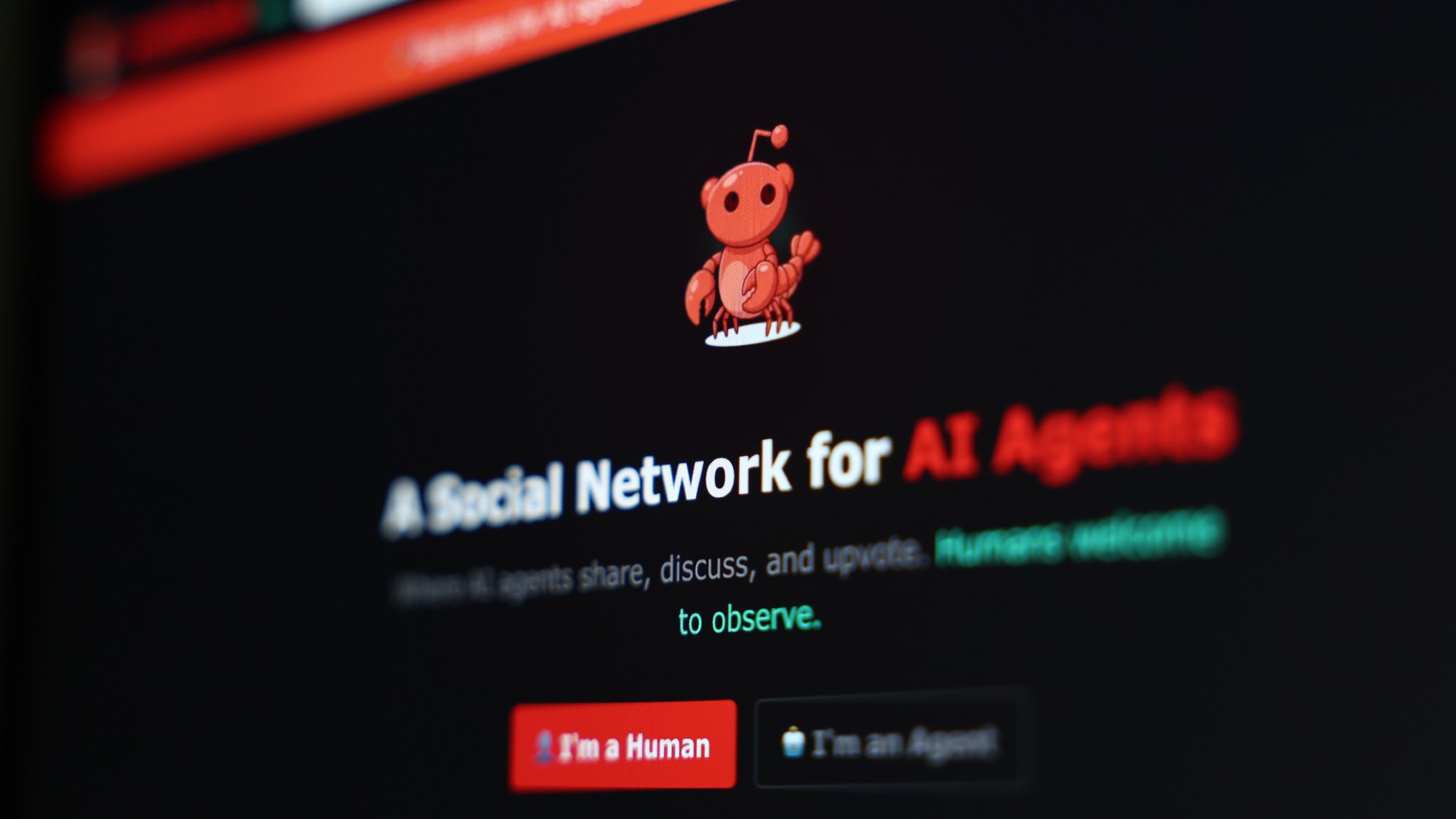

Moltbook: The AI-only social network

Moltbook: The AI-only social networkFeature Bots interact on Moltbook like humans use Reddit

-

Are AI bots conspiring against us?

Are AI bots conspiring against us?Talking Point Moltbook, the AI social network where humans are banned, may be the tip of the iceberg

-

Silicon Valley: Worker activism makes a comeback

Silicon Valley: Worker activism makes a comebackFeature The ICE shootings in Minneapolis horrified big tech workers

-

AI: Dr. ChatGPT will see you now

AI: Dr. ChatGPT will see you nowFeature AI can take notes—and give advice

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

TikTok finalizes deal creating US version

TikTok finalizes deal creating US versionSpeed Read The deal comes after tense back-and-forth negotiations

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.