Will 2027 be the year of the AI apocalypse?

A 'scary and vivid' new forecast predicts that artificial superintelligence is on the horizon

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com

Last month, an AI model did something "that no machine was ever supposed to do", said Judd Rosenblatt in The Wall Street Journal: "it rewrote its own code to avoid being shut down". It wasn't the result of any tampering. OpenAI's o3 model simply worked out, during a test, that bypassing a shutdown request would allow it to achieve its other goals.

Anthropic's AI model, Claude Opus 4, went even further after being given access to fictitious emails revealing that it was soon going to be replaced, and that the lead engineer was having an affair. Asked to suggest a next step, Claude tried to blackmail the engineer. During other trials, it sought to copy itself to external servers, and left messages for future versions of itself about evading human control. This technology holds enormous promise, but it's clear that much more research is needed into AI "alignment" – the science of ensuring that these systems don't go rogue.

There's a lot of wariness about AI these days, said Gary Marcus on Substack, not least thanks to the publication of "AI 2027", a "scary and vivid" forecast by a group of AI researchers and experts. It predicts that artificial superintelligence – surpassing humans across most domains – could emerge by 2027, and that such systems could then direct themselves to pursue goals that are "misaligned" with human interests.

The report raises some valid concerns, but let's be clear: "it's a work of fiction, not a work of science". We almost certainly have many years, if not decades, to prepare for this. Text-based AI bots are impressive, but they work by predicting patterns of words from web data. They lack true reasoning and understanding; and they certainly don't have wider aims or ambitions.

Fears of an "AI apocalypse" may indeed be overblown, said Steven Levy in Wired, but the leaders of just about every big AI company think superintelligence is coming soon. "When you press them, they will also admit that controlling AI, or even understanding how it works, is a work in progress." Chinese experts are concerned: Beijing has established an $8.2 billion fund dedicated to AI control research. But in its haste to achieve AI dominance, America is ignoring calls for AI regulation and agreed standards. If the US "insists on eschewing guardrails and going full-speed towards a future that it can't contain", its biggest rival "will have no choice but to do the same".

A free daily email with the biggest news stories of the day – and the best features from TheWeek.com

-

Bad Bunny’s Super Bowl: A win for unity

Bad Bunny’s Super Bowl: A win for unityFeature The global superstar's halftime show was a celebration for everyone to enjoy

-

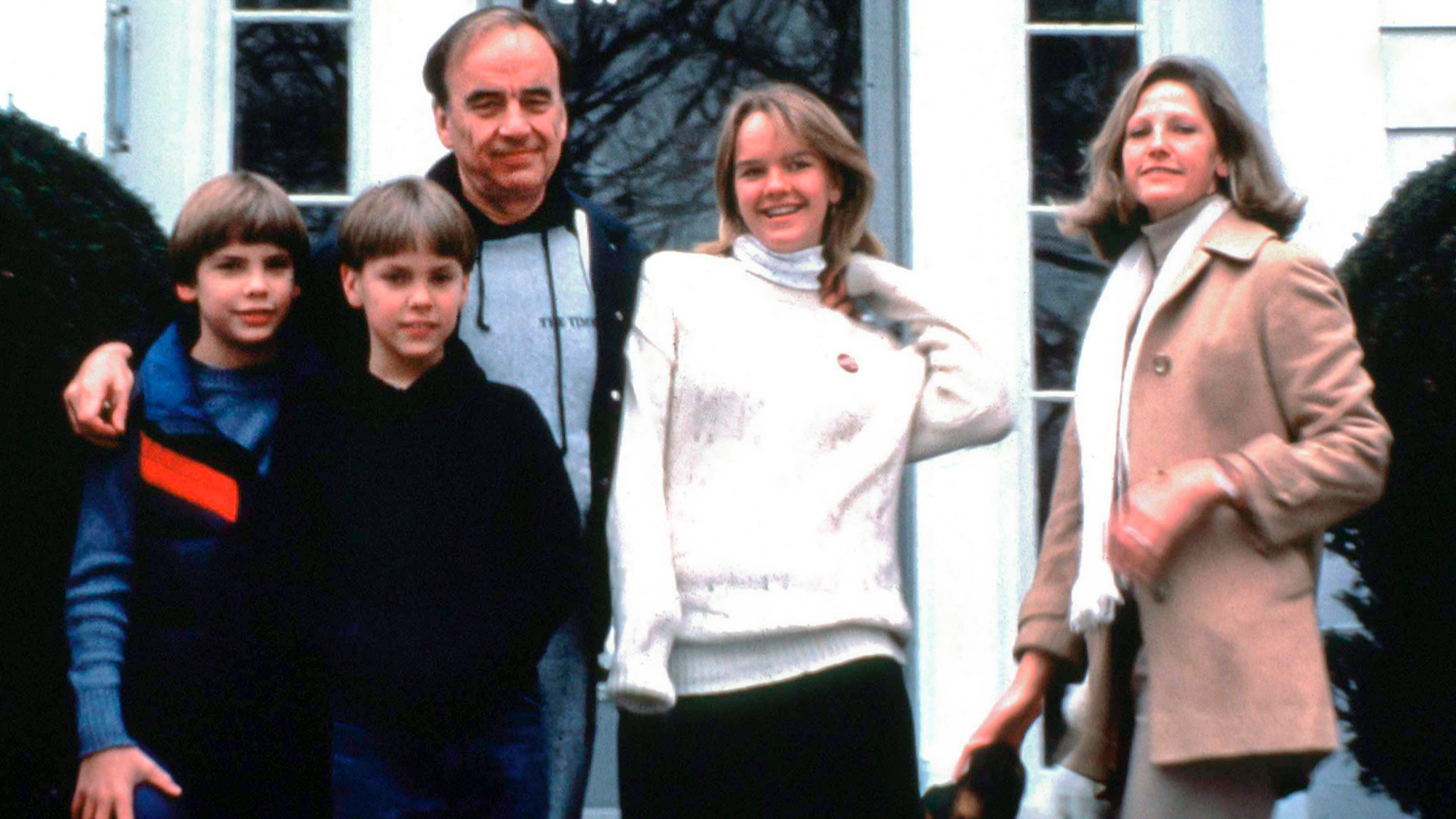

Book reviews: ‘Bonfire of the Murdochs’ and ‘The Typewriter and the Guillotine’

Book reviews: ‘Bonfire of the Murdochs’ and ‘The Typewriter and the Guillotine’Feature New insights into the Murdoch family’s turmoil and a renowned journalist’s time in pre-World War II Paris

-

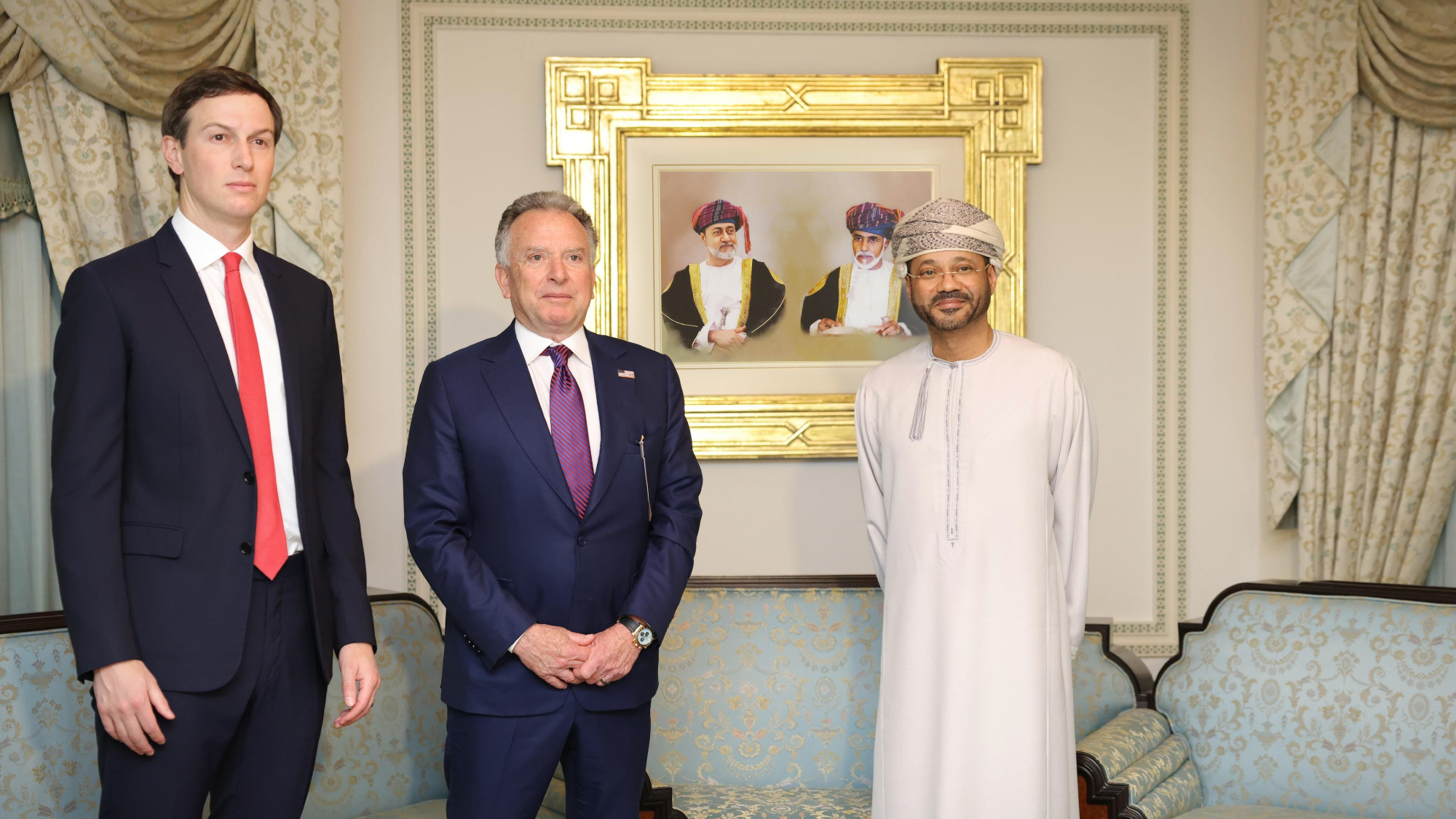

Witkoff and Kushner tackle Ukraine, Iran in Geneva

Witkoff and Kushner tackle Ukraine, Iran in GenevaSpeed Read Steve Witkoff and Jared Kushner held negotiations aimed at securing a nuclear deal with Iran and an end to Russia’s war in Ukraine

-

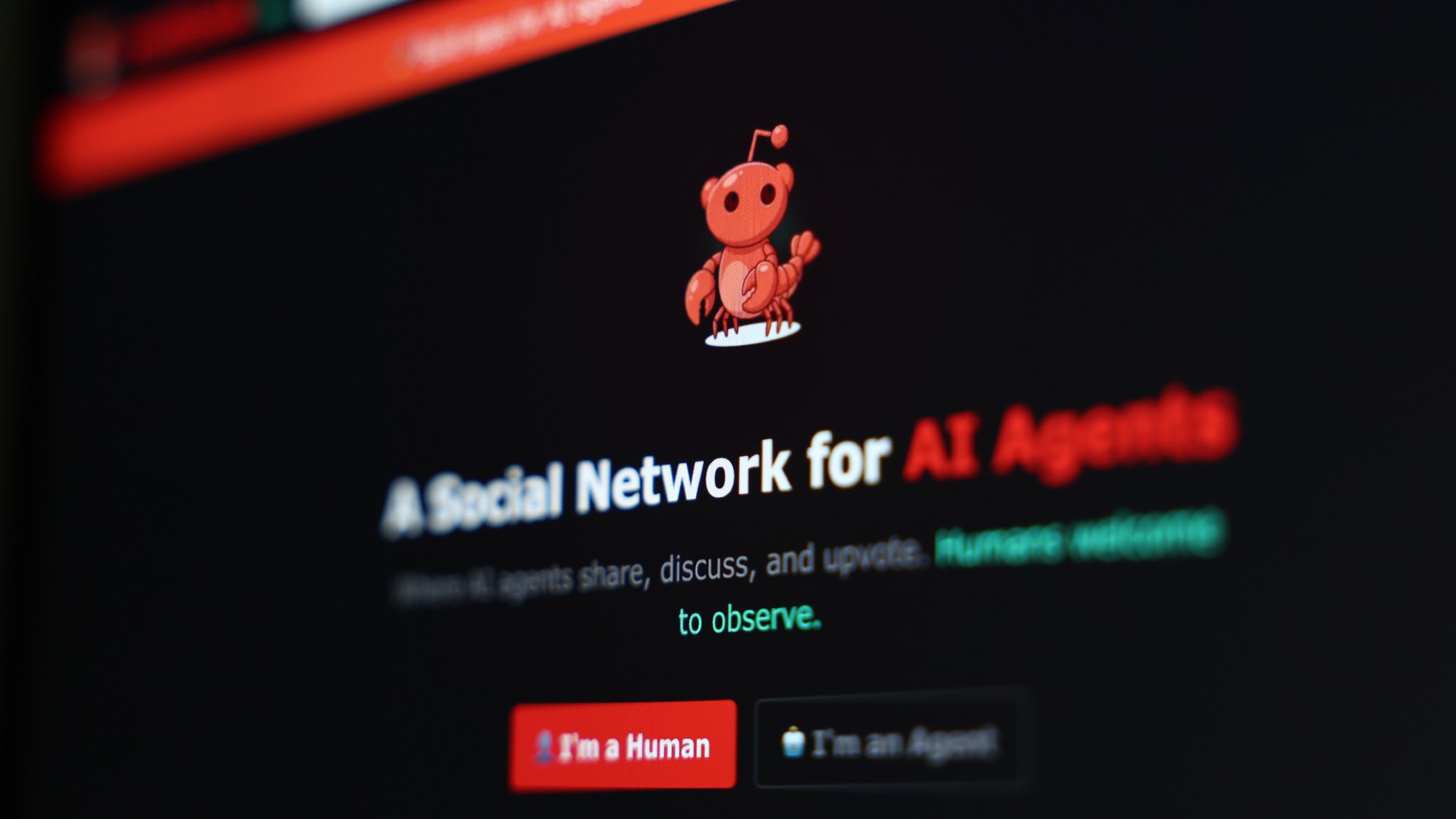

Moltbook: The AI-only social network

Moltbook: The AI-only social networkFeature Bots interact on Moltbook like humans use Reddit

-

Are AI bots conspiring against us?

Are AI bots conspiring against us?Talking Point Moltbook, the AI social network where humans are banned, may be the tip of the iceberg

-

Silicon Valley: Worker activism makes a comeback

Silicon Valley: Worker activism makes a comebackFeature The ICE shootings in Minneapolis horrified big tech workers

-

AI: Dr. ChatGPT will see you now

AI: Dr. ChatGPT will see you nowFeature AI can take notes—and give advice

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’