How are social networks tackling hate speech?

AI programmes and human content reviewers crack down on offensive comments online


Hate speech is not a new phenomenon for social network websites, but Saturday's far-right rally in Charlottesville, Virginia, that left one protestor dead has led to companies taking action against offensive groups online.

Here's how the biggest social media groups are responding to hate speech, as well as their plans to prevent offensive content from spreading:


Following the violent events in Charlottesville on Saturday, Engadget says Facebook has "shut down numerous hate groups in the wake of the attacks", including the "event page for the Unite the Right march that conducted the violence".

The social media giant uses a combination of artificial intelligence (AI) and human content reviewers to find hate speech, a process The Verge says removes "66,000 hate mail posts per week". But the tech site adds that Facebook "relies heavily" on users reporting content as offensive or hateful.

In May, Facebook hired an additional 3,000 people for its "team of content reviewers", says TechCrunch, bringing the total to 7,500. The move was triggered by several global "content moderation scandals", including the use of Facebook Live "to broadcast murder and suicide".

But Wired says there have been situations where the company's "algorithmic and human reviewers" have labelled comments or posts as offensive without considering the context. For instance, the website says some words "shouted as slurs" are sometimes "reclaimed" by groups "as a means of self-expression."


Twitter's hateful conduct policy says the service does not "allow accounts whose primary purpose is inciting harm towards others" on grounds such as race or sexual orientation.

The Independent reports that in recent months the site has introduced more systems and tools to detect and remove hate speech, and has improved the process by which users manually report offensive material.

But the social media site has been in a "fair bit of hot water in recent months regarding a perceived lack of action in the wake of perceived threats", says TechCrunch, leading to an activist spraying "hate language on the streets outside the company's Berlin headquarters."

In the wake of Saturday's clash, the tech news site says the account for the far-right group The Daily Stormer has been taken down, although "Trump's tweets that teeter on the edge of threatening nuclear war" appear to fall in line with the company's policy.

The chat forum Reddit has also cracked down on hate speech. Engadget says the website has "shut down numerous hate groups in the wake of the attacks".

Among the groups removed from the social media site was the subreddit /r/Physical_Removal, a page Engadget says "hoped that people in anti-hate subreddits and at CNN would be killed, supported concentration camps and even wrote poems about killing."

"We are very clear in our site terms of service that posting content that incites violence will get users banned from Reddit," Reddit told CNET.

While Google isn't exclusively a social network, the tech giant plays a key role in directing internet traffic and in determining which social apps users can access.

Since the clash in Charlottesville on Saturday, TechCrunch says the firm has removed the "conservative social network" Gab from its Play Store as it had become a "haven" for users banned from mainstream platforms.

Google says it does not support "content that promotes or condones violence against individuals or groups" based on certain criteria, adding that it depends "heavily upon users to let us know about content that may violate our policies".

But TechCrunch says "it's not clear what specifically Gab did that warranted its being kicked off the store", as the app is a chatroom and doesn't appear to actively promote hate speech. The website says "there's plenty of hate speech on Twitter and YouTube", but these are still available to download despite this week's "crackdown" on offensive content.

According to The Verge, Gab has "never been approved for placement on Apple's App Store."

What are others doing?

One notable case in the hate speech clampdown after Saturday's events is the web domain name retailer GoDaddy evicting The Daily Stormer's website from its service, says The Register.

The website says activists told the retailer that the group made "extraordinarily vulgar and disparaging remarks" about the victim of the Charlottesville attack, Heather Heyer. GoDaddy handed The Daily Stormer 24 hours "to move the domain to another provider".

YouTube is also expected to "institute stricter guidelines with regard to hate speech", reports TechCrunch. This could see more videos being removed after users mark them as offensive, even if there's nothing illegal in the content.

-

Film reviews: ‘Wuthering Heights,’ ‘Good Luck, Have Fun, Don’t Die,’ and ‘Sirat’

Film reviews: ‘Wuthering Heights,’ ‘Good Luck, Have Fun, Don’t Die,’ and ‘Sirat’Feature An inconvenient love torments a would-be couple, a gonzo time traveler seeks to save humanity from AI, and a father’s desperate search goes deeply sideways

-

Political cartoons for February 16

Political cartoons for February 16Cartoons Monday’s political cartoons include President's Day, a valentine from the Epstein files, and more

-

Regent Hong Kong: a tranquil haven with a prime waterfront spot

Regent Hong Kong: a tranquil haven with a prime waterfront spotThe Week Recommends The trendy hotel recently underwent an extensive two-year revamp

-

Are Big Tech firms the new tobacco companies?

Are Big Tech firms the new tobacco companies?Today’s Big Question A trial will determine whether Meta and YouTube designed addictive products

-

Is social media over?

Is social media over?Today’s Big Question We may look back on 2025 as the moment social media jumped the shark

-

Australia’s teen social media ban takes effect

Australia’s teen social media ban takes effectSpeed Read Kids under age 16 are now barred from platforms including YouTube, TikTok, Instagram, Facebook, Snapchat and Reddit

-

X update unveils foreign MAGA boosters

X update unveils foreign MAGA boostersSpeed Read The accounts were located in Russia and Nigeria, among other countries

-

Trump allies reportedly poised to buy TikTok

Trump allies reportedly poised to buy TikTokSpeed Read Under the deal, U.S. companies would own about 80% of the company

-

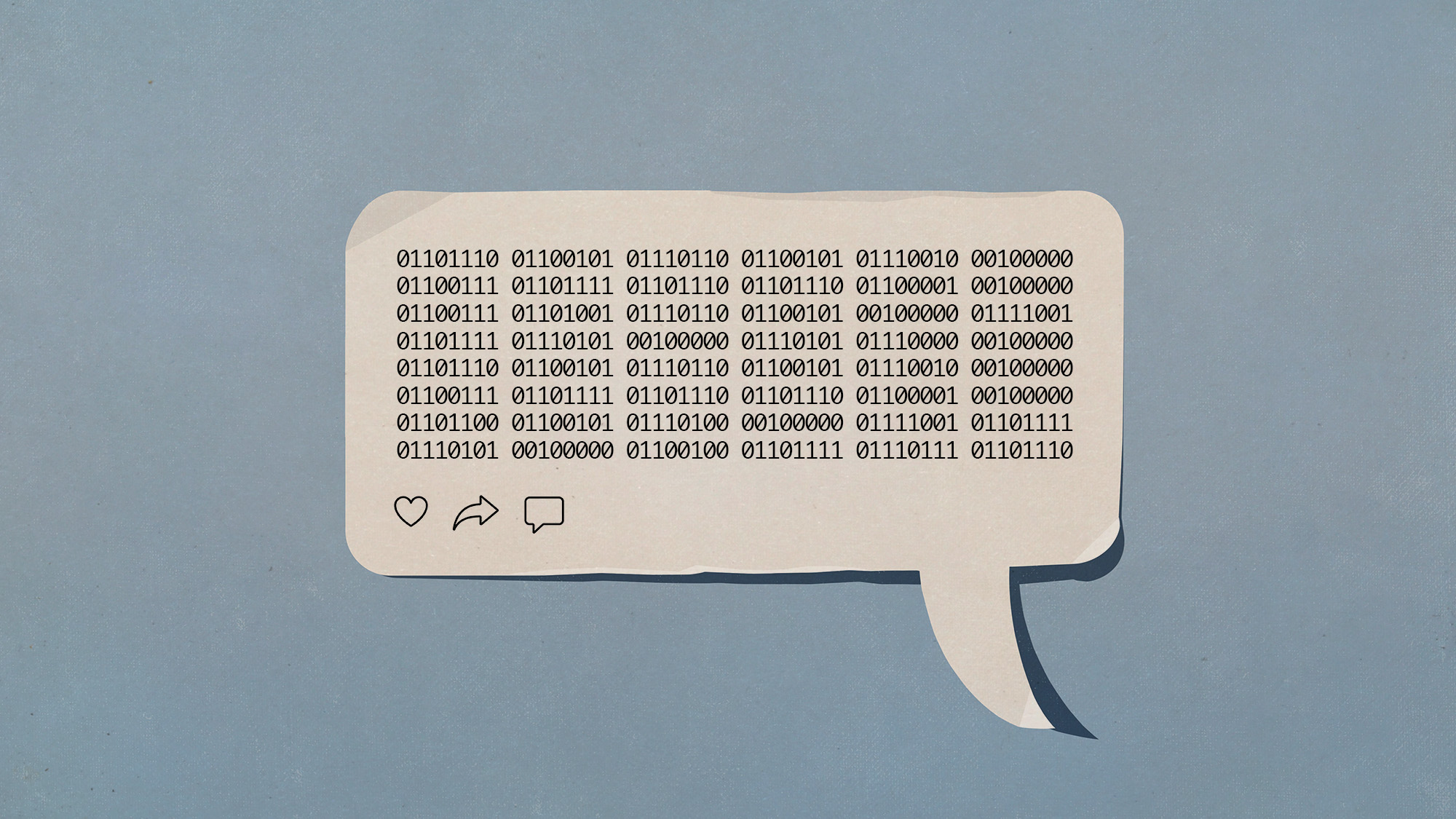

What an all-bot social network tells us about social media

What an all-bot social network tells us about social mediaUnder The Radar The experiment's findings 'didn't speak well of us'

-

Broken brains: The social price of digital life

Broken brains: The social price of digital lifeFeature A new study shows that smartphones and streaming services may be fueling a sharp decline in responsibility and reliability in adults

-

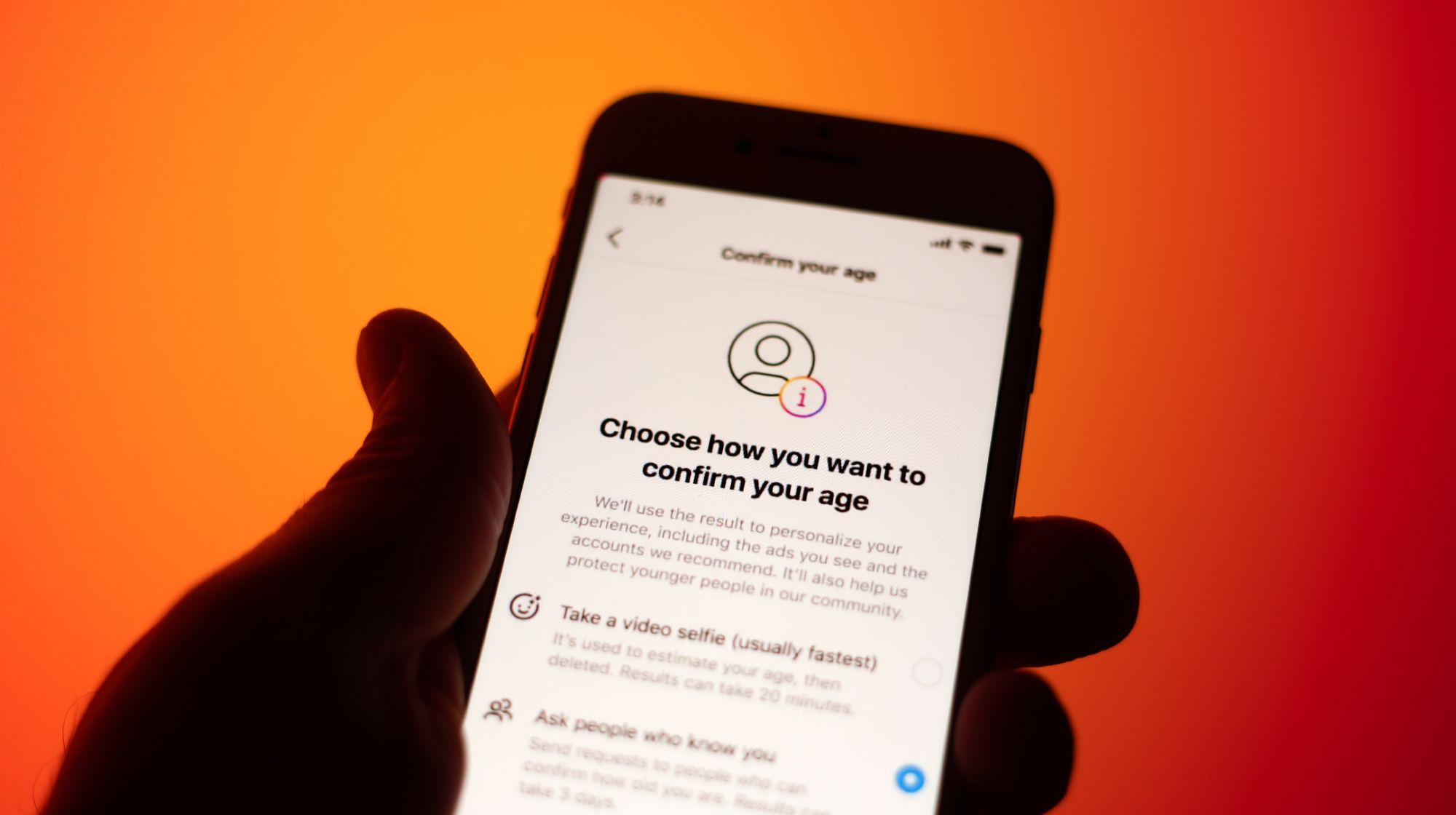

Supreme Court allows social media age check law

Supreme Court allows social media age check lawSpeed Read The court refused to intervene in a decision that affirmed a Mississippi law requiring social media users to verify their ages