Donald Trump, the Pope and the disruptive power of AI images

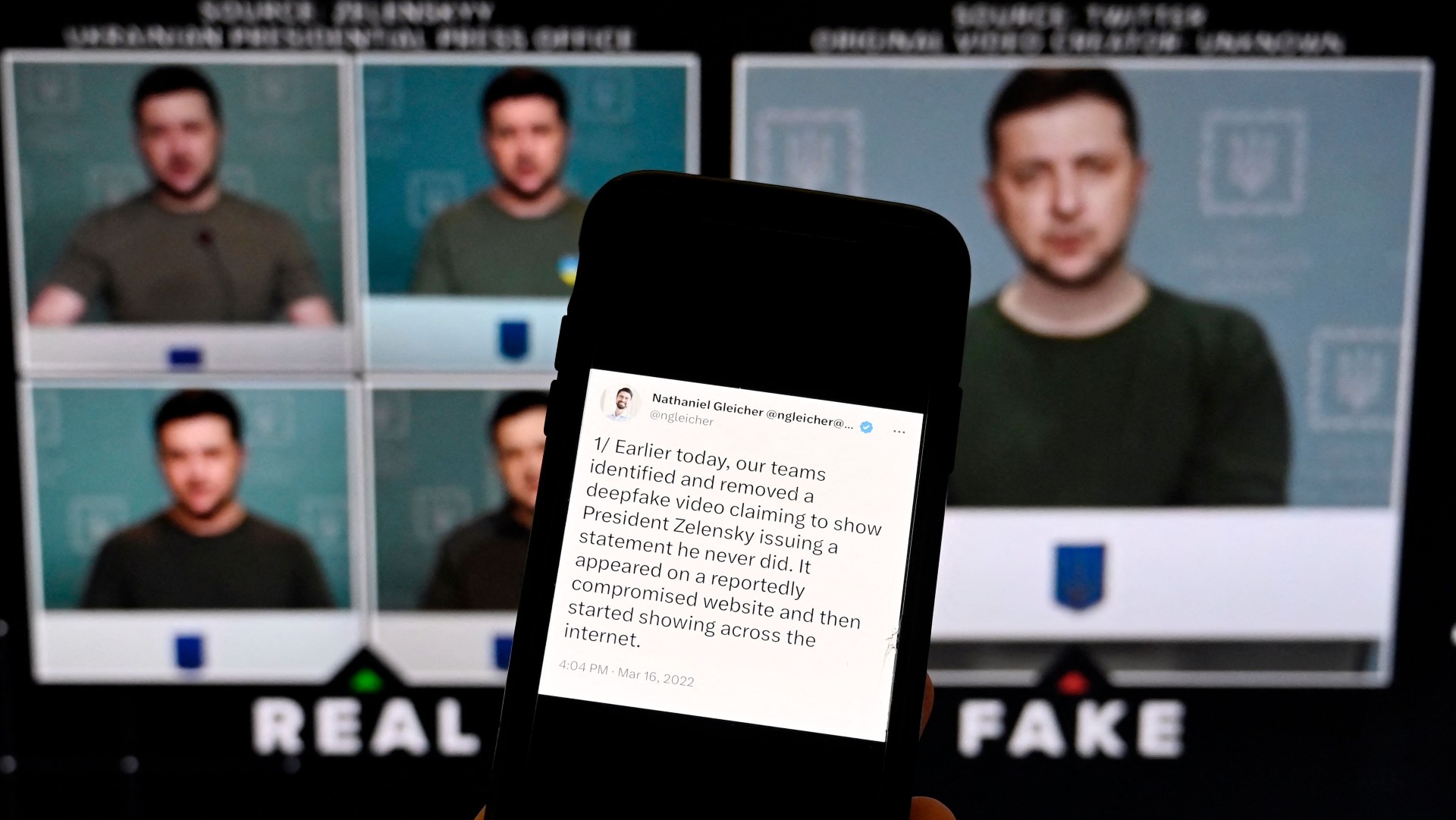

AI-generated deepfakes blur reality and could be used for political disinformation or personal blackmail


Deepfake images of Donald Trump being arrested and the Pope in a puffer jacket have sparked concern that we could be at a tipping point in being able to distinguish whether something posted online is real or not.

What happened?

Last week, as people across the US waited for the possible arrest of Donald Trump, Eliot Higgins, the founder of open-source investigations website Bellingcat, decided to generate his own images using the artificial intelligence (AI) programme Midjourney, which creates images from simple text prompts.

While Higgins made it clear the images were AI-generated, his posts went viral and were viewed nearly 5 million times in just two days.


In a case of life imitating art, the Independent reported that Trump “appears to be getting in on the fun of sharing AI photos of himself”. The former president responded to Higgins’ posts by sharing an AI-generated image of himself praying.

Just days later an AI-generated image of Pope Francis, wearing a large white puffer jacket, also went viral, racking up millions of views and even fooling misinformation experts.

What are the implications?

“Fears of AI fakery are not new,” said New Scientist. “For several years, we have faced the threat of deepfaked images of people’s faces, produced by earlier generations of AI trained on smaller volumes of information, but they have frequently had tell-tale signs of fakery, such as non-blinking eyes or blurred ears.”

The rapid development of AI technology to render images that look totally realistic means it could be “possible that in one or two years, people will not be able to tell a real image from a fake one – even when scrutinising it closely”, warned the i news site.


Last week appears to have been such an inflection point.

The Trump images have provided “a case study in the increasing sophistication of AI-generated images, the ease with which they can be deployed and their potential to create confusion in volatile news environments”, said The Washington Post.

Henry Ajder, an AI expert and presenter of the BBC radio series The Future Will Be Synthesised, told i news that the trend is concerning, especially if the technology is used for malicious purposes or gets into the wrong hands.

While there is concern that AI-generated deepfake images could be used to spread disinformation that distorts political processes or changes the narrative around the war in Ukraine, for example, they could also be used against everyday people who lack the resources to stop their spread, “to create fake, incriminating images to show people somewhere they shouldn’t be, or used as forms of bribery or humiliation”, said i news.

What can be done about it?

Ultimately, said New Scientist, “the rapid rise of AI means some disruption is inevitable”.

In recent years major technology companies have bolstered their policies against deepfakes. In 2019 Facebook parent company Meta banned users from posting highly manipulated videos “but left the door open for manipulated videos that are meant to be parody or satire”, said The Washington Post. Twitter also “introduced a new rule prohibiting users from sharing deceptive and manipulated media that may cause harm, such as tweets that could lead to violence, widespread civil unrest or threatening someone’s privacy”.

Yet as AI technology grows more sophisticated, social media companies have failed to keep up investment to detect and enforce these policies. There are some signs that the AI image generators themselves are starting to take action, with Midjourney and OpenAI, which also developed ChatGPT, working on safety restrictions on the prompts that people can enter to generate images.

However, The Washington Post said the Trump episode “makes evident the absence of corporate standards or government regulation addressing the use of AI to create and spread falsehoods”.

Dr Elinor Carmi, a lecturer in data politics and social justice at City University, London, agreed, saying the image of Pope Francis is “an example of a wider problem of technologies being pushed into our societies without any oversight, regulation or standards”.

Stressing the need for better media literacy about how easy it is to create and spread fake images, Carmi said: “Most of our society has been left behind, not understanding how these technologies work, for what purposes and what are the consequences of that.”

-

The environmental cost of GLP-1s

The environmental cost of GLP-1sThe explainer Producing the drugs is a dirty process

-

Greenland’s capital becomes ground zero for the country’s diplomatic straits

Greenland’s capital becomes ground zero for the country’s diplomatic straitsIN THE SPOTLIGHT A flurry of new consular activity in Nuuk shows how important Greenland has become to Europeans’ anxiety about American imperialism

-

‘This is something that happens all too often’

‘This is something that happens all too often’Instant Opinion Opinion, comment and editorials of the day

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Is social media over?

Is social media over?Today’s Big Question We may look back on 2025 as the moment social media jumped the shark

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’

-

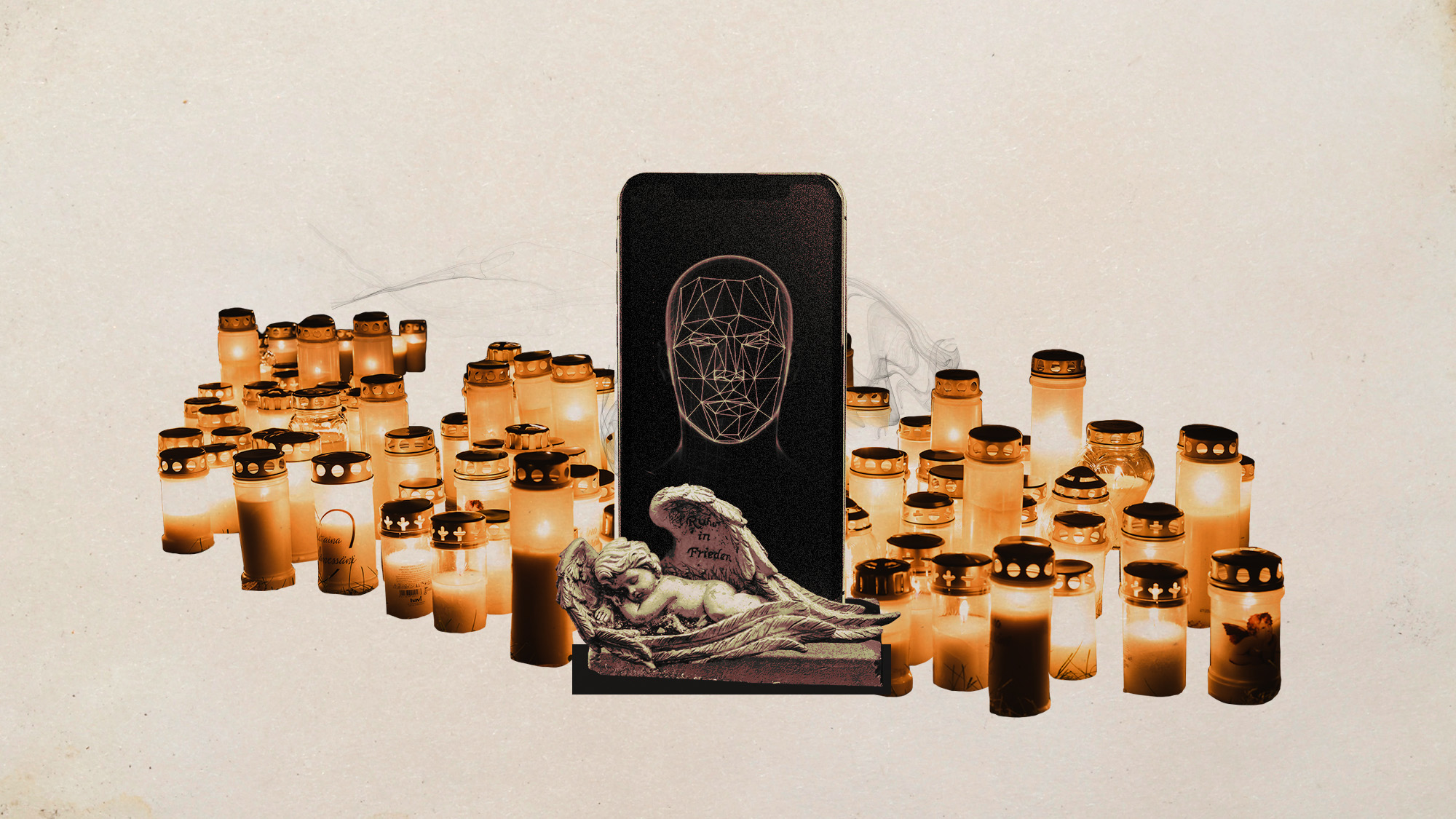

AI griefbots create a computerized afterlife

AI griefbots create a computerized afterlifeUnder the Radar Some say the machines help people mourn; others are skeptical

-

The robot revolution

The robot revolutionFeature Advances in tech and AI are producing android machine workers. What will that mean for humans?