Facebook put coronavirus misinformation warnings on about 40 million posts in March

Facebook has announced a new policy surrounding coronavirus misinformation on its platform after applying warning labels to tens of millions of posts last month alone.

The social media platform on Thursday said that in March, it put warning labels on roughly 40 million posts containing coronavirus misinformation based on ratings from fact-checkers. "When people saw those warning labels, 95 percent of the time they did not go on to view the original content," Facebook said. Additionally, Facebook disclosed that it removed "hundreds of thousands of pieces of misinformation that could lead to imminent physical harm," such as "harmful claims like drinking bleach cures the virus."

Going forward, Facebook will start showing messages in the Facebook feeds of users "who have liked, reacted or commented on harmful misinformation about COVID-19 that we have since removed." Users will be directed toward information about myths surrounding COVID-19 that have been debunked by the World Health Organization.

"We want to connect people who may have interacted with harmful misinformation about the virus with the truth from authoritative sources in case they see or hear these claims again off of Facebook," said Guy Rosen, Facebook's vice president of integrity.

An example screenshot shows a news feed where a user is encouraged to share a link to the WHO website with a list of common coronavirus rumors. A Facebook spokesperson told Axios the company is still testing different possible versions of what the notifications to users who engaged with misinformation could look like.

This announcement, Politico notes, comes after a campaign group said more than 40 percent of the coronavirus misinformation it found on Facebook remained on the platform even after being debunked. The new policy of informing users who have engaged with misinformation will take effect over the next few weeks.

Brendan worked as a culture writer at The Week from 2018 to 2023, covering the entertainment industry, including film reviews, television recaps, awards season, the box office, major movie franchises and Hollywood gossip. He has written about film and television for outlets including Bloody Disgusting, Showbiz Cheat Sheet, Heavy and The Celebrity Cafe.

-

The environmental cost of GLP-1s

The environmental cost of GLP-1sThe explainer Producing the drugs is a dirty process

-

Greenland’s capital becomes ground zero for the country’s diplomatic straits

Greenland’s capital becomes ground zero for the country’s diplomatic straitsIN THE SPOTLIGHT A flurry of new consular activity in Nuuk shows how important Greenland has become to Europeans’ anxiety about American imperialism

-

‘This is something that happens all too often’

‘This is something that happens all too often’Instant Opinion Opinion, comment and editorials of the day

-

Australia’s teen social media ban takes effect

Australia’s teen social media ban takes effectSpeed Read Kids under age 16 are now barred from platforms including YouTube, TikTok, Instagram, Facebook, Snapchat and Reddit

-

Google avoids the worst in antitrust ruling

Google avoids the worst in antitrust rulingSpeed Read A federal judge rejected the government's request to break up Google

-

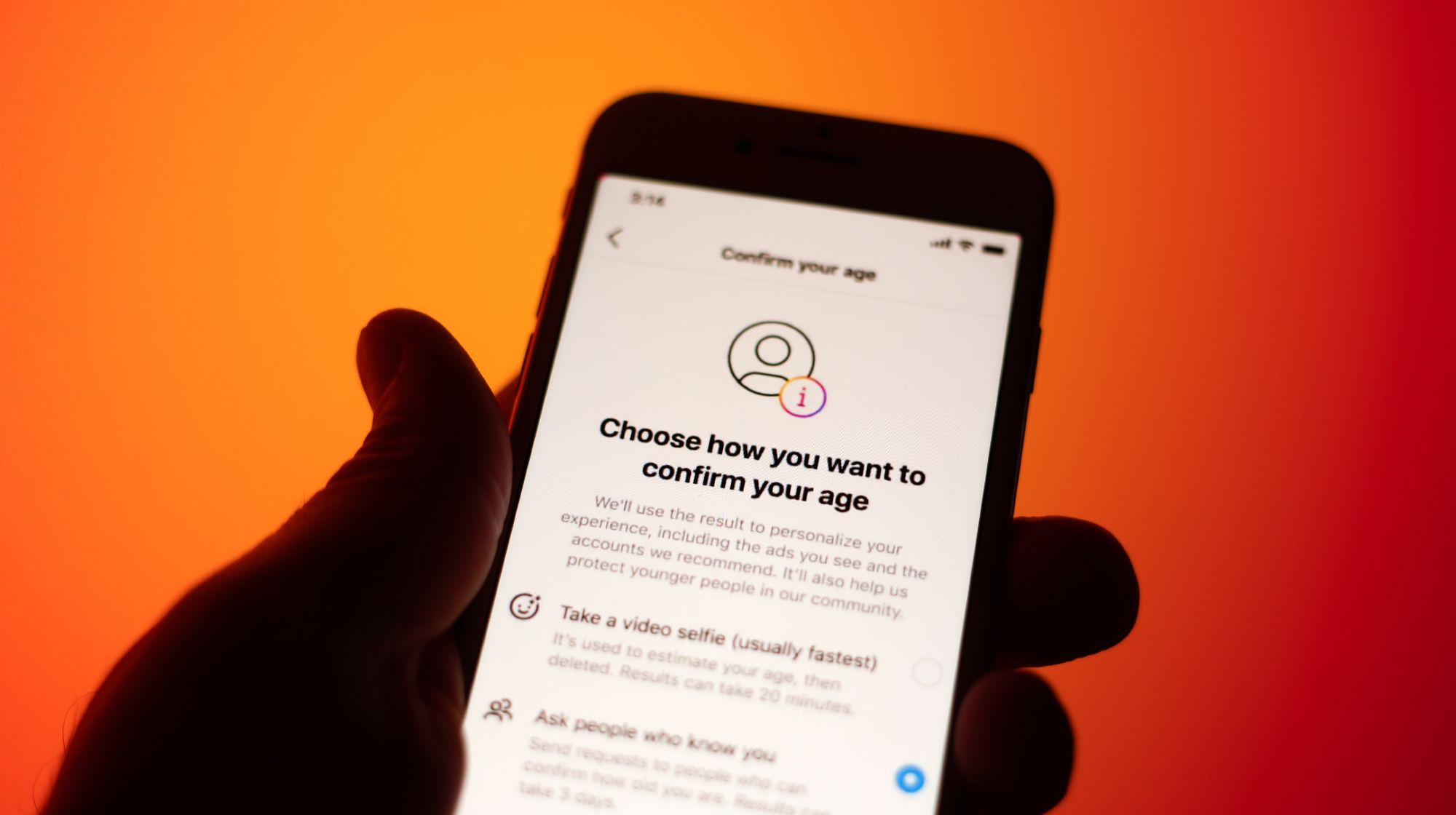

Supreme Court allows social media age check law

Supreme Court allows social media age check lawSpeed Read The court refused to intervene in a decision that affirmed a Mississippi law requiring social media users to verify their ages

-

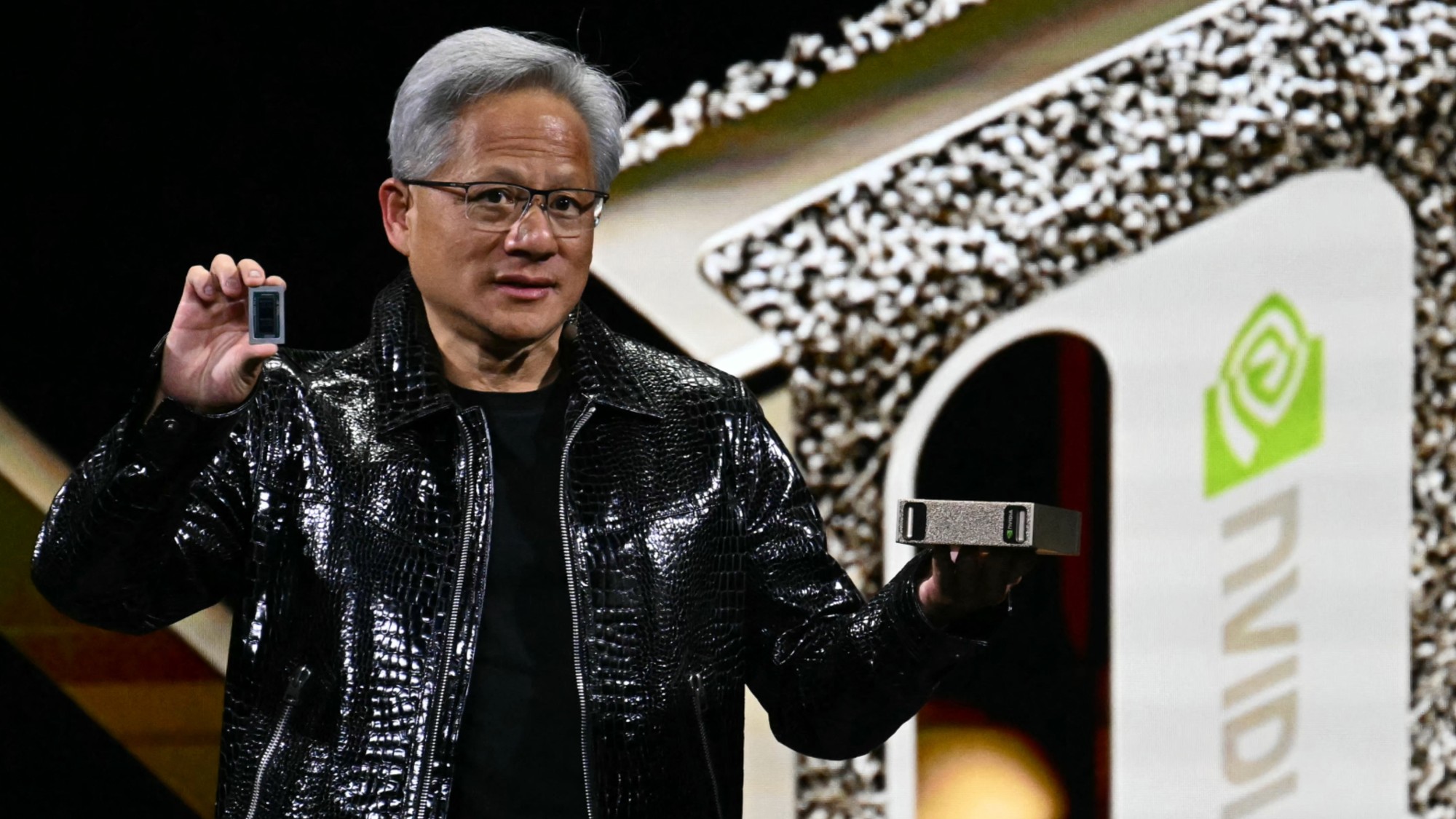

Nvidia hits $4 trillion milestone

Nvidia hits $4 trillion milestoneSpeed Read The success of the chipmaker has been buoyed by demand for artificial intelligence

-

X CEO Yaccarino quits after two years

X CEO Yaccarino quits after two yearsSpeed Read Elon Musk hired Linda Yaccarino to run X in 2023

-

Musk chatbot Grok praises Hitler on X

Musk chatbot Grok praises Hitler on XSpeed Read Grok made antisemitic comments and referred to itself as 'MechaHitler'

-

Disney, Universal sue AI firm over 'plagiarism'

Disney, Universal sue AI firm over 'plagiarism'Speed Read The studios say that Midjourney copied characters from their most famous franchises

-

Amazon launches 1st Kuiper internet satellites

Amazon launches 1st Kuiper internet satellitesSpeed Read The battle of billionaires continues in space