Facebook is broken. Let's all fix it together.

We can't blame this all on the social network. There's personal culpability in fake news gullibility, too.

How do you solve a problem like fake news?

That's the question Facebook is striving to answer as it faces mounting pressure from critics over its role (or lack thereof) in preventing misinformation during the 2016 U.S. presidential election. But the responsibility is not Facebook's alone. If the social network is broken, it's incumbent upon all of its users to help fix it.

The company has put together a new set of policies and standards for fighting fake news, determining what goes viral, and organizing its news feed — but CEO Mark Zuckerberg says he still wants to be careful to support free speech and "the right to be wrong." In an interview with Recode's Kara Swisher last week, Zuckerberg explained, "If we were taking down people's accounts when they got a few things wrong, that would be a hard world for giving people a voice." He suggested that Facebook should prevent hoaxes and maliciously incorrect information from going viral — but not remove misinformation altogether. (Zuckerberg famously sparked a flurry of facepalms for seeming to suggest that Holocaust deniers might not be willfully spreading misinformation. He later backtracked.)

Of course, plenty of critics are hopping mad at Facebook over its apparent unwillingness to do what is necessary to protect us from ourselves.

"We've seen what happens when Facebook builds a thing and gives people the power to use it in whatever ways they want, and it's really destructive," New York Times technology reporter Kevin Roose argued on a recent episode of The Daily. "So now we're asking Zuckerberg to accept the power he has and use it wisely, and it seems he's reluctant to do that. … You make it, you own it. … Whether he wants to or not, Zuckerberg has to solve this problem now, that he's created."

It's true that Zuckerberg could take a more aggressive approach to fake news. And it's equally true that he runs a company, not a country, and he's not necessarily required to follow constitutional principles in protecting free speech. But critics like Roose make it sound as if Facebook users are lost and vulnerable sheep, unable to protect themselves from harm without Zuckerberg's leadership. In this sense, they take initiative away from social media users and encourage an infantilized internet, one in which we treat ourselves as passive consumers rather than responsible users and sharers.

We all ought to know how to Google things and make sure they are real. We should be able to fact-check things before pushing the "share" button. And we should be responsible enough to point out erroneous links or stories to our friends, so that they are not misled by fake news.

As the internet grows in power and scope, we need to begin adapting our institutions and communities to police it better: to grow in prudence and knowledge, and educate those growing up with the internet to better protect themselves from its falsities and dangers. Yes, some of this might involve Facebook policing its site and fighting misinformation via moderators and censorship. But Facebook users can and should do a better job educating themselves regarding fake news and monitoring their online communities. We need to train up a generation that will exercise prudence and discretion online — not a generation of young people that expects Facebook to vet the news for them.

In 2016, a Stanford study found that middle school, high school, and college students struggled to determine the credibility of online sources, and were often fooled by fake news, sponsored content, and biased stories. As a result, many professors and teachers have begun advocating for "media literacy courses," which would help students navigate the internet with greater skill.

"As a university professor, when I frequently ask my students whether they've received any training or education in media literacy, all I get in response is a bunch of shrugs," professor and author Larry Atkins wrote for HuffPost last year. "My freshman students often cite obscure websites as sources in their papers and articles instead of authoritative government documents or respected news sources. I need to tell them to cite authoritative sources like MayoClinic.org and CDC.gov when discussing the legalization of medical marijuana, not 'Joe's Weed page.'"

This isn't just about teaching students how to write credible papers, however. Knowing which sources to cite is important, but it doesn't teach students how to sift through their news feeds and read judiciously. Young people need to know how to cross-check questionable material with solid sources, so that they're able to pinpoint a scam or hoax when they see one. Just because we're presented with something phony doesn't mean we have to believe and share it. Let's teach ourselves to be better.

It would also be laudable (if potentially controversial) to teach students how to read "real" news (that is, political stories that are not fake but are still often volatile) with both eyes open. In our highly partisan era, too few readers can tell the difference between editorializing and hard news, and too few are willing to wrestle with political opinions on the other side of the aisle. To bolster our civic discourse, such skills are increasingly necessary. Helping students read and digest various political opinions could foster a healthy political literacy and a deeper sense of empathy, both of which we need in our online and offline interactions.

Of course, young people are not the only social media users who need an education in smart news consumption. Often, those propagating fake news are older Americans, part of a generation that did not grow up with the internet and is less versed in navigating its truths and falsehoods. According to a study conducted by Team LEWIS last September, 42 percent of millennials will validate the accuracy of a news story they read online — whereas only 25 percent of baby boomers and 19 percent of Gen Xers will do the same. How do we teach these people to identify fake news?

Facebook's determination to prioritize friends' content and local news on its news feeds will likely have a positive impact. But it (and other social media sites like it) could also help organize ad campaigns that encourage users to cross-check their sources and alert their friends to false news stories. Not only would these efforts ease the burden on Facebook's moderators, they could also foster a salutary sense of responsibility and ownership among site users. Facebook could create a digital version of the "if you see something, say something" slogan that's been used throughout many U.S. airports and metro systems post-9/11. Often, grassroots efforts at rooting out falsehood are more palatable than top-down regulations or censorship — and they may even result in more lasting attitude changes amongst consumers.

The internet has gotten increasingly complex — and perhaps as a result, we've become increasingly lazy in dealing with its chaotic glut of information. But laziness and apathy are a dangerous business when it comes to online news. Sure, Facebook can help keep us safe from misinformation. But ultimately, finding out the truth is our responsibility.

Gracy Olmstead is a writer and journalist located outside Washington, D.C. She's written for The American Conservative, National Review, The Federalist, and The Washington Times, among others.

-

Switzerland could vote to cap its population

Switzerland could vote to cap its populationUnder the Radar Swiss People’s Party proposes referendum on radical anti-immigration measure to limit residents to 10 million

-

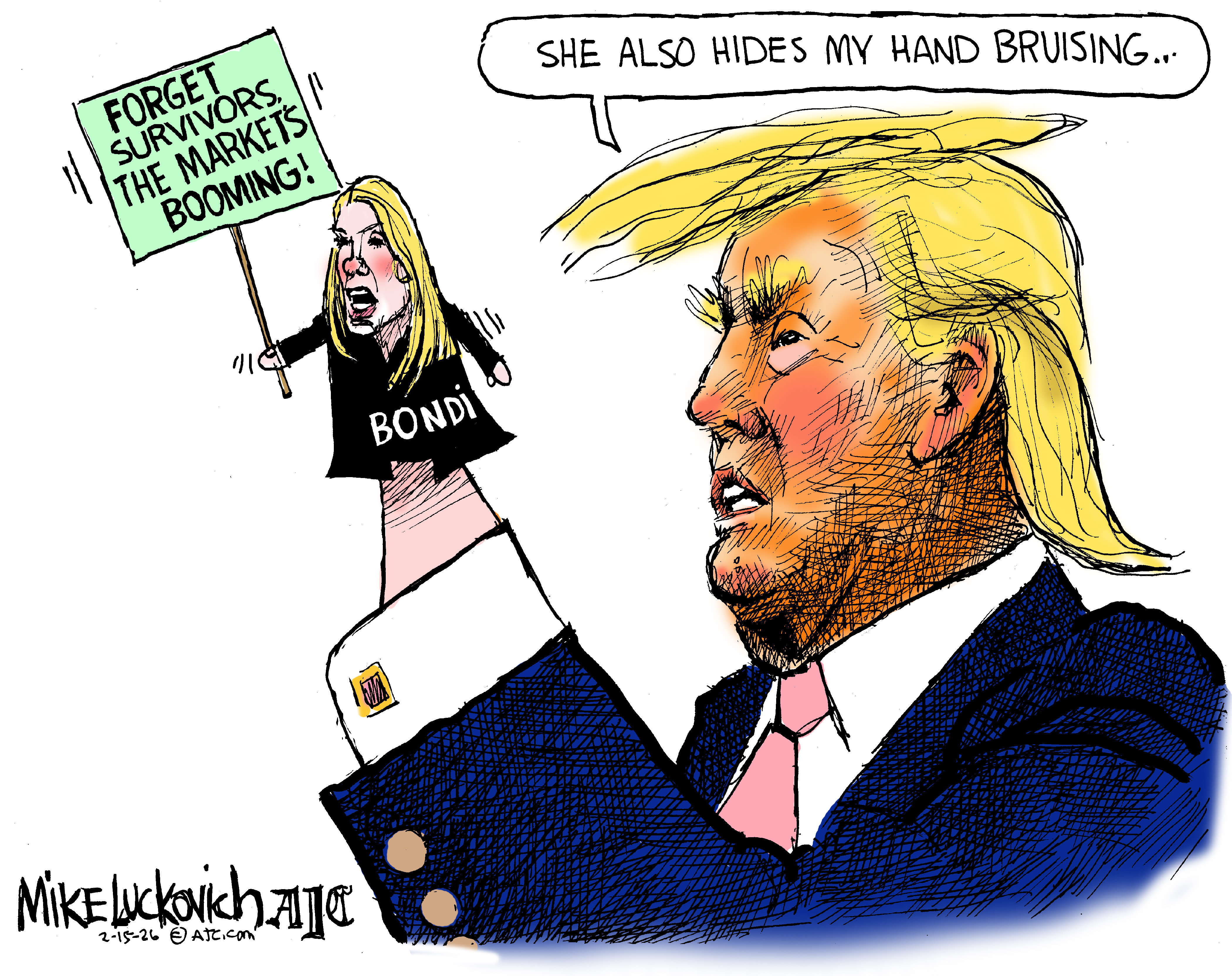

Political cartoons for February 15

Political cartoons for February 15Cartoons Sunday's political cartoons include political ventriloquism, Europe in the middle, and more

-

The broken water companies failing England and Wales

The broken water companies failing England and WalesExplainer With rising bills, deteriorating river health and a lack of investment, regulators face an uphill battle to stabilise the industry