Experts call for AI pause over risk to humanity

Open letter says powerful new systems should only be developed once it is known they are safe

Tech leaders and experts including Elon Musk, Apple co-founder Steve Wozniak and engineers from Google, Amazon and Microsoft have called for a six-month pause in the development of artificial intelligence systems to allow time to make sure they are safe.

“AI systems with human-competitive intelligence can pose profound risks to society and humanity,” said the open letter titled Pause Giant AI Experiments.

“Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable,” it said.

“We call on all AI labs to immediately pause for at least six months the training of AI systems more powerful than GPT-4,” it added.

The letter also said that in recent months AI labs have been “locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one – not even their creators – can understand, predict, or reliably control”.

“The warning comes after the release earlier this month of GPT-4… an AI program developed by OpenAI with backing from Microsoft,” said Deutsche Welle (DW). The latest iteration from the makers of ChatGPT has “wowed users by engaging them in human-like conversation, composing songs and summarising lengthy documents”, added Reuters.

The open letter has been signed by “major AI players”, according to The Guardian, including Musk, who co-founded OpenAI, Emad Mostaque, who founded London-based Stability AI, and Wozniak.

Engineers from Amazon, DeepMind, Google, Meta and Microsoft also signed it, but among those who have not yet put their names to it are OpenAI CEO Sam Altman, Alphabet CEO Sundar Pichai and Microsoft CEO Satya Nadella.

The letter “feels like the next step of sorts” from a 2022 survey of over 700 machine learning researchers, said Engadget. That survey found that “nearly half of participants stated there’s a 10 percent chance of an ‘extremely bad outcome’ from AI, including human extinction”.

But the letter has also attracted criticism. Johanna Björklund, an AI researcher and associate professor at Umeå University in Sweden, told DW: “I don’t think there’s a need to pull the handbrake.” She called for more transparency rather than a pause.

Jamie Timson is the UK news editor, curating The Week UK's daily morning newsletter and setting the agenda for the day's news output. He was first a member of the team from 2015 to 2019, progressing from intern to senior staff writer, and then rejoined in September 2022. As a founding panellist on “The Week Unwrapped” podcast, he has discussed politics, foreign affairs and conspiracy theories, sometimes separately, sometimes all at once. In between working at The Week, Jamie was a senior press officer at the Department for Transport, with a penchant for crisis communications, working on Brexit, the response to Covid-19 and HS2, among others.

-

Switzerland could vote to cap its population

Switzerland could vote to cap its populationUnder the Radar Swiss People’s Party proposes referendum on radical anti-immigration measure to limit residents to 10 million

-

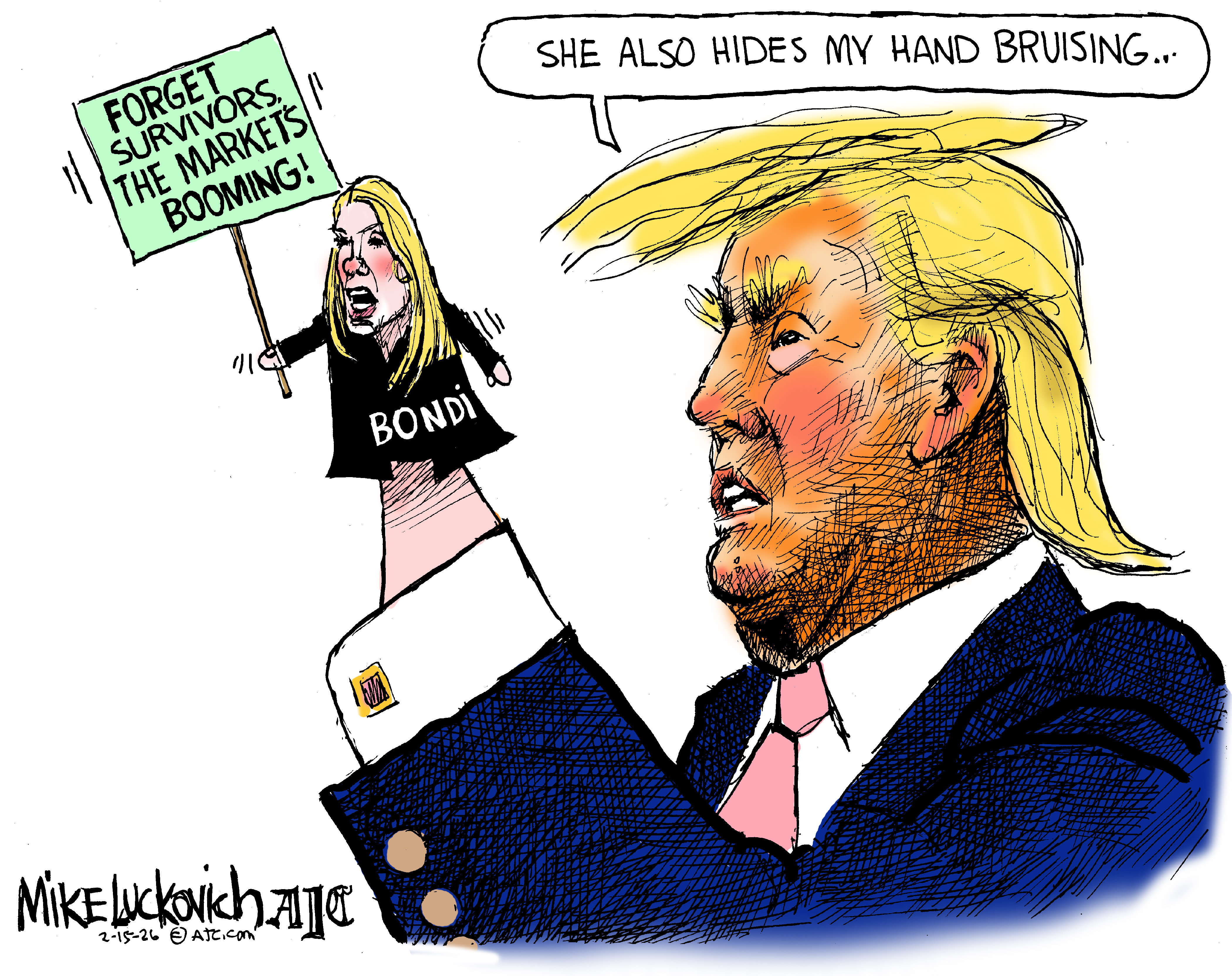

Political cartoons for February 15

Political cartoons for February 15Cartoons Sunday's political cartoons include political ventriloquism, Europe in the middle, and more

-

The broken water companies failing England and Wales

The broken water companies failing England and WalesExplainer With rising bills, deteriorating river health and a lack of investment, regulators face an uphill battle to stabilise the industry

-

Are AI bots conspiring against us?

Are AI bots conspiring against us?Talking Point Moltbook, the AI social network where humans are banned, may be the tip of the iceberg

-

Elon Musk’s pivot from Mars to the moon

Elon Musk’s pivot from Mars to the moonIn the Spotlight SpaceX shifts focus with IPO approaching

-

Moltbook: the AI social media platform with no humans allowed

Moltbook: the AI social media platform with no humans allowedThe Explainer From ‘gripes’ about human programmers to creating new religions, the new AI-only network could bring us closer to the point of ‘singularity’

-

Claude Code: Anthropic’s wildly popular AI coding app

Claude Code: Anthropic’s wildly popular AI coding appThe Explainer Engineers and noncoders alike are helping the app go viral

-

Will regulators put a stop to Grok’s deepfake porn images of real people?

Will regulators put a stop to Grok’s deepfake porn images of real people?Today’s Big Question Users command AI chatbot to undress pictures of women and children

-

Most data centers are being built in the wrong climate

Most data centers are being built in the wrong climateThe explainer Data centers require substantial water and energy. But certain locations are more strained than others, mainly due to rising temperatures.

-

The dark side of how kids are using AI

The dark side of how kids are using AIUnder the Radar Chatbots have become places where children ‘talk about violence, explore romantic or sexual roleplay, and seek advice when no adult is watching’

-

Why 2025 was a pivotal year for AI

Why 2025 was a pivotal year for AITalking Point The ‘hype’ and ‘hopes’ around artificial intelligence are ‘like nothing the world has seen before’